Low Latency: The Ultimate Guide for Real-Time Applications (2025)

Introduction to Low Latency

Low latency refers to the minimal delay between a user's action and a computer system's response. In technical terms, it is the short time interval required for a data packet to travel from source to destination and back again. Low latency is crucial for modern applications demanding real-time communication and responsiveness. From high-frequency financial trading to live streaming and IoT, industries are increasingly dependent on low latency to deliver seamless user experiences. As we move further into 2025, the need for ultra-responsive networks and systems continues to shape the architecture of digital solutions across gaming, autonomous vehicles, video conferencing, and beyond.

Understanding Latency and Low Latency

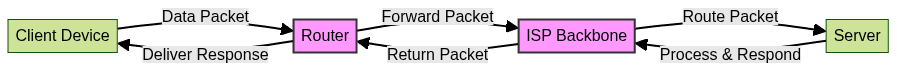

Latency in networks describes the time it takes for data to move from source to destination. It is most often reported as round-trip time (RTT), the time to reach the destination and come back, and is generally measured in milliseconds (ms) or microseconds (µs). It can significantly affect application performance. Low latency is the optimized state where this delay is minimized, enabling near-instantaneous data exchanges.

Each hop introduces potential network latency due to switching, routing, and transmission delays. Achieving low latency requires optimizing each segment of this path. For developers building real-time communication tools, leveraging a robust Video Calling API can help minimize these delays and improve user experience.

Why Low Latency Matters

User experience is the most immediate beneficiary of low latency. In fast-paced online gaming, even a few milliseconds of delay can determine outcomes. Video conferencing relies on real-time communication, where low latency ensures seamless, natural conversations. For live streaming and streaming analytics, minimal delay is vital to delivering up-to-date content and insights.

For those developing cross-platform communication apps, technologies like Flutter WebRTC and WebRTC for Android are essential for achieving low latency in both mobile and web environments.

In financial sectors, particularly high-frequency trading, low latency provides a competitive edge, enabling firms to execute trades faster than their rivals. IoT devices and autonomous vehicles depend on low latency networks for timely data transmission, crucial for safety and efficiency. In all these cases, latency directly impacts user engagement, satisfaction, and business success.

A business that can guarantee lower latency than competitors gains a measurable advantage, whether in customer retention, transaction speed, or overall application performance.

How is Latency Measured?

Latency is typically measured in milliseconds or, for ultra low latency applications, in microseconds. Several tools and methods are commonly used:

- Ping: Sends ICMP packets to a target and measures the round-trip time.

- Traceroute: Maps the path and latency across network hops.

- RTT (Round-Trip Time): The total time for a signal to go from source to destination and back.

- TTFB (Time to First Byte): Measures the time until the first byte of data is received from a server.

- RUM (Real User Monitoring): Gathers latency data from actual users’ sessions.
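ICMP ping is sometimes blocked by firewalls, so a common fallback is to time a TCP connection instead. The sketch below is one way to approximate RTT with the standard library only. The function names `tcp_connect_ms` and `sample_rtt` are illustrative, not part of any SDK mentioned in this guide.

```python
import socket
import time

def tcp_connect_ms(host, port=443, timeout=3.0):
    """Return the time in milliseconds taken to open a TCP connection."""
    start = time.perf_counter()
    # create_connection performs the TCP three-way handshake. It returns once
    # the SYN-ACK has arrived, so the elapsed time is roughly one round trip
    # (plus DNS resolution when host is a name rather than an IP address).
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000

def sample_rtt(host, port=443, samples=5):
    """Collect several connect-time samples and return them as a list of ms."""
    return [tcp_connect_ms(host, port) for _ in range(samples)]
```

Calling `sample_rtt("example.com")` would return five measurements. Taking several samples matters because a single reading can be skewed by a momentary queue or a cold DNS cache.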

For developers working with different programming languages, using a Python video and audio calling SDK or a JavaScript video and audio calling SDK can provide built-in tools and APIs to help monitor and optimize latency in real-time communication applications.

Below is a basic code snippet for measuring network latency using ping in Python:

```python
import platform
import subprocess
import sys

def ping(host, count=4):
    """Run the system ping command and return its raw text output."""
    # Windows uses -n for the packet count; Unix-like systems use -c.
    count_flag = "-n" if platform.system() == "Windows" else "-c"
    command = ["ping", count_flag, str(count), host]
    result = subprocess.run(command, capture_output=True, text=True)
    return result.stdout

if __name__ == "__main__":
    host = sys.argv[1] if len(sys.argv) > 1 else "google.com"
    print(ping(host))
```

Benchmarking is context-specific: a latency below 20 ms may be necessary for online gaming, while a few hundred milliseconds could be acceptable for cloud data lake analytics. Understanding these thresholds is essential for meaningful latency measurement.
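To make thresholds like these actionable, it helps to compare a percentile of your samples against a budget rather than a single reading. Here is a minimal sketch using the nearest-rank percentile method. The `BUDGETS_MS` table is an assumption for illustration: 20 ms comes from the gaming example above, and 300 ms is a stand-in for "a few hundred milliseconds".

```python
import math

# Illustrative budgets in milliseconds. The 20 ms gaming figure comes from the
# text above; 300 ms for analytics is an assumed stand-in for "a few hundred".
BUDGETS_MS = {"online_gaming": 20.0, "data_lake_analytics": 300.0}

def percentile(samples, pct):
    """Nearest-rank percentile: smallest sample with at least pct% at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def meets_budget(samples, use_case, pct=95):
    """True if the chosen percentile of the samples is within the budget."""
    return percentile(samples, pct) <= BUDGETS_MS[use_case]

# Made-up samples: mostly fast, with one slow outlier.
samples_ms = [12.1, 14.8, 13.5, 40.2, 12.9, 15.0, 13.3, 14.1, 12.7, 13.8]
print("p50:", percentile(samples_ms, 50))
print("p95:", percentile(samples_ms, 95))
print("gaming budget met:", meets_budget(samples_ms, "online_gaming"))
```

In this made-up data the median looks healthy, but the single 40.2 ms outlier pushes the 95th percentile over the gaming budget. That is why tail percentiles are often a better benchmark than averages.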

Achieving Low Latency: Key Factors and Technologies

Attaining low latency requires a multi-faceted approach:

- Network Infrastructure: Fiber optics offer faster signal transmission than copper cables, while 5G networks reduce wireless transmission delays. High-performance hardware, such as low-latency NICs (network interface cards), further minimizes delays.

- Routing and Protocols: Efficient routing protocols like BGP and OSPF, combined with advanced traffic engineering, help reduce unnecessary hops and optimize data paths.

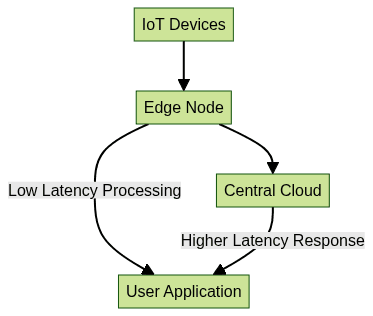

- Edge Computing and Data Management: Processing data closer to the source with edge computing shortens the distance data must travel and eases network congestion, enabling real-time analytics and action.

- Streaming-First Architectures: Systems designed to process and transmit data in streams, rather than batches, drastically cut down time-to-first-byte and end-to-end latency.
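The streaming-first point can be illustrated with a small simulation. The sketch below uses Python generators and a simulated source (`read_records` with an artificial delay, both hypothetical) to compare how long each style takes to produce its first result.

```python
import time

def read_records(n, delay_s=0.01):
    """Simulated source: yields one record at a time, each taking delay_s to arrive."""
    for i in range(n):
        time.sleep(delay_s)
        yield i

def process_batch(source):
    """Batch style: wait for every record, then process them all."""
    records = list(source)
    return [r * 2 for r in records]

def process_stream(source):
    """Streaming style: process and emit each record as soon as it arrives."""
    for r in source:
        yield r * 2

def time_to_first_result(make_results):
    """Return (seconds until the first processed record is available, that record)."""
    start = time.perf_counter()
    results = make_results()
    first = next(iter(results))
    return time.perf_counter() - start, first

batch_s, _ = time_to_first_result(lambda: process_batch(read_records(20)))
stream_s, _ = time_to_first_result(lambda: process_stream(read_records(20)))
print(f"batch: {batch_s * 1000:.0f} ms, stream: {stream_s * 1000:.0f} ms")
```

The batch version cannot return anything until all 20 records have arrived, while the streaming version emits its first result after a single record. Total work is the same; only the time to first result changes.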

To further enhance real-time communication, integrating a Voice SDK can provide low-latency audio experiences, while an embed video calling SDK allows you to quickly add high-performance video features to your applications.

Edge nodes process data locally, sending only necessary results to the central cloud, which reduces latency for latency-sensitive applications.
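As a rough illustration of that idea, the sketch below summarizes raw sensor readings on the edge node and compares the payload sizes. The function `summarize_at_edge`, the sample readings, and the alert threshold are all hypothetical.

```python
import json
import statistics

def summarize_at_edge(readings, alert_threshold):
    """Reduce raw readings to a compact summary plus any out-of-range values."""
    return {
        "count": len(readings),
        "mean": round(statistics.fmean(readings), 2),
        "max": max(readings),
        "alerts": [r for r in readings if r > alert_threshold],
    }

# Made-up temperature readings with one anomaly injected.
raw = [21.0 + (i % 10) * 0.1 for i in range(1000)]
raw[500] = 95.0

summary = summarize_at_edge(raw, alert_threshold=80.0)
raw_bytes = len(json.dumps(raw))
summary_bytes = len(json.dumps(summary))
print(f"raw: {raw_bytes} bytes, summary: {summary_bytes} bytes")
```

Only the summary and the anomalous reading need to cross the network, so the central cloud receives far fewer bytes and the alert can be acted on locally without waiting for a round trip.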

Code Implementation: Measuring and Reducing Latency

You can measure and optimize latency directly in your code. Below is an example Python script that measures HTTP request latency, repeats the measurement several times, and keeps the lowest value to reduce the impact of transient delays:

```python
import time

import requests

def measure_latency(url, timeout=5):
    """Return (elapsed milliseconds, HTTP status code) for one GET request."""
    start = time.perf_counter()  # monotonic clock, suited to timing intervals
    response = requests.get(url, timeout=timeout)
    latency = (time.perf_counter() - start) * 1000  # milliseconds
    return latency, response.status_code

def get_best_latency(url, retries=3):
    """Repeat the measurement and keep the lowest latency observed."""
    min_latency = float("inf")
    for _ in range(retries):
        latency, _status = measure_latency(url)
        if latency < min_latency:
            min_latency = latency
    return min_latency

if __name__ == "__main__":
    url = "https://example.com"
    print(f"Best latency: {get_best_latency(url):.2f} ms")
```

If you're building mobile or cross-platform apps, leveraging a React Native video and audio calling SDK can help you deliver low-latency video and audio experiences across devices.

Tips for reducing latency:

- Place servers closer to users (CDNs, edge nodes)

- Optimize routing tables and DNS

- Minimize processing overhead in your application logic

- Use efficient serialization formats (like Protocol Buffers over JSON)
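To see why the serialization tip matters, here is a minimal size comparison. It uses Python's built-in `struct` module as a stand-in for a compact binary format, since Protocol Buffers needs a schema file and an external package. The record fields and the binary layout are assumptions made for this example.

```python
import json
import struct

# Hypothetical telemetry record used only for this comparison.
record = {"sensor_id": 1042, "timestamp": 1735689600,
          "temperature": 21.5, "humidity": 40.25}

json_payload = json.dumps(record).encode("utf-8")

# Fixed binary layout: unsigned 32-bit id, unsigned 64-bit timestamp,
# two 32-bit floats. "<" means little-endian with no padding (20 bytes total).
LAYOUT = struct.Struct("<IQff")
binary_payload = LAYOUT.pack(record["sensor_id"], record["timestamp"],
                             record["temperature"], record["humidity"])

def decode(payload):
    """Rebuild the record dictionary from the fixed binary layout."""
    sensor_id, timestamp, temperature, humidity = LAYOUT.unpack(payload)
    return {"sensor_id": sensor_id, "timestamp": timestamp,
            "temperature": temperature, "humidity": humidity}

print(f"JSON: {len(json_payload)} bytes, binary: {len(binary_payload)} bytes")
```

The binary form drops field names and text encoding of numbers, so less data goes over the wire and less parsing is needed. The trade-off is that both sides must agree on the layout in advance, which is the job a schema does in formats like Protocol Buffers.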

Challenges and Bottlenecks to Low Latency

Achieving consistently low latency is challenging.

- Network Congestion: High traffic leads to queuing and increased delays.

- Physical Distance: The farther data must travel, the higher the base latency, because no signal can travel faster than the speed of light.

- Server/Resource Availability: Overloaded servers introduce processing bottlenecks.

- Security and Processing Overhead: Encryption, decryption, and deep packet inspection add measurable delays.
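The physical distance limit can be estimated with simple arithmetic. The sketch below computes a lower bound on RTT over optical fiber, assuming a refractive index of about 1.47 (a commonly quoted approximation for silica fiber) and a straight-line path. Real routes are longer and add switching and queuing delays on top.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum
FIBER_REFRACTIVE_INDEX = 1.47      # assumed typical value for silica fiber

def min_rtt_ms(distance_km, refractive_index=FIBER_REFRACTIVE_INDEX):
    """Lower bound on round-trip time in ms from propagation delay alone."""
    speed_km_s = SPEED_OF_LIGHT_KM_S / refractive_index
    one_way_s = distance_km / speed_km_s
    return 2 * one_way_s * 1000

# 6000 km is a hypothetical distance, roughly transatlantic in scale.
print(f"Minimum RTT over 6000 km of fiber: {min_rtt_ms(6000):.1f} ms")
```

Under these assumptions a 6000 km link cannot do better than roughly 59 ms round trip, no matter how fast the servers are. This is why moving servers closer to users is often the most effective single change.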

Addressing these requires proactive network optimization, infrastructure investment, and smart architectural choices.

Ultra Low Latency: Pushing the Boundaries

Ultra low latency is typically defined as end-to-end delays below 1 millisecond, although the exact threshold varies by application. Such stringent benchmarks are required in specialized sectors like high-frequency trading, where algorithms compete for nanosecond advantages, and in emerging fields such as telemedicine and remote robotics. In 2025, achieving ultra low latency often involves proprietary hardware, direct fiber connections, co-location, and custom network protocols, all pushing the boundaries of what is technologically possible.

Best Practices for Designing Low Latency Systems

- Infrastructure Optimization: Use the fastest available network links (fiber, 5G), and minimize the number of hops between endpoints

- Monitoring and Continuous Improvement: Leverage tools like RUM, synthetic monitoring, and automated latency benchmarks to detect and address issues proactively

- Application-Level Optimizations: Reduce payload sizes, adopt streaming-first data flows, and design for concurrency to avoid processing bottlenecks
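The "design for concurrency" advice can be sketched with `asyncio`. In the example below, `fetch` is a stand-in for any I/O-bound call (the names and delays are invented), and the two helpers compare awaiting calls one after another with running them together.

```python
import asyncio
import time

async def fetch(name, delay_s):
    """Stand-in for an I/O-bound call such as a database or HTTP request."""
    await asyncio.sleep(delay_s)
    return name

async def sequential(delays):
    """Await each call in turn; total time is about the sum of the delays."""
    return [await fetch(name, d) for name, d in delays.items()]

async def concurrent(delays):
    """Start all calls together; total time is about the longest delay."""
    return await asyncio.gather(*(fetch(name, d) for name, d in delays.items()))

def timed(coro_fn, delays):
    """Run one of the strategies and return (elapsed seconds, results)."""
    start = time.perf_counter()
    result = asyncio.run(coro_fn(delays))
    return time.perf_counter() - start, list(result)

delays = {"profile": 0.05, "orders": 0.08, "recommendations": 0.06}
seq_s, seq_result = timed(sequential, delays)
con_s, con_result = timed(concurrent, delays)
print(f"sequential: {seq_s * 1000:.0f} ms, concurrent: {con_s * 1000:.0f} ms")
```

Both versions return the same results in the same order, but the concurrent one finishes in about the time of the slowest call. This only helps when the calls are independent and I/O-bound; CPU-heavy work needs a different approach.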

Iterative improvement and relentless measurement are key. In 2025, successful low latency systems are those that adapt in real time to changing network and application conditions.

Conclusion

Low latency is not just a technical metric—it's a competitive differentiator in 2025. Whether you're building real-time applications, streaming platforms, or trading systems, mastering latency measurement and optimization is essential. As technology evolves, so too will the strategies for achieving ever-lower latency, enabling the next generation of responsive, real-time digital experiences.


FAQ