Introduction to HLS Low Latency

HLS low latency is revolutionizing real-time streaming in 2025, providing developers and broadcasters with the tools to minimize delays and deliver near-instantaneous video experiences. Low latency is crucial in live streaming applications where every second counts: think live sports, auctions, gaming, and interactive broadcasts. Traditional HTTP Live Streaming (HLS) often incurred delays of 18-30 seconds, but with advances like Low-Latency HLS (LL-HLS), glass-to-glass delay can now drop to a few seconds. In this post, we’ll explore the evolution of the HLS protocol, key mechanisms behind LL-HLS, configuration tips, real-world workflows, and comparisons with alternative streaming protocols. By the end, you’ll have a comprehensive understanding of how to implement and optimize HLS low latency solutions in your streaming workflow.

What is HLS Low Latency?

Evolution of the HLS Protocol

Apple introduced the HLS protocol in 2009, making adaptive bitrate streaming accessible over standard HTTP infrastructure. Classic HLS was reliable but suffered from high latency due to large segment sizes and playlist update intervals. This meant viewers could experience delays upwards of 30 seconds—unacceptable for interactive or real-time events.

Low-Latency HLS (LL-HLS) emerged to address these issues, debuting as a draft in 2019 and maturing through 2025. LL-HLS introduces innovations like partial segments, blocking playlist reloads, and additional playlist request parameters (_HLS_msn, _HLS_part). The result is dramatically reduced live streaming latency, often as low as 2–5 seconds, while retaining the scalability and reliability of traditional HLS.

Use Cases for Low Latency HLS

LL-HLS unlocks new possibilities for live streaming:

- Live sports: Fans see events nearly in real-time, reducing spoilers and improving engagement.

- Auctions & betting: Ensures fairness and competitiveness with minimal lag.

- Second screen experiences: Enables synchronized interactivity (e.g., polls, trivia, live chats).

- Interactive events: Real-time Q&A, auctions, and live shopping benefit from lower glass-to-glass latency.

For developers building interactive features like live video chat, integrating a Video Calling API can further enhance audience engagement alongside low-latency streaming.

How Does Low Latency HLS Work?

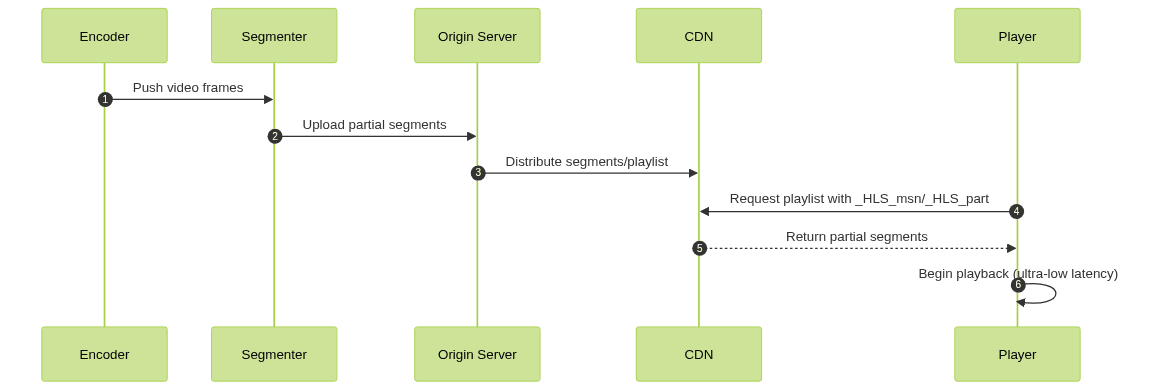

Key Mechanisms in LL-HLS

LL-HLS achieves low latency through several technical advancements:

- Blocking Playlist Reload: Clients use playlist requests that "wait" until new media is available, reducing polling frequency and enabling quicker segment delivery.

- Partial Segments: Segments are divided into smaller parts, allowing playback to begin before a full segment is written.

- Playlist Parameters: Parameters like _HLS_msn (Media Sequence Number) and _HLS_part (Partial Segment Number) enable clients to request exactly what they need.
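To make partial segments concrete, here is a minimal sketch that pulls part entries out of a media playlist. It assumes parts are advertised with `#EXT-X-PART` tags carrying `DURATION`, `URI`, and an optional `INDEPENDENT` attribute, as in LL-HLS playlists. The function name `parseParts` and the sample file names are hypothetical, and a production parser would need to handle many more tags.

```javascript
// Sketch: extract partial segments (#EXT-X-PART) from an LL-HLS media playlist.
// parseParts and the sample URIs are illustrative names, not part of any library.
function parseParts(playlistText) {
  const prefix = '#EXT-X-PART:';
  const parts = [];
  for (const line of playlistText.split(/\r?\n/)) {
    if (!line.startsWith(prefix)) continue; // skips #EXT-X-PART-INF and everything else
    const attrs = {};
    const attrPattern = /([A-Z0-9-]+)=("[^"]*"|[^,]*)/g;
    let match;
    while ((match = attrPattern.exec(line.slice(prefix.length))) !== null) {
      attrs[match[1]] = match[2].replace(/^"|"$/g, ''); // strip surrounding quotes
    }
    parts.push({
      uri: attrs.URI,
      duration: Number(attrs.DURATION),
      independent: attrs.INDEPENDENT === 'YES', // part starts with a keyframe
    });
  }
  return parts;
}

const samplePlaylist = [
  '#EXTM3U',
  '#EXT-X-PART-INF:PART-TARGET=0.333',
  '#EXT-X-PART:DURATION=0.333,URI="seg123.part0.m4s",INDEPENDENT=YES',
  '#EXT-X-PART:DURATION=0.333,URI="seg123.part1.m4s"',
].join('\n');

const sampleParts = parseParts(samplePlaylist);
console.log(sampleParts.map((p) => p.uri));
```

A player can start decoding from any part marked independent, which is why playback can begin before the full segment exists.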

If you're building real-time communication apps on Android, exploring WebRTC for Android can help you achieve sub-second latency and interactive features that complement LL-HLS streaming.

Blocking Playlist Reload Example

    GET /stream/playlist.m3u8?_HLS_msn=123&_HLS_part=3&_HLS_skip=YES HTTP/1.1
    Host: example.com
    Accept: application/vnd.apple.mpegurl

This request asks the server to hold the response until the playlist contains part 3 of media sequence 123, and to return a delta update (_HLS_skip=YES) where supported, minimizing latency.
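A client typically builds this request URL from the last media sequence number and part it has seen. The following is a minimal sketch of that step, assuming the standard `URL` API is available (browsers and Node.js). The helper name `buildBlockingReloadUrl` and its option names are hypothetical.

```javascript
// Sketch: build a blocking playlist reload URL from _HLS_msn / _HLS_part / _HLS_skip.
// buildBlockingReloadUrl is an illustrative helper, not part of hls.js or any SDK.
function buildBlockingReloadUrl(playlistUrl, { msn, part, skip = false }) {
  const url = new URL(playlistUrl);
  url.searchParams.set('_HLS_msn', String(msn));
  if (part !== undefined) {
    url.searchParams.set('_HLS_part', String(part)); // only meaningful together with _HLS_msn
  }
  if (skip) {
    url.searchParams.set('_HLS_skip', 'YES'); // ask for a playlist delta update
  }
  return url.toString();
}

const reloadUrl = buildBlockingReloadUrl(
  'https://example.com/stream/playlist.m3u8',
  { msn: 123, part: 3, skip: true }
);
console.log(reloadUrl);
// → https://example.com/stream/playlist.m3u8?_HLS_msn=123&_HLS_part=3&_HLS_skip=YES
```

Omitting `part` produces a request that blocks until the whole segment with that media sequence number is available.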

Protocol Extensions & Compatibility

Apple’s LL-HLS specification pairs naturally with CMAF (Common Media Application Format), whose fragmented MP4 chunks map directly onto partial segments and provide standardized segmenting. Low-latency deployments often use segments of 1–2 seconds, and partial segments (parts) may be 200–400 ms for ultra-low latency.

- CMAF: Preferred for chunked, low-latency workflows, but TS (Transport Stream) segments are still supported for legacy setups.

- Player and Server Support: Modern HLS players (hls.js, Video.js, Safari native) and servers (AWS Elemental, Oryx, Wowza) support LL-HLS extensions, but compatibility varies across devices and browsers.
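The segment and part durations above translate into a rough lower bound on how far behind the live edge a player sits. The sketch below assumes a player holds back about three target durations in classic HLS and about three part-target durations in LL-HLS, which is our reading of the recommended hold-back distances in the HLS specification. The function names are hypothetical, and real glass-to-glass latency also includes encoding, packaging, and CDN delay.

```javascript
// Sketch: back-of-the-envelope hold-back arithmetic for classic HLS vs LL-HLS.
// The multiplier of 3 is an assumption based on the recommended hold-back distances.
const HOLD_BACK_MULTIPLIER = 3;

// Classic HLS: distance from the live edge, in seconds, for a given segment target duration.
function classicHoldBack(targetDurationSec) {
  return HOLD_BACK_MULTIPLIER * targetDurationSec;
}

// LL-HLS: distance from the live edge, in seconds, for a given part target duration.
function lowLatencyHoldBack(partTargetSec) {
  return HOLD_BACK_MULTIPLIER * partTargetSec;
}

// Number of parts that make up one full segment.
function partsPerSegment(segmentSec, partSec) {
  return Math.round(segmentSec / partSec);
}

console.log(classicHoldBack(6));                   // 6 s segments -> 18 s behind live
console.log(lowLatencyHoldBack(0.333).toFixed(2)); // 333 ms parts -> about 1 s behind live
console.log(partsPerSegment(2, 0.333));            // 2 s segment -> 6 parts
```

The 18-second figure for 6-second segments lines up with the lower end of the classic HLS delays quoted earlier.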

If you're developing cross-platform video apps, check out Flutter WebRTC for building real-time communication features in Flutter that can work seamlessly with low-latency streaming.

Configuring a Low Latency HLS Workflow

Encoder and Segmenter Settings

The foundation of any HLS low latency setup is the encoder and segmenter. Optimal settings are crucial:

- GOP (Group of Pictures): Set the GOP duration so that your segment duration is an exact multiple of it (e.g., a 2-second GOP for 2-second segments), so every segment starts on a keyframe.

- Profile: Use main or baseline for maximum compatibility.

- Tune: Use zerolatency or similar encoder flags to minimize buffer bloat.
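The GOP rule is easy to get wrong when frame rates change, so here is a small sketch of the arithmetic. It assumes a constant frame rate. Both helper names are hypothetical.

```javascript
// Sketch: derive the keyframe interval in frames and check GOP/segment alignment.
// gopFrames and segmentAlignsWithGop are illustrative helpers.

// Keyframe interval in frames for a given frame rate and GOP duration.
function gopFrames(fps, gopSeconds) {
  return Math.round(fps * gopSeconds);
}

// True when the segment duration is a whole multiple (1x, 2x, ...) of the GOP duration,
// so that every segment can start on a keyframe.
function segmentAlignsWithGop(segmentSeconds, gopSeconds) {
  const ratio = segmentSeconds / gopSeconds;
  const nearest = Math.round(ratio);
  return nearest >= 1 && Math.abs(ratio - nearest) < 1e-6;
}

console.log(gopFrames(24, 2));            // 48 frames for a 2 s GOP at 24 fps
console.log(gopFrames(30, 2));            // 60 frames for a 2 s GOP at 30 fps
console.log(segmentAlignsWithGop(2, 2));  // true
console.log(segmentAlignsWithGop(2, 4));  // false: a 4 s GOP cannot start every 2 s segment
```

The first result is the value you would pass as the keyframe interval for a 24 fps source with 2-second segments.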

For developers working with web-based video chat, integrating a JavaScript video and audio calling SDK can help add interactive features to your streaming workflow.

Sample FFmpeg Configuration

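    ffmpeg \
      -i input.mp4 \
      -c:v libx264 \
      -preset veryfast \
      -tune zerolatency \
      -g 48 -keyint_min 48 \
      -sc_threshold 0 \
      -hls_time 2 \
      -hls_flags +independent_segments+split_by_time \
      -hls_playlist_type event \
      -master_pl_name master.m3u8 \
      output_%v.m3u8

This example assumes a 24 fps source, so -g 48 gives a 2-second GOP that matches -hls_time 2. Stock FFmpeg's HLS muxer does not, to our knowledge, expose a part-size option, so partial segments (for example, parts of about 333 ms) need to come from an LL-HLS-aware packager or origin downstream.

For OBS, set the keyframe interval to match segment duration and enable any available low-latency options.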

Server and CDN Optimization

- Origin Servers: Use LL-HLS-enabled solutions like AWS Elemental MediaPackage, Oryx, or Wowza Streaming Engine.

- CDN Settings: Adjust cache rules to allow rapid playlist updates. Set low Time-To-Live (TTL) for playlist files (2-4 seconds) and longer TTL for segments.

- Buffer Management: Configure edge servers to handle partial segment delivery efficiently and avoid unnecessary rebuffering.
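The cache rule above can be expressed as a small function that an origin or edge worker might apply per request. This is a sketch under stated assumptions: the 2-second playlist TTL is taken from the range suggested above, the 1-hour segment TTL is a hypothetical stand-in for "longer", and `cacheControlFor` is an illustrative name, not any CDN's API.

```javascript
// Sketch: choose a Cache-Control header by file type (short TTL for playlists, long for media).
const PLAYLIST_TTL_SECONDS = 2;    // within the 2-4 s range suggested above
const SEGMENT_TTL_SECONDS = 3600;  // hypothetical "longer" TTL for segments and parts

function cacheControlFor(requestPath) {
  // Ignore the query string so blocking-reload requests are still treated as playlists.
  const pathOnly = requestPath.split('?')[0].toLowerCase();
  const isPlaylist = pathOnly.endsWith('.m3u8');
  const ttl = isPlaylist ? PLAYLIST_TTL_SECONDS : SEGMENT_TTL_SECONDS;
  return `public, max-age=${ttl}`;
}

console.log(cacheControlFor('/stream/playlist.m3u8?_HLS_msn=123&_HLS_part=3')); // public, max-age=2
console.log(cacheControlFor('/stream/seg123.part0.m4s'));                      // public, max-age=3600
```

Because blocking reload requests differ only in their query string, the CDN cache key should include `_HLS_msn` and `_HLS_part` so that clients waiting on different parts do not receive each other's responses.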

For those building mobile streaming apps, leveraging a React Native video and audio calling SDK can help you add real-time communication to your low-latency video workflows.

Player Settings and Best Practices

- hls.js / Video.js: Ensure the latest player versions with LL-HLS enabled. Configure buffer lengths (e.g., 2–3 seconds) and playback start policies for minimal delay.

- Apple HLS (Safari, iOS): Native support for LL-HLS; tune preferredForwardBufferDuration on AVPlayerItem for ultra-low latency.

If you want to try these features in your own projects, try it for free and experiment with low-latency streaming and real-time APIs.

Example hls.js Initialization

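    // Assumes hls.js is loaded and the page contains a <video> element.
    const videoElement = document.querySelector('video');

    const hls = new Hls({
      lowLatencyMode: true,          // enable LL-HLS part loading
      maxLiveSyncPlaybackRate: 1.0,  // 1.0 disables catch-up playback speed-ups
      maxBufferLength: 3,            // target forward buffer, in seconds
    });
    hls.loadSource('https://example.com/stream/playlist.m3u8');
    hls.attachMedia(videoElement);

- Best Practices: Always test across devices, monitor latency in production, and balance buffer size for rebuffering protection.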
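For the "monitor latency in production" advice, a lightweight check like the one below can feed a dashboard or alert. It is a sketch that assumes the player object exposes numeric `latency` and `targetLatency` properties in seconds. hls.js documents getters with these names, but verify them against the version you ship. The function name `checkLatency` and the 1-second tolerance are hypothetical.

```javascript
// Sketch: classify how far playback has drifted from its latency target.
// `player` is any object with numeric `latency` and `targetLatency` (seconds).
function checkLatency(player, toleranceSec = 1) {
  const { latency, targetLatency } = player;
  if (!Number.isFinite(latency) || !Number.isFinite(targetLatency)) {
    // Values are not available yet (for example, before the first live playlist loads).
    return { status: 'unknown', behindBySec: null };
  }
  const behindBySec = latency - targetLatency;
  return {
    status: behindBySec > toleranceSec ? 'drifting' : 'ok',
    behindBySec,
  };
}

console.log(checkLatency({ latency: 3.0, targetLatency: 2.5 })); // { status: 'ok', behindBySec: 0.5 }
console.log(checkLatency({ latency: 6.0, targetLatency: 2.5 })); // { status: 'drifting', behindBySec: 3.5 }
```

In a browser you might call this on an interval with the `hls` instance and report the result alongside rebuffering counts, so the buffer-versus-latency trade-off is visible in one place.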

Challenges and Trade-Offs

Quality vs Latency

Reducing latency often means reducing forward buffer size, increasing the risk of playback interruptions during network fluctuations. Developers must balance smooth playback with the desire for real-time delivery, especially over unpredictable mobile or WiFi networks.

If you need to add interactive video chat to your live streaming workflow, consider using a Video Calling API that integrates seamlessly with your existing stack.

Compatibility Considerations

Not all players, browsers, or CDNs fully support LL-HLS as of 2025. Some devices may fall back to traditional HLS or other protocols, which can reintroduce higher latency. Always provide robust fallbacks and monitor audience device/browser statistics.
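One way to structure such fallbacks is a small decision function that maps detected capabilities to a playback path. The sketch below is deliberately abstract: the capability flags (`nativeHls`, `mediaSource`, `llHlsAvailable`) and the returned labels are hypothetical. In a browser they might be derived from checks such as `video.canPlayType('application/vnd.apple.mpegurl')` and `Hls.isSupported()`, plus whatever your origin advertises.

```javascript
// Sketch: pick a playback path with graceful fallback from LL-HLS to classic HLS.
// All flag names and return labels are illustrative assumptions.
function choosePlaybackPath({ nativeHls, mediaSource, llHlsAvailable }) {
  if (!nativeHls && !mediaSource) {
    return 'unsupported'; // no way to play HLS on this device
  }
  const engine = nativeHls ? 'native' : 'mse'; // prefer the platform player when present
  const mode = llHlsAvailable ? 'll-hls' : 'classic-hls';
  return `${mode}-${engine}`;
}

console.log(choosePlaybackPath({ nativeHls: true, mediaSource: false, llHlsAvailable: true }));  // ll-hls-native
console.log(choosePlaybackPath({ nativeHls: false, mediaSource: true, llHlsAvailable: false })); // classic-hls-mse
console.log(choosePlaybackPath({ nativeHls: false, mediaSource: false, llHlsAvailable: true })); // unsupported
```

Logging the chosen path per session gives you the device and browser statistics needed to see how much of your audience actually receives the low-latency stream.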

HLS Low Latency vs Other Protocols

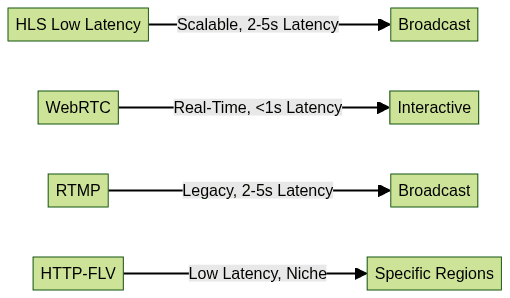

How does HLS low latency compare with other real-time streaming protocols?

- WebRTC: Offers sub-second latency and true real-time interactivity, but is complex to scale for large audiences.

- RTMP: Legacy protocol, low latency (~2–5s), but lacks native adaptive bitrate and is being phased out.

- HTTP-FLV: Used in China, good for low-latency push, but less standardized and less robust across networks than HLS.

LL-HLS is ideal for scalable, low-latency broadcast to large audiences, while WebRTC is best for interactive, small-scale sessions.

Real-World Implementation Example

Open Source and Cloud Solutions

A practical LL-HLS deployment might use an open-source stack like lhls-simple-live-platform or cloud services such as AWS Elemental or Oryx.

For developers interested in building hybrid solutions, combining LL-HLS with WebRTC for Android or Flutter WebRTC can enable both large-scale broadcast and real-time interactivity across platforms.

Example: lhls-simple-live-platform

    git clone https://github.com/epiclabs-io/lhls-simple-live-platform.git
    cd lhls-simple-live-platform
    docker-compose up

This launches a local LL-HLS origin, segmenter, and player UI. For production, cloud providers offer managed LL-HLS pipelines:

- AWS Elemental MediaLive/MediaPackage

- Oryx Streaming Platform

- Wowza Streaming Engine

Each provides reference architectures and API access to enable rapid, scalable deployments in 2025.

Conclusion and Key Takeaways

HLS low latency is reshaping real-time streaming by combining sub-5-second glass-to-glass latency with the reach and reliability of HTTP delivery. By adopting LL-HLS, tuning encoder and server settings, and optimizing player experiences, you can deliver interactive, ultra-fast streams at scale. Experiment, monitor, and iterate—2025 is the year for next-gen live streaming.


FAQ