Introduction: Understanding the Need for Low Latency Streaming Protocols

In today's fast-paced digital world, users expect instant gratification, and when they stream video and audio, delays are frustrating. Low latency streaming protocols address this: they are designed to minimize the delay between when a video or audio signal is captured and when it is displayed to the end user.

What is Low Latency Streaming?

Low latency streaming refers to the delivery of video and audio content with minimal delay, ideally approaching real time. The delay in question, often called glass-to-glass latency, is the time a signal takes to travel from the camera to the viewer's screen, including capture, encoding, transport, buffering, decoding, and display. Reducing it shrinks the perceived lag and makes the experience more interactive and engaging.
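To make "minimal delay" concrete, it helps to treat glass-to-glass latency as a budget: the sum of the delays added by each stage of the pipeline. The sketch below adds up a set of per-stage delays and ranks the biggest contributors. The stage names and millisecond values are hypothetical placeholders, not measurements, chosen only to show how a single large buffer can dominate the total.

```python
# Hypothetical per-stage delays in milliseconds. Real values depend on the
# camera, encoder, protocol, network path, and player buffer settings.
STAGE_DELAYS_MS = {
    "capture": 17,
    "encode": 30,
    "packetize_and_send": 5,
    "network": 40,
    "jitter_buffer": 120,
    "decode": 15,
    "render": 17,
}


def glass_to_glass_ms(stages: dict[str, float]) -> float:
    """End-to-end latency is the sum of the delay added by every stage."""
    return sum(stages.values())


def largest_contributors(stages: dict[str, float], n: int = 3) -> list[tuple[str, float]]:
    """Return the n stages that add the most delay, largest first."""
    return sorted(stages.items(), key=lambda item: item[1], reverse=True)[:n]


if __name__ == "__main__":
    total = glass_to_glass_ms(STAGE_DELAYS_MS)
    print(f"Glass-to-glass latency: {total} ms")
    for name, delay in largest_contributors(STAGE_DELAYS_MS):
        print(f"  {name}: {delay} ms ({delay / total:.0%})")
```

Attacking the largest contributor first (here, the hypothetical receive buffer) usually pays off more than shaving a few milliseconds off a small stage.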

Why is Low Latency Important?

Low latency is crucial for a variety of applications. Consider live sports events where viewers want to see the action as it happens. In interactive live streaming, like online gaming or video conferencing, even slight delays can disrupt the experience. Real-time communication, such as tele-surgery or drone control, demands ultra-low latency streaming to ensure accurate and immediate responses. The benefits of low latency include improved user engagement, enhanced interactivity, and the ability to support real-time applications.

For businesses, low latency video streaming translates to better customer satisfaction and a competitive edge. Reducing delays improves the user experience, making your streaming service more appealing than competitors with higher latency.

The Landscape of Low Latency Streaming Protocols

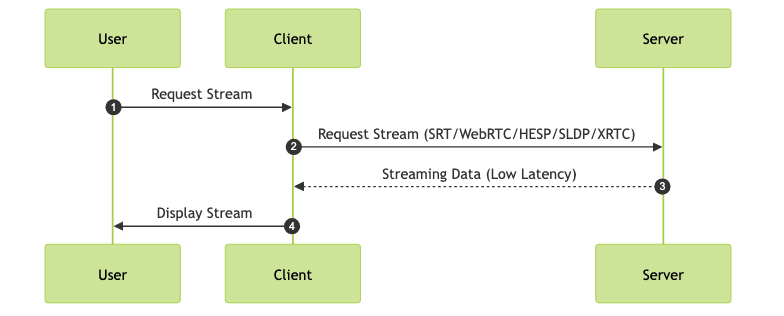

The world of real-time streaming protocols is diverse, with various options available, each with its own strengths and weaknesses. Some popular low delay streaming protocols include SRT, WebRTC, HESP, SLDP, and XRTC. Understanding the nuances of each protocol is key to selecting the best fit for your specific use case.

Top Low Latency Streaming Protocols: A Comparative Analysis

Let's dive into a detailed comparison of some of the leading protocols designed for low latency streaming.

SRT (Secure Reliable Transport)

SRT (Secure Reliable Transport) is an open-source, UDP-based video transport protocol that optimizes streaming performance across unpredictable networks. It prioritizes secure and reliable delivery of high-quality video with low latency. SRT uses Advanced Encryption Standard (AES) encryption to protect content and recovers lost packets through selective retransmission within a configurable latency window, which keeps streams intact over lossy networks. SRT is particularly well-suited for contribution and distribution workflows, especially when traversing the public internet, so SRT support is worth looking for when evaluating low latency streaming solutions.
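SRT's loss recovery is receiver-driven: the receiver notices gaps in packet sequence numbers and sends negative acknowledgements (NAKs) so the sender can retransmit, provided the retransmission can still arrive within the configured latency window. The sketch below shows only the gap-detection step, under simplifying assumptions: the `LossDetector` class is hypothetical, sequence numbers never wrap (real SRT uses 31-bit sequence numbers that do), and timing and the actual NAK packet format are ignored.

```python
class LossDetector:
    """Track arriving sequence numbers and report newly detected gaps."""

    def __init__(self, first_seq: int) -> None:
        self.expected = first_seq       # next sequence number we expect
        self.missing: set[int] = set()  # gaps not yet filled by retransmission

    def on_packet(self, seq: int) -> list[int]:
        """Process one arriving packet; return sequence numbers to NAK."""
        if seq in self.missing:         # a retransmission filled a gap
            self.missing.discard(seq)
            return []
        if seq < self.expected:         # duplicate of a packet already received
            return []
        lost = list(range(self.expected, seq))  # everything skipped over
        self.missing.update(lost)
        self.expected = seq + 1
        return lost


if __name__ == "__main__":
    detector = LossDetector(first_seq=100)
    for seq in [100, 101, 104, 102, 105, 103]:
        nak = detector.on_packet(seq)
        if nak:
            print(f"packet {seq}: NAK {nak}")
    print("still missing:", sorted(detector.missing))
```

In a real receiver, a gap still open when its playout deadline passes is given up on rather than waited for, which is how SRT trades a bounded delay for reliability.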

SRT Server Configuration Example

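```python
# NOTE: `srt` stands for a Python binding to libsrt with a socket-like API.
# The module name and the Socket/SRTError names are illustrative; adapt the
# calls to the binding you actually use (the PyPI package named "srt" is an
# unrelated subtitle-file library).
import srt

HOST, PORT = "0.0.0.0", 1234
# 1316 bytes = 7 MPEG-TS packets of 188 bytes, the usual SRT live-mode payload.
PAYLOAD_SIZE = 1316

# Create an SRT socket, bind it, and listen for incoming callers
s = srt.Socket()
s.bind((HOST, PORT))
s.listen(10)
print(f"SRT server listening on port {PORT}...")

# Accept a single connection
conn, addr = s.accept()
print("Connection from:", addr)

try:
    # Receive data until the caller disconnects or an error occurs
    while True:
        try:
            data = conn.recv(PAYLOAD_SIZE)
        except srt.SRTError as e:
            print("SRT error:", e)
            break
        if not data:
            break
        # Process the received data (here: just report its size)
        print(f"Received {len(data)} bytes")
finally:
    # Always release both sockets, even after an error
    conn.close()
    s.close()
```

SRT Client Configuration Example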

```python
# NOTE: uses the same assumed `srt` binding as the server example.
import sys

import srt

SERVER = ("server_ip_address", 1234)  # replace with the server's address

# Create an SRT socket (caller side)
s = srt.Socket()

try:
    # Connect to the listening server
    try:
        s.connect(SERVER)
        print("Connected to SRT server")
    except srt.SRTError as e:
        print("SRT connection error:", e)
        sys.exit(1)

    # Send data
    message = b"Hello, SRT server!"
    try:
        s.send(message)
        print("Sent data:", message)
    except srt.SRTError as e:
        print("SRT error:", e)
finally:
    # Close the socket (also runs when sys.exit() is called above)
    s.close()
```

WebRTC (Web Real-Time Communication)

WebRTC (Web Real-Time Communication) is an open-source project and set of standards that gives browsers and mobile applications real-time communication capabilities through simple APIs. WebRTC supports sending video, voice, and generic data between peers, and it encrypts all media with DTLS-SRTP. Its primary advantages are its widespread browser support and its peer-to-peer architecture, which reduces server load for many use cases. WebRTC is often used in video conferencing, live streaming, and gaming applications that require sub-second latency.
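Peers find a network path to each other by exchanging ICE candidates; in a browser, the `onicecandidate` handler in the setup code below receives each one as an `RTCIceCandidate` whose `candidate` property is a text line. The sketch below parses such a line using the field order of the SDP `candidate` attribute (foundation, component, transport, priority, address, port, `typ`, candidate type, then optional name/value pairs such as `raddr` and `rport`). The function name is hypothetical, validation is minimal, and the sample addresses come from documentation ranges.

```python
def parse_ice_candidate(line: str) -> dict[str, object]:
    """Parse an ICE candidate attribute into its named fields.

    Accepts "candidate:..." (as in RTCIceCandidate.candidate) or the SDP
    form "a=candidate:...".
    """
    if line.startswith("a="):
        line = line[2:]
    if not line.startswith("candidate:"):
        raise ValueError("not an ICE candidate line")
    tokens = line[len("candidate:"):].split()
    if len(tokens) < 8 or tokens[6] != "typ":
        raise ValueError("malformed ICE candidate")
    fields: dict[str, object] = {
        "foundation": tokens[0],
        "component": int(tokens[1]),     # 1 = RTP, 2 = RTCP
        "transport": tokens[2].lower(),  # usually "udp"
        "priority": int(tokens[3]),
        "address": tokens[4],
        "port": int(tokens[5]),
        "type": tokens[7],               # host, srflx, prflx, or relay
    }
    # Remaining tokens are name/value pairs, e.g. raddr, rport, generation.
    extras = tokens[8:]
    for name, value in zip(extras[::2], extras[1::2]):
        fields[name] = value
    return fields


if __name__ == "__main__":
    sample = ("candidate:842163049 1 udp 1677729535 203.0.113.5 54400 "
              "typ srflx raddr 192.168.1.10 rport 54400 generation 0")
    print(parse_ice_candidate(sample))
```

A `srflx` (server-reflexive) candidate like the sample is the public address a STUN server observed, while `relay` candidates route media through a TURN server when no direct path works.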

WebRTC Peer Connection Setup

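```javascript
// Assumptions: `sendMessage` is your app's signaling function (for example a
// WebSocket send), and the page contains <video id="remoteVideo" autoplay playsinline>.
// The public STUN server below is only an example.
const configuration = {
  iceServers: [{ urls: 'stun:stun.l.google.com:19302' }]
};
const peerConnection = new RTCPeerConnection(configuration);
const remoteVideo = document.getElementById('remoteVideo');

// Send any ICE candidates to the other peer
peerConnection.onicecandidate = event => {
  if (event.candidate) {
    sendMessage({
      type: 'candidate',
      candidate: event.candidate
    });
  }
};

// Handle incoming streams
peerConnection.ontrack = event => {
  remoteVideo.srcObject = event.streams[0];
};

// Request audio and video from the remote peer; without any transceivers
// or tracks, the offer would contain no media sections.
peerConnection.addTransceiver('video', { direction: 'recvonly' });
peerConnection.addTransceiver('audio', { direction: 'recvonly' });

// Create an offer, apply it locally, then send it to the remote peer
peerConnection.createOffer()
  .then(offer => peerConnection.setLocalDescription(offer))
  .then(() => {
    sendMessage({
      type: 'offer',
      sdp: peerConnection.localDescription.sdp
    });
  })
  .catch(error => console.error('Failed to create offer:', error));
```

HESP (High Efficiency Streaming Protocol)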

HESP (High Efficiency Streaming Protocol) is a relatively new, HTTP-based protocol designed to deliver high-quality video with low latency and low bandwidth consumption. It is optimized for adaptive bitrate streaming (ABR) and supports fast switching between quality levels in real time. This makes HESP a compelling choice for applications where bandwidth is limited or unpredictable, and a way to bring low latency to adaptive bitrate delivery.
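The core decision in adaptive bitrate streaming is which rendition to play given the bandwidth currently available. Below is a common throughput-based heuristic, not HESP's own algorithm or any specific player's: pick the highest bitrate that fits under a safety margin of the measured throughput. The bitrate ladder and the 0.8 safety factor are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    bitrate_kbps: int


def choose_rendition(renditions: list[Rendition], throughput_kbps: float,
                     safety: float = 0.8) -> Rendition:
    """Pick the highest-bitrate rendition that fits within `safety` times the
    measured throughput; fall back to the lowest rendition if none fits."""
    if not renditions:
        raise ValueError("no renditions available")
    ladder = sorted(renditions, key=lambda r: r.bitrate_kbps)
    budget = throughput_kbps * safety
    affordable = [r for r in ladder if r.bitrate_kbps <= budget]
    return affordable[-1] if affordable else ladder[0]


# Hypothetical bitrate ladder for illustration.
LADDER = [
    Rendition("1080p", 6000),
    Rendition("720p", 3000),
    Rendition("480p", 1200),
    Rendition("240p", 400),
]

if __name__ == "__main__":
    for measured in (8000, 5000, 1000, 300):
        print(measured, "kbps ->", choose_rendition(LADDER, measured).name)
```

The safety margin leaves headroom for throughput dips; the faster a protocol can switch renditions, the less headroom it needs to avoid stalls.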

SLDP (Softvelum Low Delay Protocol)

SLDP (Softvelum Low Delay Protocol) is a proprietary, WebSocket-based protocol developed by Softvelum. It's designed for low latency streaming with a focus on reliability and scalability. SLDP supports a variety of codecs and features, including adaptive bitrate streaming, and is often used in professional broadcasting and live event streaming, where it gives companies a practical way to bring lower latency to their regular live events.

XRTC (XRTC Protocol)

XRTC (XRTC Protocol) is another emerging protocol focused on real-time communication and low latency streaming. XRTC aims to combine the best features of existing protocols while adding new innovations. However, XRTC is still under development, so it is not as mature or widely adopted as the other protocols on this list, and its performance should be evaluated carefully before relying on it.

XRTC Connection Example

```java
import java.nio.charset.StandardCharsets;

// NOTE: XRTCClient and XRTCException stand for whatever client SDK an XRTC
// implementation provides; the class and method names are illustrative.

// Initialize XRTC client
XRTCClient client = new XRTCClient();

// Set server address and port
client.setServerAddress("xrtc.example.com");
client.setServerPort(8080);

try {
    // Connect to the server
    client.connect();
    System.out.println("Connected to XRTC server.");

    // Send data, encoding the text explicitly as UTF-8
    client.send("Hello XRTC Server!".getBytes(StandardCharsets.UTF_8));

    // Receive data and decode it with the same charset
    byte[] receivedData = client.receive();
    System.out.println("Received: " + new String(receivedData, StandardCharsets.UTF_8));
} catch (XRTCException e) {
    System.err.println("XRTC Error: " + e.getMessage());
} finally {
    // Disconnect; this also runs if connect() or I/O failed
    client.disconnect();
    System.out.println("Disconnected from XRTC server.");
}
```

Choosing the Right Low Latency Streaming Protocol for Your Needs

Selecting the appropriate low latency transport protocol is a critical decision that depends on several factors. No single protocol is universally optimal; the best choice depends heavily on your specific requirements and constraints, starting with how much lower latency actually matters for your use case.

Factors to Consider

- Latency Requirements: What is the maximum acceptable delay for your application? Some protocols offer lower latency than others.

- Network Conditions: How reliable is the network you will be streaming over? Some protocols are more resilient to packet loss and network congestion.

- Scalability: How many concurrent viewers do you need to support? Some protocols are more scalable than others.

- Browser Compatibility: Does your application require browser support? If so, WebRTC might be a good choice.

- Security: Do you need to protect your content from unauthorized access? SRT offers built-in encryption.

- Cost: Are there any licensing fees associated with the protocol or its implementation?

Protocol Comparison Table

| Protocol | Latency | Network Resilience | Scalability | Browser Support | Security | Complexity | Use Cases |
|---|---|---|---|---|---|---|---|
| SRT | Low | High | Good | No | Yes | Medium | Contribution, Distribution, Remote Production |
| WebRTC | Very Low | Medium | Medium | Yes | Yes | High | Video Conferencing, Interactive Streaming |
| HESP | Low | Good | High | Yes (MSE-based player) | No | Medium | Adaptive Bitrate Streaming, Broadcast |
| SLDP | Low | Good | High | Yes (MSE-based player) | Yes | Medium | Professional Broadcasting, Live Events |
| XRTC | Very Low | Medium | Medium | Limited | Yes | High | Real-time Communication, Emerging Apps |
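As a first pass, the factors and the table above can be encoded as data and filtered mechanically before any hands-on testing. This sketch captures only the table's Latency and Security columns; the `ProtocolProfile` type and `shortlist` helper are hypothetical, and the table's coarse ratings are no substitute for measuring the protocols on your own network.

```python
from dataclasses import dataclass

# Lower rank = lower latency, following the table's two latency tiers.
LATENCY_RANK = {"Very Low": 0, "Low": 1}


@dataclass(frozen=True)
class ProtocolProfile:
    name: str
    latency: str      # value from the table's Latency column
    encryption: bool  # True where the table's Security column says "Yes"


PROTOCOLS = [
    ProtocolProfile("SRT", "Low", True),
    ProtocolProfile("WebRTC", "Very Low", True),
    ProtocolProfile("HESP", "Low", False),
    ProtocolProfile("SLDP", "Low", True),
    ProtocolProfile("XRTC", "Very Low", True),
]


def shortlist(max_latency: str, need_encryption: bool) -> list[str]:
    """Return protocols whose latency tier is at or below `max_latency` and
    that satisfy the encryption requirement, in table order."""
    limit = LATENCY_RANK[max_latency]
    return [
        p.name
        for p in PROTOCOLS
        if LATENCY_RANK[p.latency] <= limit and (p.encryption or not need_encryption)
    ]


if __name__ == "__main__":
    print(shortlist("Very Low", need_encryption=True))
    print(shortlist("Low", need_encryption=True))
```

Further columns (scalability, browser support, complexity) can be added as extra fields in the same way once you decide how to rank them.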

Use Cases for Different Protocols

- SRT: Ideal for contribution and distribution workflows, such as sending live video feeds from remote locations to a broadcast studio.

- WebRTC: Well-suited for interactive applications, such as video conferencing, online gaming, and remote collaboration tools.

- HESP: A strong contender for adaptive bitrate streaming in broadcast and OTT environments.

- SLDP: Appropriate for professional broadcasting and live event streaming where reliability and scalability are paramount.

- XRTC: Suitable for emerging real-time communication applications, such as metaverse experiences and advanced IoT devices.

Ultimately, the right streaming protocol depends on your requirements, so match each candidate against the applications you actually need to support.

Implementing a Low Latency Streaming Solution

Implementing a low latency streaming solution requires careful planning and execution. Here's a general overview of the process.

Setting up Your Infrastructure

- Choose a streaming server: Select a streaming server that supports your chosen protocol. Options include Nimble Streamer, Wowza Streaming Engine, and custom solutions.

- Configure your network: Ensure your network is properly configured to handle the bandwidth requirements of your stream. Use a CDN if you need global distribution.

- Optimize your encoding settings: Use encoder settings that minimize latency without sacrificing too much video quality, such as a short keyframe interval, no B-frames, and no lookahead (x264's "zerolatency" tune applies the last two).
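As one concrete example of low-latency encoding settings, the sketch below assembles (but does not run) an FFmpeg command that encodes H.264 with x264's `zerolatency` tune, uses a one-second keyframe interval, and sends MPEG-TS over SRT. It assumes an FFmpeg build with libx264 and libsrt; the helper name, host, port, and defaults are placeholders. FFmpeg's SRT `latency` option is given in microseconds, so the helper converts from milliseconds.

```python
import shlex


def build_ffmpeg_srt_command(src: str, host: str, port: int, fps: int = 30,
                             gop_seconds: float = 1.0,
                             srt_latency_ms: int = 120) -> list[str]:
    """Build (without running) an FFmpeg command that encodes H.264 with
    low-latency x264 settings and sends MPEG-TS over SRT in caller mode."""
    gop_frames = max(1, round(fps * gop_seconds))  # keyframe interval in frames
    # FFmpeg's libsrt protocol expects `latency` in microseconds.
    url = f"srt://{host}:{port}?mode=caller&latency={srt_latency_ms * 1000}"
    return [
        "ffmpeg",
        "-re", "-i", src,             # read the input at its native frame rate
        "-c:v", "libx264",
        "-preset", "veryfast",        # faster preset: less CPU time per frame
        "-tune", "zerolatency",       # x264: no B-frames, no lookahead
        "-g", str(gop_frames),
        "-bf", "0",                   # redundant with zerolatency, but explicit
        "-c:a", "aac",
        "-f", "mpegts", url,
    ]


if __name__ == "__main__":
    cmd = build_ffmpeg_srt_command("input.mp4", "203.0.113.10", 9000)
    print(shlex.join(cmd))
```

Passing the returned list to `subprocess.run` starts the stream; keeping it as a list avoids shell-quoting problems with the `&` inside the `srt://` URL.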

Integrating the Protocol into Your Application

- Use a streaming SDK or library: Integrate a streaming SDK or library into your application to handle the protocol-specific details.

- Implement error handling: Implement robust error handling to gracefully handle network issues and other potential problems.

- Develop a player application: Build a player that can handle the stream from your streaming server, either with an existing player framework or from scratch.
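Robust error handling usually includes reconnecting after a dropped connection without hammering the server. The sketch below retries a connection callable with capped exponential backoff and "full jitter" (a random sleep between zero and the current backoff bound). The `connect` callable and the choice to treat `OSError` as retryable are assumptions; substitute the exception types your streaming SDK actually raises.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def backoff_delays(attempts: int, base: float = 0.5, cap: float = 8.0) -> list[float]:
    """Upper bounds for the sleep after each failed attempt:
    base, 2*base, 4*base, ... never exceeding `cap` seconds."""
    return [min(cap, base * 2 ** i) for i in range(attempts)]


def connect_with_retry(connect: Callable[[], T], attempts: int = 5,
                       base: float = 0.5, cap: float = 8.0,
                       sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `connect` until it succeeds, sleeping a random time up to the
    current backoff bound after each failure. Re-raises the last error once
    all attempts are used up."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt, bound in enumerate(backoff_delays(attempts, base, cap)):
        try:
            return connect()
        except OSError:  # assumption: network failures surface as OSError
            if attempt == attempts - 1:
                raise
            sleep(random.uniform(0, bound))
    raise AssertionError("unreachable: the loop returns or raises")
```

The jitter spreads reconnections from many clients over time, so a server restart is not followed by a synchronized burst of connection attempts.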

Testing and Optimization

- Test your stream under various network conditions: Simulate different network conditions to ensure your stream is resilient to packet loss and congestion.

- Monitor latency: Use monitoring tools to track latency and identify potential bottlenecks, then use those measurements to adjust settings and converge on the best low latency streaming architecture for your workload.

- Optimize your configuration: Continuously optimize your configuration to achieve the lowest possible latency without compromising video quality.
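Latency monitoring is easier to act on when it is reduced to a few numbers. Assuming you can pair each frame's capture time with its display time on a common clock (for example by filming a burned-in timecode next to the player), this sketch reports the mean, median, 95th percentile, and maximum glass-to-glass latency. The function name and report fields are hypothetical.

```python
import statistics


def latency_report(capture_ms: list[float], display_ms: list[float]) -> dict[str, float]:
    """Summarize per-frame glass-to-glass latency from paired timestamps.

    capture_ms[i] and display_ms[i] must describe the same frame, measured
    on the same clock.
    """
    if len(capture_ms) != len(display_ms) or len(capture_ms) < 2:
        raise ValueError("need at least two paired samples")
    samples = [shown - captured for captured, shown in zip(capture_ms, display_ms)]
    # 99 cut points; index 94 is the 95th percentile.
    cuts = statistics.quantiles(samples, n=100, method="inclusive")
    return {
        "mean": statistics.fmean(samples),
        "p50": statistics.median(samples),
        "p95": cuts[94],
        "max": max(samples),
    }


if __name__ == "__main__":
    capture = [0, 33, 66, 100, 133]
    display = [200, 233, 266, 400, 333]
    print(latency_report(capture, display))
```

Tracking the 95th percentile alongside the mean matters because occasional stalls, which viewers notice most, barely move the average.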

Future Trends in Low Latency Streaming

The field of low latency streaming is constantly evolving, with new technologies and protocols emerging to meet the growing demand for real-time video and audio delivery.

Emerging Technologies

- AV1 codec: AV1 is a next-generation video codec that offers improved compression efficiency compared to H.264 and H.265, potentially reducing bandwidth requirements and improving video quality at low latencies.

- 5G networks: 5G networks offer lower latency and higher bandwidth compared to 4G, enabling new possibilities for real-time streaming applications.

- Edge computing: Edge computing processes data closer to where it is produced or consumed, shortening network paths, reducing latency, and improving the responsiveness of streaming applications.
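A quick calculation shows why moving processing to the edge helps: light in optical fiber travels at roughly 200,000 km/s, so distance alone sets a floor on round-trip time. The sketch below computes that propagation-only lower bound; the distances in the example are hypothetical, and real paths add routing detours, queuing, and processing on top, so treat the result as a minimum rather than an estimate.

```python
# Light in optical fiber travels at roughly 2e8 m/s (about two-thirds of its
# speed in a vacuum), i.e. about 200 km per millisecond.
FIBER_SPEED_KM_PER_MS = 200.0


def fiber_rtt_ms(distance_km: float) -> float:
    """Propagation-only lower bound on round-trip time over fiber; ignores
    routing detours, queuing, and processing delays."""
    one_way_ms = distance_km / FIBER_SPEED_KM_PER_MS
    return 2 * one_way_ms


if __name__ == "__main__":
    for label, km in [("distant origin", 6000), ("regional edge", 50)]:
        print(f"{label} ({km} km): at least {fiber_rtt_ms(km):.1f} ms RTT")
```

For these hypothetical distances the floor drops from about 60 ms to 0.5 ms per round trip, and protocols that need several round trips (handshakes, retransmissions) feel that difference multiple times.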

Challenges and Opportunities

While the outlook for low latency streaming is bright, several challenges remain.

- Bandwidth limitations: Bandwidth limitations remain a challenge in many areas, especially for mobile users.

- Complexity: Implementing low latency streaming solutions can be complex, requiring specialized expertise.

- Security: Ensuring the security of low latency streams is crucial, especially for sensitive content.

There are also many opportunities to improve low latency streaming, such as better ABR algorithms, new codecs, and better tools to test and monitor performance.

Conclusion: The Evolution of Low Latency Streaming

Low latency streaming protocols are essential for delivering real-time experiences to viewers around the world. As technology continues to evolve, we can expect to see even lower latencies, improved video quality, and new applications for real-time streaming. The landscape of low latency streaming is dynamic and exciting, promising to revolutionize how we consume and interact with video and audio content. Understanding various protocols enables developers to make informed decisions and create next-generation streaming applications.


FAQ