Introduction: Why Low Latency Java?

Low latency Java refers to the specialized use of the Java platform to achieve sub-millisecond response times, minimizing the time between input and system response. In 2025, this is critical in domains like financial trading platforms, audio processing, multiplayer gaming, and other real-time systems where every microsecond counts. While high performance generally means maximizing throughput or operations per second, low latency Java prioritizes consistently short response times, even under load. This distinction is crucial for applications where unpredictable delays (jitter) can cause failures or missed opportunities. With advancements in JVM technology and a robust ecosystem, Java continues to be a strong contender for low-latency solutions in mission-critical environments.

Understanding Low Latency in Java

Low latency Java typically targets response times in the sub-millisecond range, often aiming for soft real-time guarantees, where deadlines are met with high probability but not absolute certainty. Common use cases include electronic trading systems, audio DSP chains, robotics, and telemetry. Achieving such low latency is challenging due to the Java Virtual Machine (JVM) architecture, garbage collection (GC) pauses, and OS-level thread scheduling overhead.

For example, GC pauses can introduce unpredictable delays, while thread scheduling can cause context switches that disrupt real-time guarantees. The key challenge for Java developers is to architect systems that minimize these sources of jitter. Real-world low latency Java applications often rely on custom concurrency models, careful memory management, and advanced JVM tuning. In the context of real-time communications, developers working with WebRTC on Android or Flutter WebRTC must also consider these latency factors to ensure seamless audio and video experiences.

Key Principles of Low Latency Java

The core principle of low latency Java is to minimize response time rather than just maximize throughput. In practice, this means designing for predictability—ensuring that worst-case latencies are as low as possible, even if it means sacrificing some raw speed. Predictable latency is often more valuable than peak throughput in real-time Java systems, especially when Java garbage collection or OS thread scheduling can introduce spikes.

Key best practices include:

- Avoiding blocking operations and locks

- Designing for concurrent Java workloads using lock-free programming

- Monitoring and minimizing GC pauses

- Prioritizing code paths that impact critical response times

For developers building real-time communication platforms, leveraging a robust Video Calling API or integrating a Live Streaming API SDK can help maintain low-latency interactions while focusing on these core Java principles.

Java JVM and Garbage Collection

Garbage collection is one of the main culprits for latency spikes in Java. Throughput-oriented collectors such as ParallelGC use stop-the-world pauses, during which all application threads are halted while memory is reclaimed. CMS (deprecated in JDK 9 and removed in JDK 14) was mostly concurrent, but it still paused the application for some phases. Pauses of even a few milliseconds can be unacceptable in low latency Java systems.

Recent JVM innovations, such as ZGC and Shenandoah (both production-ready in OpenJDK since JDK 15), offer pauseless or near-pauseless GC. They reduce worst-case GC pause times to single-digit milliseconds or less by performing most of their work, including compaction, concurrently with application threads. They still require tuning, however. Concurrent collection consumes CPU time and needs spare heap headroom to finish before allocation outpaces it, so throughput and latency must be balanced.

GC tuning strategies for low latency Java include:

- Selecting a low-latency GC algorithm (e.g., ZGC or Shenandoah)

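- Sizing the heap deliberately (a smaller heap shortens collections for traditional collectors, while concurrent collectors such as ZGC need enough headroom to finish a cycle before the heap fills) or leveraging region-based allocation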

- Using JVM flags to limit GC-induced jitter
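Whichever collector you choose, it helps to measure GC activity from inside the application as well as with external tools. The sketch below uses the standard `java.lang.management` API to print each collector's cumulative collection count and accumulated collection time. The class name `GcStats` is hypothetical. Bean names depend on the selected collector. For concurrent collectors, the reported time may not correspond to stop-the-world pause time alone, so treat it as a coarse signal rather than a pause measurement.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

public class GcStats {
    /** One line per collector: name, cumulative collection count, accumulated time. */
    public static List<String> report() {
        List<String> lines = new ArrayList<>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            // getCollectionCount() returns -1 if the collector does not track it;
            // getCollectionTime() is an approximate accumulated elapsed time in ms.
            lines.add(String.format("%s: count=%d, time=%d ms",
                    gc.getName(), gc.getCollectionCount(), gc.getCollectionTime()));
        }
        return lines;
    }

    public static void main(String[] args) {
        // Generate short-lived garbage so the collectors likely have something to report.
        Object[] keep = new Object[16];
        for (int i = 0; i < 1_000_000; i++) {
            keep[i & 15] = new byte[64]; // stored in 'keep', so it escapes and is really allocated
        }
        System.out.println("last slot holds " + ((byte[]) keep[15]).length + " bytes");
        report().forEach(System.out::println);
    }
}
```

Sampling `report()` periodically and diffing the counts shows how often collections happen under real load. Java Flight Recorder remains the better tool for attributing individual pauses.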

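Example JVM arguments for low-latency GC. Java Flight Recorder is built into the JDK and is started with -XX:StartFlightRecording rather than a -javaagent jar. ZGC has not needed -XX:+UnlockExperimentalVMOptions since JDK 15. -XX:MaxGCPauseMillis is a pause-time goal used by collectors such as G1, not by ZGC, so both of those flags are omitted here:

```shell
# app.jar is a placeholder for your application.
# ZCollectionInterval forces a ZGC cycle at a fixed interval (in seconds);
# SoftRefLRUPolicyMSPerMB=1 makes soft references get cleared more eagerly.
java -XX:+UseZGC \
     -XX:ZCollectionInterval=2 \
     -XX:SoftRefLRUPolicyMSPerMB=1 \
     -XX:StartFlightRecording=duration=60s,filename=gc.jfr \
     -jar app.jar
```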

Monitoring GC pauses with tools like Java Flight Recorder can help identify and mitigate sources of latency. For developers looking to embed a video calling SDK into their Java applications, understanding and tuning GC behavior is essential for delivering a smooth user experience.

Threading, Concurrency, and Context Switching

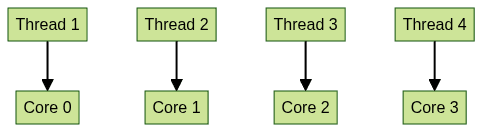

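Thread management is a crucial factor in low latency Java. A context switch occurs when the OS suspends one thread to run another on the same core. The scheduler may also migrate a thread between cores, which leaves its CPU caches cold. Both introduce unpredictable delays. Techniques like thread affinity and thread pinning (forcing Java threads to run on specific CPU cores) help reduce these delays and improve predictability. The Java standard library has no API for this, so pinning is usually done with OS tools such as taskset on Linux or with third-party libraries.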

Lock-free programming (using atomic variables and compare-and-swap, or CAS, operations) is essential for minimizing thread contention and avoiding locks that can cause unpredictable blocking. In low-latency systems, queues are often used to communicate between threads. A ring buffer, popularized in Java by the LMAX Disruptor library, is a fixed-size, preallocated circular queue. Because its slots are reused, it creates little garbage, and in its simplest single-producer/single-consumer form it needs no locks at all. The Disruptor itself also supports multiple producers and consumers.
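The CAS pattern can be sketched with `java.util.concurrent.atomic.AtomicLong`. The example below is a minimal illustration, not a library API: the hypothetical class `LatencyMax` tracks the largest latency seen so far across many threads. Each writer re-reads the current value and retries `compareAndSet` until it either installs a larger value or sees that a larger one is already present. No thread ever blocks on a lock.

```java
import java.util.concurrent.atomic.AtomicLong;

public class LatencyMax {
    private final AtomicLong max = new AtomicLong(Long.MIN_VALUE);

    /** Lock-free update: retry the CAS until our value is installed or is no longer larger. */
    public void record(long nanos) {
        long current = max.get();
        while (nanos > current) {
            if (max.compareAndSet(current, nanos)) {
                return; // our value won
            }
            current = max.get(); // another thread changed it; re-read and retry
        }
    }

    public long get() {
        return max.get();
    }

    public static void main(String[] args) throws InterruptedException {
        LatencyMax tracker = new LatencyMax();
        Thread[] writers = new Thread[4];
        for (int t = 0; t < writers.length; t++) {
            final int offset = t;
            writers[t] = new Thread(() -> {
                for (int i = 0; i < 100_000; i++) {
                    tracker.record(i * 4L + offset);
                }
            });
            writers[t].start();
        }
        for (Thread w : writers) w.join();
        System.out.println(tracker.get()); // 399999
    }
}
```

For this specific case, `AtomicLong.accumulateAndGet(nanos, Math::max)` performs an equivalent retry loop internally. The explicit loop is shown to make the CAS mechanics visible.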

For cross-platform development, using a JavaScript video and audio calling SDK or a React Native video and audio calling SDK can help maintain low-latency communication across different environments.

Example: Simple Java ring buffer (single-producer, single-consumer):

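```java
/**
 * Single-producer/single-consumer ring buffer of ints.
 * Safe only when exactly one thread calls offer() and exactly one
 * (possibly different) thread calls poll(). One slot is always left
 * empty to distinguish "full" from "empty", so capacity is size - 1.
 */
public class RingBuffer {
    private final int[] buffer;
    private final int size;
    // Volatile indices publish slot contents from one thread to the other.
    private volatile int write = 0;
    private volatile int read = 0;

    public RingBuffer(int size) {
        if (size < 2) throw new IllegalArgumentException("size must be >= 2");
        this.size = size;
        this.buffer = new int[size];
    }

    /** Producer side: returns false if the buffer is full. */
    public boolean offer(int value) {
        int w = write;
        int next = (w + 1) % size;
        if (next == read) return false; // buffer full
        buffer[w] = value;              // plain write of the slot...
        write = next;                   // ...published by the volatile write
        return true;
    }

    /** Consumer side: returns null if the buffer is empty (note: boxes the value). */
    public Integer poll() {
        int r = read;
        if (r == write) return null;    // buffer empty
        int value = buffer[r];
        read = (r + 1) % size;          // frees the slot for the producer
        return value;
    }
}
```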

Memory Management and Off-Heap Techniques

On-heap memory is managed by the JVM and subject to garbage collection, while off-heap memory is allocated outside the heap and managed manually. Using off-heap memory in low latency Java reduces GC pressure and enables direct, deterministic access to memory, which is crucial for predictable latency.

Popular techniques include:

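- DirectByteBuffer for off-heap buffers

- sun.misc.Unsafe (with caution) for manual memory management; its memory-access methods are deprecated for removal as of JDK 23, and the Foreign Function & Memory API (java.lang.foreign, final since JDK 22) is the supported replacement

- Libraries like Chronicle for ultra-low-latency off-heap storage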

- JNI/JNA for interfacing with native code

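Example: Simple off-heap allocation using ByteBuffer:

```java
import java.nio.ByteBuffer;

ByteBuffer offHeap = ByteBuffer.allocateDirect(1024); // 1 KiB outside the Java heap
offHeap.putInt(42);           // writes 4 bytes; position advances to 4
offHeap.flip();               // switch from writing to reading (position back to 0)
int value = offHeap.getInt(); // reads 42
```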

These tools help keep GC pauses out of the critical path, improving low latency Java performance in real-time scenarios. For applications that require high-quality audio, integrating a Voice SDK can further enhance real-time communication capabilities.

Performance Tuning and Benchmarking

Performance tuning is an iterative process in low latency Java. Key tools include:

- JVM options for GC and JIT tuning

- Java Flight Recorder for tracing latency spikes

- JMH (Java Microbenchmark Harness) for precise micro-latency measurements

Profiling for latency hotspots and measuring GC pauses are essential. Logging detailed pause times and application-level latency distributions provides insight for ongoing tuning.
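The sketch below shows why latency distributions reveal more than averages. It records per-operation latencies in nanoseconds into a preallocated `long[]`, so recording does not allocate on the hot path, and reports nearest-rank percentiles. The class `LatencyRecorder` and its methods are hypothetical. Production systems often use a dedicated histogram library such as HdrHistogram instead.

```java
import java.util.Arrays;

public class LatencyRecorder {
    private final long[] samples;
    private int count = 0;

    public LatencyRecorder(int capacity) {
        this.samples = new long[capacity]; // preallocated: record() never allocates
    }

    /** Records one latency in nanoseconds; drops samples once the buffer is full. */
    public void record(long nanos) {
        if (count < samples.length) {
            samples[count++] = nanos;
        }
    }

    /** Nearest-rank percentile, p in (0, 100]. Sorts a copy, so call it off the hot path. */
    public long percentile(double p) {
        if (count == 0) throw new IllegalStateException("no samples");
        long[] sorted = Arrays.copyOf(samples, count);
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(p * count / 100.0); // 1-based rank
        return sorted[Math.max(rank, 1) - 1];
    }

    public double mean() {
        long sum = 0;
        for (int i = 0; i < count; i++) sum += samples[i];
        return (double) sum / count;
    }

    public static void main(String[] args) {
        LatencyRecorder rec = new LatencyRecorder(1_000);
        // Synthetic data: 985 fast operations (10 us) and 15 slow outliers (5 ms).
        for (int i = 0; i < 985; i++) rec.record(10_000);
        for (int i = 0; i < 15; i++) rec.record(5_000_000);
        System.out.printf("mean=%.0f ns p50=%d ns p99=%d ns%n",
                rec.mean(), rec.percentile(50), rec.percentile(99));
        // prints: mean=84850 ns p50=10000 ns p99=5000000 ns
    }
}
```

In this synthetic run, the mean of about 85 microseconds describes almost no real request. The median shows the typical 10-microsecond case, and p99 exposes the 5-millisecond outliers.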

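Example: Simple JMH benchmark for micro-latency measurement. Polling after each offer keeps the buffer from filling up; otherwise every call after the first size - 1 would measure only the "buffer full" path. Returning the result lets JMH consume it, a standard guard against dead-code elimination:

```java
@Benchmark
@BenchmarkMode(Mode.SampleTime) // sample individual call times to report percentiles
public boolean testRingBufferOfferPoll() {
    // ringBuffer is assumed to be a field of a @State(Scope.Thread) class
    boolean accepted = ringBuffer.offer(ThreadLocalRandom.current().nextInt());
    ringBuffer.poll(); // drain so the buffer never fills
    return accepted;   // returned values are consumed by JMH
}
```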

JMH enables statistical analysis of tail latencies, helping you identify rare, long delays in your Java code. If you want to experiment with these techniques in your own projects, try it for free and see how low-latency Java can power your next real-time application.

Real-World Patterns and Libraries

Several libraries and patterns have emerged to help developers build low latency Java systems:

- Disruptor and custom ring buffers for fast inter-thread messaging

- Aeron for low-latency UDP messaging

- AsyncTransport for high-throughput networking

These libraries are widely used in trading systems, audio processing pipelines, and telemetry applications where sub-millisecond response times are mandatory. For example, a trading system might use Disruptor for matching engine events, while an audio application might use a low-latency ring buffer for sample transport.

Choosing when to use these libraries depends on your latency requirements and throughput needs. Specialized libraries often provide predictable, low GC overhead implementations that are hard to match with plain Java collections.

Best Practices and Common Pitfalls

Low latency Java demands discipline and careful engineering. Some key best practices:

- Avoid locks and minimize thread contention

- Prefer lock-free, single-writer designs for critical paths

- Monitor latency distributions, not just averages

- Tune JVM and GC parameters iteratively

- Focus on predictability over micro-optimizations
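The single-writer design from the list above can be sketched as follows. When exactly one thread ever updates a value, it needs no CAS retry loop and no lock. It can publish each new value with a release store, and readers on other threads observe it with an acquire load. This minimal illustration assumes JDK 9+ for `AtomicLong.getPlain`, `setRelease`, and `getAcquire`. The class `SequenceCounter` is hypothetical, and it is only correct while the single-writer rule actually holds.

```java
import java.util.concurrent.atomic.AtomicLong;

public class SequenceCounter {
    private final AtomicLong sequence = new AtomicLong();

    /** Must only ever be called from ONE thread (the single writer). */
    public void increment() {
        // A plain read is safe here because no other thread writes this value.
        long next = sequence.getPlain() + 1;
        // Release store: a reader that sees 'next' also sees the writer's earlier writes.
        sequence.setRelease(next);
    }

    /** May be called from any thread. */
    public long get() {
        return sequence.getAcquire();
    }

    public static void main(String[] args) throws InterruptedException {
        SequenceCounter counter = new SequenceCounter();
        Thread writer = new Thread(() -> {
            for (int i = 0; i < 1_000_000; i++) counter.increment();
        });
        writer.start();
        long observed = counter.get(); // readers never block the writer
        writer.join();
        System.out.println(observed <= 1_000_000); // true
        System.out.println(counter.get());         // 1000000
    }
}
```

If a second thread ever called `increment()`, updates could be lost. That is the trade-off: the design gives up generality to remove contention from the critical path.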

Common pitfalls include over-tuning based on synthetic benchmarks, ignoring the impact of GC, and failing to account for OS-level jitter.

Conclusion: The Future of Low Latency Java

As JVM technology advances in 2025 and beyond, Java continues to close the gap with lower-level languages for real-time and mission-critical systems. With ongoing improvements in garbage collection, JIT compilation, and concurrency models, low latency Java remains a powerful choice for developers building reliable, predictable, and high-performance applications.


FAQ