Introduction to Low Latency Cloud Computing

Low latency cloud computing has become the backbone of the digital experience in 2025, as users demand ultra-fast, highly responsive applications. In cloud computing, latency is the delay between a user action and the corresponding system response, and "low latency" means keeping that delay as small as possible. As real-time applications like online gaming, financial trading, AI inference, and IoT proliferate, reducing latency has become a mission-critical objective for developers and architects.

This blog explores the core principles, architecture patterns, best practices, and real-world use cases that define low latency cloud computing. We'll demystify technical concepts, walk through hands-on examples (including code and diagrams), and examine how modern infrastructure supports latency-sensitive workloads. Whether you're designing high-frequency trading platforms or multiplayer games, this guide will help you architect for speed, reliability, and scalability.

Understanding Latency in Cloud Computing

What is latency?

Latency, in the realm of cloud computing, is the time it takes for data to travel from the source to the destination. It is usually measured in milliseconds, often as round-trip time (RTT): the time for a request to reach its destination plus the time for the response to come back. It's crucial to distinguish latency from bandwidth: bandwidth measures how much data can be transferred per second, while latency measures how long a single unit of data takes to make the journey.
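To make the latency/bandwidth distinction concrete, here is a rough first-order model. It is an assumption that ignores TCP slow start, protocol overhead, and server processing: fetching a payload costs about one round trip plus the time to push the bytes onto the link (size divided by bandwidth). The function name and the example numbers are illustrative.

```python
def transfer_time_ms(payload_bytes: int, rtt_ms: float, bandwidth_mbps: float) -> float:
    """First-order estimate: one round trip plus serialization time on the link."""
    bits = payload_bytes * 8
    serialization_ms = bits / (bandwidth_mbps * 1_000_000) * 1000
    return rtt_ms + serialization_ms


# A 1 KB request over a 50 ms RTT path: a 100x faster link barely helps.
small_slow_link = transfer_time_ms(1_000, rtt_ms=50, bandwidth_mbps=100)
small_fast_link = transfer_time_ms(1_000, rtt_ms=50, bandwidth_mbps=10_000)
# A 100 MB transfer on the same path: now bandwidth dominates.
large_slow_link = transfer_time_ms(100_000_000, rtt_ms=50, bandwidth_mbps=100)

print(f"1 KB @ 100 Mbps:   {small_slow_link:.2f} ms")
print(f"1 KB @ 10 Gbps:    {small_fast_link:.2f} ms")
print(f"100 MB @ 100 Mbps: {large_slow_link:.0f} ms")
```

For small, chatty requests the RTT term dominates, which is why the rest of this guide focuses on shortening paths rather than only adding bandwidth.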

Latency is influenced by several factors:

- Physical distance: The farther the data must travel, the higher the latency.

- Routing complexity: Suboptimal network paths and multiple hops increase delays.

- Hardware performance: Network interface cards (NICs), switches, and routers introduce processing delays.

- Congestion and jitter: Variable delays (jitter) and crowded links further exacerbate latency.
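Physical distance sets a hard floor on latency. Light in optical fiber travels at roughly two-thirds of its vacuum speed (about 204,000 km/s for a refractive index near 1.47), so every 100 km of fiber adds roughly 1 ms of round-trip time before any routing, queuing, or processing delay. The sketch below computes that floor; the refractive index is a typical value, and the route lengths are illustrative assumptions (real fiber paths are usually longer than the straight-line distance).

```python
SPEED_OF_LIGHT_KM_S = 299_792.458
FIBER_REFRACTIVE_INDEX = 1.47  # typical for silica fiber; varies by fiber type


def min_rtt_ms(fiber_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone."""
    speed_in_fiber_km_s = SPEED_OF_LIGHT_KM_S / FIBER_REFRACTIVE_INDEX
    one_way_s = fiber_km / speed_in_fiber_km_s
    return 2 * one_way_s * 1000


# Illustrative fiber route lengths, not measurements of real links
for route, km in [("nearby edge site", 50), ("cross-country", 4_500), ("transoceanic", 6_000)]:
    print(f"{route:>16}: >= {min_rtt_ms(km):.1f} ms RTT")
```

No amount of hardware tuning can beat this bound, which is the core argument for moving compute closer to users.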

For developers building real-time communication solutions, understanding latency is especially important when working with technologies such as Flutter WebRTC or WebRTC on Android, where milliseconds can make a significant difference in user experience.

Why is low latency critical?

The push for low latency cloud computing is driven by high-stakes, real-time applications like:

- Financial trading: Microseconds matter in algorithmic trading; latency advantages can mean millions in gains or losses.

- Online gaming: Gamers expect instantaneous responses—lag can ruin gameplay and user retention.

- AI inference: Real-time decision engines (e.g., fraud detection, recommendation systems) must analyze and respond with minimal delay.

- IoT and autonomous systems: Drones, robots, and sensors require ultra-low latency for safe, reliable operations.

In all these scenarios, a few milliseconds of delay can be the difference between success and failure. This is also true for modern communication platforms that leverage a Video Calling API to deliver seamless, real-time interactions.

Key Principles of Low Latency Cloud Computing

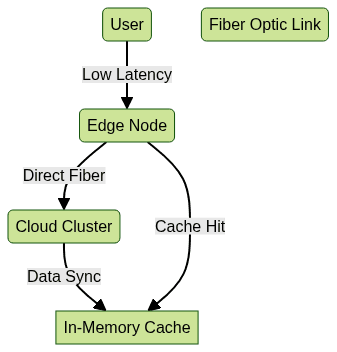

Edge Computing and Proximity

Edge computing revolutionizes low latency cloud computing by processing data closer to the source, at the network edge, rather than in distant centralized data centers. By placing compute resources geographically near end-users or devices, edge computing reduces round-trip times and enables real-time responses for latency-sensitive applications. For example, integrating a Live Streaming API SDK can help deliver interactive live video experiences with minimal delay by leveraging edge infrastructure.

Optimized Routing and Network Infrastructure

Optimizing routing and network infrastructure is essential for minimizing latency. Techniques include:

- Traffic engineering: Dynamically adjusting network paths to avoid congestion.

- Direct routing: Bypassing unnecessary hops and connecting data centers directly.

- Routing protocols: Tuning protocols such as BGP (Border Gateway Protocol), which routes traffic between networks, and OSPF (Open Shortest Path First), which routes within a network, to influence path selection and speed up convergence after failures.
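Link-state protocols such as OSPF compute routes with a shortest-path-first (Dijkstra) calculation over link costs. The sketch below runs that algorithm over a small, made-up topology whose edge weights are link latencies in milliseconds, showing why a path with more hops can still be the faster one. Real OSPF costs are usually configured or derived from interface bandwidth rather than measured latency, so treat this as an illustration of the algorithm, not of a router configuration.

```python
import heapq


def lowest_latency_path(graph: dict[str, dict[str, float]], src: str, dst: str) -> tuple[float, list[str]]:
    """Dijkstra's shortest-path-first search where edge weights are latencies in ms."""
    dist = {src: 0.0}
    prev: dict[str, str] = {}
    queue = [(0.0, src)]
    visited = set()
    while queue:
        d, node = heapq.heappop(queue)
        if node in visited:
            continue  # stale queue entry; a shorter path was already settled
        visited.add(node)
        if node == dst:
            break
        for neighbor, latency in graph.get(node, {}).items():
            candidate = d + latency
            if candidate < dist.get(neighbor, float("inf")):
                dist[neighbor] = candidate
                prev[neighbor] = node
                heapq.heappush(queue, (candidate, neighbor))
    if dst not in dist:
        raise ValueError(f"no path from {src} to {dst}")
    # Walk predecessors back from the destination to rebuild the path
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return dist[dst], path[::-1]


# Hypothetical topology: the direct A->D link is congested (40 ms).
topology = {
    "A": {"B": 5, "D": 40},
    "B": {"C": 7},
    "C": {"D": 6},
    "D": {},
}
print(lowest_latency_path(topology, "A", "D"))
```

Here the three-hop route A, B, C, D totals 18 ms and beats the congested direct link, which is the kind of decision traffic engineering aims to make automatically.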

Such strategies help packets take the shortest, least congested routes, reducing end-to-end delays. When building applications with a javascript video and audio calling sdk, these network optimizations are crucial for maintaining high-quality, real-time communication.

In-Memory Caching and Cluster Placement

Caching and intelligent cluster placement are powerful tools in low latency cloud computing. In-memory data stores like Redis or Memcached allow applications to retrieve frequently accessed data with sub-millisecond latency. Placement groups ensure that related compute nodes are physically close, reducing intra-cluster communication delays.

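```python
# Example: Redis caching setup for low latency data retrieval
# (assumes the redis-py client and a Redis server on localhost:6379)
import redis

r = redis.Redis(host="localhost", port=6379, db=0)
r.set("user:123", "Alice")  # store the value in memory
user = r.get("user:123")    # returns bytes, or None if the key is missing
print(user.decode())  # Output: Alice
```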
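The Redis example stores and reads a single key. In practice, caches are usually used in a cache-aside pattern: check the cache, fall back to the slower data store on a miss, then populate the cache with an expiry. To keep this sketch self-contained, the hypothetical `TTLCache` class is an in-process stand-in for Redis and `slow_lookup` simulates a database call; with redis-py, the same flow would use `r.get(key)` and `r.set(key, value, ex=ttl_seconds)`.

```python
import time
from typing import Optional


class TTLCache:
    """Minimal in-process stand-in for Redis GET/SET with expiry (hypothetical helper)."""

    def __init__(self) -> None:
        self._store: dict[str, tuple[str, float]] = {}

    def get(self, key: str) -> Optional[str]:
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if time.monotonic() >= expires_at:
            del self._store[key]  # expired entries count as misses
            return None
        return value

    def set(self, key: str, value: str, ex: float) -> None:
        self._store[key] = (value, time.monotonic() + ex)


def slow_lookup(user_id: str) -> str:
    """Hypothetical database read; the sleep stands in for a network round trip."""
    time.sleep(0.05)
    return {"123": "Alice"}.get(user_id, "unknown")


def get_user(cache: TTLCache, user_id: str, ttl_seconds: float = 60) -> str:
    key = f"user:{user_id}"
    cached = cache.get(key)
    if cached is not None:
        return cached  # cache hit: no database round trip
    value = slow_lookup(user_id)  # cache miss: read from the source of truth
    cache.set(key, value, ex=ttl_seconds)
    return value


cache = TTLCache()
start = time.perf_counter()
get_user(cache, "123")
miss_ms = (time.perf_counter() - start) * 1000
start = time.perf_counter()
get_user(cache, "123")
hit_ms = (time.perf_counter() - start) * 1000
print(f"miss: {miss_ms:.1f} ms, hit: {hit_ms:.3f} ms")
```

The TTL bounds how stale a cached value can get; choosing it is a trade-off between hit rate and freshness.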

By minimizing network hops and maximizing cache hit rates, these techniques can dramatically improve response times. For teams looking to embed a video calling SDK into their applications, leveraging in-memory caching can further speed up call setup and media delivery.

Architecture Patterns for Low Latency Cloud Computing

Cloud-Native Patterns

Modern low latency cloud computing architectures leverage cloud-native design paradigms:

- Microservices: Decompose applications into loosely coupled, independently deployable services. This allows for granular scaling and targeted latency optimizations.

- Serverless: Event-driven, function-based execution eliminates idle capacity and scales automatically with demand, though cold starts can add latency to the first requests served by a new instance.
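A widely used way to limit cold-start cost on function platforms that reuse warm execution environments (AWS Lambda behaves this way) is to do expensive setup once at module load and reuse it across invocations. In this sketch, `ExpensiveClient` and `handler` are hypothetical names; the counter only demonstrates that initialization runs once per environment rather than once per request.

```python
import time

INIT_COUNT = 0  # counts how many times the expensive setup ran


class ExpensiveClient:
    """Hypothetical stand-in for an SDK client, connection pool, or loaded model."""

    def __init__(self) -> None:
        global INIT_COUNT
        INIT_COUNT += 1
        time.sleep(0.1)  # simulate slow setup such as TLS handshakes or model loading

    def query(self, item_id: str) -> str:
        return f"item-{item_id}"


# Module scope runs once per execution environment (the cold start).
CLIENT = ExpensiveClient()


def handler(event: dict, context: object = None) -> dict:
    # Warm invocations reuse CLIENT and skip the setup cost.
    return {"statusCode": 200, "body": CLIENT.query(event["id"])}


# Simulate three invocations landing on the same warm environment
for request_id in ("1", "2", "3"):
    print(handler({"id": request_id}))
print("client initializations:", INIT_COUNT)
```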

These patterns promote agility and resilience, and they make it easier to apply latency optimizations exactly where they matter. When building cross-platform solutions, using a react native video and audio calling sdk can help developers maintain low latency across devices.

Direct Communication Techniques

Direct communication between services is critical for reducing overhead. Technologies like ZeroMQ enable high-performance, low-latency messaging between microservices or distributed components.

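```python
# Sample ZeroMQ REQ/REP exchange for direct, brokerless communication
import threading
import zmq

context = zmq.Context()

def serve_once():
    # REP side, run in a thread for this demo; normally a separate service
    server = context.socket(zmq.REP)
    server.bind("tcp://*:5555")
    server.recv()          # wait for the request
    server.send(b"World")  # send the reply straight back

threading.Thread(target=serve_once, daemon=True).start()

socket = context.socket(zmq.REQ)  # REQ side connects directly to its peer
socket.connect("tcp://localhost:5555")
socket.send(b"Hello")
message = socket.recv()
print(f"Received reply: {message}")
```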

This approach avoids an intermediary broker hop, and because ZeroMQ transports raw byte frames, the application decides how much serialization and deserialization work to do. For audio-based applications, integrating a phone call API can further streamline direct communication and reduce latency in voice interactions.

Hardware and Network Optimization

Ultra-low latency cloud computing leverages high-performance networking hardware:

- Fiber optic links: Carry data between data centers at roughly two-thirds of the speed of light in a vacuum, with high bandwidth and low signal loss.

- RDMA-enabled NICs: Let one machine read or write another machine's memory directly over the network, bypassing the remote CPU and operating system kernel.

- Specialized switches and routers: Built for minimal packet processing delay.

Together with edge placement and in-memory caching, this hardware lets user requests be handled as close to the edge as possible, over direct fiber links, with minimal processing delay.

Best Practices for Achieving Low Latency at Scale

Application-Level Optimizations

- Efficient data structures: Use structures like hash tables, tries, or bloom filters to minimize lookup times.

- Asynchronous processing: Keep blocking I/O off the request path by using event loops, non-blocking calls, or background workers.

- Multithreading: Leverage multicore CPUs to parallelize workloads, reducing per-request response time.
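Bloom filters, mentioned in the list above, let a service answer "definitely not present" without touching a slower store, at the cost of occasional false positives. Here is a minimal sketch; the bit-array size, the number of hash functions, and the use of salted SHA-256 to derive bit positions are illustrative choices, not tuned values.

```python
import hashlib


class BloomFilter:
    """Probabilistic set: no false negatives, occasional false positives."""

    def __init__(self, size_bits: int = 8192, num_hashes: int = 4) -> None:
        self.size_bits = size_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((size_bits + 7) // 8)

    def _positions(self, item: str):
        # Derive k bit positions from salted SHA-256 digests (an illustrative hashing choice)
        for salt in range(self.num_hashes):
            digest = hashlib.sha256(f"{salt}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.size_bits

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, item: str) -> bool:
        # False means definitely absent; True means "probably present"
        return all(self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(item))


seen = BloomFilter()
seen.add("user:123")
print(seen.might_contain("user:123"))  # True: added keys are always reported
```

A typical use is to call `might_contain` before a cache or database lookup and skip the lookup entirely when it returns False.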

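```go
// Example: asynchronous handler in Go for a low latency HTTP API.
// The client gets an immediate 202 Accepted while slow work continues in a goroutine.
package handlers

import (
	"log"
	"net/http"
	"time"
)

func asyncHandler(w http.ResponseWriter, r *http.Request) {
	go func() {
		// Perform processing off the request path (simulated here with a sleep)
		time.Sleep(10 * time.Millisecond)
		log.Println("Processed asynchronously")
	}()
	w.WriteHeader(http.StatusAccepted)
	w.Write([]byte("Accepted"))
}
```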
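The same asynchronous idea applies in Python's asyncio, with a different emphasis: when a request needs several independent I/O calls, issuing them concurrently makes the total wait roughly that of the slowest call rather than the sum of all of them. `fetch` and the service names below are hypothetical, and `asyncio.sleep` stands in for network I/O.

```python
import asyncio
import time


async def fetch(service: str, delay_s: float) -> str:
    """Hypothetical downstream call; asyncio.sleep stands in for network I/O."""
    await asyncio.sleep(delay_s)
    return f"{service}: ok"


async def handle_request() -> list[str]:
    # Fan out to independent services concurrently instead of awaiting them one by one.
    return await asyncio.gather(
        fetch("profile", 0.1),
        fetch("recommendations", 0.1),
        fetch("inventory", 0.1),
    )


start = time.perf_counter()
results = asyncio.run(handle_request())
elapsed = time.perf_counter() - start
print(results)
print(f"elapsed: {elapsed:.2f} s (sequential awaits would take about 0.30 s)")
```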

For developers aiming to deliver high-quality, real-time experiences, utilizing a Video Calling API that supports asynchronous and multithreaded processing can be a game-changer.

Platform and Infrastructure Strategies

- Workload placement: Deploy latency-sensitive workloads in regions closest to end-users.

- Cluster placement groups: Use provider tools (e.g., AWS Placement Groups, Azure Proximity Placement Groups) to ensure low-latency inter-node communication.

- Autoscaling: Automatically add or remove resources to maintain performance under variable load.
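One way to act on "deploy closest to users" is to measure rather than assume: probe each candidate region a few times and route to the lowest median latency. This sketch uses TCP connect time as a rough RTT proxy (a handshake costs about one round trip). The region names and hostnames are hypothetical placeholders; in production, managed options such as Amazon Route 53 latency-based routing handle this for you.

```python
import socket
import statistics
import time
from typing import Callable


def tcp_connect_rtt_ms(host: str, port: int, timeout: float = 1.0) -> float:
    """Time a TCP handshake, which costs roughly one network round trip."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass
    return (time.perf_counter() - start) * 1000


def choose_region(
    endpoints: dict[str, tuple[str, int]],
    probe: Callable[[str, int], float] = tcp_connect_rtt_ms,
    samples: int = 3,
) -> str:
    """Return the region whose endpoint has the lowest median probe latency."""
    medians = {}
    for region, (host, port) in endpoints.items():
        try:
            medians[region] = statistics.median(probe(host, port) for _ in range(samples))
        except OSError:
            continue  # unreachable or unresolvable regions are skipped
    if not medians:
        raise RuntimeError("no reachable region")
    return min(medians, key=medians.get)


# Hypothetical endpoints; replace with real health-check addresses.
REGIONS = {
    "us-east": ("us-east.example.internal", 443),
    "eu-west": ("eu-west.example.internal", 443),
}
```

Calling `choose_region(REGIONS)` from a client or a control plane then picks the target; taking the median of several probes keeps one slow sample from skewing the choice.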

Database and Data Path Optimization

- Aggressive caching: Leverage multi-tier caches (memory, SSD, distributed caches) to maximize data locality.

- Database indexing: Ensure queries are optimized with proper indexing for fast retrieval.

- Direct data paths: Reduce layers between application and storage—use direct-attached storage or high-speed, low-jitter network links when possible.
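Indexing is easy to verify rather than assume. The sketch below uses Python's built-in `sqlite3` module and an illustrative `users` table to compare the query plan for the same lookup before and after adding an index: without the index SQLite scans the whole table, and with it the plan searches the index. The table, column, and index names are assumptions for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, name TEXT)")
conn.executemany(
    "INSERT INTO users (email, name) VALUES (?, ?)",
    [(f"user{i}@example.com", f"User {i}") for i in range(10_000)],
)

QUERY = "SELECT name FROM users WHERE email = ?"


def query_plan(sql: str) -> str:
    # The last column of each EXPLAIN QUERY PLAN row is the human-readable detail
    rows = conn.execute(f"EXPLAIN QUERY PLAN {sql}", ("user42@example.com",)).fetchall()
    return " | ".join(row[-1] for row in rows)


plan_before = query_plan(QUERY)  # full table scan
conn.execute("CREATE INDEX idx_users_email ON users (email)")
plan_after = query_plan(QUERY)   # index search on email
print("before:", plan_before)
print("after: ", plan_after)
print(conn.execute(QUERY, ("user42@example.com",)).fetchone())
```

Most production databases expose an equivalent (for example `EXPLAIN` in PostgreSQL and MySQL), and checking plans for hot queries is a cheap way to catch accidental full scans.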

These best practices, when combined, create a robust foundation for low latency cloud computing at scale.

Common Challenges and Solutions in Low Latency Cloud Computing

Low latency cloud computing presents several persistent challenges:

- Jitter: Variability in packet delay can disrupt time-sensitive workflows.

- Scalability: Maintaining low latency as user demand grows is non-trivial.

- Cost: Premium network hardware and edge deployments increase operational expenses.

- Data sovereignty: Regulatory requirements may limit where data can be processed geographically.
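Jitter can be quantified rather than eyeballed. RTP (RFC 3550) defines an interarrival jitter estimate that smooths the change in transit time between consecutive packets: J = J + (|D| - J) / 16, where D is the difference in (receive time - send time) between two consecutive packets. Below is a minimal sketch of that estimator; using millisecond timestamps is an assumption for readability (RTP itself works in media clock units), and the packet timings are made up.

```python
def interarrival_jitter(send_times: list[float], recv_times: list[float]) -> float:
    """RFC 3550-style running jitter estimate from per-packet send/receive timestamps."""
    if len(send_times) != len(recv_times):
        raise ValueError("timestamp lists must have the same length")
    jitter = 0.0
    for i in range(1, len(send_times)):
        transit_prev = recv_times[i - 1] - send_times[i - 1]
        transit_curr = recv_times[i] - send_times[i]
        d = abs(transit_curr - transit_prev)
        jitter += (d - jitter) / 16  # exponential smoothing with gain 1/16
    return jitter


# Packets sent every 20 ms; the third one is delayed an extra 16 ms in transit.
sent = [0.0, 20.0, 40.0, 60.0]
received = [10.0, 30.0, 66.0, 70.0]
print(f"jitter estimate: {interarrival_jitter(sent, received):.4f} ms")
```

Constant transit time yields zero jitter no matter how large the latency is, which is why latency and jitter are tracked as separate metrics.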

Solutions include:

- Leveraging cloud-native tools for automated scaling and monitoring

- Distributing workloads across multiple geographic regions for proximity

- Employing automation to manage dynamic placement and configuration
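Automated scaling usually comes down to a target-tracking rule. The Kubernetes Horizontal Pod Autoscaler, for example, computes desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric) and skips scaling when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below applies that formula; the min/max bounds are illustrative parameters added here, and real autoscalers also apply stabilization windows before acting. The metric could be CPU utilization or a latency measure.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    tolerance: float = 0.1,
    min_replicas: int = 1,
    max_replicas: int = 50,
) -> int:
    """Target-tracking scale decision in the style of the Kubernetes HPA formula."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid flapping
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% CPU against a 60% target: scale out
print(desired_replicas(4, current_metric=90, target_metric=60))
# 4 replicas averaging 20% CPU against a 60% target: scale in
print(desired_replicas(4, current_metric=20, target_metric=60))
```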

By proactively addressing these issues, organizations can sustain low latency without sacrificing scalability or compliance.

Real-World Use Cases and Performance Benchmarks

Low latency cloud computing powers mission-critical workloads across diverse industries:

- Financial trading: Low latency is essential for executing trades ahead of the competition. For example, an AWS-powered exchange prototype achieved sub-millisecond round-trip times between trading engines and order books by strategically placing compute clusters and optimizing network paths.

- Online gaming: Multiplayer game servers leverage edge computing to keep latency below 20ms, ensuring smooth, real-time player interactions worldwide.

- AI/ML inference: Real-time recommendation engines use in-memory caches and GPU-accelerated clusters at the edge to deliver predictions with minimal delay.

Performance comparisons suggest that optimized architectures, combining edge nodes, direct routing, and in-memory caching, can cut end-to-end latency by 50% or more compared to traditional cloud setups, though the actual gain depends heavily on the workload and the baseline.
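When benchmarking architectures like these, tail latency (p95, p99) usually matters more than the average, because a few slow requests are exactly what users of real-time applications notice. Below is a minimal sketch of summarizing raw latency samples with the nearest-rank percentile method; the sample values are synthetic, not benchmark results.

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of values at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


def summarize(samples: list[float]) -> dict[str, float]:
    return {
        "mean": sum(samples) / len(samples),
        "p50": percentile(samples, 50),
        "p95": percentile(samples, 95),
        "p99": percentile(samples, 99),
    }


# Synthetic latencies in ms: mostly fast, with a few slow outliers.
latencies_ms = [2.0] * 95 + [40.0] * 5
print(summarize(latencies_ms))
```

Here the mean of 3.9 ms looks healthy while p99 exposes the 40 ms tail, so comparisons between setups should report percentiles alongside averages.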

Future Trends in Low Latency Cloud Computing

Looking ahead to 2025 and beyond, several trends are poised to further transform low latency cloud computing:

- 5G networks: Ultra-fast mobile connectivity lowers last-mile latency for edge workloads.

- Next-generation cloud infrastructure: Hyperscalers are deploying specialized hardware (e.g., SmartNICs, FPGAs) for even greater speed.

- AI-driven routing: Machine learning optimizes network paths and predicts congestion before it impacts performance.

- Advances in hardware: Continued improvements in fiber optics and memory technologies, alongside longer-term research into quantum networking.

These innovations will extend the boundaries of what's possible in real-time, latency-sensitive applications. If you're interested in exploring these advancements for your own projects, try it for free and experience the next generation of low latency cloud solutions.

Conclusion

Low latency cloud computing is the foundation for tomorrow's digital innovations, enabling ultra-responsive, real-time applications across industries. By embracing edge computing, optimized architectures, and best practices, developers can achieve high performance and reliability at scale. As technology evolves, staying ahead in low latency engineering will be essential—now is the time to architect for speed, proximity, and competitive advantage.


FAQ