The Ultimate Guide to Live Transcription API: Real-Time Speech-to-Text for Your Applications

Introduction to Live Transcription API

A live transcription API is a programmable interface that enables real-time conversion of audio streams into text using robust speech recognition technology. By integrating a live transcription API, developers can deliver seamless, real-time speech-to-text features directly within web, desktop, or mobile applications. This technology is transforming the way users interact with digital content, making it accessible, searchable, and actionable.

Modern applications across various industries now rely on live transcription APIs. Whether it’s for accessibility (live captions), note-taking in meetings, media production, or enhancing customer support in call centers, the demand for accurate and instant transcription continues to grow. Live transcription APIs empower developers to build innovative solutions that cater to these evolving needs.

Industries such as education, media, entertainment, healthcare, and call centers leverage live transcription APIs to drive accessibility, improve user engagement, and extract valuable insights from spoken content. As we move into 2025, these APIs are becoming essential components of inclusive, intelligent, and interactive digital experiences.

How Live Transcription APIs Work

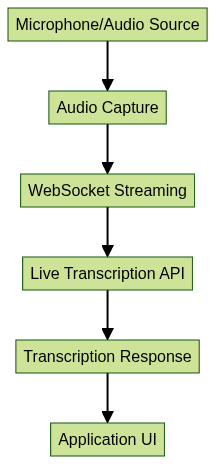

Live transcription APIs capture audio, typically from a user's microphone or a media source, and stream it in real time to a cloud-based speech recognition engine. The engine processes the incoming audio and returns transcribed text with low latency, so applications can display live captions or transcripts as people speak.
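Many streaming engines return results in two stages. First come interim (partial) hypotheses that may still change, and then a final result for each segment. The sketch below shows one way a client could merge the two into the text it displays. It assumes each message has hypothetical `text` and `isFinal` fields, so map these to your provider's actual schema.

```javascript
// Sketch: merge interim and final results into the text shown on screen.
// Assumes each result looks like { text: string, isFinal: boolean };
// these field names are hypothetical, so map them to your provider's schema.
class TranscriptAssembler {
  constructor() {
    this.finalSegments = []; // committed text, never revised
    this.interim = "";       // latest partial hypothesis, replaced on every update
  }

  handle(result) {
    if (result.isFinal) {
      this.finalSegments.push(result.text);
      this.interim = ""; // the final result supersedes the pending interim text
    } else {
      this.interim = result.text;
    }
    return this.render();
  }

  render() {
    // Final segments first, then the current interim text (if any).
    return [...this.finalSegments, this.interim].filter(Boolean).join(" ");
  }
}

// Usage
const assembler = new TranscriptAssembler();
assembler.handle({ text: "hello wor", isFinal: false });  // "hello wor"
assembler.handle({ text: "hello world", isFinal: true }); // "hello world"
assembler.handle({ text: "how are", isFinal: false });    // "hello world how are"
```

Replacing the interim text instead of appending it is what keeps captions from stuttering as the engine revises its guess.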

The core of a live transcription API workflow is the audio streaming protocol. WebSocket is the most common choice because it offers low latency and bidirectional communication over a single connection. Some providers use other transports, such as gRPC streaming over HTTP/2. The typical data flow looks like this:

- The application captures audio via browser APIs or a client SDK.

- Audio data is chunked and streamed to the live transcription API endpoint.

- The API returns transcription results asynchronously, often with timestamps and speaker labels.

- The client app processes and displays the live transcript.
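The "chunked and streamed" step in this flow comes down to simple arithmetic: the number of samples per chunk equals the sample rate multiplied by the chunk duration. Below is a minimal sketch for 16-bit mono PCM. The 16 kHz rate and 100 ms chunk length are illustrative assumptions, not requirements of any particular API.

```javascript
// Sketch: split 16-bit mono PCM into fixed-duration chunks before streaming.
// 16 kHz and 100 ms are illustrative values; use the format your provider expects.
function samplesPerChunk(sampleRate, chunkMs) {
  return Math.round((sampleRate * chunkMs) / 1000);
}

function* chunkPcm(samples, sampleRate = 16000, chunkMs = 100) {
  const size = samplesPerChunk(sampleRate, chunkMs);
  for (let start = 0; start < samples.length; start += size) {
    // subarray() returns a view, so no audio data is copied here.
    yield samples.subarray(start, start + size);
  }
}

// 16,000 samples/s x 0.1 s = 1,600 samples = 3,200 bytes per chunk at 16 bits.
const oneSecond = new Int16Array(16000);
const chunks = [...chunkPcm(oneSecond)];
console.log(chunks.length, chunks[0].byteLength); // 10 3200
```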

For developers looking to integrate real-time voice features alongside transcription, leveraging a Voice SDK can streamline the process of capturing and transmitting audio data.

This architecture enables developers to build highly responsive applications that can process and present spoken content in real time.

Key Features of Live Transcription APIs

Real-Time Speech Recognition

Live transcription APIs provide immediate conversion of spoken words into text, making them ideal for live captioning, meeting transcription, and interactive applications where speed is critical.

If your application requires both video and audio communication with transcription, consider integrating a JavaScript video and audio calling SDK to enable seamless media streaming and interaction.

Multi-Language Support

Many APIs offer support for dozens of languages and dialects, enabling global reach and multilingual accessibility.

Timestamps and Speaker Diarization

Advanced APIs return timestamps for each word or phrase, and some include speaker diarization—identifying who said what in multi-speaker environments.
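As an illustration of how timestamps and diarization labels are typically used together, the sketch below groups word-level results into speaker turns. It assumes a hypothetical word shape `{ word, start, end, speaker }` with times in seconds. Providers name and nest these fields differently.

```javascript
// Sketch: turn word-level results into speaker-labelled lines.
// Assumes each word looks like { word, start, end, speaker } with times in seconds;
// this shape is hypothetical, since providers name these fields differently.
function groupBySpeaker(words) {
  const turns = [];
  for (const w of words) {
    const last = turns[turns.length - 1];
    if (last && last.speaker === w.speaker) {
      last.text += " " + w.word; // same speaker continues the current turn
      last.end = w.end;
    } else {
      turns.push({ speaker: w.speaker, start: w.start, end: w.end, text: w.word });
    }
  }
  return turns;
}

const turns = groupBySpeaker([
  { word: "Hi", start: 0.0, end: 0.3, speaker: 0 },
  { word: "there", start: 0.3, end: 0.6, speaker: 0 },
  { word: "Hello", start: 0.9, end: 1.2, speaker: 1 },
]);
turns.forEach(t => console.log(`[${t.start.toFixed(1)}s] Speaker ${t.speaker}: ${t.text}`));
// [0.0s] Speaker 0: Hi there
// [0.9s] Speaker 1: Hello
```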

Custom Vocabulary and Profanity Filtering

APIs often allow developers to supply domain-specific vocabulary (e.g., technical terms, names) and manage profanity filtering to ensure transcripts are both accurate and appropriate.
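These options are usually set once per session. A common pattern is to send a configuration message when the socket opens. The sketch below builds such a message. Every field name in it (`type`, `language`, `vocabulary`, `profanityFilter`) is a hypothetical placeholder. Real providers define their own names, and some accept these options as URL query parameters instead.

```javascript
// Sketch: a session-configuration message sent once when the socket opens.
// Every field name here (type, language, vocabulary, profanityFilter) is hypothetical;
// real providers use their own names, and some take these options as URL query parameters.
function buildSessionConfig({ language = "en-US", vocabulary = [], profanityFilter = true } = {}) {
  return JSON.stringify({
    type: "configure",
    language,
    // Trim, drop empty entries, and de-duplicate the custom vocabulary list.
    vocabulary: [...new Set(vocabulary.map(v => v.trim()).filter(Boolean))],
    profanityFilter,
  });
}

// Usage: ws.onopen = () => ws.send(buildSessionConfig({ vocabulary: ["Kubernetes", "gRPC"] }));
console.log(buildSessionConfig({ vocabulary: ["Kubernetes", " gRPC ", "Kubernetes"] }));
// {"type":"configure","language":"en-US","vocabulary":["Kubernetes","gRPC"],"profanityFilter":true}
```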

For applications that require robust video communication features, integrating a Video Calling API can further enhance user engagement by combining live transcription with real-time video conferencing.

Feature Comparison Table

| Feature | Provider Support | Customizable |
|---|---|---|
| Real-Time Speech Recognition | Yes | No |
| Multi-Language Support | Yes | No |
| Timestamps | Yes | No |
| Speaker Diarization | Some | No |
| Custom Vocabulary | Some | Yes |
| Profanity Filter | Most | Yes |
| WebSocket Streaming | Yes | No |
| REST API Integration | Yes | No |
| Security/Authentication | Yes | No |
| Event Notifications | Some | Yes |

Popular Use Cases for Live Transcription API

Accessibility and Live Captioning

Live transcription APIs are vital for creating accessible digital experiences. They power live captions for users who are deaf or hard of hearing, help organizations meet accessibility requirements, and expand audience reach.

For live events and broadcasts, using a Live Streaming API SDK in combination with live transcription can deliver real-time captions to large audiences.

Meeting, Webinar, and Event Transcription

From virtual meetings to large conferences, live transcription APIs enable instant note-taking, searchable transcripts, and automated meeting minutes, boosting productivity and collaboration.

If your use case involves phone-based conversations, exploring a phone call API can help you add telephony features alongside live transcription for comprehensive meeting solutions.

Media, Entertainment, and Call Centers

Media companies use live transcription to generate real-time captions for broadcasts, while call centers leverage it to monitor and analyze conversations for quality assurance, compliance, and training purposes.

For developers building on mobile, leveraging WebRTC on Android or Flutter WebRTC can simplify the process of capturing and streaming audio for transcription on Android and Flutter, respectively.

Choosing the Right Live Transcription API: Factors to Consider

Selecting the appropriate live transcription API for your project involves evaluating several key criteria:

- Accuracy and Latency: How precise are the transcripts, and how quickly are results delivered? Low latency is critical for live use cases.

- Supported Languages: Does the API support all languages and dialects relevant to your audience?

- Integration Options: Assess the availability of SDKs, REST endpoints, and WebSocket support for your tech stack.

- Security and Data Privacy: Ensure the API supports compliance with the regulations that apply to you (for example, GDPR or HIPAA) and offers robust authentication and encryption.

- Pricing and Scalability: Review cost structures, usage limits, and the ability to scale with your application's growth.
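To compare accuracy across providers, many teams compute word error rate (WER) on a set of reference recordings with known-correct transcripts. The sketch below implements WER as a word-level Levenshtein distance divided by the number of reference words. Its only normalization is lowercasing and splitting on whitespace. Real evaluations usually also strip punctuation and normalize numbers.

```javascript
// Sketch: word error rate (WER), a common accuracy metric for comparing providers.
// WER = (substitutions + deletions + insertions) / number of words in the reference,
// computed here as a word-level Levenshtein distance.
function wordErrorRate(reference, hypothesis) {
  const ref = reference.toLowerCase().split(/\s+/).filter(Boolean);
  const hyp = hypothesis.toLowerCase().split(/\s+/).filter(Boolean);
  if (ref.length === 0) throw new Error("reference transcript is empty");
  // At the start of row i, prev[j] is the edit distance between the first i-1
  // reference words and the first j hypothesis words.
  let prev = Array.from({ length: hyp.length + 1 }, (_, j) => j);
  for (let i = 1; i <= ref.length; i++) {
    const curr = [i];
    for (let j = 1; j <= hyp.length; j++) {
      const cost = ref[i - 1] === hyp[j - 1] ? 0 : 1;
      curr[j] = Math.min(
        prev[j] + 1,        // deletion
        curr[j - 1] + 1,    // insertion
        prev[j - 1] + cost, // substitution or match
      );
    }
    prev = curr;
  }
  return prev[hyp.length] / ref.length;
}

// One deletion ("the") plus one substitution ("lights" -> "light") over 5 words.
console.log(wordErrorRate("turn on the kitchen lights", "turn on kitchen light")); // 0.4
```

Lower is better, and WER can exceed 1.0 when the hypothesis contains many inserted words.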

For projects that need to quickly add video calling and transcription without building from scratch, you can embed video calling SDK solutions to accelerate your development process.

Careful consideration of these factors will help you choose a live transcription API that aligns with your technical and business requirements.

Step-by-Step Guide: Implementing a Live Transcription API

Prerequisites and Getting an API Key

Before you begin, register with your chosen live transcription API provider and obtain an API key. This key is required for authentication in all requests.
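The browser WebSocket API does not let you set custom headers on the handshake. Browser clients therefore usually pass credentials in the URL, ideally as a short-lived token issued by your backend rather than the long-lived API key itself. Below is a minimal sketch of building such a URL. The parameter names `token`, `sample_rate`, and `encoding` are hypothetical, so check your provider's documentation for the real ones.

```javascript
// Sketch: build the streaming URL with a short-lived token and session options.
// Browsers cannot set custom headers on a WebSocket handshake, so many providers
// accept credentials as a query parameter instead. The parameter names used below
// (token, sample_rate, encoding) are hypothetical; check your provider's docs.
function buildStreamingUrl(baseUrl, token, options = {}) {
  const url = new URL(baseUrl);
  url.searchParams.set("token", token); // URLSearchParams handles percent-encoding
  for (const [key, value] of Object.entries(options)) {
    url.searchParams.set(key, String(value));
  }
  return url.toString();
}

const wsUrl = buildStreamingUrl("wss://transcription.api.provider/live", "TEMP_TOKEN", {
  sample_rate: 16000,
  encoding: "linear16",
});
console.log(wsUrl);
// wss://transcription.api.provider/live?token=TEMP_TOKEN&sample_rate=16000&encoding=linear16
```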

Setting Up Audio Streaming from the Browser

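To capture live audio, use the getUserMedia API in JavaScript. Audio data can then be transmitted over a WebSocket connection to the transcription API.

```javascript
// Capture microphone audio and stream it to the transcription API over a WebSocket.
// The endpoint URL is a placeholder; use the one from your provider's docs.
const ws = new WebSocket("wss://transcription.api.provider/live");

navigator.mediaDevices.getUserMedia({ audio: true })
  .then(stream => {
    const audioContext = new (window.AudioContext || window.webkitAudioContext)();
    const source = audioContext.createMediaStreamSource(stream);
    // ScriptProcessorNode is deprecated in favor of AudioWorklet, but it is widely
    // supported and keeps this example short (4096-frame buffer, mono in and out).
    const processor = audioContext.createScriptProcessor(4096, 1, 1);
    source.connect(processor);
    processor.connect(audioContext.destination);

    processor.onaudioprocess = e => {
      if (ws.readyState !== WebSocket.OPEN) return; // wait until the socket is open
      // Samples arrive at audioContext.sampleRate (often 44.1 or 48 kHz); declare
      // this rate to the API, or resample if it expects something else.
      const inputData = e.inputBuffer.getChannelData(0);
      // Convert Float32 samples in [-1, 1] to 16-bit little-endian PCM.
      const buffer = new ArrayBuffer(inputData.length * 2);
      const view = new DataView(buffer);
      for (let i = 0; i < inputData.length; i++) {
        const s = Math.max(-1, Math.min(1, inputData[i])); // clamp to avoid overflow
        view.setInt16(i * 2, s < 0 ? s * 0x8000 : s * 0x7fff, true);
      }
      ws.send(buffer);
    };
  })
  .catch(err => console.error("Microphone access failed:", err));
```

Sending Audio Data to the API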

Stream audio data in small intervals to maintain low latency and avoid buffer overflows.

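```javascript
// Send queued audio every 100 ms. Assumes the capture callback pushes each
// PCM ArrayBuffer into pendingChunks instead of calling ws.send() directly.
const pendingChunks = [];
let intervalId;

// Merge all queued chunks into one ArrayBuffer; return null if none are queued.
function getAudioChunk() {
  if (pendingChunks.length === 0) return null;
  const parts = pendingChunks.splice(0).map(c => new Uint8Array(c));
  const merged = new Uint8Array(parts.reduce((n, p) => n + p.length, 0));
  let offset = 0;
  for (const p of parts) { merged.set(p, offset); offset += p.length; }
  return merged.buffer;
}

function startStreaming(socket) {
  intervalId = setInterval(() => {
    if (socket.readyState !== WebSocket.OPEN) return; // keep queuing until connected
    const chunk = getAudioChunk();
    if (chunk) socket.send(chunk);
  }, 100);
}
```

Handling Transcription Responses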

Handle WebSocket messages to parse and display live transcription results in your application UI.

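```javascript
// Field names (transcript, timestamp) vary by provider; adjust to your API's schema.
ws.onmessage = function (event) {
  const response = JSON.parse(event.data);
  if (response.transcript) {
    displayTranscript(response.transcript, response.timestamp);
  }
};

function displayTranscript(text, timestamp) {
  const transcriptDiv = document.getElementById("transcript");
  // Build elements with textContent instead of innerHTML so that transcript
  // text cannot inject markup into the page.
  const line = document.createElement("div");
  const time = document.createElement("span");
  time.textContent = `[${timestamp}] `;
  line.append(time, text);
  transcriptDiv.appendChild(line);
}
```

Displaying Live Status and Progress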

Provide user feedback for connection status, streaming progress, and errors:

```javascript
ws.onopen = function () {
  updateStatus("Connected to Live Transcription API");
};
// The WebSocket "error" event carries no message; details arrive in the close event.
ws.onerror = function () {
  updateStatus("Error: connection problem");
};
ws.onclose = function (event) {
  updateStatus(`Disconnected (code ${event.code}${event.reason ? ": " + event.reason : ""})`);
};
function updateStatus(message) {
  document.getElementById("status").textContent = message;
}
```

Best Practices for Live Transcription API Integration

- API Key Protection: Never expose your API key in public repositories or frontend code. Keep it on your server (for example, in environment variables) and have the browser request short-lived tokens from your backend.

- Optimizing Audio Quality: Use high-quality microphones and minimize background noise for improved transcription accuracy.

- Error Handling and Retries: Implement robust error handlers and automatic retries for network disruptions or server errors.
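Below is a minimal sketch of automatic retries with exponential backoff for a dropped WebSocket connection. `connectWithRetry`, its `onSocket` callback, and the default delays are illustrative assumptions rather than any provider's API. The sketch skips retries after a normal closure (code 1000), on the assumption that the session ended deliberately.

```javascript
// Sketch: reconnect after network drops using exponential backoff.
// Delays double per attempt (500 ms, 1 s, 2 s, ...) up to a cap.
function backoffDelay(attempt, baseMs = 500, maxMs = 10000) {
  return Math.min(maxMs, baseMs * 2 ** attempt);
}

// `url` and `onSocket` are hypothetical parameters: onSocket receives each new
// socket so the app can attach its message handlers and restart audio streaming.
function connectWithRetry(url, onSocket, maxAttempts = 5) {
  let attempt = 0;
  function connect() {
    const socket = new WebSocket(url);
    // addEventListener leaves the app free to set its own onopen/onclose handlers.
    socket.addEventListener("open", () => { attempt = 0; });
    socket.addEventListener("close", event => {
      // Code 1000 is a normal closure, so the session ended on purpose: don't retry.
      if (event.code === 1000 || attempt >= maxAttempts) return;
      setTimeout(connect, backoffDelay(attempt));
      attempt += 1;
    });
    onSocket(socket);
  }
  connect();
}
```

Adding a small random jitter to each delay helps keep many clients from reconnecting at the same moment after a shared outage.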

If your application requires advanced audio experiences, integrating a Voice SDK can help you manage live audio rooms and enhance real-time communication.

By following these best practices, you ensure a secure, reliable, and high-quality integration of live transcription API features.

Challenges and Limitations of Live Transcription API

While live transcription APIs offer immense value, they are not without challenges. Network reliability can degrade real-time performance, especially on unstable connections. Accents and language variations may reduce transcription accuracy, particularly for less common dialects. Privacy and compliance are crucial: always ensure audio data is securely transmitted and stored, and that your implementation aligns with regulations such as GDPR or HIPAA.

Future Trends in Live Transcription APIs (2025 and Beyond)

Advancements in AI and machine learning are set to improve the accuracy and contextual understanding of live transcription APIs. Expect broader language coverage, more sophisticated real-time translation features, and tighter integration with NLP tools for actionable insights in 2025 and beyond.

Conclusion

Live transcription APIs are revolutionizing real-time speech-to-text solutions. By integrating these APIs, developers can build more accessible, intelligent, and interactive applications. Start exploring live transcription APIs today to unlock the next level of user engagement in 2025. If you're ready to get started, try it for free and experience the power of real-time transcription in your own projects.


FAQ