Introduction to Real-Time Speech-to-Text

What is Real-Time Speech-to-Text?

Real-time speech-to-text, also known as real-time speech recognition or live transcription, is the conversion of spoken audio into written text while the speaker is still talking. Unlike batch speech-to-text, which analyzes recorded audio files after the fact, real-time systems return transcripts within moments of each utterance, which makes them useful for a wide range of applications.

The Power of Instant Transcription

The ability to transcribe spoken words as they are said opens up many possibilities. It facilitates communication, enhances accessibility, and enables new forms of human-computer interaction. The value lies in immediacy: the text is available with minimal delay, while the conversation is still in progress.

Working Example: AI Voice Agent

One working example is an AI-powered voice agent that joins meetings, transcribes speech in real time using Deepgram STT, and responds intelligently.

Applications of Real-Time Speech-to-Text

Real-time speech-to-text technology finds applications across various sectors, including:

- Live Captioning: Providing real-time subtitles for video conferences, webinars, and broadcasts, improving accessibility for individuals with hearing impairments.

- Voice Assistants: Powering voice-controlled devices and applications like smart speakers and virtual assistants.

- Dictation Software: Enabling users to dictate text directly into documents or applications.

- Meeting Transcription: Automatically transcribing meeting minutes and discussions in real time.

- Customer Service: Assisting customer service agents by transcribing conversations and providing real-time support.

- Accessibility Software: Helping individuals with disabilities interact with computers using their voice.

- Speech Analytics: Analyzing the content of spoken conversations.

How Real-Time Speech-to-Text Works

The Process of Speech Recognition

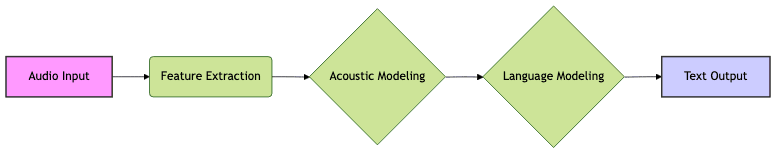

Real-time speech-to-text involves a complex process of analyzing audio signals and converting them into written text. The process typically involves these stages:

- Audio Input: Capturing audio from a microphone or other audio source.

- Feature Extraction: Extracting relevant features from the audio signal, such as frequencies and amplitudes.

- Acoustic Modeling: Using acoustic models to identify phonemes (basic units of sound) within the audio.

- Language Modeling: Applying language models to predict the most likely sequence of words based on the identified phonemes.

- Text Output: Generating the final transcribed text.
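
To make the feature extraction stage more concrete, here is a minimal sketch that splits raw audio samples into short overlapping frames and computes two simple per-frame features: RMS energy and zero-crossing rate. The function name, the 25 ms frame and 10 ms hop sizes, and the choice of features are assumptions for illustration; production systems typically use spectral features such as mel filterbank energies instead.

```python
import math


def frame_features(samples, sample_rate=16000, frame_ms=25, hop_ms=10):
    """Split samples into overlapping frames and compute simple features.

    Returns one (rms_energy, zero_crossing_rate) tuple per frame.
    """
    frame_len = int(sample_rate * frame_ms / 1000)
    hop_len = int(sample_rate * hop_ms / 1000)
    features = []
    for start in range(0, len(samples) - frame_len + 1, hop_len):
        frame = samples[start:start + frame_len]
        # Root-mean-square energy of the frame
        rms = math.sqrt(sum(s * s for s in frame) / frame_len)
        # Fraction of adjacent sample pairs whose sign differs
        crossings = sum(
            1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0)
        )
        features.append((rms, crossings / (frame_len - 1)))
    return features


# Usage: one second of a synthetic 440 Hz tone sampled at 16 kHz
tone = [math.sin(2 * math.pi * 440 * n / 16000) for n in range(16000)]
feats = frame_features(tone)
print(len(feats), feats[0])
```

Each frame's feature tuple is what the acoustic model would consume in place of the raw waveform.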

Key Technologies: Acoustic Modeling, Language Modeling

- Acoustic Modeling: This involves training statistical models on large datasets of speech to map acoustic features to phonemes. Deep learning techniques, particularly deep neural networks (DNNs), are commonly used for acoustic modeling.

- Language Modeling: This involves creating statistical models that predict the probability of word sequences. N-gram models and recurrent neural networks (RNNs) have long been used for language modeling. Modern systems increasingly rely on transformer models, and many end-to-end architectures fold acoustic and language modeling into a single network.
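
As a rough illustration of how a language model picks between similar-sounding candidates, the sketch below builds a bigram model with add-one smoothing from a tiny toy corpus. The class name, the corpus, and the candidate sentences are all made up for this example; real systems train on far larger datasets and usually use neural models.

```python
from collections import Counter, defaultdict


class BigramModel:
    def __init__(self, sentences):
        self.context_counts = Counter()       # how often each word is a context
        self.bigrams = defaultdict(Counter)   # counts of (previous word, word)
        self.vocab = set()
        for sentence in sentences:
            words = ["<s>"] + sentence.lower().split() + ["</s>"]
            self.vocab.update(words)
            for prev, word in zip(words, words[1:]):
                self.context_counts[prev] += 1
                self.bigrams[prev][word] += 1

    def prob(self, prev, word):
        """P(word | prev) with add-one (Laplace) smoothing."""
        seen = self.bigrams[prev][word] if prev in self.bigrams else 0
        return (seen + 1) / (self.context_counts[prev] + len(self.vocab))

    def score(self, sentence):
        """Probability of the whole sentence under the bigram model."""
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        p = 1.0
        for prev, word in zip(words, words[1:]):
            p *= self.prob(prev, word)
        return p


# Toy corpus and two acoustically similar hypotheses (illustrative only)
corpus = ["recognize speech", "recognize speech quickly", "wreck a nice beach"]
lm = BigramModel(corpus)
better = max(["recognize speech", "recognize beach"], key=lm.score)
print(better)
```

The model prefers the hypothesis whose word pairs it has seen more often, which is how language modeling resolves ambiguity the acoustic model cannot.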

Challenges in Real-Time Transcription: Noise, Accents, Background Sounds

Real-time transcription faces several challenges:

- Noise: Background noise can interfere with the accuracy of speech recognition.

- Accents: Different accents can make it difficult for the system to accurately identify phonemes.

- Background Sounds: Music, conversations, and other background sounds can disrupt the transcription process.

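- Latency: Transcripts must arrive quickly enough to be useful, which limits how much audio context the system can wait for before emitting text.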
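
One contributor to latency is easy to quantify: audio is sent in chunks, and a chunk cannot be sent until it has been fully recorded. The small helper below (a hypothetical function, assuming uncompressed PCM audio) computes how much audio one chunk holds, which is a lower bound on the delay that buffering adds before any network or model time.

```python
def chunk_duration_ms(chunk_bytes, sample_rate=16000, bytes_per_sample=2, channels=1):
    """Milliseconds of audio contained in one chunk of raw PCM."""
    bytes_per_second = sample_rate * bytes_per_sample * channels
    return 1000 * chunk_bytes / bytes_per_second


print(chunk_duration_ms(1024))  # 32.0
print(chunk_duration_ms(8000))  # 250.0
```

Smaller chunks reduce buffering delay at the cost of more messages per second.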

Top APIs and SDKs for Real-Time Speech-to-Text

Several APIs and SDKs offer robust real-time speech-to-text capabilities. Here are some of the leading options:

Deepgram

Deepgram provides a speech-to-text API optimized for real-time transcription, with a focus on high accuracy and very low latency. It exposes a REST API for prerecorded audio and uses WebSockets for streaming audio. It supports a wide range of audio formats and codecs, offers SDKs for several programming languages, and comes with detailed documentation.

```python
import asyncio

from deepgram import Deepgram

# NOTE: written against the 2.x Python SDK (pip install "deepgram-sdk<3");
# later major versions changed this interface.

# Your Deepgram API key
DEEPGRAM_API_KEY = "YOUR_DEEPGRAM_API_KEY"

# Path to the audio file to transcribe
AUDIO_FILE = "path/to/your/audio.wav"


async def main():
    # Initialize the Deepgram SDK
    dg_client = Deepgram(DEEPGRAM_API_KEY)

    # Open a websocket connection to Deepgram
    try:
        ws = await dg_client.transcription.live({"punctuate": True})
    except Exception as e:
        print(f"Could not open socket: {e}")
        return

    # Print each transcript payload and report when the connection closes
    ws.registerHandler(ws.event.TRANSCRIPT_RECEIVED, print)
    ws.registerHandler(
        ws.event.CLOSE, lambda code: print(f"Connection closed with code {code}.")
    )

    # Send streaming audio from the file in small chunks
    with open(AUDIO_FILE, "rb") as file:
        while True:
            data = file.read(1024)
            if not data:
                break
            ws.send(data)

    # Indicate that we've finished sending data
    await ws.finish()


if __name__ == "__main__":
    asyncio.run(main())
```

AssemblyAI

AssemblyAI offers a suite of AI-powered APIs, including a high-accuracy speech-to-text API. It allows model customization and provides a streaming API for real-time transcription. Webhooks and callback URLs are also supported for receiving asynchronous transcription results.

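```javascript
const { AssemblyAI } = require('assemblyai');

const client = new AssemblyAI({
  apiKey: 'YOUR_ASSEMBLYAI_API_KEY',
});

// NOTE: uses the SDK's realtime transcriber interface; confirm the method
// and event names against the SDK version you have installed.
const transcriber = client.realtime.transcriber({
  sampleRate: 16_000,
});

transcriber.on('open', () => {
  console.log('Connected');
  // Send microphone data here with transcriber.sendAudio(chunk)
});

transcriber.on('transcript', (transcript) => {
  console.log('Received:', transcript.text);
});

transcriber.on('close', () => {
  console.log('Closed');
});

transcriber.on('error', (error) => {
  console.error('Error:', error);
});

// Open the connection; the handlers above fire as events arrive
transcriber.connect().catch((error) => {
  console.error('Could not connect:', error);
});
```

Google Cloud Speech-to-Text API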

Google Cloud Speech-to-Text API is a powerful cloud-based service that offers real-time speech recognition with high accuracy and scalability. It supports a wide range of languages and provides various customization options.

```python
import os

from google.cloud import speech

os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = "path/to/your/google_credentials.json"


def transcribe_streaming(stream_file, chunk_size=4096):
    """Streams transcription of the given audio file."""
    client = speech.SpeechClient()

    def audio_chunks():
        # Yield the file in small chunks, as a live audio source would.
        with open(stream_file, "rb") as audio_file:
            while chunk := audio_file.read(chunk_size):
                yield chunk

    audio_config = speech.RecognitionConfig(
        encoding=speech.RecognitionConfig.AudioEncoding.LINEAR16,
        sample_rate_hertz=16000,
        language_code="en-US",
    )
    streaming_config = speech.StreamingRecognitionConfig(
        config=audio_config,
        interim_results=True,
    )

    requests = (
        speech.StreamingRecognizeRequest(audio_content=chunk)
        for chunk in audio_chunks()
    )

    responses = client.streaming_recognize(config=streaming_config, requests=requests)

    # Put the transcription responses to use, for example by printing them.
    for response in responses:
        for result in response.results:
            if not result.alternatives:
                continue
            # Interim results report a `stability` estimate; final results
            # (`is_final`) carry a `confidence` score instead.
            label = "Final" if result.is_final else "Interim"
            print(f"{label}: {result.alternatives[0].transcript}")


transcribe_streaming("path/to/your/audio.raw")
```

Amazon Transcribe

Amazon Transcribe provides real-time and batch transcription services. It's a cost-effective option tightly integrated with other AWS services. Refer to the Amazon Transcribe documentation for details.

Other Notable APIs

- Microsoft Azure Speech to Text: Part of Microsoft's Azure Cognitive Services, offering robust speech recognition capabilities.

- Rev AI: Provides accurate and reliable speech-to-text services, including real-time transcription.

Building Your Own Real-Time Speech-to-Text Application

Choosing the Right API

Selecting the right API depends on your specific requirements, budget, and technical expertise. Consider factors like accuracy, latency, language support, and pricing when making your decision.

Setting up Your Development Environment

Before diving into coding, set up your development environment. Install the necessary SDKs, libraries, and tools. Choose a programming language that is supported by your API of choice (e.g., Python, JavaScript, Java).

Frontend Development: User Interface and Interaction

Develop a user interface (UI) that allows users to input audio and view the transcribed text. Consider using a framework like React, Angular, or Vue.js for building a responsive and user-friendly interface. Capture microphone audio in the browser with `navigator.mediaDevices.getUserMedia` or a dedicated microphone access library, or use the browser's Web Speech API where its built-in recognition is sufficient.

Backend Development: API Integration and Data Handling

Create a backend server to handle communication with the speech-to-text API. This server will receive audio from the frontend, send it to the API, and relay the transcribed text back to the frontend. Use a framework like Node.js, Python (Flask or Django), or Java (Spring) for backend development.
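
The relay role described above can be sketched with plain `asyncio` queues. Everything here is a simplified assumption: `fake_stt` is a hypothetical stand-in for a provider's streaming call, and the two queues stand in for the connections to the frontend and back. A real server would replace them with WebSocket handlers.

```python
import asyncio


async def fake_stt(chunk: bytes) -> str:
    """Hypothetical stand-in for a provider's streaming transcription call."""
    await asyncio.sleep(0)  # yield control, as a real network call would
    return f"<{len(chunk)} bytes transcribed>"


async def relay(audio_in, text_out, transcribe=fake_stt):
    """Forward audio chunks to the STT service and pass results back."""
    while True:
        chunk = await audio_in.get()
        if chunk is None:  # sentinel: the client finished sending audio
            await text_out.put(None)
            break
        await text_out.put(await transcribe(chunk))


async def demo():
    audio_in, text_out = asyncio.Queue(), asyncio.Queue()
    task = asyncio.create_task(relay(audio_in, text_out))
    # Simulate a frontend sending two chunks and then closing the stream
    for chunk in (b"\x00" * 1024, b"\x00" * 512, None):
        await audio_in.put(chunk)
    results = []
    while (item := await text_out.get()) is not None:
        results.append(item)
    await task
    return results


results = asyncio.run(demo())
print(results)
```

Keeping the relay independent of the transport makes it straightforward to swap the stub for a real provider client later.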

Testing and Deployment

Thoroughly test your application to ensure accuracy, reliability, and performance. Deploy your application to a cloud platform like AWS, Google Cloud, or Azure for scalability and accessibility.
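
A common way to put a number on transcription accuracy during testing is word error rate (WER): the word-level edit distance between a reference transcript and the system's output, divided by the number of reference words. The sketch below is one straightforward way to compute it; the function name and the simple lowercase-and-split normalization are assumptions, and real evaluations usually normalize punctuation and numerals as well.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # prev[j] holds the edit distance between the processed part of ref and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution or match
            ))
        prev = curr
    return prev[-1] / len(ref)


wer = word_error_rate("turn on the kitchen lights", "turn on kitchen light")
print(wer)
```

In this example one word is dropped and one is substituted, so two of the five reference words are in error.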

Advanced Features and Considerations

Speaker Diarization

Speaker diarization involves identifying and separating speech segments by speaker. This feature is useful for transcribing multi-party conversations, such as meetings and interviews. Some APIs provide speaker diarization as an advanced feature.
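
When an API returns word-level results tagged with a speaker label, turning them into readable speaker turns is a small grouping step. The sketch below assumes a hypothetical word format with `word`, `speaker`, `start`, and `end` keys; actual field names vary by provider.

```python
from itertools import groupby


def to_turns(words):
    """Merge consecutive words from the same speaker into turns."""
    turns = []
    for speaker, group in groupby(words, key=lambda w: w["speaker"]):
        group = list(group)
        turns.append({
            "speaker": speaker,
            "start": group[0]["start"],
            "end": group[-1]["end"],
            "text": " ".join(w["word"] for w in group),
        })
    return turns


# Hypothetical diarized output, for illustration only
words = [
    {"word": "hello", "speaker": 0, "start": 0.0, "end": 0.4},
    {"word": "there", "speaker": 0, "start": 0.4, "end": 0.8},
    {"word": "hi", "speaker": 1, "start": 1.0, "end": 1.2},
    {"word": "welcome", "speaker": 0, "start": 1.5, "end": 2.0},
]
turns = to_turns(words)
for turn in turns:
    print(f"Speaker {turn['speaker']}: {turn['text']}")
```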

Language Identification and Translation

Some APIs offer automatic language identification, allowing you to transcribe speech in different languages without specifying the language beforehand. Real-time translation can also be integrated to provide immediate translations of spoken content.

Handling Noise and Background Sounds

Implement noise reduction techniques to minimize the impact of background noise on transcription accuracy. Some APIs offer built-in noise filtering capabilities.
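
As a very simple example of client-side noise handling, the sketch below implements an energy-based noise gate: frames whose RMS energy falls below a threshold are replaced with silence. The function name, frame length, and threshold are assumptions chosen for illustration. This is a crude technique, and overly aggressive gating can clip quiet speech, so it is worth comparing accuracy with and without it.

```python
import math


def noise_gate(samples, frame_len=160, threshold=0.02):
    """Zero out frames whose RMS energy falls below `threshold`."""
    gated = []
    for start in range(0, len(samples), frame_len):
        frame = samples[start:start + frame_len]
        rms = math.sqrt(sum(s * s for s in frame) / len(frame))
        # Keep frames that are loud enough; silence the rest
        gated.extend(frame if rms >= threshold else [0.0] * len(frame))
    return gated


quiet = [0.005] * 160     # low-level background hiss
loud = [0.5, -0.5] * 80   # stand-in for speech-level signal
out = noise_gate(quiet + loud)
print(out[:3], out[160:163])
```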

Security and Privacy

Ensure the security and privacy of user data. Encrypt audio streams and transcribed text to protect sensitive information. Comply with data privacy regulations, such as GDPR and HIPAA. Be especially careful with Personally Identifiable Information (PII).
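
One practical safeguard is to redact obvious PII from transcripts before storing or logging them. The sketch below uses two illustrative regular expressions, one for email addresses and one for North American style phone numbers. These patterns are assumptions and far from exhaustive; in particular, speech transcripts may spell numbers out as words, which simple patterns like these will miss.

```python
import re

# Illustrative patterns only; real PII detection needs broader coverage.
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
PHONE = re.compile(r"\b\d{3}[-. ]?\d{3}[-. ]?\d{4}\b")


def redact(text: str) -> str:
    """Replace email addresses and phone numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


redacted = redact("Call me at 555-123-4567 or mail jane.doe@example.com")
print(redacted)
```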

The Future of Real-Time Speech-to-Text

Advancements in AI and Machine Learning

Advancements in AI and machine learning are continuously improving the accuracy and performance of real-time speech-to-text systems. New deep learning models and training techniques are driving significant progress.

Emerging Applications and Trends

Emerging applications of real-time speech-to-text include:

- AI-powered assistants: Integrating speech recognition into advanced AI assistants for more natural and intuitive interactions.

- Real-time translation services: Providing immediate translation of spoken content for seamless communication across languages.

- Accessibility tools: Developing new accessibility tools for individuals with disabilities.

- Speech analytics and customer support: Improving customer experience by analyzing conversations.

Ethical Considerations and Bias Mitigation

Addressing ethical considerations and mitigating bias in speech recognition models is crucial. Ensure that models are trained on diverse datasets to avoid perpetuating biases against certain accents or demographic groups. Fairness, accountability, and transparency are key.
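
One way to check for this kind of bias is to compare error rates across speaker groups on a labeled test set. The sketch below aggregates word error rate (errors divided by reference words) per group and reports the gap between the best and worst group. The function name, group labels, and counts are hypothetical and do not describe any real system.

```python
def wer_by_group(results):
    """Aggregate word error rate per group.

    `results` maps a group label to a list of (word_errors, reference_words)
    pairs, one pair per test utterance.
    """
    rates = {}
    for group, counts in results.items():
        errors = sum(e for e, _ in counts)
        words = sum(n for _, n in counts)
        rates[group] = errors / words
    return rates


# Hypothetical counts for illustration only; not measurements of any system.
sample = {
    "accent_a": [(5, 100), (3, 100)],
    "accent_b": [(12, 100), (18, 100)],
}
rates = wer_by_group(sample)
gap = max(rates.values()) - min(rates.values())
print(rates, round(gap, 2))
```

A large gap between groups suggests the training or evaluation data underrepresents some speakers.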

Conclusion

Real-time speech-to-text is a transformative technology with numerous applications and significant potential. By understanding the underlying principles, exploring available APIs, and considering advanced features, developers can build innovative solutions that leverage the power of instant transcription. The field is constantly evolving, driven by advancements in AI and machine learning, promising even more accurate and versatile speech recognition systems in the future. Remember to always consider ethical implications and fairness when working with real-time speech-to-text technology.

- Learn more about Deepgram's real-time transcription: Deepgram API documentation

- Explore Google Cloud's Speech-to-Text API: Google Cloud Speech-to-Text

- Read more about AssemblyAI's real-time transcription: AssemblyAI API


FAQ