Introduction to Low Latency Kafka

Real-time data streaming has become the backbone of modern digital systems, powering everything from high-frequency financial trading to IoT telemetry and competitive online gaming. In these mission-critical environments, even milliseconds of delay can have major business or user experience impacts. Apache Kafka, as a distributed event streaming platform, is widely adopted for its reliability and scalability. But maximizing its potential often requires squeezing every millisecond out of the data pipeline. Here, we explore what low latency means in Kafka, why it matters, and how to achieve it in 2025.

Low latency in Kafka refers to minimizing the time it takes for data to travel from producers, through brokers, to consumers. This is essential for applications where delays can lead to missed opportunities, outdated information, or negative user experiences. Understanding, measuring, and optimizing Kafka latency is key for building robust, real-time systems.

Understanding Kafka Latency: Key Concepts and Metrics

What is Kafka Latency?

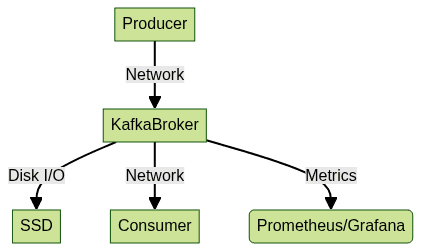

Kafka latency is the end-to-end time taken for a message to travel from the producer, through Kafka brokers, and finally to the consumer. It includes:

- Producer-to-broker latency: Time from message creation to successful write on the broker

- Broker-to-consumer latency: Time from message persistence to consumption
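To make the two components concrete, here is a minimal sketch of how they add up to end-to-end latency. The class name, method names, and timestamps are hypothetical, and the sketch assumes the producer, broker, and consumer clocks are synchronized closely enough for the differences to be meaningful.

```java
/** Minimal sketch: splits end-to-end latency into the two stages listed above. */
final class LatencyBreakdown {
    /** Time from message creation to successful write on the broker. */
    static long producerToBrokerMs(long createdAtMs, long persistedAtMs) {
        return persistedAtMs - createdAtMs;
    }

    /** Time from persistence on the broker to consumption. */
    static long brokerToConsumerMs(long persistedAtMs, long consumedAtMs) {
        return consumedAtMs - persistedAtMs;
    }

    /** End-to-end latency is the sum of both stages. */
    static long endToEndMs(long createdAtMs, long persistedAtMs, long consumedAtMs) {
        return producerToBrokerMs(createdAtMs, persistedAtMs)
                + brokerToConsumerMs(persistedAtMs, consumedAtMs);
    }

    public static void main(String[] args) {
        // Hypothetical epoch-millisecond timestamps for a single message
        long createdAt = 1_700_000_000_000L;
        long persistedAt = createdAt + 4;
        long consumedAt = createdAt + 9;
        System.out.println("producer-to-broker ms: " + producerToBrokerMs(createdAt, persistedAt));
        System.out.println("broker-to-consumer ms: " + brokerToConsumerMs(persistedAt, consumedAt));
        System.out.println("end-to-end ms: " + endToEndMs(createdAt, persistedAt, consumedAt));
    }
}
```

Recording the three timestamps separately shows which stage dominates, which in turn tells you where tuning is likely to pay off.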

Both are influenced by a variety of configuration and infrastructure factors. For developers building real-time communication features, such as Video Calling API integrations, understanding and minimizing these latencies is especially critical.

Kafka Latency vs. Throughput

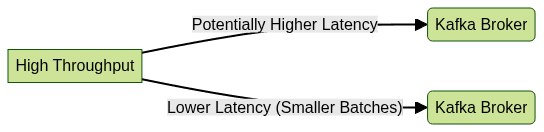

While throughput measures how many messages Kafka can process per second, latency is about how quickly each individual message moves through the system. Often, tuning for high throughput can increase latency, and vice versa. This trade-off is particularly relevant for applications leveraging Live Streaming API SDK solutions, where both high throughput and low latency are essential for seamless user experiences.
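The trade-off can be illustrated with a deliberately simplified model. The sketch below assumes a steady message arrival rate, batches that are always closed by the linger timer rather than by filling up, and a single partition; the class and method names are hypothetical and the numbers are not measurements.

```java
/** Simplified model of how a producer's linger time trades latency for fewer requests. */
final class BatchingTradeoff {
    /** Average extra wait per message, assuming arrivals are spread evenly over the linger window. */
    static double averageWaitMs(double lingerMs) {
        return lingerMs / 2.0;
    }

    /** Messages collected per batch at a steady arrival rate (at least one). */
    static double messagesPerBatch(double messagesPerSecond, double lingerMs) {
        return Math.max(1.0, messagesPerSecond * lingerMs / 1000.0);
    }

    /** Produce requests per second needed to ship the same message rate. */
    static double requestsPerSecond(double messagesPerSecond, double lingerMs) {
        return messagesPerSecond / messagesPerBatch(messagesPerSecond, lingerMs);
    }

    public static void main(String[] args) {
        double rate = 10_000.0; // hypothetical messages per second
        for (double lingerMs : new double[] {0.0, 1.0, 5.0, 20.0}) {
            System.out.printf("linger=%.0f ms -> avg wait %.1f ms, %.0f requests/s%n",
                    lingerMs, averageWaitMs(lingerMs), requestsPerSecond(rate, lingerMs));
        }
    }
}
```

In this model a longer linger time cuts request overhead sharply while adding a few milliseconds of wait per message, which is the shape of the trade-off described above.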

Why Low Latency Matters

For mission-critical and real-time applications, high latency can cause:

- Missed trades in financial applications

- Delayed alerts in IoT and monitoring systems

- Laggy user experiences in gaming or live analytics

Optimizing Kafka for low latency ensures data is actionable the moment it arrives, unlocking competitive advantages and user satisfaction. This is especially important for interactive use cases such as React video call apps, where even minor delays can disrupt communication flow.

Main Causes of Kafka Latency

Producer-side Factors

- Batching (linger.ms in the Java producer, queue.buffering.max.ms in librdkafka-based clients): Larger batches increase throughput but add wait time before sending.

- Compression: Reduces network usage but adds CPU time for compression and decompression.

- Acknowledgment (acks): Waiting for more replicas to acknowledge increases durability but adds delay.

For developers working with WebRTC Android or Flutter WebRTC technologies, understanding how producer-side batching and acknowledgment settings affect latency is crucial for delivering real-time audio and video experiences.

Broker-side Factors

- Replication lag: Time taken for messages to be replicated to other brokers.

- Log flush interval: How often messages are flushed from memory to disk (log.flush.interval.ms).

- Segment size: Smaller segments can help flushes but may increase overhead.

Consumer-side Factors

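- Fetch wait (fetch.max.wait.ms in the Java consumer, fetch.wait.max.ms in librdkafka-based clients): Maximum time the broker waits before responding to a fetch request when fetch.min.bytes of data is not yet available.

- Consumer lag: Delay between message availability and consumption.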
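Consumer lag is commonly tracked as an offset difference per partition, which serves as a proxy for the time delay described above. The following is a minimal sketch of that calculation with plain maps; the class name and the sample offsets are hypothetical, and treating a partition with no committed offset as starting from 0 is a simplifying assumption (real behavior depends on the consumer's offset reset policy).

```java
import java.util.HashMap;
import java.util.Map;

/** Minimal sketch: consumer lag as log-end offset minus committed offset, per partition. */
final class ConsumerLag {
    static Map<Integer, Long> lagByPartition(Map<Integer, Long> logEndOffsets,
                                             Map<Integer, Long> committedOffsets) {
        Map<Integer, Long> lag = new HashMap<>();
        for (Map.Entry<Integer, Long> entry : logEndOffsets.entrySet()) {
            // Assumption: a partition without a committed offset is treated as offset 0
            long committed = committedOffsets.getOrDefault(entry.getKey(), 0L);
            lag.put(entry.getKey(), Math.max(0L, entry.getValue() - committed));
        }
        return lag;
    }

    static long totalLag(Map<Integer, Long> lagByPartition) {
        long total = 0L;
        for (long partitionLag : lagByPartition.values()) {
            total += partitionLag;
        }
        return total;
    }

    public static void main(String[] args) {
        // Hypothetical offsets for a two-partition topic
        Map<Integer, Long> logEnd = Map.of(0, 1_000L, 1, 500L);
        Map<Integer, Long> committed = Map.of(0, 990L, 1, 500L);
        Map<Integer, Long> lag = lagByPartition(logEnd, committed);
        System.out.println("lag by partition: " + lag);
        System.out.println("total lag: " + totalLag(lag));
    }
}
```

A lag that keeps growing means consumers are falling behind producers, so messages wait longer before they are processed regardless of how fast the brokers are.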

In scenarios where you need to embed a video calling SDK into your application, minimizing consumer lag is essential to maintain a responsive and interactive environment.

Infrastructure

- Network: Latency and bandwidth constraints between producers, brokers, and consumers.

- Disk I/O: SSDs outperform HDDs for log persistence and recovery.

- CPU: Impacts serialization, compression, and network handling.

For audio-centric applications, leveraging a robust Voice SDK can help ensure that Kafka's infrastructure is optimized for low-latency, high-quality streaming.

Kafka Low Latency Configuration: Producer, Broker, Consumer

Achieving low latency requires precise tuning across all components of the Kafka pipeline.

Producer Configuration for Low Latency

Key settings:

- linger.ms (queue.buffering.max.ms in librdkafka-based clients): Lower values reduce waiting time before sending.

- batch.size: Smaller batches decrease latency at the cost of throughput.

- acks: Set to 1 (leader only) for lower latency, at the cost of durability.

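```java
import java.util.Properties;
import org.apache.kafka.clients.producer.KafkaProducer;

// Java producer configuration for low latency
Properties props = new Properties();
props.put("bootstrap.servers", "kafka-broker:9092");
// Serializers are required by KafkaProducer
props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
props.put("acks", "1");            // Leader-only acknowledgment
props.put("batch.size", "16384");  // Bytes per batch; tune as needed
props.put("linger.ms", "1");       // Wait at most 1 ms to fill a batch
props.put("compression.type", "none");
props.put("max.in.flight.requests.per.connection", "1"); // Keeps ordering on retries; may limit throughput
KafkaProducer<String, String> producer = new KafkaProducer<>(props);
```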

Broker Configuration for Low Latency

Tune these for brokers:

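- log.flush.interval.ms: Lower values flush the log to disk more often, which shrinks the window of unflushed data but adds fsync overhead that can itself raise latency. Kafka's documentation generally recommends leaving flushing to the operating system and relying on replication for durability.

- log.flush.interval.messages: Control flush frequency by message count.

- default.replication.factor and min.insync.replicas: Lower for latency, higher for durability.

- log.segment.bytes and log.roll.ms (segment.bytes and segment.ms at the topic level): Smaller segments may help with quicker flushes.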

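```properties
# Example Kafka broker server.properties
log.flush.interval.ms=100
log.flush.interval.messages=1000
# Broker-level default for automatically created topics
default.replication.factor=2
min.insync.replicas=1
# Broker-level names for the topic-level segment.bytes and segment.ms
log.segment.bytes=1073741824
log.roll.ms=60000
```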

Consumer Configuration for Low Latency

Key settings:

- fetch.max.wait.ms (fetch.wait.max.ms in librdkafka-based clients): Lower values reduce wait time for fetch.

- fetch.min.bytes: Lower values ensure quick responses.

- max.poll.records: Adjust for your consumption rate.

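```java
import java.util.Properties;
import org.apache.kafka.clients.consumer.KafkaConsumer;

// Java consumer configuration for low latency
Properties props = new Properties();
props.put("bootstrap.servers", "kafka-broker:9092");
props.put("group.id", "low-latency-consumer");
// Deserializers are required by KafkaConsumer
props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
props.put("fetch.max.wait.ms", "5"); // Java consumer name; librdkafka calls it fetch.wait.max.ms
props.put("fetch.min.bytes", "1");   // Respond as soon as any data is available
props.put("max.poll.records", "100");
KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props);
```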
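The interaction between the two fetch settings can be sketched with a small model: the broker answers a fetch once fetch.min.bytes of data is available or once the maximum wait (fetch.max.wait.ms in the Java consumer) has elapsed, whichever comes first. The class name, method name, and the steady arrival rate are hypothetical simplifications, not part of any Kafka API.

```java
/** Simplified model of when a broker answers a fetch request. */
final class FetchWaitModel {
    /**
     * Approximate delay before the broker responds, given how much data is
     * already available and how fast new data arrives (bytes per millisecond).
     */
    static double responseDelayMs(int fetchMinBytes, int fetchMaxWaitMs,
                                  int bytesAlreadyAvailable, double bytesPerMs) {
        if (bytesAlreadyAvailable >= fetchMinBytes) {
            return 0.0; // Enough data is ready, so the broker can answer immediately
        }
        if (bytesPerMs <= 0.0) {
            return fetchMaxWaitMs; // No incoming data: the wait timer decides
        }
        double timeToFillMs = (fetchMinBytes - bytesAlreadyAvailable) / bytesPerMs;
        return Math.min(timeToFillMs, fetchMaxWaitMs);
    }

    public static void main(String[] args) {
        // Hypothetical arrival rate of 2 bytes per millisecond
        System.out.println(responseDelayMs(1, 5, 0, 2.0));      // small minimum: data decides
        System.out.println(responseDelayMs(1024, 5, 0, 2.0));   // low max wait caps the delay
        System.out.println(responseDelayMs(1024, 500, 0, 2.0)); // large minimum and long wait
    }
}
```

Under this model, a small fetch.min.bytes keeps the delay close to the arrival time of the next message, while a low maximum wait bounds the worst case on quiet topics.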

If you're building applications that require real-time interactions, such as those using a Live Streaming API SDK, fine-tuning these consumer settings can make a significant difference in perceived latency.

Network and Operating System Tuning

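- socket.blocking.max.ms: A librdkafka client setting that older versions used to cap how long socket operations may block; recent librdkafka releases mark it as deprecated and ignore it.

- OS-level tuning: Adjust TCP buffer sizes and ensure low-latency networking drivers are used. Nagle's algorithm is disabled per socket through the TCP_NODELAY option rather than through a system-wide setting, and Kafka's clients and brokers already enable it on their own connections.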

```shell
# Example OS-level TCP tuning (Linux)
sysctl -w net.core.rmem_max=26214400
sysctl -w net.core.wmem_max=26214400
# There is no sysctl for TCP_NODELAY; it is a per-socket option set by the application
```

Hardware and Infrastructure Optimization for Low Latency Kafka

Hardware Choices

- SSDs vs HDDs: SSDs provide much better write/read latency for broker storage.

- Network bandwidth: Use 10GbE or faster for inter-broker and client communications.

- CPU cores: More cores support higher concurrency for producers, brokers, and consumers.

Cloud vs On-Premises Considerations

- Cloud providers may introduce additional network hops and virtualization overhead. For ultra-low latency, consider on-premises or dedicated cloud infrastructure.

- Use placement groups, provisioned IOPS, and dedicated instances where possible.

For teams developing cross-platform communication tools, such as those built with a Video Calling API, evaluating cloud versus on-premises infrastructure can have a direct impact on latency and user experience.

Benchmarking for Low Latency

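Regular benchmarking is critical to validate your latency targets. Tools like openmessaging-benchmark can help.

```shell
# Example OpenMessaging Benchmark invocation; the driver and workload file names
# follow the project's repository layout and may differ between versions
bin/benchmark --drivers driver-kafka/kafka-latency.yaml workloads/1-topic-16-partitions-1kb.yaml
```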
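Benchmark results are usually read as percentiles rather than averages, because a small number of slow messages can hide behind a good mean. The sketch below computes nearest-rank percentiles over a set of latency samples; the class name and the sample values are hypothetical, and other percentile definitions (for example, interpolated ones) would give slightly different numbers.

```java
import java.util.Arrays;

/** Minimal sketch: nearest-rank percentiles over latency samples in milliseconds. */
final class LatencyPercentiles {
    static double percentile(double[] samplesMs, double p) {
        if (samplesMs.length == 0) {
            throw new IllegalArgumentException("no samples");
        }
        if (p <= 0.0 || p > 100.0) {
            throw new IllegalArgumentException("p must be in (0, 100]");
        }
        double[] sorted = samplesMs.clone(); // Leave the caller's array untouched
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(p / 100.0 * sorted.length);
        return sorted[Math.max(rank, 1) - 1];
    }

    public static void main(String[] args) {
        // Hypothetical end-to-end latencies with one slow outlier
        double[] samples = {1, 2, 3, 4, 5, 6, 7, 8, 9, 100};
        System.out.println("p50 = " + percentile(samples, 50));
        System.out.println("p99 = " + percentile(samples, 99));
    }
}
```

Comparing the median with the tail percentile on the same run makes outliers visible, which is usually what a latency target is really about.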

Balancing Low Latency with Throughput and Durability

Optimizing Kafka for low latency often comes at the expense of throughput and durability. Smaller batches and fewer acknowledgments speed up message flow but may:

- Reduce overall throughput due to increased request overhead

- Lower durability if acknowledgments are not required from multiple replicas

Tips:

- Use different topics/configurations for latency-sensitive and bulk workloads

- Profile typical and peak loads to find the right balance

- For financial trading, latency is often paramount, while analytics workloads may prioritize throughput
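As a rough illustration of the first tip, the sketch below builds two producer configuration profiles, one for latency-sensitive traffic and one for bulk traffic. The property keys are standard Kafka producer settings, but the class name, method names, and every value are assumptions chosen for illustration and should be tuned against your own measurements.

```java
import java.util.Properties;

/** Minimal sketch: separate producer profiles for latency-sensitive and bulk workloads. */
final class ProducerProfiles {
    /** Favors fast delivery: no lingering, leader-only acknowledgment, no compression. */
    static Properties lowLatency(String bootstrapServers) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        props.setProperty("acks", "1");
        props.setProperty("linger.ms", "0");
        props.setProperty("batch.size", "16384");
        props.setProperty("compression.type", "none");
        return props;
    }

    /** Favors throughput and durability: larger batches, compression, all in-sync replicas. */
    static Properties bulk(String bootstrapServers) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        props.setProperty("acks", "all");
        props.setProperty("linger.ms", "50");
        props.setProperty("batch.size", "262144");
        props.setProperty("compression.type", "lz4");
        return props;
    }

    public static void main(String[] args) {
        // Hypothetical broker address
        System.out.println("low latency: " + lowLatency("kafka-broker:9092"));
        System.out.println("bulk:        " + bulk("kafka-broker:9092"));
    }
}
```

Keeping the profiles in separate producers, each writing to its own topics, lets a trading-style workload stay fast without forcing the same trade-offs on analytics traffic.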

For developers interested in experimenting with these configurations, you can try it for free to see how low-latency streaming and communication APIs perform in your own environment.

Real-World Latency Optimization Tips and Troubleshooting

- Diagnosing bottlenecks: Use end-to-end tracing to pinpoint where delays occur (producer, broker, consumer, network).

- Monitoring metrics: Integrate Prometheus and Grafana with Kafka for real-time visibility into latency, consumer lag, replication lag, and throughput.

- Common mistakes: Over-batching, excessive replication, or misconfigured network settings often increase latency.

- Quick checklist:

  - Set low linger.ms and fetch.max.wait.ms (fetch.wait.max.ms in librdkafka-based clients)

  - Use SSD storage

  - Monitor consumer lag and broker replication

  - Benchmark regularly

  - Tune OS and network stack for low latency

Conclusion

Achieving low latency with Kafka in 2025 requires a holistic approach that covers producer, broker, consumer, hardware, and network configuration. By understanding the trade-offs and continuously monitoring and tuning your system, Kafka can deliver end-to-end latencies in the low milliseconds for even the most demanding applications. For mission-critical, real-time data pipelines, these optimizations are not just beneficial; they're essential for success.


FAQ