Introduction to Load Balancer WebSocket

As real-time applications proliferate in 2025, the need for robust, scalable infrastructures has never been greater. At the heart of many real-time systems—chat platforms, multiplayer games, financial dashboards—lies the WebSocket protocol, enabling persistent, bidirectional communication between clients and servers. However, scaling these systems beyond a single node introduces new challenges, particularly when integrating a load balancer with WebSocket connections.

Placing a load balancer in front of WebSocket servers is critical for distributing incoming connections, ensuring high availability, minimizing downtime, and absorbing traffic surges. Unlike stateless HTTP requests, WebSocket connections are long-lived and stateful, so they need careful management to maintain performance and user experience. This post explores the unique challenges, strategies, tools, and best practices for load balancing WebSocket traffic in modern real-time applications.

What is a WebSocket and Why Does it Need Load Balancing?

WebSockets provide a low-latency, bidirectional, full-duplex communication channel over a single TCP connection. Unlike traditional HTTP, which is stateless and request/response-based, WebSockets enable servers to push data to clients instantly, making them ideal for real-time applications such as chat apps, stock tickers, multiplayer games, and live collaboration tools.

For developers building real-time communication features, leveraging solutions like a JavaScript video and audio calling SDK can simplify implementation and enhance scalability.

Traditional load balancing excels in stateless HTTP environments, where each request can be routed to any backend server. WebSocket connections, by contrast, are persistent: once established, they can last for hours or even days, and every message on a connection goes to whichever backend accepted it. The load balancer therefore cannot rebalance an open connection. A client that reconnects may also land on a different server that lacks its session state. Without effective load balancing strategies, WebSocket scaling and high availability become significant challenges, especially under fluctuating traffic.

Challenges of Load Balancing WebSockets

Implementing a load balancer WebSocket solution introduces several unique challenges:

- Connection Persistence: Unlike stateless HTTP, WebSockets rely on long-lived connections, requiring the load balancer to maintain state or route sessions consistently (session persistence).

- Sticky Sessions: To maintain context (e.g., chat history), clients often need to connect to the same backend node throughout their session.

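- TCP Connection Limits: Each WebSocket holds a TCP connection open for its entire lifetime, and a proxying load balancer holds two (one to the client, one to the backend). With thousands of concurrent clients, file descriptors, memory, and ephemeral ports on both the load balancer and the backends can be exhausted.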

- Failover and Reconnections: When a backend node fails, existing connections break, and clients must reconnect—ideally, to a healthy node with preserved session state.
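When a node fails, every client connected to it disconnects at the same moment. If they all retry immediately, the surviving nodes receive a reconnect storm. The sketch below uses exponential backoff with "full jitter" (a random wait between zero and an exponentially growing ceiling) to spread those retries out. The helper names and the `baseMs`/`capMs` defaults are illustrative assumptions, not part of any particular SDK.

```javascript
// Full-jitter exponential backoff: a random delay in [0, min(capMs, baseMs * 2^attempt)).
// baseMs and capMs are illustrative defaults (assumptions), tune them for your traffic.
function reconnectDelay(attempt, { baseMs = 500, capMs = 30000, random = Math.random } = {}) {
  const ceiling = Math.min(capMs, baseMs * 2 ** attempt);
  return Math.floor(random() * ceiling);
}

// Hypothetical browser helper: reopen the socket whenever it closes, waiting a
// jittered delay so clients dropped by one failed node do not all retry at once.
function connectWithRetry(url, onMessage, attempt = 0) {
  const ws = new WebSocket(url);
  ws.onopen = () => {
    attempt = 0; // a successful connection resets the backoff
  };
  ws.onmessage = (event) => onMessage(event.data);
  ws.onclose = () => {
    const delay = reconnectDelay(attempt);
    setTimeout(() => connectWithRetry(url, onMessage, attempt + 1), delay);
  };
  return ws;
}
```

Passing `random` as a parameter keeps the delay calculation deterministic in tests. In production it defaults to `Math.random`.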

For mobile and cross-platform developers, exploring a React Native video and audio calling SDK and Flutter WebRTC can help address device-specific challenges in real-time communication scenarios.

WebSocket vs HTTP Request Lifecycle

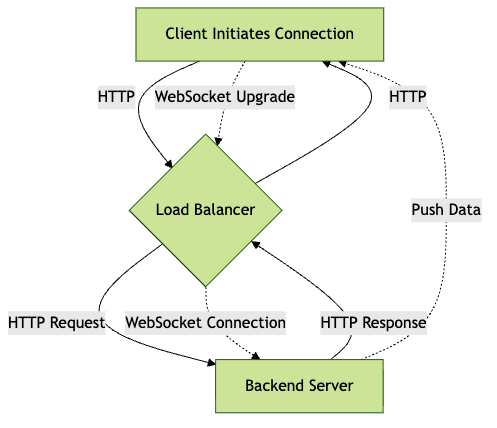

This diagram highlights the persistent nature of WebSocket connections (dashed lines), compared to the ephemeral lifecycle of HTTP requests.
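The point where an ordinary HTTP request turns into a persistent connection is the opening handshake defined in RFC 6455. The client sends a `Sec-WebSocket-Key`. The server replies with `101 Switching Protocols` and a `Sec-WebSocket-Accept` value, which is the base64-encoded SHA-1 of the key concatenated with a fixed GUID. The sketch below computes that response with Node's built-in `crypto` module. It is an illustration of the protocol step, not a replacement for a WebSocket library.

```javascript
const crypto = require("node:crypto");

// Fixed GUID defined in RFC 6455 (section 1.3) for the opening handshake
const WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11";

// base64(SHA-1(key + GUID)) proves the server understood the upgrade request
function secWebSocketAccept(secWebSocketKey) {
  return crypto
    .createHash("sha1")
    .update(secWebSocketKey + WS_GUID)
    .digest("base64");
}

// The 101 response ends the HTTP phase; afterwards the same TCP connection
// carries WebSocket frames until either side closes it.
function upgradeResponse(secWebSocketKey) {
  return [
    "HTTP/1.1 101 Switching Protocols",
    "Upgrade: websocket",
    "Connection: Upgrade",
    `Sec-WebSocket-Accept: ${secWebSocketAccept(secWebSocketKey)}`,
    "",
    "",
  ].join("\r\n");
}
```

For the sample key in RFC 6455, `dGhlIHNhbXBsZSBub25jZQ==`, `secWebSocketAccept` returns `s3pPLMBiTxaQ9kYGzzhZRbK+xOo=`.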

Load Balancing Strategies for WebSockets

Choosing the right load balancing strategy for WebSocket traffic is crucial for performance, scalability, and reliability.

For applications that require seamless integration of video and audio features, leveraging a robust Video Calling API can ensure high-quality, real-time communication across distributed systems.

Round-Robin

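The load balancer hands each new WebSocket connection to the next backend server in rotation, so connection counts are even at the moment of assignment. Connections are long-lived and close at different rates, however, so the actual load per server can drift over time. Round-robin also offers no session persistence: a reconnecting client simply gets whichever server is next in the rotation.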
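A minimal round-robin picker makes the trade-off concrete. It only remembers a position in the list, so it knows nothing about which client it served before. The backend addresses are placeholders.

```javascript
// Minimal round-robin picker: returns backends in a fixed rotation.
// The addresses below are placeholders, not a real deployment.
function createRoundRobin(backends) {
  let next = 0;
  return function pick() {
    const backend = backends[next];
    next = (next + 1) % backends.length;
    return backend;
  };
}

const pick = createRoundRobin(["10.0.0.1:3000", "10.0.0.2:3000", "10.0.0.3:3000"]);
// Four new connections: the fourth wraps around to the first backend
const picks = Array.from({ length: 4 }, () => pick());
```

Because the picker keeps no per-client state, a client that disconnects and reconnects is just "the next connection" and usually lands on a different server.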

IP Hash / Sticky Sessions

Sticky sessions (session affinity) ensure a client is always routed to the same backend node, usually by hashing the client’s IP address or a session cookie. This preserves session state but can result in uneven load distribution if many users are behind shared IPs.
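The sketch below shows IP-hash affinity. It hashes the client address and takes the result modulo the number of backends, so the same IP always maps to the same server while the backend list is unchanged. SHA-1 is used here only as a convenient, well-distributed hash from Node's standard library, not for security. The addresses are placeholders.

```javascript
const crypto = require("node:crypto");

// IP-hash affinity sketch: a given client IP always maps to the same backend
// as long as the backend list does not change.
function pickByIp(clientIp, backends) {
  const digest = crypto.createHash("sha1").update(clientIp).digest();
  const bucket = digest.readUInt32BE(0) % backends.length;
  return backends[bucket];
}

const backends = ["10.0.0.1:3000", "10.0.0.2:3000", "10.0.0.3:3000"];
const first = pickByIp("203.0.113.7", backends);
// Every later connection from 203.0.113.7 returns the same backend as `first`.
```

The sketch shows both weaknesses mentioned above. Every client behind one shared NAT address hashes to the same server. Adding or removing a backend changes the modulus, which remaps most clients (consistent hashing is the usual way to limit that).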

Layer 4 vs Layer 7 Load Balancing

- Layer 4 (TCP): Operates at the transport layer, forwarding raw TCP packets. It’s protocol-agnostic, efficient, and works well with WebSocket’s persistent connections.

- Layer 7 (HTTP): Operates at the application layer, providing more advanced routing (based on headers, cookies). However, Layer 7 load balancers must support the WebSocket protocol upgrade mechanism.
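To "support the upgrade mechanism", a Layer 7 balancer must recognize the handshake request: a `GET` with `Upgrade: websocket` and a `Connection` header whose comma-separated tokens include `upgrade`. Both checks are case-insensitive. The sketch below assumes headers arrive as a plain object with lower-cased names, as Node's `http` module provides in `req.headers`. The function name is hypothetical.

```javascript
// Recognize a WebSocket upgrade request (RFC 6455 opening handshake).
// Assumes lower-cased header names, as in Node's req.headers.
function isWebSocketUpgrade(method, headers) {
  const upgrade = (headers["upgrade"] || "").trim().toLowerCase();
  // Connection is a token list, e.g. Firefox sends "keep-alive, Upgrade"
  const connectionTokens = (headers["connection"] || "")
    .split(",")
    .map((token) => token.trim().toLowerCase());
  return method === "GET" && upgrade === "websocket" && connectionTokens.includes("upgrade");
}
```

A Layer 4 balancer never performs this check. It forwards the TCP byte stream unchanged, which is why it carries WebSockets without any protocol-specific support.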

Session Affinity Techniques

- IP Hashing: Maps client IPs to specific backend servers.

- Cookie-Based: Uses a session cookie to maintain affinity.

- Token-Based: Employs authentication tokens to direct traffic.
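Cookie-based affinity works because browsers send cookies with the WebSocket handshake request. A load balancer can therefore read a cookie set on an earlier HTTP response and route the upgrade to the named backend. In the sketch below, the cookie name `lb_backend` and the server ids are hypothetical. HAProxy, NGINX Plus, and AWS ALB each use their own cookie names and formats.

```javascript
// Cookie-based affinity sketch. "lb_backend" is a hypothetical cookie name.
const AFFINITY_COOKIE = "lb_backend";

// Parse a Cookie header ("a=1; b=2") into an object
function parseCookies(header = "") {
  const cookies = {};
  for (const part of header.split(";")) {
    const eq = part.indexOf("=");
    if (eq > 0) cookies[part.slice(0, eq).trim()] = part.slice(eq + 1).trim();
  }
  return cookies;
}

// Returns the backend id to use, plus a Set-Cookie value when a new pin is created.
// pickNew() is any fallback strategy, e.g. round-robin or least connections.
function routeWithCookie(cookieHeader, backends, pickNew) {
  const pinned = parseCookies(cookieHeader)[AFFINITY_COOKIE];
  if (pinned && backends.includes(pinned)) {
    return { backend: pinned, setCookie: null };
  }
  // No cookie, or it names a backend that has been removed: choose afresh
  const backend = pickNew();
  return { backend, setCookie: `${AFFINITY_COOKIE}=${backend}; Path=/; HttpOnly; Secure` };
}
```

Storing a stable server id rather than a list index keeps existing pins valid when the backend list is reordered. A pin that points at a removed server falls back to a fresh choice instead of failing.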

Pros and Cons

- Round-Robin: Simple, but not suitable for stateful protocols without additional session tracking.

- Sticky Sessions: Necessary for stateful sessions but may reduce load distribution efficiency.

- Layer 4: Fast and scalable, but lacks application-level intelligence.

- Layer 7: Flexible routing, but may introduce additional latency and configuration complexity.

If you’re building browser-based solutions, you might also consider how to embed a video calling SDK for rapid deployment of real-time features.

Load Balancer WebSocket Traffic Flow

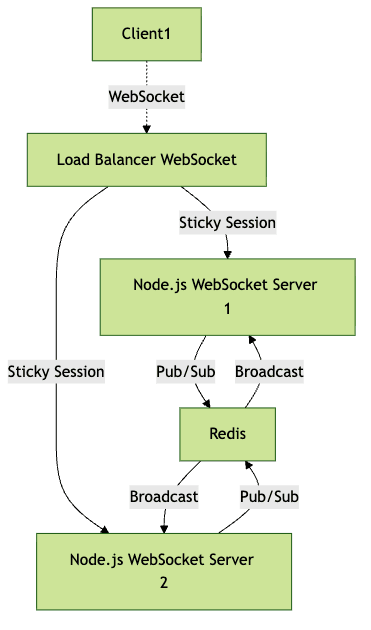

This diagram illustrates how a load balancer routes WebSocket connections using sticky sessions, and how servers might broadcast messages between each other.

Popular Load Balancers for WebSockets

HAProxy

HAProxy is a high-performance TCP/HTTP load balancer with native WebSocket support. Key considerations include:

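- Set `timeout tunnel` long enough for idle WebSocket connections; once a connection is upgraded, this timeout replaces the client and server timeouts.

- Route upgrade requests (those carrying an `Upgrade: websocket` header) to a WebSocket backend; in `mode http`, HAProxy forwards the upgrade and then tunnels the connection itself.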

HAProxy WebSocket Config Example:

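```haproxy
defaults
    mode http
    timeout connect 5s
    timeout client  30s
    timeout server  30s
    # After "101 Switching Protocols" the connection becomes a tunnel,
    # and this timeout replaces the client/server timeouts above
    timeout tunnel  1h

frontend ws
    bind *:80
    option httplog
    # Match "Upgrade: websocket" case-insensitively
    acl is_websocket hdr(Upgrade) -i websocket
    use_backend websocket if is_websocket
    # Non-WebSocket requests need a default_backend of their own (not shown)

backend websocket
    # roundrobin spreads connections; "balance source" gives IP-based stickiness
    balance roundrobin
    server ws1 10.0.0.1:3000 check
    server ws2 10.0.0.2:3000 check
```

NGINX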

NGINX is widely used for HTTP and WebSocket proxying and has supported WebSocket proxying since version 1.3.13. Key steps:

- Forward the Upgrade and Connection headers explicitly; they are hop-by-hop headers, so NGINX does not pass them to the backend by default.

- Set proper timeouts for persistent connections.

NGINX WebSocket Proxy Config Example:

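```nginx
# These blocks belong inside the http { } context.
upstream backend_websockets {
    ip_hash;                 # optional: sticky sessions keyed on client IP
    server 10.0.0.1:3000;
    server 10.0.0.2:3000;
}

# Send "Connection: upgrade" only when the client asked for an upgrade
map $http_upgrade $connection_upgrade {
    default upgrade;
    ''      close;
}

server {
    listen 80;
    server_name websocket.example.com;

    location / {
        proxy_pass http://backend_websockets;
        proxy_http_version 1.1;                  # the upgrade requires HTTP/1.1
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection $connection_upgrade;
        proxy_set_header Host $host;
        proxy_read_timeout 3600s;                # close if the backend is silent for 1 hour
    }
}
```

AWS Elastic Load Balancer (ELB)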

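AWS ELB carries WebSocket connections through the Application Load Balancer (Layer 7) and the Network Load Balancer (Layer 4), with managed scaling and health checks. On an Application Load Balancer, sticky sessions are cookie-based and must be enabled on the target group. The idle timeout (60 seconds by default) closes WebSocket connections that carry no traffic, so you need to raise it or send periodic heartbeats. ELB stops routing to unhealthy targets automatically and provides robust monitoring, but fine-grained session management is more limited than in self-hosted solutions like HAProxy or NGINX.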
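Idle timeouts on load balancers close silent WebSocket connections. On an AWS Application Load Balancer the default is 60 seconds, and NGINX and HAProxy have equivalent timeouts. A server-side heartbeat keeps traffic flowing and also detects dead peers. The sketch below follows the ping/pong pattern shown in the `ws` package README. It assumes each socket exposes `ping()` and `terminate()` and emits `"pong"`, as `ws` sockets do. The 30-second interval is an assumption chosen to stay below a 60-second idle timeout.

```javascript
// Heartbeat sketch following the ping/pong pattern from the `ws` README.
const HEARTBEAT_MS = 30000; // assumption: well below a 60-second idle timeout

// Call once per new connection: any pong marks the socket as alive again
function trackLiveness(socket) {
  socket.isAlive = true;
  socket.on("pong", () => {
    socket.isAlive = true;
  });
}

// Run on an interval: drop sockets that missed the last ping, ping the rest
function sweep(sockets) {
  for (const socket of sockets) {
    if (!socket.isAlive) {
      socket.terminate();
      continue;
    }
    socket.isAlive = false;
    socket.ping();
  }
}

// Wiring with a `ws` server (not executed here):
// wss.on("connection", trackLiveness);
// const timer = setInterval(() => sweep(wss.clients), HEARTBEAT_MS);
// wss.on("close", () => clearInterval(timer));
```

Each ping puts bytes on the wire through the load balancer, so the connection never looks idle. A socket that fails to answer within one interval is terminated rather than left holding a connection slot.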

For Android developers, understanding WebRTC Android implementation details can be crucial for optimizing WebSocket-based communication on mobile platforms.

Scaling, High Availability, and Redundancy

Horizontal scaling is essential for real-time WebSocket applications. By distributing connections across multiple servers (a WebSocket cluster), you can handle increased load and provide redundancy. If a node fails, the load balancer should route new connections to healthy nodes. Health checks and proactive monitoring are vital for minimizing downtime.
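Health checks decide which nodes receive new connections. The hypothetical tracker below is loosely modeled on HAProxy's `rise` and `fall` server options, with the same defaults of rise 2 and fall 3. A backend is marked down after `fall` consecutive failed checks and back up after `rise` consecutive successes, which avoids flapping on a single bad probe. The class and method names are illustrative.

```javascript
// Health-state tracker sketch, loosely modeled on HAProxy's rise/fall counters.
class BackendHealth {
  constructor(names, { rise = 2, fall = 3 } = {}) {
    this.rise = rise;
    this.fall = fall;
    this.state = new Map(names.map((name) => [name, { up: true, streak: 0 }]));
  }

  // Record one health-check result for a backend
  record(name, ok) {
    const s = this.state.get(name);
    // Count consecutive results that contradict the current state
    s.streak = ok !== s.up ? s.streak + 1 : 0;
    if (s.up && s.streak >= this.fall) {
      s.up = false;
      s.streak = 0;
    } else if (!s.up && s.streak >= this.rise) {
      s.up = true;
      s.streak = 0;
    }
  }

  // Only these backends should receive new connections
  healthy() {
    return [...this.state].filter(([, s]) => s.up).map(([name]) => name);
  }
}
```

Health checks only steer new connections. Sockets that were open on the failed node are lost either way, which is why clients still need reconnect logic.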

To support features like broadcast and shared state, integrate a message broker such as Redis Pub/Sub:

Redis Pub/Sub Integration Example (Node.js):

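```javascript
// Requires the `ws` package and node-redis v4+ (promise-based API)
const WebSocket = require("ws");
const { createClient } = require("redis");

async function start() {
  // A subscribed connection cannot issue other commands, so use two clients
  const pub = createClient(); // defaults to redis://localhost:6379
  const sub = pub.duplicate();
  await Promise.all([pub.connect(), sub.connect()]);

  const wss = new WebSocket.Server({ port: 3000 });

  // Every node subscribes to the same channel and delivers to its local clients
  await sub.subscribe("chat", (message) => {
    wss.clients.forEach((client) => {
      if (client.readyState === WebSocket.OPEN) {
        client.send(message);
      }
    });
  });

  // Publish instead of sending locally, so clients on every node receive the message
  function broadcast(msg) {
    return pub.publish("chat", msg);
  }

  wss.on("connection", (ws) => {
    ws.on("message", (data) => broadcast(data.toString()).catch(console.error));
  });
}

start().catch(console.error);
```

This setup enables horizontal scaling and cross-node message delivery: a message published on any node reaches clients connected to every node. For those looking to add interactive broadcasting, a Live Streaming API SDK can further enhance scalability and engagement.

Best Practices for Load Balancer WebSocket Deployments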

Implementing a resilient load balancer WebSocket setup requires careful planning:

- Connection Limits: Monitor and enforce connection caps per node to prevent resource exhaustion.

- Security: Always use TLS (wss://), authenticate users, and validate input to prevent hijacking or abuse.

- Autoscaling & Pre-Warming: Use autoscaling groups and pre-warm new instances to handle sudden spikes.

- Monitoring & Troubleshooting: Instrument your stack with metrics (connection counts, latency, errors) and logs for rapid diagnosis.
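A per-node connection cap can be as simple as a counter checked on each new connection. In the sketch below, the class name and the example limit are assumptions, so size the cap from load tests of your own servers. The `rejected` counter is the kind of metric worth exporting alongside active connection counts. Close code 1013 ("Try Again Later") is a registered WebSocket close code that tells clients to retry later, ideally with backoff.

```javascript
// Per-node connection cap sketch; the limit passed in is an assumed value.
class ConnectionLimiter {
  constructor(max) {
    this.max = max;
    this.active = 0;
    this.rejected = 0; // export with `active` as monitoring metrics
  }

  // Returns false when the node is full
  tryAcquire() {
    if (this.active >= this.max) {
      this.rejected += 1;
      return false;
    }
    this.active += 1;
    return true;
  }

  release() {
    if (this.active > 0) this.active -= 1;
  }
}

// Wiring with a `ws` server (not executed here):
// const limiter = new ConnectionLimiter(10000);
// wss.on("connection", (ws) => {
//   if (!limiter.tryAcquire()) return ws.close(1013, "Server busy");
//   ws.on("close", () => limiter.release());
// });
```

Rejecting at the node protects it from exhaustion. The client's reconnect, routed again by the load balancer, can then land on a less busy node.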

If your application also requires voice capabilities, exploring a phone call API can help you integrate reliable audio calling features alongside WebSocket-based messaging.

Sample Implementation: Node.js WebSocket Server Behind a Load Balancer

Let’s walk through a practical example of deploying a Node.js WebSocket server behind a load balancer.

Architecture Overview

- Clients connect to the load balancer (HAProxy, NGINX, or AWS ELB).

- Load balancer routes WebSocket connections using sticky sessions.

- Multiple Node.js servers handle connections, possibly sharing state via Redis Pub/Sub.

For developers building with React, a React video call feature can be integrated seamlessly with a scalable WebSocket backend.

Node.js WebSocket Server Example

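```javascript
// Requires the `ws` package (v8 API: message handlers receive (data, isBinary))
const WebSocket = require("ws");

// Plain ws:// on port 3000; the load balancer sits in front of this port
const wss = new WebSocket.Server({ port: 3000 });

wss.on("connection", (ws) => {
  ws.on("message", (data, isBinary) => {
    // Broadcast to every client connected to *this* node only.
    // With several nodes behind the load balancer, publish through Redis instead.
    wss.clients.forEach((client) => {
      if (client.readyState === WebSocket.OPEN) {
        // Preserve the frame type (text vs binary) of the original message
        client.send(data, { binary: isBinary });
      }
    });
  });
});

console.log("WebSocket server running on port 3000");
```

Client Connection via Load Balancer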

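```javascript
// wss:// assumes the load balancer terminates TLS (typically on port 443)
// and forwards plain ws:// traffic to the Node.js servers on port 3000
const ws = new WebSocket("wss://websocket.example.com/");

ws.onopen = () => {
  ws.send("Hello via load balancer websocket!");
};

ws.onmessage = (event) => {
  console.log("Received:", event.data);
};
```

End-to-End Architecture Diagram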

This architecture supports horizontal scaling, session persistence, and cross-node messaging.

Conclusion

As real-time application demands continue to grow in 2025, designing a robust load balancer WebSocket architecture is paramount for scalability, high availability, and seamless user experience. By understanding protocol challenges, selecting the right tools, and following best practices, engineers can build resilient systems ready for production at scale. Regular monitoring and iterative optimization remain key to long-term success.


FAQ