Introduction

Deep voice text to speech technology has changed the way we convert written text into spoken words. Unlike earlier robotic-sounding systems, modern deep voice TTS engines use deep learning to produce natural, human-like voiceovers. In 2025, these systems power a new generation of applications, from virtual assistants and accessibility tools to content creation and commercial voice AI products. Developers and organizations use deep voice TTS to build more engaging, accessible, and multilingual experiences. As demand for realistic voice synthesis grows, anyone building voice-enabled software needs to understand how deep voice text to speech works and how to implement it.

What is Deep Voice Text to Speech?

Deep voice text to speech (TTS) refers to the use of deep learning and neural networks to generate high-fidelity, natural-sounding spoken audio from written text. Unlike traditional TTS systems, which relied on concatenating pre-recorded voice fragments or using rule-based synthesis, deep learning TTS models can learn the nuances of human speech—such as inflection, emotion, and prosody—directly from large datasets.

The shift from signal-processing pipelines to neural TTS produced a leap in realism. Neural TTS systems such as Tacotron, WaveNet, and FastSpeech map text to audio using networks trained on recorded speech. Training sets range from tens of hours for a single voice to thousands of hours for large multi-speaker models. Because these models learn from context, they can place emphasis on key words and vary tone, which makes the output more expressive and intelligible. Natural inflection and subtle changes in pitch and speed help neural voices sound convincingly human. In short, deep voice text to speech narrows the gap between computer-generated and human speech further than any earlier approach.

How Deep Voice TTS Works: The Technology Explained

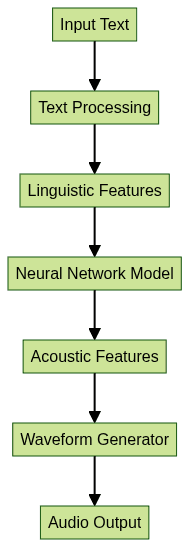

Deep voice TTS leverages the power of neural networks and deep learning to deliver state-of-the-art voice synthesis. Here’s a breakdown of the core components:

Neural Networks and Deep Learning in TTS

Modern TTS systems use deep neural networks (DNNs) to process text and generate audio. These networks learn complex representations of speech from large datasets. Most systems split the work into two stages, each handled by its own model:

- Acoustic models, typically sequence-to-sequence networks (e.g., Tacotron), map input text to intermediate acoustic features such as mel spectrograms.

- Vocoders, or waveform generators (e.g., WaveNet, HiFi-GAN), synthesize raw audio from these features.
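To make the division of labor concrete, here is a toy sketch of the two-stage interface in plain Python. Both stages are non-neural stand-ins invented for illustration (`toy_acoustic_model` and `toy_vocoder` are hypothetical names): the first turns letters into per-frame pitch values that play the role of acoustic features, and the second renders those frames as sine-wave audio. A real system replaces each function with a trained network, but the data flow between the two stages is the same.

```python
import math
from typing import List

SAMPLE_RATE = 16_000               # samples per second (arbitrary choice for this sketch)
FRAME_SAMPLES = SAMPLE_RATE // 20  # 50 ms of audio per acoustic frame

def toy_acoustic_model(text: str) -> List[float]:
    """Stage 1 stand-in: map each character to one frame of 'acoustic features'.

    Here a frame is just a pitch in Hz, and anything that is not a-z becomes a
    silent frame (0.0). A neural acoustic model would instead predict, for
    example, a frame of a mel spectrogram.
    """
    frames: List[float] = []
    for ch in text.lower():
        if "a" <= ch <= "z":
            frames.append(100.0 + 5.0 * (ord(ch) - ord("a")))  # 100-225 Hz
        else:
            frames.append(0.0)  # pause
    return frames

def toy_vocoder(frames: List[float]) -> List[float]:
    """Stage 2 stand-in: render each frame as a sine wave at its pitch."""
    audio: List[float] = []
    phase = 0.0  # carried across frames so pitch changes don't cause clicks
    for pitch in frames:
        step = 2 * math.pi * pitch / SAMPLE_RATE
        for _ in range(FRAME_SAMPLES):
            audio.append(0.5 * math.sin(phase) if pitch > 0 else 0.0)
            phase += step
    return audio

features = toy_acoustic_model("Hi, TTS")
waveform = toy_vocoder(features)
print(len(features), "frames ->", len(waveform), "samples")
```

Keeping the two stages behind separate functions mirrors why real systems can swap vocoders (for example WaveNet for HiFi-GAN) without retraining the acoustic model.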

For developers looking to integrate advanced voice features, using a Voice SDK can streamline the process of adding real-time audio capabilities to your applications.

Key Components

- Text Processing: Converts input text into a sequence of linguistic features (phonemes, stress, punctuation).

- Voice Synthesis: Neural networks generate audio waveforms based on processed features.

- Voice Customization: Adjustments to pitch, speed, emotion, and language for expressive TTS.
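The text-processing step decides what the acoustic model actually sees, so it is worth seeing in miniature. The sketch below is a minimal front end in plain Python under simplifying assumptions: it expands a tiny, hypothetical abbreviation table, spells out digits one at a time, and turns punctuation into pause markers with made-up durations. A production front end would also convert words to phonemes (for example with a pronunciation lexicon or a grapheme-to-phoneme model) and read full numbers, dates, and currencies.

```python
import re
from typing import List, Tuple

# Hypothetical, deliberately tiny normalization tables for illustration.
ABBREVIATIONS = {"dr.": "doctor", "st.": "street", "etc.": "et cetera"}
DIGITS = ["zero", "one", "two", "three", "four",
          "five", "six", "seven", "eight", "nine"]
# Illustrative pause lengths (ms) a downstream model might use for punctuation.
PAUSES = {",": 150, ";": 250, ".": 400, "?": 400, "!": 400}

def normalize(text: str) -> List[Tuple[str, str]]:
    """Return (kind, value) tokens: ("word", w) or ("pause", milliseconds)."""
    tokens: List[Tuple[str, str]] = []
    for raw in text.lower().split():
        if raw in ABBREVIATIONS:
            tokens.extend(("word", w) for w in ABBREVIATIONS[raw].split())
            continue
        # Split off any trailing run of punctuation; this pattern always matches.
        match = re.fullmatch(r"(.*?)([,;.?!]*)", raw)
        word, punct = match.group(1), match.group(2)
        if re.fullmatch(r"[0-9]+", word):
            tokens.extend(("word", DIGITS[int(d)]) for d in word)  # digit by digit
        elif word:
            tokens.append(("word", word))
        if punct:
            tokens.append(("pause", str(PAUSES[punct[-1]])))
    return tokens

print(normalize("Dr. Lee arrives at 9, right?"))
```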

If you’re building solutions that require both voice and video, consider a Python video and audio calling SDK for seamless integration of communication features.

[Figure: TTS process flow diagram]

Simple Python Example Using TensorFlow TTS

Here’s an example using the TensorFlowTTS (`tensorflow_tts`) library:

```python
import tensorflow as tf
from tensorflow_tts.inference import AutoProcessor, TFAutoModel

# Download the pretrained text processor and Tacotron 2 model (LJSpeech, English)
processor = AutoProcessor.from_pretrained("tensorspeech/tts-tacotron2-ljspeech-en")
tacotron2 = TFAutoModel.from_pretrained("tensorspeech/tts-tacotron2-ljspeech-en")

input_text = "Hello, world!"
# Convert raw text into a sequence of symbol IDs
input_ids = processor.text_to_sequence(input_text)

# Generate a mel spectrogram (batch of one; single-speaker model, so speaker 0)
decoder_output, mel_outputs, stop_token_prediction, alignment_history = tacotron2.inference(
    input_ids=tf.expand_dims(tf.convert_to_tensor(input_ids, dtype=tf.int32), 0),
    input_lengths=tf.convert_to_tensor([len(input_ids)], dtype=tf.int32),
    speaker_ids=tf.convert_to_tensor([0], dtype=tf.int32),
)
```

This code converts text input into a mel spectrogram (`mel_outputs`), the acoustic features a vocoder then turns into a waveform.
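A mel spectrogram is a spectrogram whose frequency axis is warped to the mel scale, which spaces bands roughly the way human pitch perception does: finely at low frequencies and coarsely at high ones. The sketch below uses the common HTK-style conversion, mel = 2595 · log10(1 + f / 700), to compute band centers. The 80 bands and the 0 to 8,000 Hz range are illustrative assumptions, not the settings of the pretrained model above, and some libraries (librosa, for example) default to a slightly different Slaney variant of the scale.

```python
import math
from typing import List

def hz_to_mel(f_hz: float) -> float:
    """HTK-style conversion from frequency in Hz to mels."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)

def mel_to_hz(m: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_band_centers(n_mels: int, f_min: float, f_max: float) -> List[float]:
    """Center frequencies (Hz) of n_mels bands evenly spaced on the mel scale."""
    m_min, m_max = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (m_max - m_min) / (n_mels + 1)
    # n_mels + 2 evenly spaced mel points; drop the two outer edges, keep centers.
    return [mel_to_hz(m_min + step * (i + 1)) for i in range(n_mels)]

centers = mel_band_centers(n_mels=80, f_min=0.0, f_max=8000.0)
print(f"first three centers (Hz): {[round(c, 1) for c in centers[:3]]}")
# Low bands sit only a few tens of Hz apart; the highest bands are hundreds apart.
print(f"low spacing: {centers[1] - centers[0]:.0f} Hz, "
      f"high spacing: {centers[-1] - centers[-2]:.0f} Hz")
```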

Features of Modern Deep Voice Text to Speech Tools

Modern deep voice TTS solutions offer a rich set of features to support diverse applications:

Realistic Voice Synthesis

Advanced models can reproduce natural inflections, rhythm, and emotional nuances, resulting in highly human-like voiceovers. Some tools even detect context and adjust tone accordingly.

For interactive applications, integrating a Voice SDK can help you deliver real-time, high-quality audio experiences alongside TTS.

Emotion and Multilingual Support

Expressive TTS systems allow synthesis of speech with emotions such as happiness, sadness, or excitement. Multilingual text to speech models support dozens of languages and regional accents, making global deployment possible.

If your use case involves live events or broadcasts, a Live Streaming API SDK can be invaluable for delivering synchronized audio and video at scale.

Custom Voice Creation and Voice Cloning

Voice AI tools let developers create custom voices or clone real voices, in some cases from only a few minutes of recorded audio. This is valuable for branding, distinctive digital assistants, and accessibility, such as recreating a familiar voice for a user who relies on synthesized speech.

Voice Parameter Adjustments

Most TTS engines provide control over pitch, speed, pauses, emphasis, and pronunciation. Audio output customization lets you fine-tune results for commercial use cases, from customer support to gaming.
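Many engines expose these controls through SSML (Speech Synthesis Markup Language), a W3C standard. The sketch below builds an SSML string in Python using the standard `<prosody>`, `<break>`, and `<emphasis>` elements; `build_ssml` and its parameters are hypothetical helpers for this article. Engines differ in which elements and attribute values they honor, so check your provider's SSML reference before relying on a particular tag.

```python
from typing import List, Optional
from xml.sax.saxutils import escape

def build_ssml(sentences: List[str], rate: str = "medium", pitch: str = "medium",
               pause_ms: int = 300, emphasize: Optional[str] = None) -> str:
    """Wrap sentences in one <prosody> element with <break> pauses between them.

    rate and pitch are SSML <prosody> values (e.g. "slow", "-2st").
    emphasize, if given, wraps its first occurrence in each sentence
    (plain substring match) in <emphasis level="strong">.
    """
    parts = []
    for sentence in sentences:
        text = escape(sentence)  # escape &, <, > so user text can't break the XML
        if emphasize:
            word = escape(emphasize)
            text = text.replace(word, f'<emphasis level="strong">{word}</emphasis>', 1)
        parts.append(text)
    body = f'<break time="{pause_ms}ms"/>'.join(parts)
    return f'<speak><prosody rate="{rate}" pitch="{pitch}">{body}</prosody></speak>'

ssml = build_ssml(
    ["Welcome to Smith & Sons.", "Your order has shipped."],
    rate="slow", pitch="-2st", pause_ms=500, emphasize="shipped",
)
print(ssml)
```

With the Google client used later in this guide, an SSML string like this is passed as `texttospeech.SynthesisInput(ssml=ssml)` instead of `text=...`.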

For applications that require direct phone-based communication, integrating a reliable phone call API can enhance your TTS-powered customer interactions.

Top Use Cases for Deep Voice Text to Speech

Deep voice TTS technology is at the core of many modern applications:

- Accessibility: Helps visually impaired users by reading content aloud with natural-sounding voices.

- Content Creation: Powers narration for videos, podcasts, audiobooks, and e-learning platforms, saving time and costs.

- Virtual Assistants and Chatbots: Provides engaging, real-time responses in virtual agents or smart devices.

- Customer Support and IVR: Enhances interactive voice response systems with clear, professional voices for better customer experience.

- Gaming and Interactive Media: Brings characters to life in games, AR/VR experiences, and interactive storytelling.

These use cases benefit from the expressive, adaptable, low-latency capabilities of modern TTS systems.

If you’re developing collaborative or interactive applications, a Video Calling API can complement TTS features for a more immersive user experience.

Choosing the Right Deep Voice TTS Solution

Selecting the best deep voice text to speech engine involves evaluating several key factors:

- Voice Quality: How natural and expressive are the available voices?

- Languages and Voice Styles: Does the system support your target languages and desired voice personas?

- API Access and Integration: Is there a robust API or SDK for easy integration into your app or workflow?

- Customization Features: Can you adjust voice parameters, create custom voices, or clone voices?

- Pricing and Licensing: Does it fit your budget and usage requirements?
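One way to turn these criteria into a decision is a weighted scoring matrix: rate each candidate from 1 to 5 on every criterion, weight the criteria by how much they matter to your project, and rank by the weighted sum. The candidate names, weights, and scores below are hypothetical placeholders, not measurements of any real product; fill them in from your own listening tests and pricing research.

```python
from typing import Dict, List, Tuple

# Hypothetical weights: how much each criterion matters to this project (sum to 1).
WEIGHTS: Dict[str, float] = {
    "voice_quality": 0.35,
    "languages": 0.20,
    "api_integration": 0.15,
    "customization": 0.15,
    "pricing": 0.15,
}

# Hypothetical 1-5 ratings from your own evaluation.
CANDIDATES: Dict[str, Dict[str, int]] = {
    "engine_a": {"voice_quality": 5, "languages": 4, "api_integration": 4,
                 "customization": 3, "pricing": 3},
    "engine_b": {"voice_quality": 4, "languages": 3, "api_integration": 5,
                 "customization": 5, "pricing": 4},
    "engine_c": {"voice_quality": 3, "languages": 2, "api_integration": 3,
                 "customization": 4, "pricing": 5},
}

def rank(candidates: Dict[str, Dict[str, int]],
         weights: Dict[str, float]) -> List[Tuple[str, float]]:
    """Return (name, weighted score) pairs, best first."""
    scores = {
        name: sum(weights[criterion] * ratings[criterion] for criterion in weights)
        for name, ratings in candidates.items()
    }
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

for name, score in rank(CANDIDATES, WEIGHTS):
    print(f"{name}: {score:.2f}")
```

The weights make trade-offs explicit: a project that only needs English might drop `languages` to near zero, which can change the ranking.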

For developers seeking to add real-time audio chat or conferencing, a Voice SDK provides a flexible foundation for building scalable audio solutions.

Comparison Table of Leading TTS Tools

| Tool | Voices Available | Languages | Custom Voices | API Access | Pricing |
|---|---|---|---|---|---|
| Google Cloud TTS | 220+ | 40+ | Limited | Yes | Pay-as-you-go |
| Amazon Polly | 100+ | 30+ | Yes | Yes | Pay-as-you-go |
| Microsoft Azure TTS | 400+ | 140+ | Yes | Yes | Pay-as-you-go |
| Resemble.ai | 60+ | 30+ | Yes | Yes | Subscription |
| Coqui TTS (open source) | Varies | 10+ | Yes | Yes | Free/open source |
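The pay-as-you-go services in the table bill per character of input text, usually quoted per million characters and sometimes with a monthly free allowance. A budget estimate is simple arithmetic. The sketch below assumes a hypothetical price and free tier, so substitute your provider's current rates; they differ by voice type, and providers differ on whether SSML markup counts toward billed characters.

```python
def estimate_monthly_cost(chars_per_month: int,
                          price_per_million: float,
                          free_chars: int = 0) -> float:
    """Estimate a monthly TTS bill in the provider's currency.

    chars_per_month: characters sent for synthesis each month.
    price_per_million: price per 1,000,000 billable characters.
    free_chars: monthly free-tier allowance, if any.
    """
    billable = max(0, chars_per_month - free_chars)
    return billable / 1_000_000 * price_per_million

# Hypothetical numbers: 500 articles of 6,000 characters each,
# a price of 16.00 per million characters, and 1,000,000 free characters.
monthly_chars = 500 * 6_000
print(f"${estimate_monthly_cost(monthly_chars, 16.0, free_chars=1_000_000):.2f}")  # → $32.00
```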

Open Source vs Commercial Solutions

- Open Source: Ideal for developers needing flexibility, experimentation, or privacy (e.g., Coqui TTS, Mozilla TTS).

- Commercial: Offers higher-quality voices, support, and scalability. Best for production environments needing reliability and compliance.

For businesses that need to enable voice-based customer support, integrating a phone call API can help bridge the gap between automated TTS and real-time human interaction.

Implementation: Step-by-Step Guide

Let’s walk through how to set up and use deep voice text to speech in a Python application with the Google Cloud Text-to-Speech API.

1. Set Up the Environment

Install the necessary packages:

```bash
pip install google-cloud-texttospeech
```

2. Authentication

Set your Google credentials:

```bash
export GOOGLE_APPLICATION_CREDENTIALS="path/to/your/key.json"
```
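Before running the client, a quick preflight check can save you from a confusing authentication error later. This is a minimal standard-library sketch; `check_credentials` is a hypothetical helper. It only confirms that the variable is set, that the file exists, and that the file parses as JSON. It does not verify that the key is valid or has the right permissions.

```python
import json
import os

def check_credentials(env_var: str = "GOOGLE_APPLICATION_CREDENTIALS") -> str:
    """Return the key file's "type" field if the credentials file looks usable.

    Raises RuntimeError if the variable is unset or the file is missing, and
    json.JSONDecodeError (a subclass of ValueError) if the file is not JSON.
    """
    path = os.environ.get(env_var)
    if not path:
        raise RuntimeError(f"{env_var} is not set")
    if not os.path.isfile(path):
        raise RuntimeError(f"{env_var} points to a missing file: {path}")
    with open(path, encoding="utf-8") as f:
        data = json.load(f)
    # Service-account key files include "type": "service_account".
    return data.get("type", "unknown") if isinstance(data, dict) else "unknown"

try:
    print("Credential type:", check_credentials())
except (RuntimeError, ValueError) as err:
    print("Credential check failed:", err)
```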

3. Code Example: Synthesize Speech

```python
from google.cloud import texttospeech

client = texttospeech.TextToSpeechClient()

synthesis_input = texttospeech.SynthesisInput(
    text="Hello, developer! Welcome to deep voice text to speech."
)

# Select a specific WaveNet voice; name and ssml_gender should agree
voice = texttospeech.VoiceSelectionParams(
    language_code="en-US",
    name="en-US-Wavenet-D",
    ssml_gender=texttospeech.SsmlVoiceGender.MALE,
)

audio_config = texttospeech.AudioConfig(
    audio_encoding=texttospeech.AudioEncoding.MP3,
    speaking_rate=1.0,  # 1.0 is the voice's normal speed
    pitch=0.0,          # offset in semitones from the voice's default pitch
)

response = client.synthesize_speech(
    input=synthesis_input,
    voice=voice,
    audio_config=audio_config,
)

# response.audio_content holds the encoded MP3 bytes
with open("output.mp3", "wb") as out:
    out.write(response.audio_content)
print("Audio content written to file 'output.mp3'")
```
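The request above sends one short string. Longer inputs, such as an article or an audiobook chapter, need to be split, because the API caps the size of a single request; for Google Cloud Text-to-Speech the documented limit is 5,000 bytes of input, but confirm it against the current quotas page. The sketch below assumes sentence boundaries can be found with a simple regex and measures size in UTF-8 bytes; `chunk_text` is a hypothetical helper, and it raises an error rather than guessing how to split a single sentence that exceeds the limit.

```python
import re
from typing import List

def chunk_text(text: str, max_bytes: int = 5000) -> List[str]:
    """Greedily pack whole sentences into chunks of at most max_bytes UTF-8 bytes."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks: List[str] = []
    current = ""
    for sentence in sentences:
        if len(sentence.encode("utf-8")) > max_bytes:
            raise ValueError("a single sentence exceeds max_bytes; split it manually")
        candidate = f"{current} {sentence}" if current else sentence
        if len(candidate.encode("utf-8")) <= max_bytes:
            current = candidate  # sentence still fits in the current chunk
        else:
            chunks.append(current)  # close the full chunk, start a new one
            current = sentence
    if current:
        chunks.append(current)
    return chunks

article = "Deep voice TTS is here. " * 400
parts = chunk_text(article)
print(len(parts), "requests; largest is",
      max(len(p.encode("utf-8")) for p in parts), "bytes")
```

Each chunk can then be sent as its own `SynthesisInput`, writing one audio file per chunk.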

If you want to experiment with deep voice TTS and related features, you can try it for free and see how these tools fit your workflow.

4. Customizing Output

- Voice: Change the `name` parameter to select a different voice.

- Speed: Adjust `speaking_rate`.

- Pitch: Modify `pitch` for deeper or higher tones.
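The API only accepts these parameters within set ranges, so it can help to validate them before sending a request. The ranges below are the ones Google documents for `AudioConfig` at the time of writing: `speaking_rate` from 0.25 to 4.0, where 1.0 is normal speed, and `pitch` from -20.0 to 20.0 semitones. Treat them as assumptions to re-check. `audio_config_kwargs` is a hypothetical helper that clamps values and returns keyword arguments, so the sketch runs without the client library installed.

```python
from typing import Dict, Tuple

# Ranges as documented for Google Cloud TTS AudioConfig; re-check before relying on them.
SPEAKING_RATE_RANGE: Tuple[float, float] = (0.25, 4.0)
PITCH_RANGE: Tuple[float, float] = (-20.0, 20.0)

def _clamp(value: float, bounds: Tuple[float, float]) -> float:
    """Limit value to the closed interval given by bounds."""
    low, high = bounds
    return max(low, min(high, value))

def audio_config_kwargs(speaking_rate: float = 1.0, pitch: float = 0.0) -> Dict[str, float]:
    """Return clamped speaking_rate and pitch keyword arguments for AudioConfig."""
    return {
        "speaking_rate": _clamp(speaking_rate, SPEAKING_RATE_RANGE),
        "pitch": _clamp(pitch, PITCH_RANGE),
    }

# A slower, deeper read for narration; the requested pitch of -30 is clamped to -20.
print(audio_config_kwargs(speaking_rate=0.9, pitch=-30.0))
```

With the client from step 3, the result can be unpacked into the config, for example `texttospeech.AudioConfig(audio_encoding=texttospeech.AudioEncoding.MP3, **audio_config_kwargs(0.9, -4.0))`. Clamping keeps batch jobs running; raising a `ValueError` instead is the stricter choice if silently adjusted values would surprise your users.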

For developers looking to enable live audio rooms or group discussions, a Voice SDK can be an essential addition to your tech stack.

Best Practices and Tips for Realistic Voice Output

- Optimize Text Input: Use punctuation and SSML tags for pauses, emphasis, and breaks.

- Choose the Right Voice Model: Test several voices and languages to match your use case.

- Test and Iterate: Regularly review generated audio for pronunciation, inflection, and naturalness. Adjust parameters for best results.
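To make testing and iteration systematic, generate every combination of the settings you want to compare, render each one, and listen to the results side by side. The sketch below only builds the list of variants and descriptive file names with `itertools.product`. `en-US-Wavenet-D` comes from the example above, while the second voice name is a hypothetical placeholder (the Google client's `list_voices()` call returns real ones). The synthesis call itself, such as the client code in step 3, is left to you.

```python
import itertools
from typing import Dict, List

VOICES = ["en-US-Wavenet-D", "my-alternate-voice"]  # second name is a placeholder
RATES = [0.9, 1.0, 1.1]
PITCHES = [-2.0, 0.0]

def build_variants(voices: List[str], rates: List[float],
                   pitches: List[float]) -> List[Dict[str, object]]:
    """Return one settings dict per combination, each with a descriptive file name."""
    variants: List[Dict[str, object]] = []
    for voice, rate, pitch in itertools.product(voices, rates, pitches):
        variants.append({
            "voice": voice,
            "speaking_rate": rate,
            "pitch": pitch,
            "filename": f"{voice}_rate{rate:g}_pitch{pitch:+g}.mp3",
        })
    return variants

variants = build_variants(VOICES, RATES, PITCHES)
print(len(variants), "variants to render")
for v in variants[:3]:
    print(v["filename"])
```

Encoding the settings in each file name means a listener can rate files blind and you can map the favorites back to their parameters afterwards.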

Conclusion

Deep voice text to speech is reshaping how we interact with technology, delivering natural, expressive AI voices for a wide range of applications. As deep learning advances, the realism and versatility of these systems will continue to improve, making them a cornerstone of accessible, engaging digital experiences in 2025 and beyond. Adopting deep voice TTS now keeps your applications at the forefront of voice AI.


FAQ