AI Voice Generator Text to Speech: The Ultimate Guide

Introduction to AI Voice Generator Text to Speech

Text-to-speech (TTS) technology converts written content into spoken audio and has become a standard building block in modern software. With advances in artificial intelligence (AI), voice generation has moved far beyond robotic monotones and can now produce natural, expressive, and context-aware speech. AI-powered TTS is changing how developers build accessible, engaging, and scalable digital experiences across platforms.

In this guide, we'll dive into how AI voice generator text to speech solutions work, their key features, diverse use cases, and hands-on implementation tips. You'll also find comparisons of leading TTS tools, insights into API and SSML integration, and a look at future trends shaping the field in 2025. Whether you're building for content creation, accessibility, or next-gen interactive experiences, this developer-focused resource will help you make the most of AI voice technology.

What is an AI Voice Generator Text to Speech?

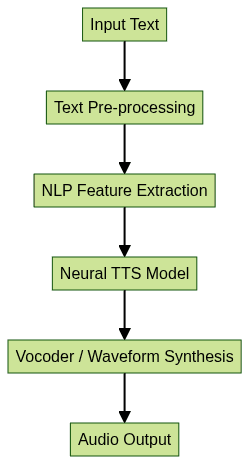

An AI voice generator text to speech system is software that converts written text into spoken audio using deep learning and natural language processing (NLP). Unlike traditional rule-based or concatenative TTS, modern AI-driven TTS relies on neural networks, notably architectures such as Tacotron, WaveNet, and FastSpeech, to produce natural, human-like voices with nuanced intonation, emotion, and context awareness.

Core NLP techniques are used to analyze, tokenize, and interpret text, allowing the system to capture meaning, context, and even sentiment. The neural network then maps this understanding to the acoustic space, generating speech waveforms that sound both realistic and expressive.

The evolution from basic TTS to modern AI voice generators spans three generations:

- Concatenative TTS: Pre-recorded word and phoneme units stitched together, resulting in robotic output

- Parametric TTS: Statistical models generating speech parameters for smoother, but still synthetic voices

- Neural TTS: Deep learning models synthesizing speech at the waveform level, enabling natural-sounding AI voices, voice cloning, and customization
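To make the first stage of that evolution concrete, here is a minimal sketch of the concatenative idea: pre-recorded units are looked up and stitched together with a short crossfade. The unit inventory, the `concatenate_units` helper, and the sample values are all hypothetical stand-ins (real systems store thousands of recorded units), so treat this as an illustration rather than a working synthesizer.

```python
from typing import Dict, List

# Hypothetical unit inventory: each "recording" is a short list of samples.
UNIT_INVENTORY: Dict[str, List[float]] = {
    "HH": [0.0, 0.2, 0.4, 0.2],
    "AY": [0.5, 0.7, 0.5, 0.3],
}


def concatenate_units(units: List[str], overlap: int = 2) -> List[float]:
    """Stitch stored units together, linearly crossfading `overlap` samples at each join."""
    output: List[float] = []
    for name in units:
        samples = UNIT_INVENTORY[name]
        if not output:
            output.extend(samples)
            continue
        n = min(overlap, len(output), len(samples))
        # Blend the tail of the audio so far with the head of the next unit.
        for i in range(n):
            weight = (i + 1) / (n + 1)  # fade-in weight for the incoming unit
            tail_index = len(output) - n + i
            output[tail_index] = (1 - weight) * output[tail_index] + weight * samples[i]
        output.extend(samples[n:])
    return output


stitched = concatenate_units(["HH", "AY"])
print(len(stitched))
```

Joins between independently recorded units are hard to smooth perfectly, which is one reason this approach tends to sound less natural than the parametric and neural methods that followed.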

Today's AI voice generators offer multi-language support, voice customization, and real-time streaming, empowering developers to create solutions for accessibility, content creation, gaming, and more. For those looking to implement real-time audio features, integrating a Voice SDK can further enhance your application's capabilities.

Key Features of Modern AI Voice Generator Text to Speech

Emotion and Inflection in AI Voices

Modern AI TTS engines can embed emotion, inflection, and a dynamic range of tone into synthetic voices. This is achieved by training deep neural networks on large, professionally narrated datasets annotated for sentiment, emotion, and prosody. The result: AI voices that sound empathetic, engaging, and contextually aware.

Multi-language and Accents Support

Support for dozens of languages and regional accents is standard in leading TTS platforms. Developers can generate speech in multiple languages or dialects, which is crucial for global applications and inclusive content. Neural TTS models generalize linguistic patterns, enabling accurate pronunciation and natural-sounding delivery across languages. If your project requires live audio interaction in multiple languages, consider leveraging a Voice SDK for seamless integration.

Voice Cloning and Customization

Voice cloning allows developers to create unique AI voices based on a few minutes of target speaker data. Customization options include adjusting pitch, speed, timbre, and even emotion, letting brands and creators craft signature synthetic voices for their applications.
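As one illustration of how such settings might be expressed, the sketch below renders pitch and speed adjustments as an SSML `<prosody>` element. The `VoiceSettings` class and its defaults are hypothetical, and the accepted value ranges differ between providers, so check your platform's documentation before relying on specific values.

```python
from dataclasses import dataclass
from xml.sax.saxutils import escape, quoteattr


@dataclass
class VoiceSettings:
    """Hypothetical container for per-voice tuning parameters."""
    rate_percent: int = 100       # 100 means the voice's default speed
    pitch_semitones: float = 0.0  # offset relative to the default pitch

    def to_ssml(self, text: str) -> str:
        # Express rate as a percentage and pitch as a signed semitone offset.
        rate = f"{self.rate_percent}%"
        pitch = f"{self.pitch_semitones:+g}st"
        return (
            f"<speak><prosody rate={quoteattr(rate)} pitch={quoteattr(pitch)}>"
            f"{escape(text)}</prosody></speak>"
        )


brand_voice = VoiceSettings(rate_percent=90, pitch_semitones=-2)
print(brand_voice.to_ssml("Thanks for calling & welcome back."))
```

Keeping the settings in one object makes it easier to reuse the same "signature" voice across every piece of text an application speaks.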

Use Cases for AI Voice Generator Text to Speech

Content Creation: Podcasts, Videos, and Audiobooks

AI voice generators streamline content workflows by converting scripts into professional-quality audio. Creators can produce podcasts, video voiceovers, and audiobooks at scale, leveraging natural-sounding AI voices that save time and reduce production costs. Advanced TTS enables multi-voice narration, language localization, and rapid content iteration. For developers aiming to add interactive audio rooms or live discussions to their content platforms, a robust Voice SDK can be a game-changer.

Accessibility and Inclusive Design

TTS powers screen readers, voice-enabled apps, and assistive tools, making digital content accessible to users with visual impairments or reading difficulties. AI-driven voices improve the listening experience with higher clarity, emotion, and natural prosody, supporting inclusive design principles.

Gaming and Interactive Media

Developers use AI voice generator text to speech for dynamic in-game dialogue, NPC interactions, and real-time narration. TTS enables scalable voiceovers for large or procedurally generated game worlds, with support for multiple characters, accents, and emotional tones. For real-time player communication, integrating a phone call API can add seamless voice chat to your games.

Education and E-Learning

In e-learning platforms, TTS brings interactive lessons, quizzes, and reading materials to life. Multi-language TTS enhances language learning, while voice customization tailors the user experience for different age groups or learning styles. If your educational app requires live lectures or discussions, a Live Streaming API SDK can help you deliver real-time interactive experiences.

Customer Support and Chatbots

AI voices are integrated into virtual assistants, IVR systems, and chatbots, providing clear, human-like responses. Real-time TTS ensures customer interactions are engaging and accessible, improving satisfaction and reducing support costs. For businesses looking to add voice-based customer support, a phone call API can streamline the process and enhance user experience.

How Does AI Voice Generator Text to Speech Work?

Text Analysis and Pre-processing

The TTS pipeline begins with analyzing and normalizing input text. This involves tokenization, sentence boundary detection, text normalization, and linguistic feature extraction. Example Python code for preprocessing:

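```python
import re


def preprocess_text(text):
    """Lowercase the text and strip characters outside a small allowed set."""
    text = text.lower()
    # Keep only letters, digits, basic punctuation, and whitespace.
    text = re.sub(r"[^a-z0-9.,!?\s]", "", text)
    # Collapse runs of whitespace into single spaces.
    text = re.sub(r"\s+", " ", text).strip()
    return text


sample = "Hello, World! Welcome to AI voice generator text to speech."
print(preprocess_text(sample))
```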
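The cleanup above covers only the most basic step. The sketch below illustrates two of the other steps mentioned, sentence boundary detection and text normalization, using simple regular expressions. The abbreviation table and function names are illustrative assumptions; production systems use far larger rule sets or learned models.

```python
import re
from typing import List

# Hypothetical, deliberately tiny abbreviation table.
ABBREVIATIONS = {"dr.": "doctor", "st.": "street", "vs.": "versus"}
DIGIT_WORDS = ["zero", "one", "two", "three", "four",
               "five", "six", "seven", "eight", "nine"]


def normalize(text: str) -> str:
    """Expand known abbreviations and spell out digits one at a time."""
    words = []
    for token in text.split():
        token = ABBREVIATIONS.get(token.lower(), token)
        # Spell each digit separately; real normalizers handle whole numbers, dates, etc.
        token = re.sub(r"[0-9]", lambda m: f" {DIGIT_WORDS[int(m.group())]} ", token)
        words.append(token)
    return re.sub(r"\s+", " ", " ".join(words)).strip()


def split_sentences(text: str) -> List[str]:
    """Naive boundary detection: split after ., !, or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


raw = "Dr. Lee lives at 42 Main St. today. Is that right?"
sentences = split_sentences(normalize(raw))
print(sentences)
```

Normalizing before splitting is a deliberate choice here: once "Dr." and "St." are expanded, their periods no longer look like sentence boundaries to the naive splitter.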

If you are building a Python-based application that requires both video and audio calling features, consider using a Python video and audio calling SDK for seamless integration alongside your TTS pipeline.

Neural Voice Synthesis

Once pre-processed, the text is fed into a neural acoustic model (such as Tacotron or FastSpeech), which predicts a mel spectrogram: a sequence of frames describing the audio's frequency content over time. A vocoder (e.g., WaveNet) then converts that spectrogram into the final waveform.
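The data flow between the two stages can be sketched with stand-in functions. Nothing here is a trained model: `acoustic_model` and `vocoder` are hypothetical placeholders that only reproduce the shapes involved, and the `N_MELS`, `HOP_LENGTH`, and `FRAMES_PER_SYMBOL` values are assumed for illustration.

```python
import math
from typing import List

N_MELS = 80            # assumed number of mel frequency bins per frame
HOP_LENGTH = 256       # assumed number of audio samples represented by each frame
FRAMES_PER_SYMBOL = 5  # assumed fixed duration; real models predict or learn this


def acoustic_model(symbols: List[str]) -> List[List[float]]:
    """Stand-in for the acoustic model: map input symbols to spectrogram frames."""
    frames = []
    for index, _ in enumerate(symbols):
        for _ in range(FRAMES_PER_SYMBOL):
            frames.append([float(index)] * N_MELS)  # placeholder values, not real features
    return frames


def vocoder(frames: List[List[float]]) -> List[float]:
    """Stand-in for a neural vocoder: turn each frame into HOP_LENGTH waveform samples."""
    samples = []
    for frame in frames:
        level = math.tanh(sum(frame) / len(frame))  # keep samples within [-1, 1]
        samples.extend([level] * HOP_LENGTH)
    return samples


symbols = list("hello")
mel = acoustic_model(symbols)
audio = vocoder(mel)
print(len(mel), len(mel[0]), len(audio))
```

The point of the sketch is the interface: the first stage outputs a frames-by-bins matrix, and the second stage expands every frame into a fixed number of audio samples.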

This two-stage architecture enables nuanced, context-aware speech synthesis, supporting features like emotion and accent modulation. For applications that require real-time video and audio communication in addition to TTS, integrating a Video Calling API can provide a comprehensive multimedia experience.

Output and Customization

Developers can customize output using parameters for pitch, speed, emotion, and more. Many platforms support Speech Synthesis Markup Language (SSML), which provides granular control over pronunciation, emphasis, and prosody. Output can be generated in various formats (such as MP3 and WAV) and streamed in real time for interactive applications.
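On the output-format side, here is a minimal sketch of wrapping raw 16-bit PCM samples in a WAV container using Python's standard `wave` module. The sine tone stands in for synthesized speech, and the sample rate is an assumed value; many TTS services return already-encoded audio, in which case this step is unnecessary.

```python
import io
import math
import struct
import wave

SAMPLE_RATE = 22050  # assumed sample rate in Hz


def pcm_to_wav_bytes(samples, sample_rate=SAMPLE_RATE):
    """Encode floats in [-1, 1] as mono 16-bit PCM inside a WAV container."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav_file:
        wav_file.setnchannels(1)   # mono
        wav_file.setsampwidth(2)   # 2 bytes per sample = 16-bit audio
        wav_file.setframerate(sample_rate)
        clipped = (max(-1.0, min(1.0, s)) for s in samples)
        frames = b"".join(struct.pack("<h", int(s * 32767)) for s in clipped)
        wav_file.writeframes(frames)
    return buffer.getvalue()


# A half-second 440 Hz tone as placeholder audio.
tone = [0.3 * math.sin(2 * math.pi * 440 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE // 2)]
wav_bytes = pcm_to_wav_bytes(tone)
print(wav_bytes[:4], len(wav_bytes))
```

Writing to an in-memory buffer rather than a file keeps the helper usable both for saving audio to disk and for returning it from a web endpoint.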

Comparing the Best AI Voice Generator Text to Speech Tools

Feature Table

Pricing and Accessibility

Pricing varies from pay-as-you-go (per million characters) to monthly subscriptions. Major cloud providers offer free tiers and SDKs for rapid prototyping. Accessibility features, such as multi-language and voice customization, are now standard in most leading platforms. If you want to experiment with these technologies, you can try them for free and explore their capabilities before making a commitment.

Pros and Cons

While neural TTS delivers unmatched realism and flexibility, it can require careful tuning for specific use cases. Pros include scalability, natural-sounding output, and broad language support; cons may include latency in real-time settings and potential licensing restrictions for cloned voices.

Integration and Implementation Tips

Using APIs and SDKs

Most TTS providers offer REST APIs and client SDKs. Here's a Python example for Google Cloud TTS:

```python
# Requires the google-cloud-texttospeech package and configured credentials.
from google.cloud import texttospeech

client = texttospeech.TextToSpeechClient()

input_text = texttospeech.SynthesisInput(text="AI voice generator text to speech rocks!")
voice = texttospeech.VoiceSelectionParams(
    language_code="en-US",
    ssml_gender=texttospeech.SsmlVoiceGender.NEUTRAL,
)
audio_config = texttospeech.AudioConfig(audio_encoding=texttospeech.AudioEncoding.MP3)

response = client.synthesize_speech(input=input_text, voice=voice, audio_config=audio_config)

# The response carries the encoded audio as raw bytes.
with open("output.mp3", "wb") as out:
    out.write(response.audio_content)
```
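Long documents usually need to be split before synthesis, because providers typically cap the size of a single request. The sketch below groups sentences into chunks under a configurable limit; the `chunk_text` helper and the `max_chars` default are assumptions for illustration, not values taken from any provider's documentation.

```python
import re
from typing import List


def chunk_text(text: str, max_chars: int = 1000) -> List[str]:
    """Group whole sentences into chunks no longer than max_chars where possible."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks: List[str] = []
    current = ""
    for sentence in sentences:
        if not sentence:
            continue
        candidate = f"{current} {sentence}".strip()
        if len(candidate) <= max_chars or not current:
            # Either it fits, or a single over-long sentence is kept whole.
            current = candidate
        else:
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    return chunks


article = "First sentence. Second sentence! Third one? Fourth."
for part in chunk_text(article, max_chars=32):
    print(repr(part))
```

Each chunk could then be sent as the text of a separate synthesis request. Splitting on sentence boundaries, rather than at a fixed character offset, avoids cutting a word or phrase in half and producing an audible glitch.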

For developers who want to add interactive audio features, using a Voice SDK can simplify the process and provide robust, scalable solutions.

SSML for Advanced Voice Control

SSML (Speech Synthesis Markup Language) lets you control pronunciation, emphasis, pauses, and prosody. Some providers also offer vendor-specific extensions for emotion or speaking style. Example:

```xml
<speak>
  Hello, <emphasis level="strong">developers</emphasis>! Welcome to <break time="400ms"/> AI voice generator text to speech.
</speak>
```
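When SSML is assembled from user-supplied text, characters such as `&` and `<` must be escaped or the markup becomes invalid. The following sketch builds the same kind of document programmatically with Python's standard library; the `build_ssml` helper is a hypothetical name, not part of any TTS SDK.

```python
import xml.etree.ElementTree as ET


def build_ssml(greeting: str, emphasized: str, closing: str, pause_ms: int = 400) -> str:
    """Build a <speak> document with an emphasized phrase and a pause.

    ElementTree escapes special characters in the text when serializing.
    """
    speak = ET.Element("speak")
    speak.text = greeting
    emphasis = ET.SubElement(speak, "emphasis", level="strong")
    emphasis.text = emphasized
    emphasis.tail = "! "
    pause = ET.SubElement(speak, "break", time=f"{pause_ms}ms")
    pause.tail = closing
    return ET.tostring(speak, encoding="unicode")


ssml = build_ssml("Hello, ", "R&D teams", "Welcome to AI voice generator text to speech.")
print(ssml)
```

Building the tree with an XML library instead of string concatenation means the escaping is handled in one place, which matters whenever the spoken text comes from users or external data.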

Security and Privacy Considerations

When integrating TTS, ensure user data is handled securely. Use encrypted connections (HTTPS), comply with data privacy regulations (GDPR, CCPA), and review provider policies on voice data storage—especially for voice cloning or sensitive content generation.
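One small, concrete safeguard is to refuse to send text to any endpoint that is not served over HTTPS. The sketch below checks a URL before it is used; the `require_https` helper and the example URL are hypothetical.

```python
from urllib.parse import urlparse


def require_https(endpoint: str) -> str:
    """Return the endpoint unchanged if it uses HTTPS, otherwise raise ValueError."""
    parsed = urlparse(endpoint)
    if parsed.scheme != "https" or not parsed.netloc:
        raise ValueError(f"Refusing non-HTTPS TTS endpoint: {endpoint!r}")
    return endpoint


# Hypothetical endpoint used only for illustration.
print(require_https("https://tts.example.com/v1/synthesize"))
```

A check like this is most useful when the endpoint comes from configuration or an environment variable, where a mistyped `http://` could otherwise go unnoticed.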

Future Trends in AI Voice Generator Text to Speech

By 2025, expect rapid advancements in expressive speech synthesis, real-time multilingual TTS, and ultra-fast neural models deployable on edge devices. Emotion detection, cultural context adaptation, and ultra-realistic voice cloning will push the boundaries of human-computer interaction. Ethical considerations—such as deepfake prevention and consent in voice cloning—will become increasingly important, shaping responsible adoption.

Conclusion

AI voice generator text to speech technology is transforming the way we build accessible, engaging, and scalable digital experiences. With neural TTS, developers can deliver human-like voices for content, gaming, education, and beyond. Now is a good time to explore these tools and integrate them into your next project: try an AI voice generator today and take your applications to the next level.


FAQ