Introduction to Python Speech to Text

Speech-to-text technology has revolutionized the way we interact with computers, making it possible to convert spoken language into written text with impressive accuracy. In Python, speech to text unlocks powerful capabilities for applications such as voice assistants, automated transcription services, hands-free interfaces, and accessibility tools. With the growing demand for voice-driven applications and the rise of remote work, integrating speech recognition into your Python projects can improve user experience and productivity. In 2025, Python speech to text remains crucial for modern software, enabling real-time voice input, efficient data entry, and seamless integration with AI-driven workflows.

How Python Speech to Text Works

Speech recognition is the process of converting spoken words into machine-readable text. Python speech to text relies on capturing audio input (from a microphone or file), processing the audio to extract features, and then using statistical models or neural networks to transcribe speech. For developers building communication platforms, integrating a python video and audio calling sdk alongside speech recognition can add real-time audio and video interactions to the same application.

Key Concepts

- Audio Capture: Using microphones or audio files as input sources.

- Audio Processing: Transforming raw audio signals into features suitable for recognition, such as Mel-frequency cepstral coefficients (MFCCs).

- Transcription: Applying machine learning models (statistical or neural) to convert features into text.
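Feature extraction usually begins by slicing the signal into short, overlapping frames. The sketch below shows that first step with only the standard library, computing per-frame RMS energy as a deliberately simple stand-in for MFCCs. The synthetic signal and the frame sizes are illustrative assumptions; a real front end would typically hand the MFCC step to a library such as librosa.

```python
import math


def frame_signal(samples, frame_size, hop_size):
    """Split a list of samples into overlapping frames of frame_size samples."""
    return [
        samples[start:start + frame_size]
        for start in range(0, len(samples) - frame_size + 1, hop_size)
    ]


def rms(frame):
    """Root-mean-square energy of one frame."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


# Synthetic 16 kHz signal: 0.1 s of silence followed by 0.1 s of a 440 Hz tone
RATE = 16000
silence = [0.0] * 1600
tone = [0.5 * math.sin(2 * math.pi * 440 * n / RATE) for n in range(1600)]
signal = silence + tone

# 25 ms frames (400 samples) with a 10 ms hop (160 samples), common for speech
frames = frame_signal(signal, frame_size=400, hop_size=160)
energies = [rms(frame) for frame in frames]

print(len(frames))            # 18
print(round(energies[0], 3))  # 0.0 (pure silence)
print(round(energies[-1], 3)) # 0.354 (tone amplitude / sqrt(2))
```

Frames that straddle the silence/tone boundary get intermediate energies, which is exactly the kind of change a recognizer's front end picks up.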

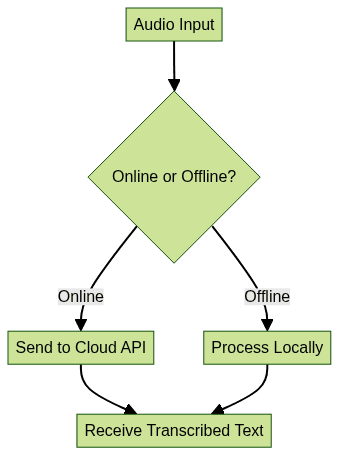

Online vs. Offline Speech to Text

Online services (like the Google Speech Recognition API) send audio to the cloud for transcription, offering high accuracy and broad language coverage. Offline solutions (like Vosk or CMU Sphinx) process audio locally, which suits privacy-sensitive and low-latency scenarios, sometimes at the cost of accuracy. If you're interested in building live audio experiences, consider exploring a Voice SDK to add scalable voice chat or audio room features to your Python applications.
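Because the two approaches fail in different ways (network errors online, lower accuracy offline), a common pattern is to try a cloud engine first and fall back to a local one. Below is a minimal, library-agnostic sketch. `transcribe_with_fallback`, `TranscriptionError`, and the two fake engines are hypothetical names. With SpeechRecognition, you would instead wrap calls such as `recognize_google` and catch `sr.RequestError` / `sr.UnknownValueError`.

```python
from typing import Callable, List, Sequence, Tuple

# A recognizer here is any function that takes raw audio bytes and returns text
Recognizer = Callable[[bytes], str]


class TranscriptionError(Exception):
    """Hypothetical error a recognizer raises when it cannot produce text."""


def transcribe_with_fallback(
    audio: bytes, engines: Sequence[Tuple[str, Recognizer]]
) -> Tuple[str, str]:
    """Try each (name, recognizer) in order; return (name, text) from the first success."""
    errors: List[str] = []
    for name, recognize in engines:
        try:
            return name, recognize(audio)
        except (TranscriptionError, ConnectionError, TimeoutError) as exc:
            errors.append(f"{name}: {exc}")  # remember why this engine failed
    raise TranscriptionError("all engines failed: " + "; ".join(errors))


# Hypothetical stand-ins: the cloud engine is unreachable, the local one works
def fake_cloud(audio: bytes) -> str:
    raise ConnectionError("network unreachable")


def fake_local(audio: bytes) -> str:
    return "hello world"


engine, text = transcribe_with_fallback(
    b"\x00\x00", [("cloud", fake_cloud), ("local", fake_local)]
)
print(engine, text)  # local hello world
```

Returning the engine name alongside the text makes it easy to log how often the fallback path is taken.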

Popular Python Speech to Text Libraries

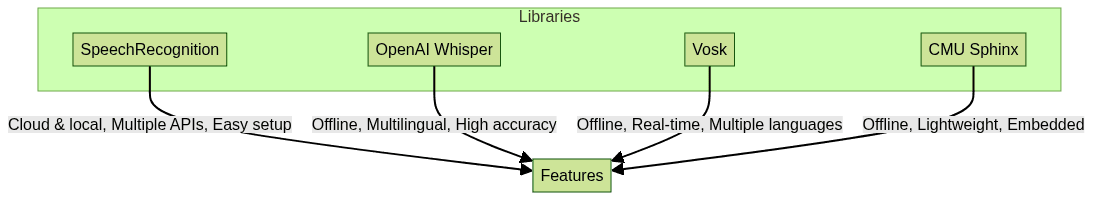

Python developers have a wealth of libraries for speech to text applications. The most prominent options in 2025 are:

- SpeechRecognition: A user-friendly wrapper supporting multiple cloud APIs (Google, IBM, Microsoft, etc.)

- OpenAI Whisper: State-of-the-art neural model supporting multilingual and offline transcription.

- Vosk: Robust offline speech recognition with wide language support.

- CMU Sphinx: Mature open-source offline recognizer, great for embedded systems.

If your project requires seamless integration of calling features, an embed video calling sdk can be a powerful addition, allowing you to embed video and audio calls alongside speech-to-text functionality.

Feature Comparison Table

| Library | Online/Offline | Language Support | Notable Features |
|---|---|---|---|
| SpeechRecognition | Online/Offline | Many (via APIs) | Multi-API, easy to use |
| OpenAI Whisper | Offline | Dozens (multilingual) | Neural, highly accurate |
| Vosk | Offline | 20+ | Fast, real-time, low resource |
| CMU Sphinx | Offline | 10+ | Lightweight, embeddable |

When to Choose Each Library

- SpeechRecognition: Best for quick integration and cloud-based accuracy.

- OpenAI Whisper: For high-accuracy, multilingual offline transcription.

- Vosk/CMU Sphinx: For privacy-focused, real-time, or resource-constrained environments.
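The rules of thumb above can be written down as a tiny helper, for example to document the choice in a project's setup code. This only restates this article's guidance. `suggest_library` and its two flags are hypothetical, and real projects should also weigh language coverage and accuracy measured on their own audio.

```python
def suggest_library(needs_offline: bool, constrained: bool) -> str:
    """Map two project requirements to the library suggested above.

    needs_offline: audio must stay on the device (privacy, no network).
    constrained:   real-time or resource-limited hardware.
    """
    if not needs_offline:
        return "SpeechRecognition"  # quick integration, cloud-based accuracy
    if constrained:
        return "Vosk/CMU Sphinx"    # lightweight, real-time, offline
    return "OpenAI Whisper"         # high-accuracy, multilingual, offline


for offline, limited in [(False, False), (True, True), (True, False)]:
    print(offline, limited, "->", suggest_library(offline, limited))
```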

If you need to add phone call capabilities to your Python application, check out a phone call api to enable seamless voice communication features.

SpeechRecognition Library

The SpeechRecognition library is one of the most popular choices for beginners and professionals alike. It offers a unified API over several speech-to-text engines, including the Google Speech Recognition API, IBM Speech to Text, and Microsoft Azure Speech, as well as offline engines such as CMU Sphinx. Its strengths are ease of use, broad API support, and an active community. Basic usage involves capturing audio from a microphone or file and passing it to your chosen engine for transcription. For those looking to integrate both speech recognition and calling features, a python video and audio calling sdk can streamline the process of building comprehensive communication solutions.

OpenAI Whisper

OpenAI Whisper is a neural speech recognition model from OpenAI. It provides state-of-the-art transcription quality, especially for multilingual audio, and can run entirely offline on modern hardware. Whisper is available both as an open-source Python package and through OpenAI's hosted API, so it works for local or cloud-based deployments. The larger models need substantial memory and run much faster on a GPU, but Whisper excels in accuracy and language coverage. If you're interested in building advanced voice-driven applications, integrating a Voice SDK can help you create interactive audio experiences such as live audio rooms or group discussions.

Vosk and CMU Sphinx

Vosk and CMU Sphinx are best suited for fully offline speech recognition. Vosk supports more than 20 languages and runs efficiently on desktops and embedded systems. CMU Sphinx is lightweight and well suited to embedded or resource-constrained environments, with support for over 10 languages. Both fit cases where privacy and local processing are essential, though they may lag behind cloud-based solutions in accuracy. For developers seeking to add robust calling features, exploring a phone call api can provide the necessary tools for integrating voice calls into your Python applications.

Step-by-Step: Setting Up Python Speech to Text

System Requirements

- Python 3.8 or newer (Python 3.7 has reached end of life, and current releases of libraries such as openai-whisper require 3.8+)

- pip for package management

- Working microphone (for live input)

- Internet connection (for online APIs, and for downloading models such as Whisper's the first time)

If your application requires both speech recognition and real-time communication, consider leveraging a python video and audio calling sdk to enable seamless audio and video interactions alongside speech-to-text features.

Installing Python Packages

To get started, install the necessary speech recognition packages. For most cases:

```bash
pip install SpeechRecognition pyaudio
pip install openai-whisper
pip install vosk
pip install pocketsphinx
```

- `pyaudio` is required for microphone support with SpeechRecognition.

- For Whisper, ensure you have `ffmpeg` installed (Whisper uses it to decode audio files).
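Before moving on, it can help to confirm what is actually installed. The sketch below uses only the standard library. `importlib.util.find_spec` checks whether each module can be imported (import names differ from pip names: `SpeechRecognition` imports as `speech_recognition` and `openai-whisper` as `whisper`), and `shutil.which` looks for `ffmpeg` on the PATH. The 3.8 minimum is an assumption; adjust it to what your chosen libraries require.

```python
import importlib.util
import shutil
import sys

MIN_PYTHON = (3, 8)  # assumption: adjust to your libraries' requirements

# Import names, not pip package names
MODULES = ["speech_recognition", "pyaudio", "whisper", "vosk", "pocketsphinx"]


def check_environment():
    """Return a dict of component name -> True/False availability."""
    report = {"python_ok": sys.version_info >= MIN_PYTHON}
    for name in MODULES:
        # find_spec locates a top-level module without importing it
        report[name] = importlib.util.find_spec(name) is not None
    report["ffmpeg"] = shutil.which("ffmpeg") is not None
    return report


report = check_environment()
for key, ok in report.items():
    print(f"{key:20} {'OK' if ok else 'not found'}")
```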

Setting Up Microphone/Audio Input

- Test your microphone outside Python first (e.g., OS sound recorder).

- Use the `sounddevice` Python package for more advanced audio capture if needed:

```bash
pip install sounddevice
```

Troubleshooting Common Issues

- PyAudio install errors: On Windows, use `pip install pipwin`, then `pipwin install pyaudio`.

- Microphone not detected: Verify drivers and permissions.

- Missing ffmpeg (for Whisper): Download it from https://ffmpeg.org/ and add it to your system PATH.

- Model download issues: Ensure a stable internet connection or download models manually.

Python Speech to Text Example Code

Example 1: Transcribing Speech from Microphone (SpeechRecognition, Google API)

```python
import speech_recognition as sr

recognizer = sr.Recognizer()

# Capture one phrase from the default microphone (requires PyAudio)
with sr.Microphone() as source:
    # Sample background noise briefly to set the energy threshold
    recognizer.adjust_for_ambient_noise(source, duration=0.5)
    print("Say something!")
    audio = recognizer.listen(source)

try:
    # Send the audio to Google's web speech API (needs internet)
    text = recognizer.recognize_google(audio)
    print(f"You said: {text}")
except sr.UnknownValueError:
    print("Google Speech Recognition could not understand audio")
except sr.RequestError as e:
    print(f"Could not request results: {e}")
```

Example 2: Transcribing an Audio File (SpeechRecognition)

```python
import speech_recognition as sr

recognizer = sr.Recognizer()

# AudioFile reads PCM WAV, AIFF/AIFF-C, and FLAC files
with sr.AudioFile("audio.wav") as source:
    audio = recognizer.record(source)  # read the whole file into memory

try:
    text = recognizer.recognize_google(audio)
    print(f"Transcribed: {text}")
except sr.UnknownValueError:
    print("Could not understand the audio")
except sr.RequestError as e:
    print(f"API error: {e}")
```
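Example 2 only works if `audio.wav` is in a format SpeechRecognition can read. A quick way to sanity-check a WAV file is Python's built-in `wave` module, sketched below together with a hypothetical `write_test_tone` helper that produces a known-good file for testing without a microphone. A pure tone will not transcribe to words; it only verifies the file handling.

```python
import math
import os
import struct
import tempfile
import wave


def describe_wav(path):
    """Return basic properties of an uncompressed WAV file."""
    with wave.open(path, "rb") as w:
        return {
            "channels": w.getnchannels(),
            "sample_width_bytes": w.getsampwidth(),  # 2 means 16-bit samples
            "sample_rate": w.getframerate(),
            "duration_s": w.getnframes() / w.getframerate(),
        }


def write_test_tone(path, seconds=1.0, rate=16000, freq=440.0):
    """Write a mono 16-bit PCM sine tone and return the path."""
    n_frames = int(seconds * rate)
    samples = (
        int(0.3 * 32767 * math.sin(2 * math.pi * freq * i / rate))
        for i in range(n_frames)
    )
    with wave.open(path, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)
        w.setframerate(rate)
        w.writeframes(b"".join(struct.pack("<h", s) for s in samples))
    return path


info = describe_wav(write_test_tone(os.path.join(tempfile.mkdtemp(), "tone.wav")))
print(info)
# {'channels': 1, 'sample_width_bytes': 2, 'sample_rate': 16000, 'duration_s': 1.0}
```

If `wave.open` raises `wave.Error`, the file is likely compressed or in an unusual encoding. Converting it with `ffmpeg -i input.mp3 -ac 1 -ar 16000 -c:a pcm_s16le audio.wav` (mono, 16 kHz, 16-bit PCM) usually resolves this.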

Example 3: Using OpenAI Whisper Locally

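```python
import whisper

# Options: tiny, base, small, medium, large (larger is slower but more accurate);
# the model is downloaded on first use
model = whisper.load_model("base")
result = model.transcribe("audio.mp3")  # ffmpeg must be installed to decode the file
print(result["text"])
```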
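Besides the full `text`, the dictionary returned by Whisper's `transcribe` contains a `segments` list. Each entry includes `start` and `end` times in seconds plus the segment `text`, which is enough to produce subtitles or captions. The whisper command-line tool can already write SRT files (`--output_format srt`). The sketch below only shows the mechanics, and the sample segments are made-up values shaped like Whisper's output.

```python
def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_srt(segments) -> str:
    """Convert a list of {'start', 'end', 'text'} dicts into SRT subtitle text."""
    blocks = []
    for index, seg in enumerate(segments, start=1):
        blocks.append(
            f"{index}\n"
            f"{srt_timestamp(seg['start'])} --> {srt_timestamp(seg['end'])}\n"
            f"{seg['text'].strip()}\n"
        )
    return "\n".join(blocks)  # blank line between subtitle blocks


# Made-up sample data shaped like Whisper's result["segments"]
sample_segments = [
    {"start": 0.0, "end": 2.5, "text": " Hello and welcome."},
    {"start": 2.5, "end": 65.25, "text": " Today we talk about speech to text."},
]
srt = segments_to_srt(sample_segments)
print(srt)
```

With a real transcript, pass `result["segments"]` instead of the sample list and write the string to a `.srt` file.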

Error Handling and Tips for Accuracy

- Always handle `UnknownValueError` and `RequestError` for robust applications.

- Use high-quality microphones and minimize background noise.

- Calibrate the microphone energy threshold with `recognizer.adjust_for_ambient_noise(source)`.

- For longer recordings, process the audio in segments or use batch processing.
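For the last tip, one simple approach is to cut a long WAV file into fixed-length chunks and transcribe each one separately. A stdlib-only sketch using the `wave` module follows. `split_wav` and `wav_duration` are hypothetical helpers, and the 2.5-second silent demo file is made up. Fixed-length cuts can split a word at a chunk boundary; splitting on silence avoids that but takes more work.

```python
import os
import tempfile
import wave


def split_wav(path, chunk_seconds, out_prefix):
    """Split a PCM WAV file into consecutive chunks of at most chunk_seconds."""
    paths = []
    with wave.open(path, "rb") as src:
        params = src.getparams()
        frames_per_chunk = int(params.framerate * chunk_seconds)
        index = 0
        while True:
            frames = src.readframes(frames_per_chunk)
            if not frames:  # empty bytes means end of file
                break
            out_path = f"{out_prefix}_{index:03d}.wav"
            with wave.open(out_path, "wb") as dst:
                dst.setparams(params)  # header frame count is patched on write
                dst.writeframes(frames)
            paths.append(out_path)
            index += 1
    return paths


def wav_duration(path):
    with wave.open(path, "rb") as w:
        return w.getnframes() / w.getframerate()


# Demo: a 2.5-second silent 16-bit mono WAV at 8 kHz, split into 1 s chunks
demo_dir = tempfile.mkdtemp()
demo_path = os.path.join(demo_dir, "long.wav")
with wave.open(demo_path, "wb") as w:
    w.setnchannels(1)
    w.setsampwidth(2)
    w.setframerate(8000)
    w.writeframes(b"\x00\x00" * 20000)  # 20000 frames = 2.5 s

chunks = split_wav(demo_path, 1.0, os.path.join(demo_dir, "chunk"))
durations = [wav_duration(p) for p in chunks]
print(durations)  # [1.0, 1.0, 0.5]
```

Each chunk file can then go through Example 2's `sr.AudioFile` flow or Whisper's `transcribe`.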

If you're building a video conferencing or collaboration platform, integrating a Video Calling API can help you add high-quality audio and video communication features to your Python projects.

Advanced Features and Real-World Applications

Speech to text in Python is not limited to basic transcription. Advanced use cases include:

- Real-time Transcription: Process microphone input continuously for live captions or voice commands.

- Noisy Environments: Use noise reduction techniques or resilient models like Whisper/Vosk.

- Batch Processing: Transcribe large collections of audio files by looping over them in a script.

- Integration: Combine with NLP, chatbots, or automation workflows for intelligent assistants, meeting transcription, or accessibility solutions.
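For the voice-command case, the transcript usually has to be matched against a set of known phrases. A minimal sketch follows, assuming a hypothetical `COMMANDS` table and plain whole-word phrase matching. Production assistants typically use intent classification instead.

```python
import re
from typing import Callable, Dict, Optional

# Hypothetical command table: spoken phrase -> action to run
COMMANDS: Dict[str, Callable[[], str]] = {
    "lights on": lambda: "turning lights on",
    "lights off": lambda: "turning lights off",
    "what time is it": lambda: "checking the clock",
}


def normalize(text: str) -> str:
    """Lowercase and drop punctuation so 'Lights on!' matches 'lights on'."""
    return re.sub(r"[^\w\s]", "", text.lower()).strip()


def dispatch(transcript: str) -> Optional[str]:
    """Run the first command whose phrase appears as whole words in the transcript."""
    cleaned = normalize(transcript)
    for phrase, action in COMMANDS.items():
        if re.search(rf"\b{re.escape(phrase)}\b", cleaned):
            return action()
    return None  # no known command was spoken


print(dispatch("Lights on, please!"))  # turning lights on
print(dispatch("Play some music"))     # None
```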

Example: Batch transcribing audio files with Whisper:

```python
import os

import whisper

model = whisper.load_model("base")
audio_dir = "audio_folder"

# Transcribe every MP3 in the folder in a stable (sorted) order
for name in sorted(os.listdir(audio_dir)):
    if name.lower().endswith(".mp3"):
        result = model.transcribe(os.path.join(audio_dir, name))
        print(f"{name}: {result['text']}")
```

Limitations, Challenges, and Best Practices

While Python speech to text tools are powerful, there are limitations:

- Accuracy: Varies by accent, language, and audio quality; cloud APIs and large neural models such as Whisper generally outperform lightweight offline recognizers like Vosk or CMU Sphinx.

- Language Support: Not all libraries support all languages equally; check documentation.

- Privacy: Online APIs send data to the cloud; use offline models for sensitive data.

- Best Practices:

  - Preprocess audio (noise reduction, normalization)

  - Choose APIs/models suited for your use case

  - Always implement error handling and fallbacks
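To choose between engines on evidence rather than reputation, a common metric is word error rate (WER). It is the minimum number of word substitutions, deletions, and insertions needed to turn the engine's output into a reference transcript, divided by the number of reference words. A self-contained sketch using the standard edit-distance dynamic program follows. The sample sentences are made up; packages such as jiwer provide the same metric with text normalization built in.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    # prev[j]: edit distance between the reference words processed so far
    # and the first j hypothesis words
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution or match
            )
        prev = curr
    return prev[len(hyp)] / max(len(ref), 1)


# One deletion ("the") and one insertion ("now") over 4 reference words
print(word_error_rate("turn the lights on", "turn lights on now"))  # 0.5
```

Running this over a handful of your own recordings, with hand-checked reference transcripts, gives a direct comparison between, say, a cloud API and a local Whisper model.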

Conclusion: Future of Python Speech to Text

As of 2025, Python speech to text continues to advance with AI-driven models, real-time processing, and expanding multilingual support. Developers now have robust tools for integrating speech recognition into diverse applications, from productivity apps to accessibility solutions. Staying updated with the latest libraries and best practices will help you harness the full potential of voice technology in Python.

Ready to build your own voice-powered or communication-enabled application? Try it for free and start exploring the possibilities today!

FAQ