Introduction to Azure Speech to Text

Azure Speech to Text, part of Azure AI Services (formerly Azure Cognitive Services), is Microsoft’s enterprise-grade speech recognition platform. It lets developers convert spoken language into readable text, either in real time or through batch transcription of recorded audio. As voice-driven interfaces, automated meeting transcription, and accessibility features become essential in modern applications, Azure Speech to Text provides scalable, secure, and customizable options to meet diverse needs. Features such as custom speech models, speaker diarization, and multilingual support make it a strong choice for developers and enterprises that need reliable transcription and speech analytics.

Key Features of Azure Speech to Text

Real-Time Transcription

Azure Speech to Text delivers low-latency transcription, enabling live captioning, voice-driven commands, and instant speech analytics. This makes it well suited to interactive applications, video conferencing, and accessibility tools. For developers building interactive voice experiences, integrating a Voice SDK can further enhance real-time audio capabilities alongside Azure’s transcription.

Batch Transcription

For processing large audio archives or pre-recorded files, batch transcription converts audio asynchronously and handles long-form content such as meetings, webinars, and call center recordings. If your workflow involves both video and audio, a Video Calling API can streamline capturing and managing multimedia content before transcription.

Custom Speech Models

Developers can train custom models tailored to industry jargon, product names, or regional accents. This can substantially improve accuracy for specialized vocabularies and acoustic environments. When building applications that require both voice recognition and real-time communication, integrating a Voice SDK can help handle the audio interaction side.

Multilingual and Accent Support

Azure supports more than 100 languages and variants, offering robust recognition across global user bases. Accent adaptation further improves reliability in international deployments. For projects that require live translation or multilingual streaming, a Live Streaming API SDK can complement Azure’s capabilities by enabling interactive broadcasts with real-time language support.

Speaker Diarization and Language Identification

Speaker diarization segments audio by speaker, so transcripts show who said what and are easier to act on. Language identification detects which of a set of candidate languages is being spoken, so multilingual audio can be transcribed in the detected language. If you’re developing group audio experiences, such as live audio rooms, a Voice SDK can be integrated to manage speaker roles and enhance user engagement.

Pronunciation Assessment

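This feature evaluates spoken language for pronunciation quality, typically against a reference text. It reports scores such as accuracy, fluency, and completeness, along with an overall pronunciation score. This is vital for language learning apps, training, and assessment platforms. For educational or assessment solutions, combining Azure’s assessment features with a Voice SDK enables real-time feedback and interactive learning environments.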
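Pronunciation assessment can return a detailed JSON result. In the Python SDK, this result is available through `result.properties.get(speechsdk.PropertyId.SpeechServiceResponse_JsonResult)`. The result holds utterance-level scores and per-word scores.

The sketch below flags words that a learning app might highlight as feedback. It assumes the detailed output layout:

- `NBest[0].PronunciationAssessment` holds the overall scores.
- `NBest[0].Words[*].PronunciationAssessment` holds `AccuracyScore` and `ErrorType` for each word.

The sample payload, the hypothetical `flag_words` helper, and the threshold of 60 are illustrative only. Verify field names against the current documentation.

```python
import json

# Hypothetical sample shaped like the detailed pronunciation assessment JSON (illustrative values).
SAMPLE_RESULT = json.dumps({
    "RecognitionStatus": "Success",
    "NBest": [{
        "Display": "Hello world.",
        "PronunciationAssessment": {
            "AccuracyScore": 72.0, "FluencyScore": 90.0,
            "CompletenessScore": 100.0, "PronScore": 80.5,
        },
        "Words": [
            {"Word": "hello", "PronunciationAssessment": {"AccuracyScore": 95.0, "ErrorType": "None"}},
            {"Word": "world", "PronunciationAssessment": {"AccuracyScore": 48.0, "ErrorType": "Mispronunciation"}},
        ],
    }],
})


def flag_words(result_json: str, threshold: float = 60.0) -> list[tuple[str, float, str]]:
    """Hypothetical helper: return (word, accuracy, error_type) for words scoring below
    `threshold` (an arbitrary example value) or carrying an error type other than "None"."""
    best = json.loads(result_json)["NBest"][0]
    flagged = []
    for word in best.get("Words", []):
        assessment = word.get("PronunciationAssessment", {})
        accuracy = assessment.get("AccuracyScore", 0.0)
        error_type = assessment.get("ErrorType", "None")
        if accuracy < threshold or error_type != "None":
            flagged.append((word["Word"], accuracy, error_type))
    return flagged


if __name__ == "__main__":
    for word, accuracy, error in flag_words(SAMPLE_RESULT):
        print(f"{word}: accuracy {accuracy:.0f} ({error})")
```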

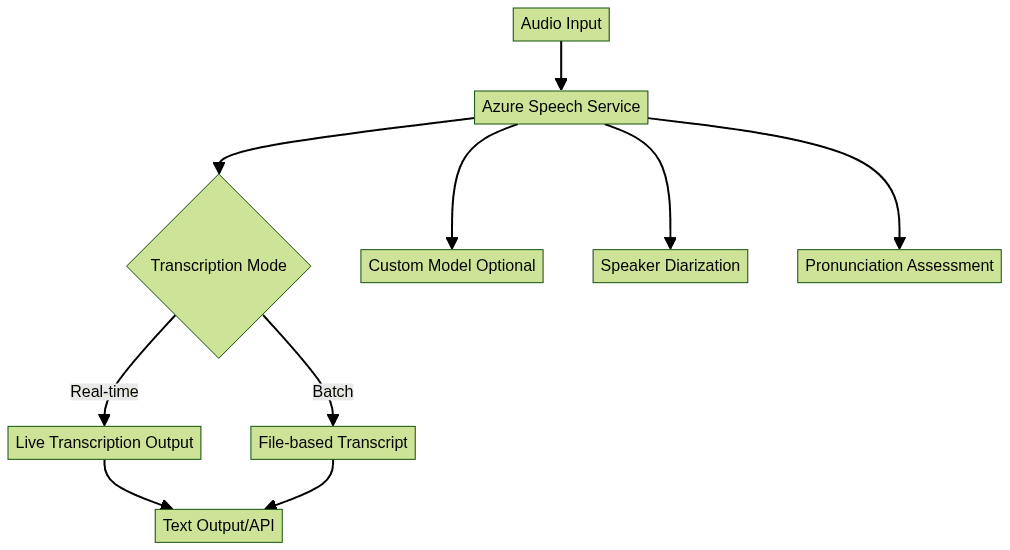

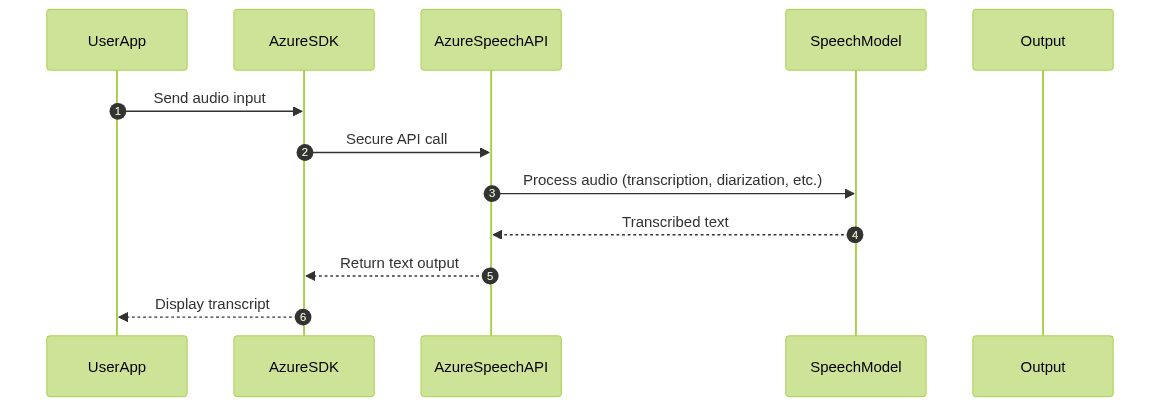

How Azure Speech to Text Works

Architecture and Workflow

The workflow begins with an audio stream or file, which is sent to the Azure Speech endpoint through the Speech SDK or the REST API. The service processes the audio, optionally applying custom models, diarization, or language identification. The transcript is returned in real time or as a downloadable file, depending on the mode. Developers working with Python can also use a Python video and audio calling SDK to capture and transmit audio streams efficiently before sending them to Azure for transcription.

Integration Points

- SDKs: Azure provides Speech SDK client libraries for C#, Python, JavaScript, and more. For those developing in JavaScript, a JavaScript video and audio calling SDK can be used to integrate real-time audio and video features alongside speech recognition.

- REST API: For language-agnostic integration, the REST API accepts plain HTTPS requests. The REST API for short audio is limited to 60 seconds of audio per request; longer recordings belong in batch transcription.

Implementing Azure Speech to Text

Prerequisites and Setup

- Azure Subscription: Create or use an existing Azure account.

- Speech Resource: Deploy a Speech service resource in the Azure portal.

- API Keys/Endpoints: Obtain credentials for SDK or REST API usage.
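Rather than hard-coding the key and region as the SDK examples below do, a common pattern is to read them from environment variables.

This minimal sketch assumes variables named `SPEECH_KEY` and `SPEECH_REGION`. These names follow the convention in Microsoft's quickstarts, but any names work. The sketch also derives the short-audio REST host from the region.

`SpeechSettings` and `load_settings` are hypothetical helpers, not part of any Azure library.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class SpeechSettings:
    """Hypothetical container for Speech resource credentials."""
    key: str
    region: str

    @property
    def stt_host(self) -> str:
        # Regional host used by the speech-to-text REST API for short audio.
        return f"{self.region}.stt.speech.microsoft.com"


def load_settings(env=os.environ) -> SpeechSettings:
    """Hypothetical helper: read key and region from the environment, failing loudly if missing."""
    missing = [name for name in ("SPEECH_KEY", "SPEECH_REGION") if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return SpeechSettings(key=env["SPEECH_KEY"], region=env["SPEECH_REGION"])
```

With the Python SDK, the result plugs into `speechsdk.SpeechConfig(subscription=settings.key, region=settings.region)`.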

Using the Azure SDK

C# Example

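```csharp
using System;
using System.Threading.Tasks;
using Microsoft.CognitiveServices.Speech;

class Program
{
    static async Task Main(string[] args)
    {
        // Replace with your Speech resource key and region (for example "westus").
        var config = SpeechConfig.FromSubscription("YourSubscriptionKey", "YourRegion");

        // With no AudioConfig, the recognizer listens on the default microphone.
        using var recognizer = new SpeechRecognizer(config);

        Console.WriteLine("Speak into your microphone.");
        // RecognizeOnceAsync returns after a single utterance.
        var result = await recognizer.RecognizeOnceAsync();

        if (result.Reason == ResultReason.RecognizedSpeech)
            Console.WriteLine($"Recognized: {result.Text}");
        else
            Console.WriteLine($"Recognition ended with reason: {result.Reason}");
    }
}
```

Python Example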

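```python
import azure.cognitiveservices.speech as speechsdk

# Replace with your Speech resource key and region (for example "westus").
speech_config = speechsdk.SpeechConfig(subscription="YourSubscriptionKey", region="YourRegion")
# With no audio_config, the recognizer listens on the default microphone.
speech_recognizer = speechsdk.SpeechRecognizer(speech_config=speech_config)

print("Speak into your microphone.")
result = speech_recognizer.recognize_once()  # returns after a single utterance

if result.reason == speechsdk.ResultReason.RecognizedSpeech:
    print(f"Recognized: {result.text}")
else:
    print(f"Recognition ended with reason: {result.reason}")
```

Using the REST API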

Sample API Request

```http
POST https://<region>.stt.speech.microsoft.com/speech/recognition/conversation/cognitiveservices/v1?language=en-US
Ocp-Apim-Subscription-Key: YourSubscriptionKey
Content-Type: audio/wav; codecs=audio/pcm; samplerate=16000

<binary audio data>
```
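The same request can be built with Python's standard library. This sketch constructs the `urllib.request.Request` without sending it, so it can be inspected offline; sending is a separate call.

`build_stt_request` and `send` are hypothetical helper names. The region, key, and audio bytes are placeholders. The `Content-Type` value is the one documented for 16 kHz, 16-bit mono PCM WAV.

```python
import json
import urllib.parse
import urllib.request


def build_stt_request(region: str, key: str, wav_bytes: bytes,
                      language: str = "en-US") -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a POST to the short-audio endpoint."""
    query = urllib.parse.urlencode({"language": language})
    url = (f"https://{region}.stt.speech.microsoft.com/speech/recognition/"
           f"conversation/cognitiveservices/v1?{query}")
    headers = {
        "Ocp-Apim-Subscription-Key": key,
        # Documented content type for 16 kHz, 16-bit mono PCM WAV audio.
        "Content-Type": "audio/wav; codecs=audio/pcm; samplerate=16000",
        "Accept": "application/json",
    }
    return urllib.request.Request(url, data=wav_bytes, headers=headers, method="POST")


def send(request: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Hypothetical helper: send the request and decode the JSON body (needs network and a valid key)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Note that `urllib` normalizes header names with `str.capitalize()`. A stored header is therefore read back as `request.get_header("Ocp-apim-subscription-key")`.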

Sample Response

```json
{
  "RecognitionStatus": "Success",
  "DisplayText": "This is a sample transcription.",
  "Offset": 10000000,
  "Duration": 20000000
}
```
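`Offset` and `Duration` are expressed in ticks of 100 nanoseconds, so 10,000,000 ticks equal one second.

The hypothetical `summarize` helper below converts the sample response into seconds. It also checks `RecognitionStatus`, because `DisplayText` is only meaningful when the status is `Success`.

```python
import json

TICKS_PER_SECOND = 10_000_000  # one tick = 100 nanoseconds


def summarize(response_body: str) -> dict:
    """Hypothetical helper: display text plus start/end times in seconds for a simple-format response."""
    data = json.loads(response_body)
    if data.get("RecognitionStatus") != "Success":
        raise ValueError(f"Recognition failed: {data.get('RecognitionStatus')}")
    start = data["Offset"] / TICKS_PER_SECOND
    duration = data["Duration"] / TICKS_PER_SECOND
    return {"text": data["DisplayText"], "start_s": start, "end_s": start + duration}


sample = '''{
  "RecognitionStatus": "Success",
  "DisplayText": "This is a sample transcription.",
  "Offset": 10000000,
  "Duration": 20000000
}'''
print(summarize(sample))
# {'text': 'This is a sample transcription.', 'start_s': 1.0, 'end_s': 3.0}
```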

For applications that require both audio and video input, integrating a Video Calling API can help capture high-quality streams for transcription and analysis.

Customization and Advanced Features

Building Custom Speech Models

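Custom Speech, available in Speech Studio, lets you upload domain-specific text or audio with human-labeled transcripts. You can then train a model that recognizes domain-specific terms and deploy it to a custom endpoint, increasing transcription accuracy for niche vocabularies.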
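For the audio-plus-transcript dataset type, Custom Speech expects a .zip of audio files together with a `trans.txt` file. Each line of `trans.txt` holds an audio file name, a tab, and the human-labeled transcription.

This minimal packaging sketch assumes the WAV files already exist on disk. `build_trans_txt` and `package_dataset` are hypothetical helpers, and the file names are placeholders. Check the current docs for the encoding and audio-format requirements before uploading.

```python
import zipfile
from pathlib import Path


def build_trans_txt(transcripts: dict[str, str]) -> str:
    """Hypothetical helper: render {audio_file_name: transcript} as tab-separated trans.txt lines."""
    lines = []
    for name, text in transcripts.items():
        if "\t" in text or "\n" in text:
            raise ValueError(f"Transcript for {name} must be a single line without tabs")
        lines.append(f"{name}\t{text.strip()}")
    return "\n".join(lines) + "\n"


def package_dataset(audio_dir: Path, transcripts: dict[str, str], out_zip: Path) -> None:
    """Hypothetical helper: zip each referenced audio file plus trans.txt at the archive root."""
    with zipfile.ZipFile(out_zip, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for name in transcripts:
            archive.write(audio_dir / name, arcname=name)
        archive.writestr("trans.txt", build_trans_txt(transcripts))
```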

Phrase Lists and Domain Adaptation

Phrase lists bias recognition toward specific terms (e.g., product names, acronyms) at runtime, without training a custom model. This is especially useful in sectors like healthcare or finance where terminology matters.
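In the Python Speech SDK, a phrase list is attached to an existing recognizer through `speechsdk.PhraseListGrammar.from_recognizer` and its `addPhrase` method.

The sketch below first normalizes a glossary by collapsing whitespace and removing case-insensitive duplicates, then applies it. The SDK import sits inside `apply_phrase_list`, so the pure helper can be used without the SDK installed.

`normalize_phrases`, `apply_phrase_list`, and the sample glossary are hypothetical.

```python
def normalize_phrases(raw_terms: list[str]) -> list[str]:
    """Hypothetical helper: collapse whitespace, drop blanks, de-duplicate case-insensitively."""
    seen: set[str] = set()
    phrases = []
    for term in raw_terms:
        cleaned = " ".join(term.split())
        if cleaned and cleaned.lower() not in seen:
            seen.add(cleaned.lower())
            phrases.append(cleaned)
    return phrases


def apply_phrase_list(recognizer, raw_terms: list[str]) -> None:
    """Hypothetical helper: bias an existing speechsdk.SpeechRecognizer toward the given terms."""
    import azure.cognitiveservices.speech as speechsdk  # requires the Speech SDK

    grammar = speechsdk.PhraseListGrammar.from_recognizer(recognizer)
    for phrase in normalize_phrases(raw_terms):
        grammar.addPhrase(phrase)


glossary = ["  Contoso ", "HbA1c", "contoso", "", "EBITDA margin"]
print(normalize_phrases(glossary))
# ['Contoso', 'HbA1c', 'EBITDA margin']
```

Call `apply_phrase_list(speech_recognizer, glossary)` before starting recognition so the list is in effect for the first utterance.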

Integrating OpenAI Whisper

The OpenAI Whisper model is also available on Azure, through Azure OpenAI Service and through batch transcription in the Speech service. Whisper is a deep-learning model trained on large, diverse data, which can help accuracy in noisy or multilingual recordings.

Custom Model Usage Example (Python)

```python
import azure.cognitiveservices.speech as speechsdk

speech_config = speechsdk.SpeechConfig(subscription="YourSubscriptionKey", region="YourRegion")
# Route recognition to your deployed Custom Speech endpoint instead of the base model.
speech_config.endpoint_id = "YourCustomModelEndpointId"

speech_recognizer = speechsdk.SpeechRecognizer(speech_config=speech_config)
result = speech_recognizer.recognize_once()
print(f"Custom Model Recognized: {result.text}")
```

Practical Use Cases

Call Center Analytics

Transcribe and analyze customer-agent conversations to extract insights, improve quality assurance, and monitor compliance in real time. For organizations running live audio rooms or voice-driven customer support, a Voice SDK can be integrated to facilitate real-time communication and data capture for analytics.

Live Captioning for Accessibility

Provide accurate, real-time captions for meetings, lectures, or broadcasts, enhancing accessibility for users with hearing impairments and boosting engagement.

Meeting and Event Transcription

Automatically generate searchable, shareable transcripts from meetings and events, streamlining documentation and enabling advanced analytics.
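Recorded meetings are usually a good fit for batch transcription.

This sketch assumes the v3.1 batch REST API (`POST https://<region>.api.cognitive.microsoft.com/speechtotext/v3.1/transcriptions`); newer API versions exist. It shows a JSON body for a job with speaker diarization enabled. Submitting it is an ordinary POST carrying the `Ocp-Apim-Subscription-Key` header.

The blob URL, SAS placeholder, display name, and `build_batch_job` helper are all hypothetical.

```python
import json


def build_batch_job(content_urls: list[str], locale: str = "en-US",
                    display_name: str = "Meeting transcription",
                    diarization: bool = True) -> dict:
    """Hypothetical helper: request body for creating a batch transcription job (v3.1 field names)."""
    if not content_urls:
        raise ValueError("At least one audio URL is required")
    return {
        "contentUrls": content_urls,  # URLs the service can read, e.g. blob SAS URLs
        "locale": locale,
        "displayName": display_name,
        "properties": {
            "diarizationEnabled": diarization,  # label which speaker said what
            "wordLevelTimestampsEnabled": True,
        },
    }


body = build_batch_job(["https://example.blob.core.windows.net/meetings/standup.wav?<sas-token>"])
print(json.dumps(body, indent=2))
```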

Video Translation and Dubbing

Transcribe and translate audio from videos to facilitate multilingual access, global content distribution, and automated dubbing workflows.
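For subtitle or dubbing pipelines, recognized segments are commonly turned into a caption format such as SubRip (SRT).

This sketch assumes each segment is a hypothetical `(text, offset, duration)` tuple. Times use the same 100-nanosecond ticks as the REST response's `Offset` and `Duration` fields. The function names are illustrative.

```python
TICKS_PER_MS = 10_000  # 1 tick = 100 ns


def srt_timestamp(ticks: int) -> str:
    """Format a tick count as an SRT timestamp HH:MM:SS,mmm."""
    total_ms = ticks // TICKS_PER_MS
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    seconds, millis = divmod(rem, 1_000)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d},{millis:03d}"


def to_srt(segments: list[tuple[str, int, int]]) -> str:
    """Render hypothetical (text, offset_ticks, duration_ticks) segments as numbered SRT cues."""
    cues = []
    for index, (text, offset, duration) in enumerate(segments, start=1):
        start, end = srt_timestamp(offset), srt_timestamp(offset + duration)
        cues.append(f"{index}\n{start} --> {end}\n{text}\n")
    return "\n".join(cues)  # a blank line separates consecutive cues


print(to_srt([("This is a sample transcription.", 10_000_000, 20_000_000)]))
```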

Security, Compliance, and Privacy

Data Security and Privacy

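Azure Speech to Text encrypts data in transit and at rest. Developers control data residency through the region in which they create the Speech resource. They can also apply their own retention and access policies to stored data such as batch transcription results and custom model datasets.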

Compliance Standards

The service is covered by Microsoft’s compliance program, which includes ISO certifications, support for HIPAA workloads under a Business Associate Agreement, and GDPR commitments. This makes it a viable choice for regulated industries.

Pricing and Deployment Options

Cost Considerations

Pricing is based on usage, measured as hours of audio processed, with separate rates for standard and custom models; deployed custom model endpoints also carry an hourly hosting charge. A free (F0) tier with a monthly allowance of audio hours allows initial experimentation. If you want to explore these features, you can try it for free and experience the platform’s capabilities firsthand.

Cloud vs Edge Deployment

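Deploy in Azure’s cloud for scalability, or run Azure Speech containers on your own infrastructure or edge devices for low latency and data locality. Standard (connected) containers still report usage to Azure for billing. Fully disconnected containers for offline scenarios are available only through an approval process.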

Getting Started and Best Practices

- Create your Speech resource in the Azure portal.

- Install SDKs or set up API integration.

- Collect high-quality audio for best results.

- Use phrase lists and custom models to maximize accuracy.

- Monitor logs and tune models iteratively for continual improvement.
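One concrete check for "high-quality audio" is to confirm that WAV files match 16 kHz, 16-bit, mono PCM. This is the format the short-audio REST API documents for WAV input and the Speech SDK's default input stream format.

A minimal sketch with Python's `wave` module follows. `check_wav` and `make_silence` are hypothetical helpers, and other formats the service accepts (for example, in batch transcription) are not covered.

```python
import io
import wave

EXPECTED = {"channels": 1, "sample_width_bytes": 2, "sample_rate_hz": 16_000}


def check_wav(source) -> list[str]:
    """Hypothetical helper: list mismatches against 16 kHz/16-bit/mono; empty list means it matches."""
    with wave.open(source, "rb") as wav:  # the wave module only reads PCM WAV data
        actual = {
            "channels": wav.getnchannels(),
            "sample_width_bytes": wav.getsampwidth(),
            "sample_rate_hz": wav.getframerate(),
        }
    return [f"{name}: expected {EXPECTED[name]}, got {value}"
            for name, value in actual.items() if value != EXPECTED[name]]


def make_silence(rate: int, channels: int, seconds: float = 0.1) -> io.BytesIO:
    """Hypothetical test helper: an in-memory 16-bit WAV containing silence."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(channels)
        wav.setsampwidth(2)
        wav.setframerate(rate)
        wav.writeframes(b"\x00\x00" * channels * int(rate * seconds))
    buffer.seek(0)
    return buffer


print(check_wav(make_silence(16_000, 1)))  # []
print(check_wav(make_silence(44_100, 2)))
```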

Conclusion: Why Choose Azure Speech to Text?

In 2025, Azure Speech to Text combines real-time and batch transcription, customization, and enterprise-grade security controls. This gives developers and enterprises a flexible, accurate foundation for speech-driven applications across industries.


FAQ