Introduction to HLS Protocol

The landscape of online video streaming has transformed dramatically in the last decade, with the HLS protocol (HTTP Live Streaming) emerging as a cornerstone technology for delivering content worldwide. The HLS protocol, developed by Apple, enables streaming of video and audio over the internet using ordinary HTTP servers. Today, whether you’re watching a live sports event or catching up on your favorite series on demand, chances are the HLS protocol is powering your experience. It matters because it lets broadcasters, developers, and platforms reach diverse audiences across devices efficiently and reliably.

What is HLS Protocol?

The HLS protocol is an adaptive bitrate streaming protocol introduced by Apple in 2009. Originally designed to address the limitations of Flash-based streaming and to ensure smooth video delivery on iOS devices, HLS has become a de facto standard for internet video. It works by breaking media content into small, HTTP-based file segments, which are then served to clients along with manifest files (typically with a .m3u8 extension) describing how to assemble the stream.

Key features of the HLS protocol include:

- Adaptive Bitrate Streaming: HLS can automatically switch between different quality levels based on network conditions, minimizing buffering and providing a consistent user experience.

- HTTP-based Delivery: HLS leverages regular web servers and CDNs for scalability and reliability.

- Cross-device Compatibility: HLS is natively supported on iOS, macOS, many browsers, smart TVs, and set-top boxes.

For developers building interactive applications, integrating a Video Calling API alongside HLS can enable seamless transitions between live streaming and real-time communication.

By employing a simple yet robust architecture, the HLS protocol addresses many challenges of legacy streaming protocols, making it a foundational technology in 2025’s streaming ecosystem.

How Does the HLS Protocol Work?

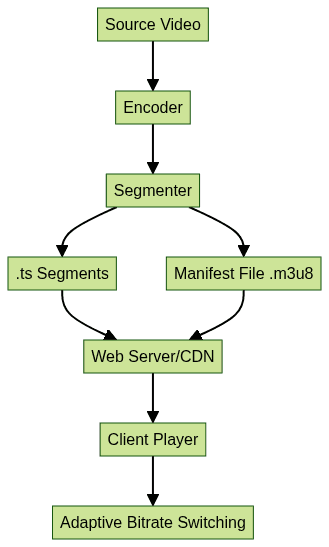

HLS Streaming Workflow

At its core, the HLS protocol segments media files into smaller chunks and delivers these segments to clients via HTTP. Here’s a high-level overview of the workflow:

- Segmenting & Encoding: The source video/audio is encoded into multiple quality levels and split into small segments (usually 2-6 seconds each). Each segment is an individual file (commonly .ts for video).

- Manifest Files (.m3u8): A playlist file (the manifest) lists all available segments and their quality levels. The client uses this file to fetch and play the stream.

- Adaptive Bitrate Streaming: The client monitors bandwidth and CPU conditions, dynamically switching between quality levels for the best possible playback experience.
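The adaptive bitrate step in the workflow above can be sketched in a few lines. This is a minimal illustration, not how any particular player implements it: the `Variant` type, the `pick_variant` function, and the `safety_factor` headroom are hypothetical names and values chosen for the example, and real players also weigh buffer level and CPU load.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    bandwidth: int  # bits per second, as declared in the manifest
    uri: str


def pick_variant(variants, measured_bps, safety_factor=0.8):
    """Return the highest-bandwidth variant that fits the measured throughput.

    safety_factor is an assumed headroom margin, not an HLS-defined value.
    Falls back to the lowest variant when nothing fits the budget.
    """
    budget = measured_bps * safety_factor
    ordered = sorted(variants, key=lambda v: v.bandwidth)
    best = ordered[0]
    for variant in ordered:
        if variant.bandwidth <= budget:
            best = variant
    return best


# Illustrative bitrate ladder with three quality levels.
LADDER = [
    Variant(800_000, "low/playlist.m3u8"),
    Variant(1_400_000, "mid/playlist.m3u8"),
    Variant(2_800_000, "high/playlist.m3u8"),
]
```

With this ladder, a measured throughput of 2 Mbps leaves a 1.6 Mbps budget, so the sketch selects the mid rendition; a drop to 500 kbps falls back to the lowest rendition rather than stalling.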

If you're looking to embed a video calling SDK into your web or mobile applications, you can complement HLS-based streaming with interactive video features for a richer user experience.

Adaptive Bitrate Example

If a user’s bandwidth drops, the player automatically switches to a lower bitrate stream by referencing the .m3u8 manifest, ensuring uninterrupted playback. Conversely, on faster connections, higher quality can be delivered seamlessly.

HLS Protocol Code Example

Below is a simplified example of an HLS manifest file (playlist.m3u8):

    #EXTM3U
    #EXT-X-VERSION:3
    #EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360
    low/playlist.m3u8
    #EXT-X-STREAM-INF:BANDWIDTH=1400000,RESOLUTION=1280x720
    mid/playlist.m3u8
    #EXT-X-STREAM-INF:BANDWIDTH=2800000,RESOLUTION=1920x1080
    high/playlist.m3u8

Explanation:

- #EXTM3U is the file header.

- #EXT-X-STREAM-INF tags define different streams (bitrates and resolutions).

- Each stream points to another manifest for that specific quality. The player chooses among these based on real-time conditions.
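To make the manifest structure concrete, here is a small sketch of how a client might read the stream entries out of such a file. The function name `parse_master_playlist` and the regular expression are assumptions made for illustration; production players handle many more tags and edge cases.

```python
import re

# Matches KEY=value pairs; a value is either a quoted string or an unquoted token.
ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def parse_master_playlist(text):
    """Return a list of dicts, one per #EXT-X-STREAM-INF entry."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if not lines or lines[0] != "#EXTM3U":
        raise ValueError("not an HLS playlist: missing #EXTM3U header")

    variants = []
    pending = None  # attributes waiting for the URI line that follows them
    for line in lines[1:]:
        if line.startswith("#EXT-X-STREAM-INF:"):
            attrs = dict(ATTR_RE.findall(line.split(":", 1)[1]))
            pending = {key: value.strip('"') for key, value in attrs.items()}
        elif not line.startswith("#") and pending is not None:
            pending["URI"] = line
            pending["BANDWIDTH"] = int(pending["BANDWIDTH"])
            variants.append(pending)
            pending = None
    return variants


MASTER = """#EXTM3U
#EXT-X-VERSION:3
#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360
low/playlist.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=1400000,RESOLUTION=1280x720
mid/playlist.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2800000,RESOLUTION=1920x1080
high/playlist.m3u8
"""
```

Calling `parse_master_playlist(MASTER)` yields three entries, each pairing the declared bandwidth and resolution with the URI of the per-quality manifest.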

For developers working with web technologies, using a JavaScript video and audio calling SDK can help you quickly add real-time communication features that complement HLS streaming.

The HLS protocol thus provides flexibility and reliability for adaptive bitrate streaming in diverse network environments.

HLS Protocol vs Other Streaming Protocols

Understanding how the HLS protocol compares to other popular streaming protocols is crucial when architecting a modern streaming solution.

HLS vs RTMP

- RTMP (Real-Time Messaging Protocol) was the industry standard for low-latency live streaming, especially for Flash-based video. However, RTMP requires specialized servers and is less compatible with modern devices and browsers.

- HLS protocol uses HTTP, is firewall-friendly, and works natively on most platforms. While HLS typically has higher latency than RTMP, its scalability and device support are superior.

If you're targeting mobile platforms, exploring WebRTC Android solutions can be beneficial for building low-latency, real-time video experiences alongside HLS.

HLS vs MPEG-DASH

- MPEG-DASH is an open standard for adaptive streaming, similar to the HLS protocol. Both use segmenting and manifest files, but DASH is codec and container agnostic, while HLS traditionally favors MPEG-2 TS segments carrying H.264 video and AAC audio (with more recent support for fragmented MP4).

- HLS enjoys broader native support, especially in the Apple ecosystem, while DASH is more widely adopted for Android and smart TVs.

For cross-platform development, especially with frameworks like Flutter, Flutter WebRTC provides a modern approach to adding real-time video communication to your apps, complementing HLS for streaming.

Advantages and Disadvantages

- HLS protocol: + Broad compatibility, CDN-friendly, robust adaptive streaming. – Higher latency, less flexible codec/container options.

- RTMP: + Low latency, mature ecosystem. – Poor device support, requires special servers.

- MPEG-DASH: + Open standard, codec flexibility. – Less native support, especially on Apple devices.

Use Cases

- HLS protocol: Live and on-demand content for web, mobile, and connected devices.

- RTMP: Legacy live workflows, ingest to streaming servers.

- MPEG-DASH: Broadcast-grade streaming, Android/TV platforms.

If you’re building mobile apps, leveraging a React Native video and audio calling SDK can help you integrate real-time communication alongside HLS-based video delivery.

Benefits of Using HLS Protocol

The HLS protocol brings significant advantages for developers and broadcasters:

- Device Compatibility: Native support on iOS, macOS, Safari, and most smart TVs, plus playback in many other browsers through JavaScript players built on Media Source Extensions (MSE).

- CDN Integration: Uses standard HTTP, making it easy to cache and distribute via global Content Delivery Networks.

- Security: Supports encryption (AES-128, SAMPLE-AES), token-based authentication, and integration with DRM systems for content protection.

- Adaptive Bitrate Streaming: Ensures optimal user experience by dynamically adjusting video quality based on real-time network conditions.

For those seeking to add interactive features to their streaming platforms, integrating a Video Calling API can provide seamless video conferencing capabilities alongside HLS streams.

These strengths make the HLS protocol a leading choice for scalable, secure, and reliable video delivery in 2025.

Drawbacks and Limitations of HLS Protocol

Despite its popularity, the HLS protocol has some challenges:

- Latency Issues: With default HLS segment sizes (2-6 seconds), players typically buffer several segments before starting playback, which can introduce latency of 15-30 seconds. That is problematic for real-time interactions or live sports.

- Segment Size Trade-offs: Smaller segments reduce latency but increase overhead and load on servers and networks.

- Scalability Concerns: While HTTP-based delivery is scalable, large live events can stress origin servers and require robust CDN strategies.
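The latency and segment-size trade-offs above come down to simple arithmetic, sketched here under stated assumptions. The defaults for `buffered_segments` and `pipeline_seconds` are illustrative guesses, not values defined by HLS; measure your own pipeline before relying on them.

```python
def estimate_live_latency(segment_seconds, buffered_segments=3, pipeline_seconds=2.0):
    """Rough end-to-end latency estimate in seconds.

    buffered_segments and pipeline_seconds are assumptions for illustration:
    players commonly hold a few segments before starting playback, and
    encoding, packaging, and CDN propagation add some delay on top.
    """
    if segment_seconds <= 0 or buffered_segments < 1:
        raise ValueError("segment_seconds must be > 0 and buffered_segments >= 1")
    return segment_seconds * buffered_segments + pipeline_seconds


def segment_requests_per_minute(segment_seconds):
    """Segment fetches one viewer makes per minute for a single rendition."""
    return 60.0 / segment_seconds
```

Under these assumptions, 6-second segments give roughly 20 seconds of latency at 10 requests per viewer per minute, while 2-second segments cut that to about 8 seconds at the cost of 30 requests per minute.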

If you want to experiment with these technologies and see how they fit your workflow, you can try it for free and explore the integration possibilities with HLS and real-time video solutions.

Understanding these limitations is key to optimizing HLS protocol streaming workflows.

How to Implement HLS Protocol

Setting Up an HLS Stream

Implementing the HLS protocol involves several core steps:

- Encoding: Transcode your source video into multiple quality levels/bitrates.

- Segmenting: Split each video stream into short segments (e.g., 4 seconds each).

- Manifest Generation: Create .m3u8 playlist files referencing available segments/bitrates.

- Serving: Host segments and manifests on an HTTP server or CDN.

- Playback: Use an HLS-compatible player (e.g., hls.js, video.js) to request and play the content.
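As a rough illustration of the manifest generation step, the sketch below builds an on-demand media playlist from a list of segment durations. The function name `build_media_playlist` and the default file naming pattern are assumptions for the example; in practice a packager usually writes this file for you.

```python
import math


def build_media_playlist(durations, name_pattern="segment_{:03d}.ts"):
    """Build a VOD media playlist for segments with the given durations (seconds)."""
    if not durations:
        raise ValueError("at least one segment is required")
    # EXT-X-TARGETDURATION is an integer upper bound on segment duration.
    target = math.ceil(max(durations))
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        f"#EXT-X-TARGETDURATION:{target}",
        "#EXT-X-MEDIA-SEQUENCE:0",
        "#EXT-X-PLAYLIST-TYPE:VOD",
    ]
    for index, duration in enumerate(durations):
        lines.append(f"#EXTINF:{duration:.3f},")  # duration of the next segment
        lines.append(name_pattern.format(index))  # URI of that segment
    lines.append("#EXT-X-ENDLIST")  # marks the playlist as complete
    return "\n".join(lines) + "\n"
```

For example, `build_media_playlist([4.0, 4.0, 2.5])` lists three segments with a target duration of 4 and ends with the `#EXT-X-ENDLIST` tag that tells players no more segments will be added.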

For developers looking to streamline the process, integrating a Video Calling API can enhance your platform by enabling both streaming and real-time communication within the same application.

Example: Using FFmpeg to Create HLS Segments

    ffmpeg -i input.mp4 -profile:v baseline -level 3.0 -s 640x360 -start_number 0 \
      -c:v libx264 -b:v 800k -c:a aac -b:a 96k -f hls \
      -hls_time 4 -hls_list_size 0 -hls_segment_filename "segment_%03d.ts" playlist.m3u8

Explanation:

- Transcodes input.mp4 into HLS-compliant .ts segments (4 seconds each), produces a playlist manifest, and uses baseline H.264 for broad compatibility.
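The single command above produces one quality level. One possible way to extend it to a full bitrate ladder is to generate one FFmpeg invocation per rendition, as sketched below. The `RENDITIONS` table, its bitrates, and the per-rendition directory layout are illustrative assumptions; the flags are the ones used in the command above, with the baseline profile and level left out because a single level setting would not suit every frame size.

```python
RENDITIONS = [
    # name, frame size, video bitrate, audio bitrate (illustrative values)
    ("low", "640x360", "800k", "96k"),
    ("mid", "1280x720", "1400k", "128k"),
    ("high", "1920x1080", "2800k", "128k"),
]


def ffmpeg_hls_args(source, name, size, video_bitrate, audio_bitrate):
    """Return an argument list for one rendition, written into the directory `name`."""
    return [
        "ffmpeg", "-i", source,
        "-c:v", "libx264", "-b:v", video_bitrate, "-s", size,
        "-c:a", "aac", "-b:a", audio_bitrate,
        "-f", "hls", "-hls_time", "4", "-hls_list_size", "0",
        "-hls_segment_filename", f"{name}/segment_%03d.ts",
        f"{name}/playlist.m3u8",
    ]


def build_commands(source):
    """Build one FFmpeg command per rendition in the ladder."""
    return [ffmpeg_hls_args(source, *rendition) for rendition in RENDITIONS]
```

Each list returned by `build_commands("input.mp4")` can be passed to `subprocess.run(cmd, check=True)`. The sketch assumes the `low`, `mid`, and `high` directories already exist and that a master playlist referencing the three outputs is written separately.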

Open-source tools for HLS implementation:

- FFmpeg: Versatile media encoding and segmenting.

- Shaka Packager: Advanced packaging and DRM support.

- hls.js: JavaScript library for HLS playback in browsers.

- Video.js: Feature-rich HTML5 video player with HLS plugin support.

Troubleshooting HLS Protocol

Common issues and tips:

- Buffering: Check segment duration and encoding profiles. Overly large segments or high bitrates can cause playback stalling.

- Playback Failures: Verify manifest syntax, segment availability, and CORS headers on the server.

- Device Compatibility: Test across browsers (Safari, Chrome with MSE), and ensure fallback options for unsupported devices.

Effective troubleshooting ensures robust HLS streaming experiences for end-users.
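When playback fails, a quick structural check of the manifest often narrows things down. The sketch below is a hypothetical helper, `check_media_playlist`, that covers only a few basic rules (header, target duration, and tag/URI pairing); it is not a full validator and does not check segment availability or CORS headers.

```python
def check_media_playlist(text):
    """Return a list of human-readable problems found in a media playlist."""
    problems = []
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if not lines or lines[0] != "#EXTM3U":
        problems.append("first line must be #EXTM3U")

    target = None
    expecting_uri = False  # True after an #EXTINF tag until its URI appears
    segments = 0
    for line in lines:
        if line.startswith("#EXT-X-TARGETDURATION:"):
            target = int(line.split(":", 1)[1])
        elif line.startswith("#EXTINF:"):
            if expecting_uri:
                problems.append("#EXTINF not followed by a segment URI")
            duration = float(line.split(":", 1)[1].split(",", 1)[0])
            # Compare the rounded duration against the declared target.
            if target is not None and round(duration) > target:
                problems.append(f"segment duration {duration} exceeds target {target}")
            expecting_uri = True
        elif not line.startswith("#"):
            if expecting_uri:
                segments += 1
            else:
                problems.append(f"URI without #EXTINF: {line}")
            expecting_uri = False

    if expecting_uri:
        problems.append("#EXTINF not followed by a segment URI")
    if target is None:
        problems.append("missing #EXT-X-TARGETDURATION")
    if segments == 0:
        problems.append("playlist lists no segments")
    return problems
```

An empty result means none of these basic checks failed; any returned messages point at the lines worth inspecting first.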

Best Practices and Tips for HLS Protocol

To maximize the effectiveness of the HLS protocol, consider these best practices:

- Segment Size: 4 seconds is a common compromise between latency and overhead. For lower latency, consider 2-second segments and "Low-Latency HLS" extensions.

- Optimizing for Low Latency: Use smaller segments, tune server caching, and leverage Apple’s Low-Latency HLS (LL-HLS) where supported.

- CDN Strategies: Distribute content via multiple CDNs for redundancy and reach. Use cache control headers to manage freshness.

- Security: Always encrypt sensitive streams and use secure tokens for access. Integrate with DRM when required for premium content.

- Monitoring: Continuously monitor origin/CDN performance and client analytics to proactively address issues.
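The secure token advice above can take many forms. One common pattern, sketched here as an assumption rather than a scheme defined by HLS or by any specific CDN, is to sign the playlist path and an expiry time with a shared secret using HMAC-SHA256. The function names and the `expires`/`token` query parameters are hypothetical.

```python
import hashlib
import hmac


def sign_playlist_url(path, expires_at, secret):
    """Append an expiry timestamp and HMAC-SHA256 token to a playlist path."""
    message = f"{path}:{expires_at}".encode()
    token = hmac.new(secret, message, hashlib.sha256).hexdigest()
    return f"{path}?expires={expires_at}&token={token}"


def verify_playlist_url(path, expires_at, token, secret, now):
    """Return True when the token matches and the link has not expired."""
    if now > expires_at:
        return False
    message = f"{path}:{expires_at}".encode()
    expected = hmac.new(secret, message, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how much of the token matched.
    return hmac.compare_digest(expected, token)
```

The server or CDN edge would call `verify_playlist_url` with the current Unix time before serving a manifest or segment, rejecting links that are expired or whose token does not match.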

For those building interactive streaming solutions, combining HLS with real-time communication using a JavaScript video and audio calling SDK or a React Native video and audio calling SDK can deliver a seamless user experience across platforms.

Applying these strategies ensures stable, secure, and high-performing HLS protocol deployments.

Conclusion

The HLS protocol stands as a robust and adaptable solution for video streaming in 2025, offering broad compatibility, strong security, and adaptive bitrate streaming. While it has some latency and scalability challenges, ongoing improvements and best practices keep it at the forefront of streaming technology. As demand for reliable, high-quality video continues to grow, the HLS protocol will remain central to broadcasters, developers, and platforms worldwide.
