Introduction: What Makes the Best LLM for Chatbot?

The rise of conversational AI has transformed how businesses engage with users, automate support, and deliver personalized experiences. At the core of these systems lies the large language model (LLM), a neural network trained on massive datasets to understand and generate human language. Selecting the best LLM for chatbot development is critical—model choice affects accuracy, responsiveness, scalability, data privacy, and overall user satisfaction. As the LLM landscape evolves rapidly in 2025, understanding the latest models and their capabilities is key to building competitive, trustworthy chatbots.

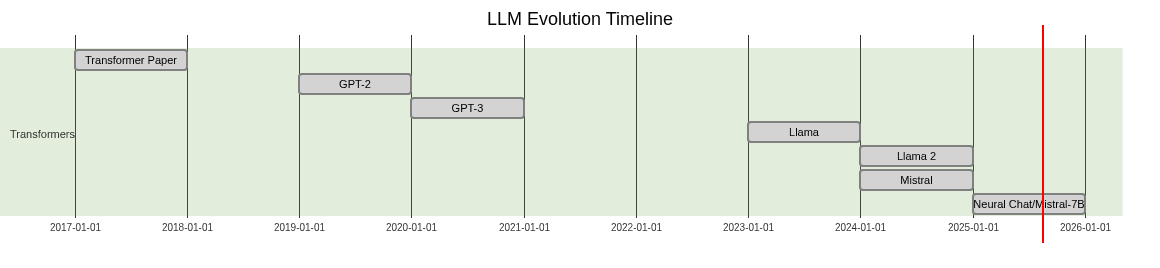

The Evolution of LLMs for Chatbots

LLMs have advanced dramatically since the first transformer architectures emerged. Early models like GPT-2 set new standards for natural language understanding, while GPT-3 demonstrated the power of large-scale training. The open-source movement brought LLMs like Llama and Mistral, empowering developers with customizable, high-quality models. Today, organizations can choose from a rich ecosystem of LLMs tailored for chatbot use. For those looking to enhance chatbot interactions with real-time communication, you can embed video calling SDK solutions to add seamless video and audio features to your conversational AI.

Criteria for Choosing the Best LLM for Chatbot

Selecting the best LLM for chatbot projects requires evaluating several dimensions:

- Model Size and Architecture: Larger models (e.g., 70B parameters) offer stronger reasoning and language generation but demand more resources. Smaller models can run efficiently on edge devices or with limited infrastructure.

- Performance Benchmarks: Assess accuracy (e.g., F1, BLEU), latency, throughput, and efficiency. Real-world chatbot benchmarks provide the most relevant insights.

- Customizability and Fine-Tuning: The ability to fine-tune or adapt the LLM for specific domains, brand tone, or task-specific knowledge significantly improves chatbot usability.

- Privacy and Data Security: Consider models that support on-premise deployment or provide robust privacy controls, especially for sensitive sectors like healthcare or finance.

- Cost and Licensing: Open-source LLMs reduce licensing costs and offer greater flexibility. Commercial models may provide better support or unique capabilities but at a premium.

- Use Case Alignment: Match the LLM’s strengths to your application—customer service, content creation, language learning, or personal assistants.
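To make the model-size trade-off concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone occupy at different precisions. It assumes 1 GB = 1e9 bytes and ignores the KV cache, activations, and framework overhead, so real deployments need noticeably more headroom. The function name is illustrative and not part of any library.

```python
def estimate_weight_memory_gb(num_params: float, bits_per_param: int = 16) -> float:
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes)."""
    bytes_per_param = bits_per_param / 8
    return num_params * bytes_per_param / 1e9


# Compare the three Llama 2 sizes at 16-bit and 4-bit (quantized) precision.
for name, params in [("7B", 7e9), ("13B", 13e9), ("70B", 70e9)]:
    fp16 = estimate_weight_memory_gb(params, 16)
    int4 = estimate_weight_memory_gb(params, 4)
    print(f"{name}: ~{fp16:.0f} GB at 16-bit, ~{int4:.1f} GB at 4-bit")
```

By this estimate a 7B model needs about 14 GB for 16-bit weights, while a 70B model needs about 140 GB, which is why the larger sizes usually call for multiple GPUs or quantization.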

If your chatbot use case involves real-time communication, integrating a Video Calling API can further enhance user engagement by enabling live video interactions within your application.

Balancing these criteria ensures your chatbot is performant, secure, and aligned with business goals.

Leading LLMs for Chatbots: Detailed Comparison

Llama 2 (7B, 13B, 70B)

Overview: Llama 2, developed by Meta AI, is a family of open-weight LLMs available in multiple sizes. It sets a new standard for open-source conversational models, offering strong performance on benchmarks and robust multilingual support.

Strengths:

- Excellent performance in conversational tasks

- Open-source and easily deployable on-premise

- Supports fine-tuning and custom training

- Scales from 7B (efficient) to 70B (high-accuracy)

Weaknesses:

- Larger models require significant GPU resources

- Prompt alignment and safety require additional tuning for production

Implementation Tips:

- Use 7B for resource-constrained environments and rapid prototyping

- Choose 13B/70B for complex, high-accuracy chatbots

- Leverage Hugging Face integration for streamlined deployment
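When you serve a Llama 2 chat checkpoint yourself, prompts generally need to follow the `[INST]` / `<<SYS>>` layout the chat models were tuned on. The sketch below builds that string by hand. The helper name `build_llama2_prompt` is illustrative, and the `<s>` / `</s>` markers are written literally as an assumption: many tokenizers add the beginning-of-sequence token themselves, in which case the leading marker should be dropped. Hugging Face tokenizers that ship a chat template can typically produce the same layout through `apply_chat_template`.

```python
from typing import List, Tuple

B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def build_llama2_prompt(
    system: str, turns: List[Tuple[str, str]], user_message: str
) -> str:
    """Format a conversation in the Llama 2 chat layout.

    `turns` holds completed (user, assistant) pairs; `user_message` is the
    new message awaiting a reply. The system prompt is folded into the
    first user message, as the chat format expects.
    """
    parts = []
    first = True
    for user, assistant in turns:
        content = (B_SYS + system + E_SYS + user) if first else user
        parts.append(
            f"<s>{B_INST} {content.strip()} {E_INST} {assistant.strip()} </s>"
        )
        first = False
    content = (B_SYS + system + E_SYS + user_message) if first else user_message
    parts.append(f"<s>{B_INST} {content.strip()} {E_INST}")
    return "".join(parts)


print(build_llama2_prompt("Be brief.", [("Hi", "Hello")], "What is an LLM?"))
```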

For chatbots that require audio-only communication, integrating a Voice SDK can enable high-quality live audio rooms and voice interactions alongside LLM-powered conversations.

Mistral Neural Chat 7B

Overview: Neural Chat 7B is an open-source chat model that Intel fine-tuned from the Mistral 7B base model, with a focus on efficiency and business-ready chat. It pairs strong accuracy for its size with low inference latency, which suits real-time chatbot applications.

Key Technical Features:

- Highly optimized for inference on CPUs and GPUs

- Outperforms larger models on certain benchmarks

- Excellent at following instructions and handling multi-turn conversations

If you're building web-based chatbots, consider using a JavaScript video and audio calling SDK to add seamless video and audio calling capabilities directly within your JavaScript applications.

Use Cases:

- Customer support bots

- Internal business assistants

- Digital helpdesks

ChadGPT (and Multi-Model Approaches)

Overview: ChadGPT represents a trend towards specialized, business-focused LLM deployments. It is designed for simplicity, reliability, and easy API integration, often combining multiple models for optimal results.

Pros:

- Managed API access, scalable deployments

- Business-grade privacy and compliance

- Simple integration for startups and enterprises

Cons:

- Limited customizability compared to open-source models

- Vendor lock-in risk

API Accessibility:

- RESTful endpoints, SDKs, and cloud-native deployment

- Focus on uptime and rapid scaling

For mobile-first chatbot experiences, leveraging a React Native video and audio calling SDK is ideal for integrating real-time communication features into React Native apps.

Comparison Table: Best LLMs for Chatbot in 2025

| Model | Params | Open Source | Performance | Customization | Best Use Case |
|---|---|---|---|---|---|
| Llama 2 7B/13B/70B | 7B-70B | Yes | High | Yes | Customer Service |
| Mistral Neural Chat 7B | 7B | Yes | High | Yes | Business Chatbots |
| ChadGPT | Varied | No | Very High | Limited | Enterprise Support |

Implementation: How to Integrate the Best LLM for Chatbot

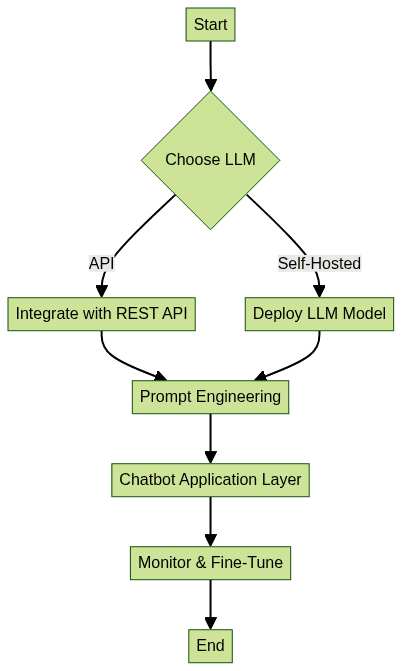

When integrating the best LLM for chatbot use, you’ll face a choice: leverage a managed API (e.g., OpenAI, Cohere, ChadGPT) or self-host an open-source model (e.g., Llama 2, Mistral). APIs offer speed and scalability, while self-hosting provides control and privacy.

If your chatbot needs to support cross-platform video and audio calls, exploring Flutter WebRTC can help you implement robust real-time communication in Flutter-based applications.

API vs. Self-Hosted Options

- API: Fast setup, managed infrastructure, automatic updates, less control over data locality

- Self-Hosted: Full data control, customizable, potentially lower long-term costs, but requires ML ops expertise
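One way to keep this choice reversible is to hide the model behind a small interface, so the chatbot code does not care whether replies come from a managed API or a self-hosted model. The sketch below is only an illustration: `ChatBackend`, `ManagedApiBackend`, `SelfHostedBackend`, and the `send` / `run` callables are hypothetical names, not part of any vendor SDK.

```python
from typing import Callable, Dict, List, Protocol

Message = Dict[str, str]


class ChatBackend(Protocol):
    """Anything that can turn a message list into a reply."""

    def generate(self, messages: List[Message]) -> str: ...


class ManagedApiBackend:
    """Wraps a hosted LLM API; `send` is a hypothetical transport function."""

    def __init__(self, send: Callable[[List[Message]], str]) -> None:
        self._send = send

    def generate(self, messages: List[Message]) -> str:
        return self._send(messages)


class SelfHostedBackend:
    """Wraps a locally loaded model; `run` is a hypothetical inference function."""

    def __init__(self, run: Callable[[List[Message]], str]) -> None:
        self._run = run

    def generate(self, messages: List[Message]) -> str:
        return self._run(messages)


def reply(backend: ChatBackend, messages: List[Message]) -> str:
    """Chatbot code depends only on the ChatBackend interface."""
    return backend.generate(messages)


# Stub callables stand in for a real HTTP client or a local model.
api = ManagedApiBackend(send=lambda msgs: "reply from hosted API")
local = SelfHostedBackend(run=lambda msgs: "reply from local model")
print(reply(api, [{"role": "user", "content": "Hello"}]))
print(reply(local, [{"role": "user", "content": "Hello"}]))
```

With this split, moving from an API prototype to a self-hosted deployment means swapping one constructor call rather than rewriting the conversation logic.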

For scenarios where chatbots need to initiate or manage phone conversations, integrating a phone call API can be a valuable addition to your communication stack.

Prompt Engineering Strategies

Prompt engineering is vital for conversational accuracy. Structure prompts to include context, user history, and explicit instruction.

Customizing System Prompts

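```python
# System prompt that fixes the assistant's role and answer style
system_prompt = """You are a helpful assistant specialized in AI programming support. Answer user questions clearly and concisely.\n"""
user_message = "How do I fine-tune Llama 2 for my chatbot?"
# Combine the instruction and the user's question into a single prompt string
prompt = system_prompt + "User: " + user_message
```

Handling Multi-Turn Conversations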

Maintain conversational state to provide context-aware responses:

```python
conversation_history = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Hello, who are you?"},
    {"role": "assistant", "content": "I am an AI developed to help you with chatbots."},
    {"role": "user", "content": "How do I choose the best LLM for chatbot?"},
]

# Pass conversation_history to the LLM API for context
```

### LLM Integration Workflow
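A typical integration can be pictured as a loop: receive the user message, append it to the conversation history, trim the history so it stays within the model's context window, call the model, and store the reply. The sketch below illustrates that loop under simplifying assumptions: `ChatSession` and `model_fn` are hypothetical names, and trimming by message count is a stand-in for a real token budget.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class ChatSession:
    """Keeps conversation state and feeds it to a model on every turn."""

    def __init__(self, system_prompt: str, max_history: int = 20) -> None:
        self.system: Message = {"role": "system", "content": system_prompt}
        self.history: List[Message] = []
        self.max_history = max_history

    def respond(
        self, user_message: str, model_fn: Callable[[List[Message]], str]
    ) -> str:
        # 1. Record the incoming user message.
        self.history.append({"role": "user", "content": user_message})
        # 2. Keep only the most recent messages (stand-in for a token limit).
        self.history = self.history[-self.max_history:]
        # 3. Call the model with the system prompt plus the trimmed history.
        reply = model_fn([self.system] + self.history)
        # 4. Store the reply so the next turn has context.
        self.history.append({"role": "assistant", "content": reply})
        return reply


# A stub model that reports how much context it received.
def echo_model(messages: List[Message]) -> str:
    return f"I received {len(messages)} messages."


session = ChatSession("You are a helpful assistant.", max_history=4)
print(session.respond("Hello, who are you?", echo_model))
print(session.respond("How do I choose an LLM?", echo_model))
```

In production, `model_fn` would wrap either a managed API call or a self-hosted model, and the trimming step would count tokens rather than messages.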

If your chatbot solution includes large-scale events or webinars, integrating a [Live Streaming API SDK](https://www.videosdk.live/interactive-live-streaming) can enable interactive live streaming experiences alongside conversational AI.

## Use Cases and Real-World Applications

Modern chatbots powered by the **best LLM for chatbot** deliver tangible business value:

- **Customer Support:** Llama 2 and Mistral can drive automated helpdesks that resolve high volumes of routine tickets and hand complex cases to human agents.

- **Content Generation:** Businesses use LLMs to create product descriptions, summarize documents, and power creative writing assistants.

- **Personal Assistants:** LLMs like Mistral Neural Chat enable digital assistants that manage calendars, answer FAQs, and perform research.

- **Language Learning:** Adaptive chatbots help users practice conversation, correct grammar, and suggest improvements, leveraging multilingual LLMs.

If you're eager to experiment with these integrations and see how they can elevate your chatbot, you can [Try it for free](https://www.videosdk.live/signup?utm_source=mcp-publisher&utm_medium=blog&utm_content=blog_internal_link&utm_campaign=best-llm-for-chatbot) and start building advanced conversational experiences today.

**Example:** A European e-commerce firm deployed Llama 2 13B for multilingual customer support, reducing response times by 60%. An EdTech startup used Mistral Neural Chat to build a language tutor chatbot, increasing student engagement.

## Best Practices for Optimizing LLM Chatbot Performance

- **Fine-Tuning & Continuous Improvement:** Regularly retrain or fine-tune your LLM on new interaction data to improve accuracy and relevance.

- **Monitoring & Evaluation:** Track key metrics (e.g., response accuracy, latency, user satisfaction) and set up feedback loops for evaluation.

- **Security & Privacy:** Encrypt user data, comply with GDPR/CCPA, and limit sensitive data exposure. Prefer self-hosted LLMs for maximum privacy.
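As a starting point for the monitoring practice above, the sketch below times each model call and reports latency percentiles. `LatencyTracker` is a hypothetical helper, not a library class, and the nearest-rank percentile is one simple choice among several. A real deployment would more likely export these samples to an existing metrics system.

```python
import math
import time
from typing import Any, Callable, List


class LatencyTracker:
    """Records how long model calls take and summarizes them."""

    def __init__(self) -> None:
        self.samples: List[float] = []

    def timed(self, fn: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        """Run fn, record its wall-clock duration in seconds, return its result."""
        start = time.perf_counter()
        result = fn(*args, **kwargs)
        self.samples.append(time.perf_counter() - start)
        return result

    def percentile(self, p: float) -> float:
        """Nearest-rank percentile of recorded latencies, with p in (0, 100]."""
        if not self.samples:
            raise ValueError("no samples recorded")
        ordered = sorted(self.samples)
        rank = max(1, math.ceil(p * len(ordered) / 100))
        return ordered[rank - 1]


tracker = LatencyTracker()
for _ in range(5):
    # A stub stands in for the real model call.
    tracker.timed(lambda: "stub reply")
print(f"p50 latency: {tracker.percentile(50):.6f}s")
print(f"p95 latency: {tracker.percentile(95):.6f}s")
```

Tracking a high percentile such as p95 alongside the median helps surface the slow responses that users notice most.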

For chatbots that require robust real-time communication, integrating a [Video Calling API](https://www.videosdk.live/audio-video-conferencing) ensures high-quality, scalable video conferencing features within your application.

## Future Trends in LLMs for Chatbots

As the field advances in 2025, expect major trends:

- **Multilingual Capabilities:** Next-gen LLMs will natively support dozens of languages, broadening global reach.

- **Efficient Inference/Edge Deployment:** Smaller, faster models allow chatbots to run on mobile and IoT devices, reducing latency and cloud reliance.

- **Open-Source Community Growth:** More models, tools, and benchmarks emerge from open-source communities, accelerating innovation and reducing costs.

## Conclusion: Choosing the Best LLM for Your Chatbot Project

Selecting the **best LLM for chatbot** depends on your use case, technical requirements, and privacy needs. Llama 2 and Mistral excel for open-source, customizable deployments, while ChadGPT offers managed simplicity. Evaluate performance, cost, and integration fit, then prototype, measure, and iterate to deliver exceptional chatbot experiences in 2025.
