Introduction: Why the Best LLM API for Voice Matters

In 2025, the convergence of large language models (LLMs) and voice technology is transforming the way we build voice-enabled applications. From conversational AI agents and voice bots to multimodal virtual assistants, the right LLM API for voice is essential for delivering natural, responsive, and scalable user experiences. Whether you are developing enterprise voice solutions, real-time voice assistants, or creative voice cloning apps, selecting a robust, low-latency, and secure API can make or break your project.

This guide explores the best LLM APIs for voice, the key criteria for choosing among them, and practical integration steps to help developers and businesses harness the full potential of voice AI in 2025.

What is an LLM API for Voice?

An LLM API for voice is a developer-accessible interface that connects applications to advanced language models capable of processing, understanding, and generating human-like speech. Unlike traditional speech APIs, which focus on simple speech-to-text (STT) or text-to-speech (TTS), LLM voice APIs offer:

- Contextual understanding and conversational intelligence

- Multimodal capabilities (voice-to-voice, STT, TTS)

- Integration with real-time, low-latency voice workflows

- Advanced features like voice cloning, emotion detection, and intent recognition

These APIs power next-gen voice agents, interactive apps, and enterprise voice solutions. For developers looking to add real-time audio features, a Voice SDK can streamline integration and enhance the capabilities of your voice-enabled applications.

Example: Basic API Call for Speech-to-Text

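```python
import requests

# Example endpoint and placeholder key; substitute your provider's values
url = "https://api.voice-llm.com/v1/speech-to-text"
headers = {"Authorization": "Bearer YOUR_API_KEY"}

# Upload the audio as multipart form data; "with" closes the file afterwards
with open("sample.wav", "rb") as audio_file:
    files = {"audio": audio_file}
    response = requests.post(url, headers=headers, files=files, timeout=30)

response.raise_for_status()  # fail loudly on 4xx/5xx instead of printing an error body
print(response.json())
```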

Key Criteria for Choosing the Best LLM API for Voice

Selecting the right LLM API for voice involves careful consideration of multiple factors:

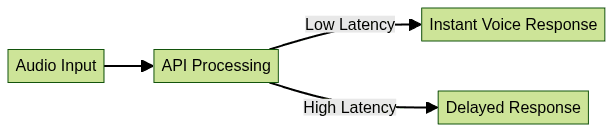

Latency and Real-time Performance

Low latency is critical for conversational AI and interactive voice applications. Slow responses degrade the user experience, especially in real-time voice bots, where users expect turn-taking to feel like a natural conversation. For scenarios like live audio rooms or interactive sessions, a Voice SDK can help keep communication seamless and low-latency.
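One practical way to compare providers on this criterion is to time repeated requests and look at tail latency (p95), not just the average. The helper below is a provider-agnostic sketch: `measure_latency` is a hypothetical name, and `call` stands in for whatever request your chosen API client makes. It measures full round-trip time; for streaming APIs you would likely also want to time the arrival of the first transcript or audio chunk.

```python
import statistics
import time
from typing import Callable, Dict


def measure_latency(call: Callable[[], object], runs: int = 20) -> Dict[str, float]:
    """Invoke `call` repeatedly and summarize wall-clock latency in milliseconds.

    `call` is any zero-argument function, e.g. a lambda wrapping one API request.
    """
    if runs < 2:
        raise ValueError("runs must be at least 2 to compute percentiles")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000.0)
    # With n=100, quantiles() returns 99 cut points: index 49 is p50, index 94 is p95.
    # method="inclusive" interpolates only between observed samples.
    cuts = statistics.quantiles(samples, n=100, method="inclusive")
    return {"p50_ms": cuts[49], "p95_ms": cuts[94], "max_ms": max(samples)}


if __name__ == "__main__":
    # Stand-in workload; replace with a real request to the API under evaluation
    print(measure_latency(lambda: time.sleep(0.01), runs=10))
```

Run the same helper against each candidate from the same network location, since client-to-region distance can dominate the numbers.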

Accuracy and Naturalness of Voice Output

LLM-based APIs should provide not only accurate speech recognition but also natural, human-like synthesis. Evaluate APIs for:

- Intelligibility and clarity

- Voice tone and expressiveness

- Adaptability to accents and languages
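To put a number on recognition accuracy, a widely used metric is word error rate (WER): the word-level edit distance (substitutions + deletions + insertions) between a reference transcript and the API's output, divided by the number of reference words. The function below is a minimal, dependency-free sketch; a real evaluation would likely normalize punctuation and number formatting more carefully than the simple `lower().split()` used here.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j]: edit distance between the reference words processed so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # substitution (or exact match when cost is 0)
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One substitution (sat -> sit) and one deletion (the) over six reference words
    print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```

Lower is better, and WER can exceed 1.0 when the hypothesis contains many inserted words. Comparing providers on the same set of recordings, including your own accents and domain vocabulary, says more than any single headline figure.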

If your use case involves telephony or integrating voice with phone systems, consider using a phone call API to ensure high-quality audio transmission and reception.

Multimodality & Versatility

Best-in-class APIs support multiple modalities:

- Speech-to-text (STT)

- Text-to-speech (TTS)

- Voice-to-voice (translation or transformation)

- Emotion and intent detection

This enables broader use cases, from accessibility to real-time translation. For developers building cross-platform solutions, a Python video and audio calling SDK or a JavaScript video and audio calling SDK can help you quickly add both video and audio features to your voice-enabled apps.

Scalability and Reliability

Enterprise and consumer apps require APIs capable of handling variable loads, global user bases, and high uptime. Look for:

- Auto-scaling infrastructure

- SLAs and uptime guarantees

- Multi-region deployment

To support large-scale events or broadcasts, integrating a Live Streaming API SDK can provide the scalability and reliability needed for interactive voice and video streaming.

Security, Privacy, and Compliance

With sensitive voice data, compliance is non-negotiable. Prioritize APIs offering:

- End-to-end encryption

- GDPR/CCPA compliance

- Data retention controls

- Secure authentication
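On the client side, secure authentication starts with keeping credentials out of source code. A minimal sketch, assuming your provider uses a bearer token as in this guide's examples: read the key from an environment variable (the name `VOICE_API_KEY` and the helper `auth_headers` are hypothetical) and fail fast if it is missing.

```python
import os
from typing import Dict


def auth_headers(env_var: str = "VOICE_API_KEY") -> Dict[str, str]:
    """Build a bearer-token header from an environment variable instead of a hard-coded key."""
    api_key = os.environ.get(env_var)
    if not api_key:
        # Failing fast beats sending unauthenticated requests or shipping a placeholder key
        raise RuntimeError(f"Set the {env_var} environment variable before calling the API")
    return {"Authorization": f"Bearer {api_key}"}
```

The returned dictionary can be passed as `headers=` to `requests.post`. In production, the environment variable would typically be populated from a secrets manager rather than a checked-in `.env` file.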

Top LLM APIs for Voice in 2025

The market features a wide range of LLM voice APIs. Here’s a breakdown of the best options for 2025:

Deepgram

Overview: Deepgram is renowned for its robust speech-to-text, text-to-speech, and audio intelligence APIs. Optimized for real-time, multi-language environments, Deepgram delivers low latency and high accuracy.

Key Strengths:

- Ultra-fast STT and TTS

- Real-time streaming

- Advanced audio analytics (emotion, intent, summarization)

- Scalable for enterprise

Example Use Case: Real-time transcription for customer service bots.

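For developers who want to build real-time voice rooms or group audio chats, a Voice SDK can be integrated alongside Deepgram for enhanced functionality. A prerecorded-transcription call with Deepgram's Python SDK looks like this:

```python
# Written against the v2 Deepgram Python SDK (pip install "deepgram-sdk<3");
# v3 and later expose a different client interface.
from deepgram import Deepgram

dg_client = Deepgram("YOUR_API_KEY")

# Transcribe a hosted, prerecorded audio file synchronously
audio_source = {"url": "https://www.example.com/audio.wav"}
response = dg_client.transcription.sync_prerecorded(audio_source, {"punctuate": True})

# The transcript of the first channel's top alternative
print(response["results"]["channels"][0]["alternatives"][0]["transcript"])
```

LMNT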

Overview: LMNT specializes in lifelike text-to-speech and voice cloning. Their API enables developers to synthesize speech with emotional context and clone unique voices for personalization.

Unique Features:

- High-fidelity voice synthesis

- Custom voice cloning

- Expressive emotional control

- Developer-friendly SDKs

Developer Support: Extensive documentation, SDKs for Python, Node.js, and REST.

If your application requires phone-based interactions, integrating a phone call API can help you bridge the gap between web and telephony.

AssemblyAI

Overview: AssemblyAI offers industry-leading speech-to-text APIs with a focus on streaming transcription and audio intelligence for enterprises and voice agents.

Features:

- Real-time and batch transcription

- Summarization, topic detection, sentiment analysis

- Seamless integration with voice bots
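Streaming transcription APIs generally expect audio to arrive in small, steady chunks rather than as a single upload. Independent of any vendor SDK, the sketch below uses Python's standard `wave` module to split a WAV file into fixed-duration chunks (100 ms here, a placeholder value) that a streaming client could send over a WebSocket. The function name `iter_audio_chunks` is hypothetical; check your provider's documentation for the exact audio encoding and chunk size it expects.

```python
import io
import wave
from typing import BinaryIO, Iterator, Union


def iter_audio_chunks(source: Union[str, BinaryIO], chunk_ms: int = 100) -> Iterator[bytes]:
    """Yield raw PCM frames from a WAV file in chunks of roughly `chunk_ms` milliseconds."""
    with wave.open(source, "rb") as wav:
        frames_per_chunk = max(1, wav.getframerate() * chunk_ms // 1000)
        while True:
            data = wav.readframes(frames_per_chunk)
            if not data:
                break  # end of file
            yield data


if __name__ == "__main__":
    # Build one second of 16 kHz, 16-bit mono silence in memory as stand-in input
    buf = io.BytesIO()
    with wave.open(buf, "wb") as out:
        out.setnchannels(1)
        out.setsampwidth(2)
        out.setframerate(16000)
        out.writeframes(b"\x00\x00" * 16000)
    buf.seek(0)
    # In a real client, each chunk would be sent to the streaming endpoint as it is read
    print(len(list(iter_audio_chunks(buf))))  # 10 chunks of 100 ms each
```

Sending audio at roughly real-time pace, rather than all at once, keeps the streaming session's behavior close to what a live microphone would produce.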

Millis AI

Overview: Millis AI delivers ultra-low latency LLM APIs for turnkey voice agent deployment. Designed for instant, lifelike conversations, Millis AI supports rapid integration and high concurrency.

Key Points:

- Real-time, conversational LLM voice agents

- WebSocket and REST integration

- Flexible deployment (cloud/edge)

For group audio experiences or collaborative voice applications, a Voice SDK can be used in conjunction with Millis AI to enable real-time communication.

RealtimeAPI

Overview: RealtimeAPI is architected for truly real-time, multimodal voice conversations. It supports audio input/output, interrupt handling, and bidirectional streaming.

Highlights:

- Multimodal: voice, text, audio

- Low-latency, real-time processing

- Interrupt/resume for conversational AI
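Interrupt handling (often called barge-in) means the agent stops speaking as soon as the user starts talking. The asyncio sketch below illustrates the general pattern and is not RealtimeAPI's actual interface: playback of synthesized audio chunks runs as a task, and a hypothetical `user_started_speaking` event, which a voice-activity detector would set in a real app, cancels it. The `speak` coroutine is a stand-in for real audio output.

```python
import asyncio
from typing import List


async def speak(chunks: List[str], played: List[str], chunk_delay: float = 0.01) -> None:
    """Stand-in for streaming TTS playback: 'play' one audio chunk per tick."""
    for chunk in chunks:
        played.append(chunk)
        await asyncio.sleep(chunk_delay)


async def agent_turn(chunks: List[str], user_started_speaking: asyncio.Event) -> List[str]:
    """Play the agent's reply, stopping early if the user barges in."""
    played: List[str] = []
    playback = asyncio.create_task(speak(chunks, played))
    barge_in = asyncio.create_task(user_started_speaking.wait())
    # Wake up on whichever happens first: playback finishes or the user starts talking
    await asyncio.wait({playback, barge_in}, return_when=asyncio.FIRST_COMPLETED)
    pending = [task for task in (playback, barge_in) if not task.done()]
    for task in pending:
        task.cancel()  # stop talking on barge-in, or stop waiting once playback is done
    await asyncio.gather(*pending, return_exceptions=True)
    return played


async def demo() -> List[str]:
    user_started_speaking = asyncio.Event()
    # Hypothetical VAD trigger: the user starts speaking 25 ms into playback
    asyncio.get_running_loop().call_later(0.025, user_started_speaking.set)
    return await agent_turn([f"chunk-{i}" for i in range(20)], user_started_speaking)


if __name__ == "__main__":
    print(asyncio.run(demo()))  # only the chunks played before the interruption
```

After an interruption, the returned list tells the application how much of the reply the user actually heard, which is useful context for the model's next turn.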

Gabber

Overview: Gabber combines a composable, visual app builder with open-source LLM API components. Ideal for rapid prototyping and customizable voice applications.

Strengths:

- Drag-and-drop app builder

- Open-source and extensible

- Multimodal voice and text integration

Fish Audio

Overview: Fish Audio provides developer APIs for voice cloning and model training. With robust integrations for cloud and on-prem, Fish Audio is ideal for custom voice and TTS workflows.

Features:

- Custom voice model training

- High-accuracy voice cloning

- REST and gRPC APIs

Implementation Guide: Integrating an LLM API for Voice

Choosing the right API depends on your use case, budget, and technical resources. Here’s a step-by-step guide to getting started:

- Define Your Use Case: Clarify whether you need STT, TTS, voice-to-voice, or a multimodal solution.

- Evaluate APIs: Compare latency, pricing, language support, and compliance.

- Obtain API Keys: Register and acquire secure credentials.

- Integrate SDK or REST API: Use provided SDKs or direct REST calls.

- Test and Optimize: Validate with sample audio, optimize for latency and accuracy.
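For the "Evaluate APIs" step, a simple weighted scorecard keeps comparisons explicit and repeatable. In this sketch the criteria mirror this guide's selection criteria, while the weights, the candidate names, and the 1-to-5 ratings are placeholders to replace with your own measurements; none of them are real benchmark results, and `rank_candidates` is a hypothetical helper.

```python
from typing import Dict, List, Tuple

# Placeholder weights reflecting how much each criterion matters to *your* use case (sum to 1.0)
WEIGHTS: Dict[str, float] = {
    "latency": 0.35,
    "accuracy": 0.30,
    "pricing": 0.15,
    "language_support": 0.10,
    "compliance": 0.10,
}


def rank_candidates(scores: Dict[str, Dict[str, float]],
                    weights: Dict[str, float] = WEIGHTS) -> List[Tuple[str, float]]:
    """Return (candidate, weighted score) pairs, best first.

    `scores` maps each candidate to per-criterion ratings on a shared scale (e.g. 1 to 5).
    """
    ranked = []
    for name, ratings in scores.items():
        missing = set(weights) - set(ratings)
        if missing:
            raise ValueError(f"{name} is missing ratings for: {sorted(missing)}")
        total = sum(weights[criterion] * ratings[criterion] for criterion in weights)
        ranked.append((name, round(total, 3)))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical ratings for two unnamed candidates, not real vendor data
    example = {
        "candidate_a": {"latency": 5, "accuracy": 4, "pricing": 3, "language_support": 4, "compliance": 4},
        "candidate_b": {"latency": 3, "accuracy": 5, "pricing": 4, "language_support": 5, "compliance": 5},
    }
    for name, score in rank_candidates(example):
        print(f"{name}: {score}")
```

Treat compliance as a hard filter rather than a weighted score if a regulation rules a provider out entirely.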

Developers who want to experiment can start with a free trial and explore different voice and video SDKs before making a commitment.

Step-by-Step: Setting Up a Basic Voice Bot

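```python
import requests


def voice_bot(audio_file_path):
    """Send a recorded user query to the bot endpoint and return the reply text."""
    url = "https://api.llm-voice.com/v1/bot"
    headers = {"Authorization": "Bearer YOUR_API_KEY"}
    with open(audio_file_path, "rb") as audio_file:
        files = {"audio": audio_file}
        response = requests.post(url, headers=headers, files=files, timeout=30)
    response.raise_for_status()
    # The endpoint is expected to return JSON containing a "reply" field
    return response.json()["reply"]


print(voice_bot("user_query.wav"))
```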
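If a provider does not offer a single bot endpoint like the one above, the same conversational turn can be assembled from separate STT, LLM, and TTS services. The sketch below shows that cascade with hypothetical interfaces (`SpeechToText`, `LanguageModel`, `TextToSpeech`) and stub implementations standing in for real clients, so the structure can be run and tested without network access.

```python
from dataclasses import dataclass
from typing import Protocol


class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class LanguageModel(Protocol):
    def reply(self, user_text: str) -> str: ...


class TextToSpeech(Protocol):
    def synthesize(self, text: str) -> bytes: ...


@dataclass
class VoiceBot:
    """One conversational turn: user audio in, agent audio out."""
    stt: SpeechToText
    llm: LanguageModel
    tts: TextToSpeech

    def handle_turn(self, audio: bytes) -> bytes:
        user_text = self.stt.transcribe(audio)  # 1. speech-to-text
        reply_text = self.llm.reply(user_text)  # 2. LLM generates a response
        return self.tts.synthesize(reply_text)  # 3. text-to-speech


# Stub implementations for local testing; swap in real provider clients.
class EchoSTT:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")  # pretend the "audio" is already text


class UppercaseLLM:
    def reply(self, user_text: str) -> str:
        return f"You said: {user_text.upper()}"


class BytesTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")  # pretend these bytes are synthesized audio


if __name__ == "__main__":
    bot = VoiceBot(EchoSTT(), UppercaseLLM(), BytesTTS())
    print(bot.handle_turn(b"hello"))  # b'You said: HELLO'
```

Keeping each stage behind a small interface makes it easier to swap one provider for another, or to replace the whole cascade with a native voice-to-voice API later.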

Common Pitfalls and How to Avoid Them:

- Latency issues: Use streaming endpoints or WebSockets for real-time apps.

- Audio quality: Preprocess input audio to reduce noise.

- Security: Never expose API keys or transmit unencrypted data.

- Scaling: Monitor usage and set up alerts for API limits.
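For the scaling pitfall in particular, a common client-side safeguard is to retry transient failures, such as HTTP 429 rate-limit or 503 responses, with exponential backoff and jitter instead of immediately re-sending requests. A minimal, library-agnostic sketch: `TransientError` is a hypothetical exception your client wrapper would raise for retryable responses, and the delay values are placeholders to tune against your provider's documented limits. The `sleep` parameter is injectable so the logic can be tested without real waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical: raise this from your client wrapper for retryable responses (e.g. 429, 503)."""


def with_backoff(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `call`, retrying TransientError with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error so monitoring and alerts can catch it
            # Upper bound doubles per attempt (0.5 s, 1 s, 2 s, ...) until it reaches max_delay
            cap = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, cap))  # random wait avoids synchronized retry storms
    raise AssertionError("unreachable: the loop either returns or re-raises")
```

Counting how often retries fire is also a cheap signal to feed into the usage alerts mentioned above.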

Future Trends: Where LLM Voice APIs are Heading

The next generation of LLM APIs for voice will focus on:

- Multilingual and Multimodal Support: Enhanced capabilities for global, diverse user bases, including image and text context alongside voice.

- Real-time, On-Device/Edge Deployment: Reduced reliance on cloud, enabling offline voice agents and lower latency.

- Adaptive AI: Models that learn user preferences and adapt to individual voices and accents.

Conclusion: Choosing Your Best LLM API for Voice

The LLM API landscape for voice is rapidly evolving. By prioritizing latency, accuracy, versatility, and security, you can select the best LLM API for your project’s needs. Whether you’re building enterprise voice agents or innovative voice-first apps, the APIs covered here will help you deliver exceptional voice experiences in 2025.


FAQ