Introduction to OpenAI Real Time Voice API

Speech-to-speech technology has rapidly advanced, transforming the way users interact with applications. From voice assistants to real-time collaboration tools, low-latency voice AI is redefining modern digital experiences. As conversational interfaces become essential, the need for seamless, natural, and instantaneous voice interactions grows. OpenAI has stepped into this space with the release of GPT-4o and the OpenAI Real Time Voice API, providing developers with cutting-edge capabilities for building next-generation speech-first applications. This guide covers everything you need to know about leveraging OpenAI's real time voice technology in your own apps.

What is the OpenAI Real Time Voice API?

The OpenAI Real Time Voice API is a state-of-the-art speech-to-speech interface that moves beyond traditional Text-to-Speech (TTS) and Speech-to-Text (STT) models. Unlike classic TTS/STT, which operate in separate stages, OpenAI's API supports direct, low-latency, bidirectional audio streaming—enabling real-time conversations between users and AI. Key features include:

- Speech-in, speech-out: Send and receive audio streams for natural dialogue

- WebSocket-based interface: Maintains persistent, low-latency connections

- Low latency: Sub-500ms round-trip times for near-instant responses

- Function calling and multimodal support: Integrate with other OpenAI tools and chain capabilities

- Supported models: Realtime variants of GPT-4o and GPT-4o-mini (for example, `gpt-4o-realtime-preview`), with regular updates and new voices

This API is designed for developers seeking to build conversational, voice-first, and interactive AI-driven applications.

Key Benefits and Use Cases

Real-Time, Low-Latency Conversational AI

The OpenAI Real Time Voice API unlocks a range of high-impact use cases:

- Customer support bots: Deploy AI agents that handle customer queries via voice, reducing wait times and enhancing support experiences.

- Voice assistants and accessibility tools: Create assistive technologies for visually impaired users or hands-free operation.

- Language learning and live translation: Real-time conversational partners for language practice or instant voice-to-voice translation.

- Real-time collaboration and gaming: Enable natural, context-aware voice chat for multiplayer games or collaborative platforms with AI-powered moderation, coaching, or NPCs.

With its low-latency design, the API is ideal for scenarios where immediacy and natural dialogue are critical.

How the OpenAI Real Time Voice API Works

Under the Hood: Speech-to-Speech Pipeline

The OpenAI Real Time Voice API operates over a secure WebSocket connection, allowing continuous bi-directional streaming of audio and text data. Here's how the pipeline functions:

- WebSocket interface: Establishes a persistent connection between client and server for real-time communication.

- Audio streaming: Users stream microphone input to the API, which processes, understands, and generates audio responses in real time.

- Function calling and multimodal capabilities: The API can trigger external functions (e.g., database lookups, backend actions) and handle text, images, or structured data in context.
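The function-calling step above can be sketched as follows. This is a minimal sketch, not a definitive implementation: it assumes the beta Realtime event names (`session.update`, `conversation.item.create`, `response.create`) and that a finished function call arrives as an item with `name`, `call_id`, and `arguments`. The `lookup_order` tool and its canned result are hypothetical.

```javascript
// Hypothetical tool registry: maps tool names to local handlers.
// A real handler would query a database or call a backend service.
const toolHandlers = {
  lookup_order: async ({ orderId }) => ({ orderId, status: "shipped" }),
};

// Build the event that advertises the tool to the model.
// Assumption: the event shape follows the beta Realtime API.
function buildSessionUpdate() {
  return {
    type: "session.update",
    session: {
      tools: [
        {
          type: "function",
          name: "lookup_order",
          description: "Look up the shipping status of an order.",
          parameters: {
            type: "object",
            properties: { orderId: { type: "string" } },
            required: ["orderId"],
          },
        },
      ],
      tool_choice: "auto",
    },
  };
}

// Run the requested tool and return the events to send back:
// first the tool output, then a request for the model to respond.
async function handleFunctionCall(item) {
  const handler = toolHandlers[item.name];
  if (!handler) throw new Error(`Unknown tool: ${item.name}`);
  const result = await handler(JSON.parse(item.arguments));
  return [
    {
      type: "conversation.item.create",
      item: {
        type: "function_call_output",
        call_id: item.call_id,
        output: JSON.stringify(result),
      },
    },
    { type: "response.create" },
  ];
}
```

Each returned event would be serialized with `JSON.stringify` and sent over the open WebSocket.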

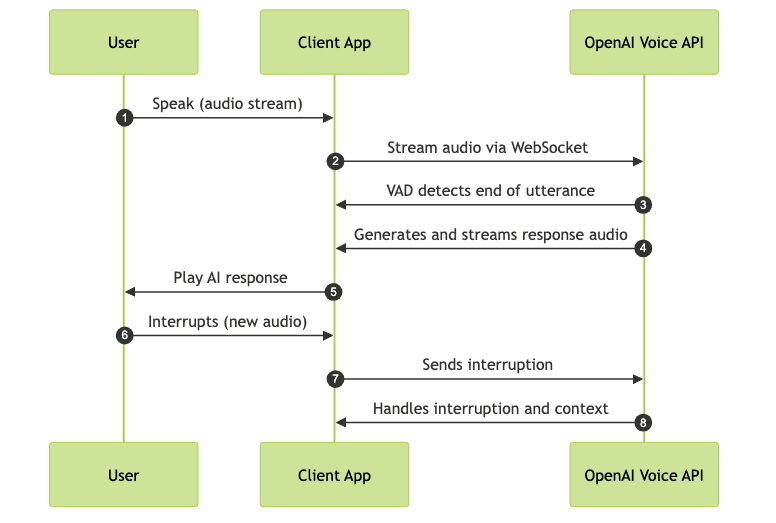

Voice Activity Detection (VAD) and Turn-Taking

Voice Activity Detection (VAD) is integral to fostering natural conversations. VAD algorithms detect when a user starts and stops speaking, allowing the system to:

- Avoid talking over users

- Manage interruptions and maintain context

- Seamlessly hand off between user and AI turns

This approach enables more human-like interaction, reducing the frustration of lag or missed cues in voice apps.
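To make the idea of turn detection concrete, here is a toy energy-based detector. It only illustrates the concept and is not the detector the API uses; the `threshold` and `hangoverFrames` values are arbitrary assumptions that would need tuning against real audio.

```javascript
// Root-mean-square energy of one frame of samples in [-1, 1].
function rms(frame) {
  let sum = 0;
  for (const s of frame) sum += s * s;
  return Math.sqrt(sum / frame.length);
}

// Returns a function that consumes audio frames and reports turn changes:
// "speech_started" when a frame crosses the threshold, "speech_stopped"
// after `hangoverFrames` consecutive quiet frames, and null otherwise.
function createVad({ threshold = 0.02, hangoverFrames = 3 } = {}) {
  let speaking = false;
  let quietFrames = 0;
  return function process(frame) {
    const loud = rms(frame) >= threshold;
    if (loud) {
      quietFrames = 0;
      if (!speaking) {
        speaking = true;
        return "speech_started";
      }
    } else if (speaking) {
      quietFrames += 1;
      if (quietFrames >= hangoverFrames) {
        speaking = false;
        quietFrames = 0;
        return "speech_stopped";
      }
    }
    return null;
  };
}
```

The hangover count is what keeps a short pause inside a sentence from ending the user's turn too early.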

Setting Up the OpenAI Real Time Voice API

Requirements and Authentication

To use the OpenAI Real Time Voice API, you need:

- OpenAI API key: Sign up at OpenAI, subscribe to a paid tier, and generate keys.

- Billing setup: Real time voice is not included in free tiers.

- Azure integration: Optionally, use Azure OpenAI Service for enterprise deployment.

- Model deployment: Select GPT-4o or its variants; availability may vary by region.
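Once you have a key, a common pattern is to read it from the environment rather than hard-coding it. A minimal sketch, assuming the key is stored in an `OPENAI_API_KEY` environment variable; `buildAuthHeaders` is a hypothetical helper name.

```javascript
// Build the Authorization header for the connection handshake.
// Fails fast with a clear message if the key is missing.
function buildAuthHeaders(env = process.env) {
  const key = env.OPENAI_API_KEY;
  if (!key) {
    throw new Error("OPENAI_API_KEY is not set");
  }
  return { Authorization: `Bearer ${key}` };
}
```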

Quickstart: Connecting via WebSocket (with Code Example)

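For low-latency streaming, WebSocket is the preferred protocol. Here's how to connect with Node.js using the `ws` library:

```javascript
const WebSocket = require("ws");

// Endpoint, model name, and beta header follow OpenAI's Realtime API docs
// at the time of writing; check the current docs before relying on them.
const ws = new WebSocket(
  "wss://api.openai.com/v1/realtime?model=gpt-4o-realtime-preview",
  {
    headers: {
      Authorization: `Bearer ${process.env.OPENAI_API_KEY}`,
      "OpenAI-Beta": "realtime=v1",
    },
  }
);

ws.on("open", () => {
  // Send initial session configuration
  ws.send(JSON.stringify({
    type: "session.update",
    session: {
      modalities: ["text", "audio"],
      input_audio_format: "pcm16",
      output_audio_format: "pcm16",
    },
  }));

  // Begin streaming audio with input_audio_buffer.append events
});

ws.on("message", (data) => {
  // Server events arrive as JSON; audio is base64-encoded inside them
  const event = JSON.parse(data.toString());
  console.log(event.type);
});

ws.on("error", (err) => console.error("WebSocket error:", err.message));
```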

For full-featured clients and utilities, refer to OpenAI's official SDKs or community libraries.
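Handling the incoming messages usually means dispatching on the event type. The sketch below is one possible shape, assuming the beta event names `response.audio.delta` and `response.audio_transcript.delta` (both carrying a `delta` field) and an `error` event with an `error.message` field; `createEventHandler` is a hypothetical helper.

```javascript
// Collects audio bytes and transcript text from a stream of JSON server events.
function createEventHandler() {
  const audioChunks = [];
  let transcript = "";
  return {
    // `raw` may be a string or a Buffer, as delivered by the ws library.
    handle(raw) {
      const event = JSON.parse(raw.toString());
      switch (event.type) {
        case "response.audio.delta":
          // Audio arrives base64-encoded; decode it to raw bytes.
          audioChunks.push(Buffer.from(event.delta, "base64"));
          break;
        case "response.audio_transcript.delta":
          transcript += event.delta;
          break;
        case "error":
          throw new Error(event.error?.message ?? "Unknown server error");
        default:
          break; // Ignore events this sketch does not care about.
      }
      return event.type;
    },
    audio: () => Buffer.concat(audioChunks),
    transcript: () => transcript,
  };
}
```

With the `ws` client, this could be wired up as `ws.on("message", (data) => handler.handle(data))`.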

Implementing a Voice Assistant with OpenAI Realtime API

Building with WebRTC for Live Audio Streaming

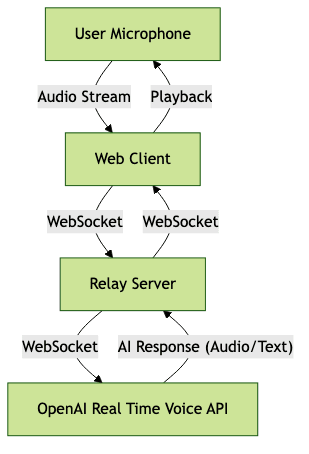

WebRTC is often used alongside the OpenAI API to capture microphone input, manage peer connections, and relay audio for processing. The common architecture is:

- Capture audio: Use the Web Audio API or `getUserMedia` to access the microphone.

- Establish peer connection: Set up a WebRTC peer connection for direct streaming (if needed for client-client or multi-user scenarios).

- Send/receive audio and text: Forward captured audio to a relay server, then to the OpenAI API. Receive and playback AI-generated audio responses.

- UI considerations: Frameworks like React or Vue can help manage state, user cues (e.g., "Listening..."), and playback controls.
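The relay step in this architecture could look roughly like the following. It is a sketch under stated assumptions: the browser sends raw PCM16 frames as binary WebSocket messages, and the API accepts `input_audio_buffer.append` events carrying base64 audio (the beta event name). `toAppendEvent` and `wireUpstream` are hypothetical helpers, and the sockets can be anything exposing the `ws`-style `on("message", (data, isBinary) => ...)` and `send` methods.

```javascript
// Wrap one chunk of raw PCM16 audio in a JSON event for the API.
function toAppendEvent(pcmChunk) {
  if (pcmChunk.length % 2 !== 0) {
    throw new Error("PCM16 chunk must contain whole 16-bit samples");
  }
  return JSON.stringify({
    type: "input_audio_buffer.append",
    audio: Buffer.from(pcmChunk).toString("base64"),
  });
}

// Forward binary audio frames from the browser socket to the upstream socket.
// Text frames are ignored here; a fuller relay would validate and route them.
function wireUpstream(client, upstream) {
  client.on("message", (data, isBinary) => {
    if (isBinary) upstream.send(toAppendEvent(data));
  });
}
```

Keeping the translation in the relay means the browser never sees the API key or the upstream event format.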

Code Snippet: Streaming Audio via WebSocket

Here's a simplified browser-side example using native WebSocket and the Web Audio API:

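```javascript
// Hypothetical relay URL: the relay adds the API key and forwards audio to OpenAI.
const ws = new WebSocket("wss://your-relay.example.com/realtime");
ws.binaryType = "arraybuffer";

ws.onopen = async () => {
  const stream = await navigator.mediaDevices.getUserMedia({ audio: true });
  const AudioContextClass = window.AudioContext || window.webkitAudioContext;
  // Assumption: 24 kHz matches the API's pcm16 input format; verify in the docs.
  const audioContext = new AudioContextClass({ sampleRate: 24000 });
  const source = audioContext.createMediaStreamSource(stream);
  // ScriptProcessorNode is deprecated; AudioWorklet is the modern replacement.
  const processor = audioContext.createScriptProcessor(4096, 1, 1);
  source.connect(processor);
  processor.connect(audioContext.destination);

  processor.onaudioprocess = (e) => {
    const floats = e.inputBuffer.getChannelData(0);
    // Convert Float32 samples in [-1, 1] to 16-bit PCM
    const pcm16 = new Int16Array(floats.length);
    for (let i = 0; i < floats.length; i++) {
      const s = Math.max(-1, Math.min(1, floats[i]));
      pcm16[i] = s < 0 ? s * 0x8000 : s * 0x7fff;
    }
    if (ws.readyState === WebSocket.OPEN) ws.send(pcm16.buffer);
  };
};

ws.onmessage = (event) => {
  // Handle received AI audio data and play it back
};
```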
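For the playback side, the received bytes need to be turned back into samples the Web Audio API can play. A small sketch, assuming the audio arrives as 16-bit little-endian mono PCM; `pcm16ToFloat32` is a hypothetical helper name.

```javascript
// Decode 16-bit little-endian PCM bytes into floats in [-1, 1).
// A trailing odd byte, if any, is ignored.
function pcm16ToFloat32(bytes) {
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  const out = new Float32Array(Math.floor(bytes.byteLength / 2));
  for (let i = 0; i < out.length; i++) {
    out[i] = view.getInt16(i * 2, true) / 0x8000;
  }
  return out;
}
```

In the browser, the resulting samples can be copied into an `AudioBuffer` with `copyToChannel` and played through an `AudioBufferSourceNode`; the buffer's sample rate should match whatever rate the audio was generated at.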

Best Practices and Security

- API key management: Never expose your API key in frontend code. Use a relay server to inject credentials securely.

- Relay server recommendations: All traffic should be proxied through a secure backend to prevent credential leaks and enable rate limiting.

- Handling interruptions and errors: Listen for VAD events, user interruptions, or dropped connections. Implement retry and graceful fallback logic.

- Fallback logic: Always provide alternative input methods (e.g., text) if voice fails.
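The retry advice above is often implemented with exponential backoff. The following is a sketch under assumed defaults (500 ms base delay, 15 s cap, five attempts); `backoffDelay` and `connectWithRetry` are hypothetical helpers, and `connect` stands for whatever async function opens your connection.

```javascript
// Exponential backoff with a cap and "full jitter":
// the delay is picked uniformly between 0 and the capped exponential value.
function backoffDelay(attempt, { baseMs = 500, maxMs = 15000, jitter = Math.random } = {}) {
  const capped = Math.min(maxMs, baseMs * 2 ** attempt);
  return Math.floor(jitter() * capped);
}

const defaultSleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Call `connect` until it succeeds or the attempts run out.
// Rethrows the last error so the caller can fall back (for example, to text input).
async function connectWithRetry(connect, { maxAttempts = 5, sleep = defaultSleep, ...backoff } = {}) {
  let lastError;
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    try {
      return await connect();
    } catch (err) {
      lastError = err;
      // No point waiting after the final failed attempt.
      if (attempt < maxAttempts - 1) await sleep(backoffDelay(attempt, backoff));
    }
  }
  throw lastError;
}
```

Jitter spreads reconnect attempts out so that many clients dropped at the same moment do not all retry in lockstep.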

Pricing, Limitations, and Considerations

OpenAI's Real Time Voice API is billed by token usage, with audio tokens priced separately from (and higher than) text tokens, so cost scales with the amount of audio processed. Rates vary by model (e.g., GPT-4o vs. GPT-4o-mini). The API is currently in beta, so features, pricing, and stability may change. Consider these points:

- Latency vs. expressiveness: Lower latency may reduce the expressiveness of AI-generated speech. Tune settings for your use case.

- Regional availability: Not all models or voices are available in every region due to licensing and infrastructure constraints.

- Production caveats: As a preview product, expect occasional outages or API changes. Test thoroughly before deploying mission-critical voice apps.

OpenAI Real Time Voice API: Future Trends

OpenAI is rapidly evolving its voice models. Expect future improvements such as richer emotion modeling, accent and dialect support, and seamless multimodal experiences (combining voice, text, and vision). The API will power next-generation voice-first interfaces for business, education, and entertainment.

Conclusion

The OpenAI Real Time Voice API offers developers unprecedented access to low-latency, conversational AI with speech-in and speech-out capabilities. From customer support bots to real-time translation and gaming, the applications are vast. By leveraging this API, you can build more natural, accessible, and engaging user experiences. Experiment today and be part of the voice-first future.


FAQ