Introduction to OpenAI Speech to Text

OpenAI speech to text technology is changing how developers build voice-enabled applications. Its neural models convert audio into accurate, readable text, a capability behind meeting transcriptions, live captions, automated customer support, and voice interfaces. Two primary offerings, the OpenAI Whisper model and the OpenAI STT (Speech-to-Text) APIs, cover audio transcription, real-time streaming, and multilingual support. In 2025, demand for reliable speech recognition in products keeps growing, which makes OpenAI speech to text a foundational tool for developers and businesses alike.

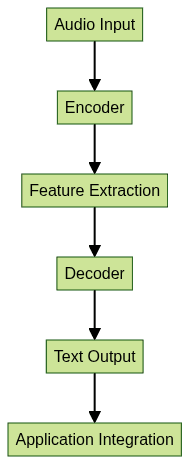

How OpenAI Speech to Text Works

At its core, OpenAI speech to text relies on deep learning models such as Whisper and STT, trained on large datasets covering multiple languages, accents, and real-world noise conditions. Whisper, for example, uses an encoder-decoder Transformer architecture: the audio is first converted into a log-Mel spectrogram, the encoder turns that spectrogram into high-dimensional features, and the decoder generates the corresponding text one token at a time. Training on such varied data is what lets the models handle noisy environments and different dialects, in both real-time and batch transcription.
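To make the audio-to-features step concrete, here is a small arithmetic sketch. It assumes the front-end settings published for the open-source Whisper model (16 kHz audio, 30-second windows, a 10 ms hop between spectrogram frames). The constant and function names are illustrative, and other OpenAI STT models may use different values.

```python
import math

# Assumed values, taken from the published open-source Whisper configuration.
SAMPLE_RATE_HZ = 16_000   # audio is resampled to 16 kHz
WINDOW_SECONDS = 30       # the encoder sees fixed 30-second windows
HOP_SAMPLES = 160         # 10 ms between consecutive spectrogram frames


def frames_per_window() -> int:
    """Number of spectrogram frames the encoder receives per window."""
    return SAMPLE_RATE_HZ * WINDOW_SECONDS // HOP_SAMPLES


def windows_needed(duration_seconds: float) -> int:
    """How many 30-second windows are needed to cover a recording."""
    if duration_seconds <= 0:
        return 0
    return math.ceil(duration_seconds / WINDOW_SECONDS)


print(frames_per_window())    # 3000
print(windows_needed(95.0))   # 4
```

A 95-second recording therefore spans four windows, which is one reason long files take noticeably longer to transcribe than short clips.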

OpenAI Whisper, in particular, is noted for its multilingual capabilities and robustness, while the STT API is optimized for scalable, production-grade transcription and streaming. Developers can access these tools via REST APIs, WebSockets for real-time transcription, or SDKs for seamless integration. For those looking to build interactive voice-enabled experiences, integrating a Voice SDK can further streamline the development of live audio rooms and real-time communication features.

Key Features of OpenAI Speech to Text

- Multilingual Support: Transcribe audio in dozens of languages, making global applications accessible.

- Robustness to Noise and Accents: Designed for real-world audio, including background noise and diverse speaker accents.

- Streaming and Batch Modes: Choose between real-time streaming (WebSockets) and batch processing (REST API/file upload) for flexible integration.

- SDK Integration: Developers can enhance their applications by embedding a Voice SDK for seamless voice communication and transcription.

Setting Up OpenAI Speech to Text

Before you can use OpenAI speech to text, ensure you have the following prerequisites:

- A valid OpenAI API key (from https://platform.openai.com)

- Python 3.8+ installed

- Required libraries (openai, websockets, sounddevice for microphone input, etc.)

If you're building Python-based applications that require both video and audio communication, consider using a python video and audio calling sdk to simplify integration and accelerate development.

Installation Steps:

- Install the OpenAI Python client and supporting libraries:

```bash
pip install openai websockets sounddevice
```

- Set your OpenAI API key securely, either via environment variable or directly in your code (not recommended for production):

```python
import os

from openai import OpenAI

# Recommended: set OPENAI_API_KEY in your shell or deployment environment,
# then read it here instead of hardcoding the key in source.
client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])
```

- When deploying applications, always secure your API keys using environment variables, secret managers, or encrypted vaults. Never commit keys to source control.
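One way to apply this advice is to fail fast when the key is missing and to avoid printing it in full. The following is a minimal sketch using only the standard library; `load_api_key`, `mask_key`, and `MissingAPIKeyError` are hypothetical names introduced for illustration, not part of the OpenAI client.

```python
import os
from typing import Mapping, Optional


class MissingAPIKeyError(RuntimeError):
    """Raised when the expected environment variable is unset or empty."""


def load_api_key(env_var: str = "OPENAI_API_KEY",
                 environ: Optional[Mapping[str, str]] = None) -> str:
    """Return the API key from the environment, or raise a clear error."""
    env = os.environ if environ is None else environ
    key = env.get(env_var, "").strip()
    if not key:
        raise MissingAPIKeyError(
            f"Set the {env_var} environment variable before starting the app."
        )
    return key


def mask_key(key: str, visible: int = 4) -> str:
    """Mask a key for log output, keeping only the last few characters."""
    if len(key) <= visible:
        return "*" * len(key)
    return "*" * (len(key) - visible) + key[-visible:]


# Example with an explicit mapping instead of the real environment:
demo_key = load_api_key(environ={"OPENAI_API_KEY": "sk-demo-1234"})
print(mask_key(demo_key))  # ********1234
```

Passing the mapping explicitly keeps the helper easy to test; in an application you would simply call `load_api_key()` and hand the result to the client.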

With these steps, your environment is ready for OpenAI speech to text development. For developers looking to add video communication alongside speech-to-text, a Video Calling API can be a valuable addition to your tech stack.

Methods of Transcription with OpenAI Speech to Text

OpenAI speech to text supports multiple transcription workflows, depending on your use case:

File Upload (Blocking & Streaming)

The simplest workflow involves uploading audio files for transcription. This can be done synchronously (waiting for the result) or asynchronously (streaming responses for large files).

Pros:

- Simple integration

- Good for batch processing and offline use cases

Cons:

- Latency for large files

- Not suitable for live, real-time applications

For applications that require embedding video and audio calling features along with transcription, you can embed a video calling SDK directly into your product for a seamless user experience.

Example: Transcribing an audio file

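```python
from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment

with open("audio_sample.wav", "rb") as audio_file:
    # With response_format="text" the API returns the transcript as a plain string
    transcript = client.audio.transcriptions.create(
        model="whisper-1",
        file=audio_file,
        response_format="text",
    )

print(transcript)
```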
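If you transcribe files in several places, it can help to wrap the call in a small function. The sketch below is one possible shape; `transcribe_file` is a hypothetical helper name, and it takes the client as an argument so it can be exercised with a stand-in object. The optional `language` hint is assumed to be an ISO 639-1 code such as "en".

```python
from pathlib import Path
from typing import Any, Optional, Union


def transcribe_file(client: Any,
                    path: Union[str, Path],
                    model: str = "whisper-1",
                    language: Optional[str] = None) -> str:
    """Upload one audio file and return the transcript as plain text.

    `client` is expected to be an `openai.OpenAI` instance (or any object
    exposing the same `audio.transcriptions.create` method).
    """
    request = {"model": model, "response_format": "text"}
    if language is not None:
        request["language"] = language  # optional hint, e.g. "en" or "de"
    with Path(path).open("rb") as audio_file:
        return client.audio.transcriptions.create(file=audio_file, **request)
```

With the real client this would be called as `transcribe_file(OpenAI(), "audio_sample.wav", language="en")`.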

Real-Time Streaming with WebSockets

For live captioning or voice interfaces, WebSocket-based real-time transcription is essential. This allows you to stream audio and receive transcribed text with minimal delay.

Use Cases:

- Live events

- Interactive voice applications

If your use case involves large-scale broadcasts or interactive sessions, integrating a Live Streaming API SDK can help you deliver real-time audio and video streams with integrated speech-to-text capabilities.

Limitations:

- Session timeouts (varies by plan)

- Real-time only; not ideal for large pre-recorded files

Example: Streaming transcription with WebSockets

```python
import asyncio
import os

import numpy as np
import websockets


async def transcribe_audio():
    # The endpoint and raw-bytes framing are kept from this article as a
    # simplified illustration; confirm the actual URL and message format
    # in OpenAI's documentation before relying on them.
    uri = "wss://api.openai.com/v1/audio/transcriptions"
    headers = {"Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}"}
    # Recent websockets releases take additional_headers; older ones call it extra_headers
    async with websockets.connect(uri, additional_headers=headers) as ws:
        # Send audio chunks (this is a simplified example)
        # Implement proper audio streaming in production
        for _ in range(100):
            # Placeholder: one second of random float32 samples, not real speech
            audio_chunk = np.random.rand(16000).astype(np.float32).tobytes()
            await ws.send(audio_chunk)
            result = await ws.recv()
            print(result)


asyncio.run(transcribe_audio())
```
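The example above sends random placeholder samples. For real audio, a streaming client usually converts captured samples to a fixed PCM format and sends them in small, evenly sized chunks. The sketch below shows that preparation step with the standard library only. The 16 kHz sample rate, 16-bit mono encoding, and 100 ms chunk size are assumptions for illustration, so check which format the service you connect to expects; `float_to_pcm16` and `iter_chunks` are hypothetical helper names.

```python
import struct
from typing import Iterator, Sequence


def float_to_pcm16(samples: Sequence[float]) -> bytes:
    """Convert float samples in [-1.0, 1.0] to little-endian 16-bit PCM."""
    ints = [int(round(max(-1.0, min(1.0, s)) * 32767)) for s in samples]
    return struct.pack(f"<{len(ints)}h", *ints)


def iter_chunks(pcm: bytes,
                sample_rate: int = 16_000,
                chunk_ms: int = 100) -> Iterator[bytes]:
    """Yield consecutive chunks of mono 16-bit PCM, each chunk_ms long."""
    bytes_per_chunk = sample_rate * chunk_ms // 1000 * 2  # 2 bytes per sample
    if bytes_per_chunk <= 0:
        raise ValueError("chunk_ms is too small for this sample rate")
    for start in range(0, len(pcm), bytes_per_chunk):
        yield pcm[start:start + bytes_per_chunk]


one_second = [0.0] * 16_000
chunks = list(iter_chunks(float_to_pcm16(one_second)))
print(len(chunks), len(chunks[0]))  # 10 3200
```

Each yielded chunk could then be passed to `ws.send(...)` in place of the random data; the final chunk may be shorter than the others.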

Using Agents SDK VoicePipeline

For advanced agentic workflows, OpenAI's Agents SDK provides the VoicePipeline, which wraps an agent workflow with speech to text on the input side and text to speech on the output side, enabling structured integration of speech recognition in conversational agents. If you're building interactive voice applications, leveraging a robust Voice SDK can help you implement real-time voice features and enhance user engagement.

Example: Basic VoicePipeline Integration

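```python
import asyncio
import numpy as np
from agents import Agent  # pip install "openai-agents[voice]"
from agents.voice import AudioInput, SingleAgentVoiceWorkflow, VoicePipeline

async def main():
    pipeline = VoicePipeline(workflow=SingleAgentVoiceWorkflow(Agent(name="Assistant")))
    result = await pipeline.run(AudioInput(buffer=np.zeros(24000 * 3, dtype=np.int16)))  # placeholder: 3 s of silence
    async for event in result.stream():  # audio chunks and lifecycle events
        print(event.type)

asyncio.run(main())
```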

This approach simplifies integrating speech recognition into multi-step conversational flows. For telephony-based applications, you might also explore a phone call api to enable seamless voice interactions over traditional phone networks.

OpenAI Whisper: Deep Dive

OpenAI Whisper is an open-source automatic speech recognition (ASR) model, designed for high accuracy and versatility. Whisper stands out for its:

- Multilingual transcription: Supports over 50 languages

- Translation capability: Can translate speech from other supported languages into English

- Noise robustness: Handles poor-quality audio and varied accents
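Since Whisper is available as open source, it can also be run on your own machine. The sketch below assumes the `openai-whisper` package is installed (it also needs `ffmpeg` on the system). `transcribe_locally` is a hypothetical wrapper name, and the import sits inside the function so the module can be loaded even before the package is installed.

```python
def transcribe_locally(path: str,
                       model_name: str = "base",
                       translate_to_english: bool = False) -> str:
    """Transcribe (or translate into English) an audio file with local Whisper."""
    import whisper  # assumption: installed via `pip install openai-whisper`

    model = whisper.load_model(model_name)  # downloads the weights on first use
    task = "translate" if translate_to_english else "transcribe"
    result = model.transcribe(path, task=task)
    return result["text"].strip()
```

A call such as `transcribe_locally("audio_sample.wav")` returns the transcript as a string; passing `translate_to_english=True` asks the model for an English translation instead. Larger model names trade speed for accuracy.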

Developers can use Whisper via the OpenAI API or run it locally using the official open-source implementation. This makes it ideal for privacy-conscious or offline applications. For developers seeking to build live audio rooms or conversational platforms, integrating a Voice SDK can further enhance the capabilities of your ASR-powered solutions.

Best Practices for OpenAI Speech to Text

- Audio Quality: Use high-quality microphones and minimize background noise for best results.

- Chunking Audio: For long recordings, split audio into manageable segments to improve performance and reliability.

- Partial Results: When using streaming, display partial transcriptions to users for better UX.

- Security: Always secure API keys and handle user audio data per relevant privacy regulations (GDPR, CCPA).

Following these best practices ensures your OpenAI speech to text integration is robust, secure, and user-friendly.
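The chunking advice can be sketched as a small planning function that decides where each segment starts and ends. The segment length and the short overlap (so that words at a boundary are not cut in half) are assumptions chosen for illustration, and `segment_bounds` is a hypothetical helper name; the actual audio slicing would be done with whichever audio library you already use.

```python
from typing import List, Tuple


def segment_bounds(total_seconds: float,
                   segment_seconds: float = 600.0,
                   overlap_seconds: float = 2.0) -> List[Tuple[float, float]]:
    """Return (start, end) times that cover a recording with a small overlap."""
    if overlap_seconds < 0 or segment_seconds <= overlap_seconds:
        raise ValueError("segment_seconds must be larger than overlap_seconds")
    bounds: List[Tuple[float, float]] = []
    start = 0.0
    while start < total_seconds:
        end = min(start + segment_seconds, total_seconds)
        bounds.append((start, end))
        if end >= total_seconds:
            break
        start = end - overlap_seconds  # step back slightly to overlap segments
    return bounds


print(segment_bounds(1500.0))
# [(0.0, 600.0), (598.0, 1198.0), (1196.0, 1500.0)]
```

When stitching the transcripts back together, the overlapping seconds will appear twice, so some de-duplication at the boundaries is usually needed.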

Use Cases and Real-World Applications

OpenAI speech to text unlocks numerous real-world scenarios:

- Live Captions: Power accessible live events and media with accurate captions

- Meeting Transcriptions: Automate note-taking for meetings, interviews, and lectures

- Voice Interfaces: Enable voice controls in mobile apps, web platforms, and IoT devices

- Integration: Seamlessly embed transcription into SaaS products, CRM systems, and workflow automation tools

For developers looking to quickly add live audio features, a Voice SDK provides a straightforward path to real-time voice integration.

Limitations and Considerations

While OpenAI speech to text is powerful, be aware of:

- File size and session limits: Large files may hit API limits (the file transcription endpoint caps uploads at 25 MB) or session timeouts

- Supported formats: Ensure your audio is in a compatible format (e.g., WAV, MP3, FLAC)

- Model limitations: Accents, rare languages, or specific jargon may still pose challenges
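Some of these limits can be checked before any audio leaves the machine. The sketch below is a hypothetical pre-flight check: the extension list only mirrors the formats named above and the size cap is an assumed value, so both should be adjusted to match the current API documentation. `preflight_problems` is an illustrative name, not an OpenAI function.

```python
from pathlib import Path
from typing import List, Union

# Assumptions for illustration; adjust both to the current API documentation.
ALLOWED_EXTENSIONS = {".wav", ".mp3", ".flac"}   # formats named in this article
MAX_UPLOAD_BYTES = 25 * 1024 * 1024              # assumed per-file upload cap


def preflight_problems(path: Union[str, Path],
                       max_bytes: int = MAX_UPLOAD_BYTES) -> List[str]:
    """Return a list of reasons the file should not be uploaded (empty if OK)."""
    p = Path(path)
    if not p.is_file():
        return [f"{p} does not exist or is not a file"]
    problems: List[str] = []
    if p.suffix.lower() not in ALLOWED_EXTENSIONS:
        problems.append(f"unsupported extension {p.suffix!r}")
    size = p.stat().st_size
    if size == 0:
        problems.append("file is empty")
    elif size > max_bytes:
        problems.append(f"file is {size} bytes, above the {max_bytes}-byte limit")
    return problems
```

An empty list means the file passed every check; otherwise the messages can be shown to the user or logged before falling back to chunking or re-encoding.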

Conclusion

OpenAI speech to text empowers developers in 2025 to build smarter, more accessible, and efficient applications. Its combination of accuracy, multilingual support, and flexible APIs makes it a top choice for speech recognition solutions. If you're ready to enhance your product with cutting-edge voice and video features, try it for free and start building today!


FAQ