Introduction to Realistic Text to Speech (TTS)

Realistic text to speech (TTS) refers to AI technology that transforms written text into lifelike, natural-sounding speech. Unlike early synthetic voices, modern TTS can convincingly replicate human nuances, intonation, and emotional expression. This leap in audio realism is reshaping accessibility, content creation, and user experiences across digital platforms.

Over the past decade, TTS technology has evolved from monotone, robotic output to expressive AI voices that can be hard to distinguish from human speakers. Demand for realistic TTS is surging, driven by accessibility needs, global content localization, and the rise of voice-driven applications in business, entertainment, and education. In this guide, we explore how these systems work, their key features, popular platforms, implementation strategies, ethical issues, and future trends for 2025 and beyond.

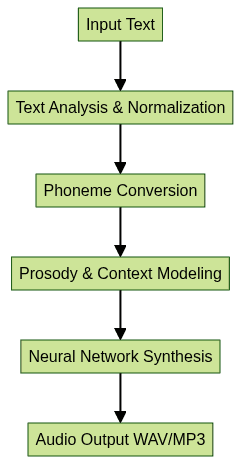

How Realistic Text to Speech Works

At the heart of realistic TTS are deep neural networks. Acoustic models such as Tacotron (autoregressive) and FastSpeech (non-autoregressive) learn, from large datasets of recorded speech, to map text or phonemes, together with intonation and context, to intermediate acoustic features such as mel spectrograms. A neural vocoder such as WaveNet then turns those features into an audio waveform. The result is natural-sounding TTS that adapts its delivery to sentence structure, punctuation, and even emotional cues.
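One concrete piece of this mapping is how a non-autoregressive model like FastSpeech aligns phonemes with audio frames: a duration predictor estimates how many spectrogram frames each phoneme lasts, and a length regulator repeats each phoneme's hidden vector that many times. The sketch below uses plain Python lists in place of real encoder outputs; the vectors, the durations, and the 22,050 Hz sample rate with a 256-sample hop are illustrative assumptions, not values from a specific model.

```python
from typing import List

def length_regulate(hidden: List[List[float]], durations: List[int]) -> List[List[float]]:
    """Expand per-phoneme hidden vectors into per-frame vectors, FastSpeech-style."""
    if len(hidden) != len(durations):
        raise ValueError("need exactly one duration per phoneme")
    frames: List[List[float]] = []
    for vec, d in zip(hidden, durations):
        if d < 0:
            raise ValueError("durations must be non-negative")
        frames.extend(list(vec) for _ in range(d))  # repeat this phoneme's vector d times
    return frames

def frames_to_seconds(n_frames: int, hop_length: int = 256, sample_rate: int = 22050) -> float:
    """Each mel frame covers hop_length samples of the waveform the vocoder produces."""
    return n_frames * hop_length / sample_rate

# Toy 2-dimensional encoder outputs for the phonemes of "hello" (/h/ /ə/ /l/ /oʊ/)
phoneme_vectors = [[0.1, 0.2], [0.3, 0.1], [0.5, 0.4], [0.2, 0.9]]
predicted_durations = [3, 2, 4, 6]  # frames per phoneme (made-up values)

mel_input = length_regulate(phoneme_vectors, predicted_durations)
print(len(mel_input))                               # 15
print(round(frames_to_seconds(len(mel_input)), 3))  # 0.174
```

Because output length is fixed by the durations, scaling them (for example, multiplying every duration by 1.2 to make speech about 20% longer) is how FastSpeech-style models expose speaking-rate control.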

Modern TTS engines are context-aware and capable of infusing speech with emotion. They analyze the sentiment and context of text input, allowing the output to reflect happiness, sadness, excitement, or neutrality. This is crucial for creating expressive AI voices that engage listeners and convey nuanced meaning.
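Production engines learn these cues implicitly from data, but the idea of deriving an emotion label from text before synthesis can be illustrated with a deliberately tiny rule-based classifier. The cue-word lists, the label set, and the `detect_emotion` helper are hypothetical; a real system would use a trained sentiment model, and the label would feed whatever emotion or style parameter your TTS provider exposes.

```python
import re

# Hypothetical cue words; a real system would use a trained sentiment classifier
POSITIVE = {"great", "love", "amazing", "congratulations", "wonderful", "happy"}
NEGATIVE = {"sorry", "unfortunately", "sad", "loss", "regret", "failed"}

def detect_emotion(text: str) -> str:
    """Return a coarse label: 'excited', 'happy', 'sad', or 'neutral'."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        # An exclamation mark pushes positive text from 'happy' to 'excited'
        return "excited" if text.rstrip().endswith("!") else "happy"
    if score < 0:
        return "sad"
    return "neutral"

for sentence in [
    "Congratulations, you won the grand prize!",
    "We are sorry to hear about your loss.",
    "The meeting starts at 10 a.m.",
]:
    print(f"{detect_emotion(sentence):8} {sentence}")
```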

For developers, realistic TTS is easy to integrate through APIs. Here’s a simple Python example using the ElevenLabs text-to-speech API:

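```python
import requests

def synthesize_speech(text, voice_id, api_key, out_path="output.mp3"):
    """Send text to the ElevenLabs text-to-speech endpoint and save the audio."""
    url = f"https://api.elevenlabs.io/v1/text-to-speech/{voice_id}"
    headers = {
        "xi-api-key": api_key,
        "Content-Type": "application/json",
        "Accept": "audio/mpeg",  # the default response format is MP3, not WAV
    }
    payload = {"text": text, "voice_settings": {"stability": 0.7, "similarity_boost": 0.8}}
    response = requests.post(url, headers=headers, json=payload, timeout=30)
    response.raise_for_status()  # fail loudly instead of saving an error body as audio
    with open(out_path, "wb") as f:
        f.write(response.content)

# The URL expects a voice ID from your voice library, not a display name such as "Rachel"
synthesize_speech("Realistic text to speech brings your words to life!", "YOUR_VOICE_ID", "YOUR_API_KEY")
```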

Developers looking to add interactive voice features to their applications can also benefit from solutions like a Voice SDK, which streamlines the integration of real-time audio capabilities alongside TTS.

Evolution from Robotic to Realistic Voices

Early TTS systems relied on concatenative synthesis or formant-based methods, resulting in robotic, flat speech. With neural networks, TTS now produces outputs rich in inflection, timing, and prosody. Subtle pauses, stress, and changes in pitch give today’s voices a human touch, making TTS viable for everything from audiobooks to virtual assistants.

Key Features of Modern Realistic TTS Solutions

Realistic text to speech platforms in 2025 offer a suite of advanced capabilities:

- Natural Intonation and Cadence: Modern engines deliver speech with authentic pacing, stress, and rhythm, closely mimicking human conversation.

- Voice Customization and Cloning: Users can clone voices from a few minutes of target speech or create custom voices for brands, characters, or accessibility.

- Multilingual & Multicultural Support: Ultra-realistic TTS solutions support dozens of languages, regional accents, and code-switching within sentences.

- Real-Time Synthesis & Streaming: State-of-the-art systems enable low-latency, streaming synthesis for live applications like customer support or gaming.

- Accessibility Benefits: Realistic TTS powers screen readers, learning tools, and assistive technology, opening digital content to a broader audience.
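Low-latency streaming usually depends on not waiting for the whole script: the client splits text into short chunks and requests audio for the first chunk while the rest is queued, so playback can start early. A minimal sketch of the chunking side, assuming sentence boundaries are acceptable split points and a hypothetical character budget per request; the synthesis call itself is left to whichever streaming API you use.

```python
import re
from typing import Iterator

def chunk_for_streaming(text: str, max_chars: int = 200) -> Iterator[str]:
    """Group whole sentences into chunks of at most max_chars (a longer single sentence stays whole)."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    chunk = ""
    for sentence in sentences:
        candidate = f"{chunk} {sentence}".strip()
        if chunk and len(candidate) > max_chars:
            yield chunk          # ready to send to the TTS API
            chunk = sentence
        else:
            chunk = candidate
    if chunk:
        yield chunk

script = "Hello there! Your order has shipped. It should arrive on Friday. Thanks for your patience."
for i, piece in enumerate(chunk_for_streaming(script, max_chars=40)):
    print(i, piece)  # request audio for chunk 0 first so playback can begin immediately
```

Sentence-aligned chunks keep prosody natural within each request; smaller budgets cut time-to-first-audio at the cost of more API calls.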

For applications that require seamless communication, integrating a phone call API can further enhance the user experience by enabling direct voice interactions powered by TTS.

Emotional & Contextual Delivery

AI-driven TTS doesn’t just read words; it interprets intent. By analyzing sentence structure, punctuation, and sentiment, TTS can deliver excitement in an announcement, empathy in customer service, or neutrality in news reading. This emotional awareness enhances user experience and engagement, making TTS ideal for dynamic, interactive applications.
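Several cloud TTS services accept SSML (the W3C Speech Synthesis Markup Language), which lets you make punctuation and emotion cues explicit instead of leaving them entirely to the model. The sketch below is a hypothetical helper that wraps each sentence in a `<prosody>` element chosen from an emotion preset and adds a `<break>` pause after it; the preset values are assumptions, and supported tags and attributes vary by provider.

```python
import re
from xml.sax.saxutils import escape

# Hypothetical delivery presets expressed as SSML prosody attributes
PRESETS = {
    "excited": {"rate": "110%", "pitch": "+15%"},
    "empathetic": {"rate": "90%", "pitch": "-5%"},
    "neutral": {"rate": "medium", "pitch": "medium"},
}

def to_ssml(text: str, emotion: str = "neutral", pause_ms: int = 300) -> str:
    """Wrap each sentence in a <prosody> tag and follow it with a pause."""
    preset = PRESETS.get(emotion, PRESETS["neutral"])
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    parts = [
        f'<prosody rate="{preset["rate"]}" pitch="{preset["pitch"]}">{escape(s)}</prosody>'
        f'<break time="{pause_ms}ms"/>'
        for s in sentences
    ]
    return "<speak>" + "".join(parts) + "</speak>"

print(to_ssml("Big news! Our new app is live.", emotion="excited"))
```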

If your project also involves video communication, consider leveraging a Video Calling API to combine realistic TTS with high-quality video calls for a fully immersive experience.

Popular Realistic Text to Speech Platforms

Several platforms lead the 2025 landscape for AI voice generation and professional voiceover services:

- ElevenLabs: Renowned for ultra-realistic TTS and advanced voice cloning. Supports multi-emotional delivery, real-time streaming, and a rich API for developers. Pricing: Free tier for basic usage, paid plans from $5/month.

- OctaveTTS: Focuses on multilingual, multicultural TTS with expressive AI voices. Offers robust developer SDKs and context-aware emotion modeling. Pricing: Usage-based model, with enterprise support.

- zVoice AI: Specializes in high-fidelity, customizable voices for entertainment and gaming. Features include dynamic pitch control and audio effects. Pricing: Pay-as-you-go API with volume discounts.

- Nari Dia: A popular choice among content creators for professional voiceover AI. Provides simple web and API interfaces, extensive voice library, and voice cloning. Pricing: Subscription-based, starting at $10/month.

- Natural TTS Labs: Targets businesses with scalable, secure TTS for customer service, accessibility, and internationalization. Standout features: GDPR compliance, private voice deployments. Pricing: Custom enterprise plans.

- Minimax: Known for real-time speech synthesis and integration with IoT devices. Supports voice customization and multi-device streaming. Pricing: Tiered, with a free developer sandbox.

For those seeking to add interactive audio rooms or live discussions, a Voice SDK can be a valuable addition to TTS-powered platforms.

Each platform differentiates by voice quality, language support, customization, and integration flexibility. Developers and businesses should evaluate APIs, latency, data privacy, and total cost of ownership when choosing a solution.
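Cost is easier to compare once every plan is reduced to the same unit. A minimal sketch, assuming each plan can be described by a flat monthly fee, an included character quota, and a per-1,000-character overage rate; the plan names and prices below are placeholders, not quotes from the vendors listed above.

```python
from dataclasses import dataclass

@dataclass
class Plan:
    name: str
    monthly_fee: float      # flat subscription cost in USD
    included_chars: int     # characters covered by the fee
    overage_per_1k: float   # USD per 1,000 characters beyond the quota

def monthly_cost(plan: Plan, chars_per_month: int) -> float:
    """Fee plus overage for characters above the included quota."""
    extra = max(0, chars_per_month - plan.included_chars)
    return plan.monthly_fee + extra / 1000 * plan.overage_per_1k

# Placeholder plans for illustration only
plans = [
    Plan("subscription-small", 10.0, 100_000, 0.30),
    Plan("pay-as-you-go", 0.0, 0, 0.20),
]

usage = 250_000  # characters of script per month
for p in sorted(plans, key=lambda p: monthly_cost(p, usage)):
    print(f"{p.name:20} ${monthly_cost(p, usage):8.2f}")
```

This covers only usage fees; a full total-cost comparison would also weigh engineering effort, latency requirements, and compliance work.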

Use Cases: Who Benefits from Realistic TTS?

- Content Creators: Generate podcasts, audiobooks, and videos without recording studios.

- Businesses: Automate customer support, create interactive voice response (IVR), and localize content globally.

- Accessibility: Empower users with visual impairments or reading challenges.

- Entertainment: Power in-game dialogue, NPCs, and dynamic storytelling.

- Language Learning: Offer immersive, multilingual speech experiences for learners worldwide.

Live events and broadcasts can also benefit from a Live Streaming API SDK, which allows creators to deliver real-time, high-quality audio experiences enhanced by realistic TTS.

Implementing Realistic TTS: A Step-by-Step Guide

1. Choose the Right TTS Platform

Assess your needs—voice quality, language support, emotion modeling, and API compatibility. Review platform documentation and pricing to align with your project goals.
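One way to make this assessment explicit is a weighted scoring matrix: rate each candidate on the criteria you care about, weight the criteria by importance, and compare totals. The criteria, weights, and 1-5 scores below are hypothetical inputs you would fill in from your own listening tests and API trials, not ratings of real products.

```python
# Hypothetical weights (summing to 1.0) reflecting project priorities
WEIGHTS = {"voice_quality": 0.4, "languages": 0.25, "emotion_control": 0.15, "api_fit": 0.2}

# Hypothetical 1-5 scores from your own evaluation
candidates = {
    "Platform A": {"voice_quality": 5, "languages": 3, "emotion_control": 4, "api_fit": 4},
    "Platform B": {"voice_quality": 4, "languages": 5, "emotion_control": 3, "api_fit": 5},
}

def weighted_score(scores: dict, weights: dict) -> float:
    """Sum of score * weight; raises KeyError if a criterion was not scored."""
    return sum(scores[criterion] * w for criterion, w in weights.items())

ranking = sorted(candidates, key=lambda name: weighted_score(candidates[name], WEIGHTS), reverse=True)
for name in ranking:
    print(f"{name}: {weighted_score(candidates[name], WEIGHTS):.2f}")
```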

If your application requires robust audio and video integration, you might want to explore a Python video and audio calling SDK for seamless development in Python environments.

2. Set Up Your Project

Register for API access and obtain credentials. Most platforms provide SDKs or REST APIs for easy integration into Python, JavaScript, or other environments.
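Rather than hard-coding credentials as the examples in this guide do with YOUR_API_KEY, a common practice is to read them from an environment variable at startup. The variable name `TTS_API_KEY` is an assumption; use whatever name your deployment defines.

```python
import os

def load_api_key(var_name: str = "TTS_API_KEY") -> str:
    """Read an API key from the environment, failing early with a clear message if it is missing."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable before running this script.")
    return key
```

After exporting the variable in your shell or deployment config, call `load_api_key()` and pass the result to your synthesis function, which keeps keys out of source control.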

For developers building communication tools, integrating a phone call API alongside TTS can streamline voice interactions and enhance user engagement.

3. Customize Voices and Parameters

Select or clone voices, adjust parameters like pitch, speed, and emotion. Many platforms allow fine-tuning for unique brand voices or characters.
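Because each platform accepts different parameters and ranges, it helps to validate customization settings in one place before they reach an API. A minimal sketch using a dataclass; the field names, ranges, and emotion labels are hypothetical (ElevenLabs, for instance, uses stability and similarity_boost on a 0-1 scale, and pitch or speed controls differ by provider), so map them to your platform's actual fields.

```python
from dataclasses import dataclass, asdict

ALLOWED_EMOTIONS = {"neutral", "happy", "sad", "excited", "empathetic"}  # hypothetical label set

@dataclass(frozen=True)
class VoiceSettings:
    voice: str
    speed: float = 1.0   # 1.0 = normal rate; hypothetical allowed range 0.5-2.0
    pitch: float = 0.0   # semitones from the voice's default; hypothetical range -12 to 12
    emotion: str = "neutral"

    def __post_init__(self):
        if not 0.5 <= self.speed <= 2.0:
            raise ValueError(f"speed {self.speed} outside 0.5-2.0")
        if not -12 <= self.pitch <= 12:
            raise ValueError(f"pitch {self.pitch} outside -12 to 12 semitones")
        if self.emotion not in ALLOWED_EMOTIONS:
            raise ValueError(f"unknown emotion {self.emotion!r}")

    def to_payload(self, text: str) -> dict:
        """Build a generic request body; rename keys to match your provider's API."""
        return {"text": text, **asdict(self)}

brand_voice = VoiceSettings(voice="brand_narrator", speed=0.95, pitch=-1.0, emotion="empathetic")
print(brand_voice.to_payload("Thanks for your patience."))
```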

4. Sample Implementation

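Here’s a generic example using OctaveTTS (Python). The endpoint URL, voice name, and emotion field are illustrative, so confirm them against the provider’s API reference before use: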

```python
import requests

def tts_octave(text, voice, api_key, out_path="octave_output.wav"):
    # Endpoint and payload fields follow this example; verify them in the provider's API docs
    url = "https://api.octavetts.com/v1/synthesize"
    headers = {"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"}
    data = {"text": text, "voice": voice, "emotion": "excited"}
    r = requests.post(url, headers=headers, json=data, timeout=30)
    r.raise_for_status()  # surface HTTP errors instead of silently skipping the write
    with open(out_path, "wb") as f:
        f.write(r.content)
    return out_path

# Usage example
tts_octave("Experience ultra-realistic speech synthesis for your project!", "en_US_female_2", "YOUR_API_KEY")
```
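Both API examples in this guide send a single request. Hosted TTS endpoints can return rate-limit (HTTP 429) or transient 5xx errors under load, so production code usually retries with exponential backoff. A minimal, library-agnostic sketch: `with_retries` and `TransientError` are hypothetical names, and your own request code would raise `TransientError` when it sees a retryable status.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

class TransientError(Exception):
    """Raise this for retryable failures such as HTTP 429 or 503."""

def with_retries(call: Callable[[], T], attempts: int = 4, base_delay: float = 0.5,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Run call(); on TransientError wait base_delay * 2**i plus jitter, then retry."""
    for i in range(attempts):
        try:
            return call()
        except TransientError:
            if i == attempts - 1:
                raise  # out of attempts: propagate the last error
            sleep(base_delay * 2 ** i + random.uniform(0, base_delay))
    raise ValueError("attempts must be at least 1")

# Demo with a stand-in for a network call that fails twice, then succeeds
calls = {"n": 0}

def flaky_synthesis() -> bytes:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("HTTP 429")
    return b"audio-bytes"

delays = []
audio = with_retries(flaky_synthesis, sleep=delays.append)  # record delays instead of sleeping
print(audio, len(delays))
```

In a real request function, raise `TransientError` for 429 and 5xx responses and call `raise_for_status()` for everything else, so client errors such as a bad API key fail immediately instead of being retried.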

For those seeking to enable real-time audio rooms or collaborative voice features, a Voice SDK can be seamlessly integrated with your TTS workflow.

Integration Tips for Developers & Creators

- Embedding in Apps/Websites: Use SDKs or RESTful APIs to trigger TTS on demand, routing calls through your backend so API keys are never exposed in client code. Cache frequent outputs to improve performance.

- Handling Languages & Accents: Structure your UI to allow users to select language, accent, and emotion. Use fallback voices for unsupported combinations. Test for pronunciation accuracy in target markets.
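The caching tip can be as simple as keying stored audio on a hash of everything that affects the output, so an identical request never hits the API twice. A sketch under stated assumptions: `synthesize` is any function you supply that returns audio bytes (for example a thin wrapper around one of the API calls shown earlier), and the cache directory is arbitrary.

```python
import hashlib
import json
import tempfile
from pathlib import Path
from typing import Callable

def cache_key(text: str, voice: str, settings: dict) -> str:
    """Stable SHA-256 over text, voice, and settings (sorted keys, so dict order does not matter)."""
    blob = json.dumps({"text": text, "voice": voice, "settings": settings}, sort_keys=True)
    return hashlib.sha256(blob.encode("utf-8")).hexdigest()

def cached_tts(text: str, voice: str, settings: dict,
               synthesize: Callable[[str, str, dict], bytes],
               cache_dir: Path = Path("tts_cache"), ext: str = "mp3") -> Path:
    """Return the path of cached audio, calling synthesize() only on a cache miss."""
    cache_dir.mkdir(parents=True, exist_ok=True)
    path = cache_dir / f"{cache_key(text, voice, settings)}.{ext}"
    if not path.exists():
        path.write_bytes(synthesize(text, voice, settings))
    return path

calls = []

def fake_synthesize(text, voice, settings):
    calls.append(text)  # stand-in for a real API call
    return b"fake-audio"

with tempfile.TemporaryDirectory() as tmp:
    first = cached_tts("Welcome back!", "narrator", {"speed": 1.0}, fake_synthesize, Path(tmp))
    second = cached_tts("Welcome back!", "narrator", {"speed": 1.0}, fake_synthesize, Path(tmp))
    print(first == second, len(calls))  # True 1
```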

If you want to experiment with these tools and see how they fit your workflow, you can try it for free and start building with advanced TTS and voice capabilities today.

Challenges & Ethical Considerations

As voice cloning and ultra-realistic TTS mature, so do risks:

- Deepfake Risks: Malicious actors can misuse TTS to impersonate individuals, spread misinformation, or conduct fraud. Platforms must implement watermarking and traceability.

- Copyright & Consent: Always obtain explicit consent when cloning voices. Respect intellectual property rights for voice data, especially for commercial use.

- AI Safety & Responsible Use: Deploy TTS responsibly by monitoring outputs, preventing abusive scenarios (e.g., harassment, fake news), and adhering to platform usage policies and legal frameworks. Organizations should establish clear ethical guidelines and audit TTS deployments regularly.
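Watermarking proper embeds an imperceptible signal in the audio itself and is usually provided by the platform. A complementary traceability measure you can build yourself is a signed provenance record for every generated clip: a hash of the audio plus who generated it, with which voice, and under which consent record, signed with a server-side secret so tampering with a record can be detected. This is a sketch, not an audio watermark; the field names, the consent_id reference, and the key handling are assumptions.

```python
import hashlib
import hmac
import json
import time

SECRET_KEY = b"replace-with-a-key-from-your-secrets-manager"  # placeholder secret

def provenance_record(audio: bytes, voice_id: str, requested_by: str, consent_id: str) -> dict:
    """Describe a generated clip and sign the description with HMAC-SHA256."""
    record = {
        "audio_sha256": hashlib.sha256(audio).hexdigest(),
        "voice_id": voice_id,
        "requested_by": requested_by,
        "consent_id": consent_id,  # hypothetical reference to the speaker's documented consent
        "created_at": int(time.time()),
    }
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["signature"] = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    return record

def verify(record: dict, audio: bytes) -> bool:
    """Check the signature and that the record describes this exact audio."""
    body = {k: v for k, v in record.items() if k != "signature"}
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    expected = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    return (hmac.compare_digest(expected, record.get("signature", ""))
            and body.get("audio_sha256") == hashlib.sha256(audio).hexdigest())

clip = b"...synthesized audio bytes..."
rec = provenance_record(clip, voice_id="brand_narrator", requested_by="user-42", consent_id="consent-001")
print(verify(rec, clip), verify(rec, clip + b"edited"))  # True False
```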

The Future of Realistic Text to Speech

In the coming years, realistic TTS engines are expected to gain even deeper context awareness, emotional intelligence, and real-time multilingual translation. Ongoing research focuses on zero-shot voice transfer (cloning a voice from a short reference sample without retraining the model), expressive prosody control, and seamless integration with AR/VR and IoT systems.

We anticipate widespread adoption across customer engagement, education, media, and accessibility. Regulatory frameworks and AI safety standards will shape responsible development, ensuring that lifelike speech benefits society while minimizing risks.

Conclusion

Realistic text to speech is transforming digital communication, accessibility, and content creation. With neural TTS, lifelike voices are accessible to developers, businesses, and creators worldwide. As platforms grow more sophisticated, now is the time to explore and implement these solutions—unlocking new levels of engagement, inclusion, and innovation in 2025 and beyond.


FAQ