Introduction to Google Cloud Text-to-Speech API

The Google Cloud Text-to-Speech API converts written text into natural-sounding speech using deep learning models. This cloud-based API lets developers build accessible, interactive applications by integrating high-quality speech synthesis. Industries ranging from telecommunications (IVR) and assistive technology (screen readers) to media (podcast narration, video voiceovers) benefit from it. With support for multiple languages, neural voices, and flexible output formats, Google Cloud Text-to-Speech is a useful component in the modern developer's toolkit. As real-time voice interfaces and accessibility requirements grow in 2025, integrating robust speech synthesis is more important than ever.

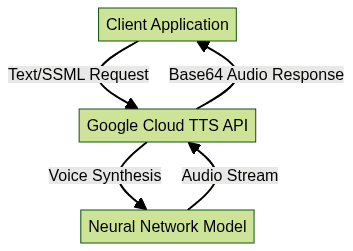

How Google Cloud Text-to-Speech API Works

Google Cloud Text-to-Speech API uses deep neural networks to convert text or Speech Synthesis Markup Language (SSML) into speech. The process involves submitting a request specifying the input text, desired language, voice, and audio output format. The API supports premium neural voices for highly realistic output, and standard voices for cost-effective use. Multilingual support ensures global reach. SSML lets you control pronunciation, pauses, pitch, and emphasis for a truly customized experience.
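
The request described above can be sketched as a plain JSON body. This is a minimal sketch, assuming the REST field names `input`, `voice`, and `audioConfig` that appear in the request examples in this article; `build_request` is a hypothetical helper name, not part of any Google library.

```python
import json


def build_request(content, language_code="en-US", voice_name=None,
                  audio_encoding="MP3", is_ssml=False):
    """Assemble a synthesis request body as a plain dict.

    build_request is a hypothetical helper for illustration only.
    """
    # Exactly one of "text" or "ssml" carries the content to speak.
    input_key = "ssml" if is_ssml else "text"
    voice = {"languageCode": language_code}
    if voice_name:
        # The voice name is optional; only include it when one is chosen.
        voice["name"] = voice_name
    return {
        "input": {input_key: content},
        "voice": voice,
        "audioConfig": {"audioEncoding": audio_encoding},
    }


if __name__ == "__main__":
    body = build_request("Hello, world!", voice_name="en-US-Wavenet-D")
    print(json.dumps(body, indent=2))
```

Keeping the body as a dict makes it easy to log, compare, or serialize before sending it to the service.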

If you're looking to add real-time voice features to your applications, consider integrating a Voice SDK alongside Google Cloud Text-to-Speech for seamless audio experiences.

Setting Up Google Cloud Text-to-Speech API

Prerequisites and Project Setup

To start, you need a Google Cloud account. Visit the Google Cloud Console and create a new project. Ensure that billing is enabled for your project, as most features require an active billing account. Organize your resources under this project for easier management and cost tracking.

For developers building communication solutions, integrating a phone call API can further enhance your application's capabilities, enabling both text-to-speech and real-time calling features.

Enabling the API and Authentication

Within your project, navigate to the "APIs & Services" dashboard and enable the "Text-to-Speech API". Next, configure authentication. Create a service account with the appropriate permissions and download its JSON key file. This file will be used by client libraries and REST calls to authenticate your requests, ensuring secure access to your project resources.
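
Before running any client code, it can help to sanity-check the downloaded key file and expose it through the `GOOGLE_APPLICATION_CREDENTIALS` environment variable, which Google client libraries consult when looking for credentials. The sketch below assumes the key file contains the fields listed in `REQUIRED_FIELDS`; `use_service_account_key` is a hypothetical helper name.

```python
import json
import os
from pathlib import Path

# Fields assumed to be present in a downloaded service account key file.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def use_service_account_key(key_path):
    """Validate a key file and point client libraries at it.

    Returns the project ID found in the file.
    """
    path = Path(key_path)
    data = json.loads(path.read_text(encoding="utf-8"))
    missing = [field for field in REQUIRED_FIELDS if field not in data]
    if missing:
        raise ValueError(f"key file is missing fields: {missing}")
    if data["type"] != "service_account":
        raise ValueError(f"unexpected credential type: {data['type']!r}")
    # Client libraries read this variable to locate credentials.
    os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = str(path.resolve())
    return data["project_id"]
```

Setting the variable in the shell or deployment environment works equally well; the point of the check is to fail early with a readable message when the wrong file is supplied.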

If your application requires both video and audio communication, you might also explore the Python video and audio calling SDK for seamless integration with Python-based projects.

Installing Client Libraries

Google provides client libraries for Python, Java, Node.js, Go, and more. To install the Python client library, run:

```bash
pip install --upgrade google-cloud-texttospeech
```

For other languages, refer to the Google Cloud Client Libraries documentation. JavaScript developers can leverage the JavaScript video and audio calling SDK to add robust communication features alongside speech synthesis.

Making Your First Request

Using the REST API

The primary endpoint for the API is:

```
POST https://texttospeech.googleapis.com/v1/text:synthesize
```

A sample REST request using curl:

```bash
curl -X POST \
  -H "Authorization: Bearer $(gcloud auth application-default print-access-token)" \
  -H "Content-Type: application/json" \
  --data '{
    "input": {"text": "Hello, world!"},
    "voice": {"languageCode": "en-US", "name": "en-US-Wavenet-D"},
    "audioConfig": {"audioEncoding": "MP3"}
  }' \
  https://texttospeech.googleapis.com/v1/text:synthesize
```

Sample Python code for REST request:

```python
import requests


def synthesize_text(text, token):
    """Call the REST endpoint and return the parsed JSON response."""
    url = "https://texttospeech.googleapis.com/v1/text:synthesize"
    headers = {"Authorization": f"Bearer {token}"}
    body = {
        "input": {"text": text},
        "voice": {"languageCode": "en-US", "name": "en-US-Wavenet-D"},
        "audioConfig": {"audioEncoding": "MP3"},
    }
    # json= serializes the body and sets the Content-Type header.
    response = requests.post(url, headers=headers, json=body, timeout=30)
    response.raise_for_status()  # raise on HTTP errors instead of parsing them
    return response.json()
```
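
The JSON returned by the REST endpoint carries the audio as a base64-encoded string in its `audioContent` field, so it has to be decoded before it can be played or saved. A minimal sketch of that step follows; `save_audio` is a hypothetical helper name.

```python
import base64
from pathlib import Path


def save_audio(response_json, out_path):
    """Decode the base64 audioContent field and write it to out_path.

    Returns the number of audio bytes written.
    """
    encoded = response_json.get("audioContent")
    if not encoded:
        # Error responses carry an "error" object instead of audio.
        raise ValueError(f"no audio in response: {response_json.get('error')}")
    audio_bytes = base64.b64decode(encoded)
    Path(out_path).write_bytes(audio_bytes)
    return len(audio_bytes)
```

With the function above, `save_audio(synthesize_text("Hello, world!", token), "output.mp3")` would write a playable MP3 file, assuming `token` holds a valid access token.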

If you want to build interactive voice experiences, integrating a Voice SDK can help you create live audio rooms and enhance user engagement.

Using Client Libraries

With the official Python library, you can synthesize speech easily:

```python
from google.cloud import texttospeech

client = texttospeech.TextToSpeechClient()

synthesis_input = texttospeech.SynthesisInput(text="Hello, world!")
voice = texttospeech.VoiceSelectionParams(
    language_code="en-US", name="en-US-Wavenet-D"
)
audio_config = texttospeech.AudioConfig(
    audio_encoding=texttospeech.AudioEncoding.MP3
)

response = client.synthesize_speech(
    input=synthesis_input, voice=voice, audio_config=audio_config
)
# audio_content holds the binary audio data.
with open("output.mp3", "wb") as out:
    out.write(response.audio_content)
```
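
For reuse, the same call can be wrapped in a function that receives the client as a parameter, which also makes the logic testable with a stand-in client. This is a sketch rather than an official pattern; `synthesize_to_file` is a hypothetical helper, and it uses only the `synthesize_speech` call and `audio_content` attribute shown above.

```python
from pathlib import Path


def synthesize_to_file(client, synthesis_input, voice, audio_config, out_path):
    """Run one synthesis call and write the returned audio to out_path.

    `client` is expected to be a TextToSpeechClient, or any object that
    offers the same synthesize_speech method. Returns the byte count.
    """
    response = client.synthesize_speech(
        input=synthesis_input, voice=voice, audio_config=audio_config
    )
    audio = response.audio_content
    if not audio:
        raise RuntimeError("service returned no audio")
    Path(out_path).write_bytes(audio)
    return len(audio)
```

Called as `synthesize_to_file(client, synthesis_input, voice, audio_config, "output.mp3")`, it behaves like the inline version while keeping file handling in one place.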

Client libraries are available for Java, Node.js, and Go, with similar usage patterns and authentication mechanisms. For applications that require both audio and video communication, integrating a Video Calling API can provide a comprehensive solution.

Configuring Voices, Languages, and Audio Output

Supported Voices and Languages

Google Cloud Text-to-Speech supports 300+ voices across 50+ languages and variants. You can select among standard, neural, and studio voices, specifying gender, accent, and even specific voice names. For the latest supported voices and languages, consult the official voice list.

When building multilingual or global applications, combining Text-to-Speech with a Voice SDK ensures your users enjoy high-quality, real-time voice interactions.

Customizing Output with SSML

SSML (Speech Synthesis Markup Language) enables fine-tuned control over speech output, including pitch, rate, volume, pauses, and pronunciation. Example SSML request:

```python
from google.cloud import texttospeech

ssml = """
<speak>
  Welcome to <emphasis level='strong'>Google Cloud</emphasis> Text-to-Speech!
  <break time='700ms'/>
  Enjoy customizing your <prosody pitch='+2st' rate='90%'>voice output</prosody>.
</speak>
"""

# Pass ssml= instead of text= so the markup is interpreted rather than read aloud.
synthesis_input = texttospeech.SynthesisInput(ssml=ssml)
```
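
When SSML is assembled from user-supplied or dynamic text, characters such as `&` and `<` need to be escaped, or the markup becomes invalid. The following is a minimal sketch of one way to do that with the standard library; `build_ssml` is a hypothetical helper, and it uses only the `<speak>` and `<break>` elements shown above.

```python
from xml.sax.saxutils import escape


def build_ssml(sentences, pause_ms=500):
    """Join sentences into a <speak> document with a pause between them."""
    if pause_ms < 0:
        raise ValueError("pause_ms must be non-negative")
    # Escape &, < and > so arbitrary text cannot break the markup.
    escaped = [escape(sentence) for sentence in sentences]
    separator = f"<break time='{pause_ms}ms'/>"
    return "<speak>" + separator.join(escaped) + "</speak>"
```

The resulting string can be passed as the `ssml` argument of `SynthesisInput` in the same way as a hand-written document.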

Audio Profiles and Formats

Optimize audio for different devices using audio profiles (e.g., phone, headset, car speakers). Output formats include MP3, LINEAR16 (WAV), and OGG_OPUS, set via the audioEncoding parameter in your request.

For telephony and IVR solutions, integrating a phone call API can help you deliver synthesized speech directly over phone calls.

Advanced Features and Best Practices

Using Neural and Studio Voices

Neural voices, powered by deep learning, provide lifelike speech and are ideal for high-quality applications. Studio voices offer even higher fidelity and naturalness, though at a premium price. Both are suited for professional-grade media, IVR, and accessibility solutions.
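
Because pricing and quality differ by voice type, it can be useful to extract the type from a voice name when logging or grouping usage. The sketch below assumes names follow the `<language>-<region>-<type>-<variant>` shape seen in `en-US-Wavenet-D`; that pattern is an assumption drawn from the examples in this article, and not every voice is guaranteed to follow it. `parse_voice_name` and `VoiceName` are hypothetical names.

```python
from typing import NamedTuple


class VoiceName(NamedTuple):
    language_code: str
    voice_type: str
    variant: str


def parse_voice_name(name):
    """Split a voice name into language code, voice type, and variant.

    Assumes a dash-separated name with at least four parts.
    """
    parts = name.split("-")
    if len(parts) < 4:
        raise ValueError(f"unrecognized voice name: {name!r}")
    return VoiceName(
        language_code="-".join(parts[:2]),
        # Everything between the locale and the final part is the type.
        voice_type="-".join(parts[2:-1]),
        variant=parts[-1],
    )
```

For names that do not match the assumed shape, the function raises `ValueError` instead of guessing, so callers can fall back to the metadata in the official voice list.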

If your project requires advanced real-time voice features, a Voice SDK can help you implement live audio rooms and interactive voice experiences.

Real-Time and Batch Processing

Real-time TTS is critical for chatbots and accessibility tools, while batch processing serves media production and large content conversion. For real-time use, keep request payloads small and obtain access tokens ahead of time so that authentication does not add latency to each call. For batch work, queue jobs asynchronously and write the resulting audio to storage.
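
For batch conversion of long documents, the text usually has to be split into several requests. The sketch below splits on sentence boundaries and keeps each chunk within a byte budget. The default of 5000 bytes is an assumption for illustration; check the current quota documentation for the actual per-request limit. `chunk_text` is a hypothetical helper.

```python
import re


def chunk_text(text, max_bytes=5000):
    """Split text into chunks whose UTF-8 size stays within max_bytes.

    Splits after sentence-ending punctuation; max_bytes is an assumed limit.
    """
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks, current = [], ""
    for sentence in sentences:
        if not sentence:
            continue
        if len(sentence.encode("utf-8")) > max_bytes:
            raise ValueError("a single sentence exceeds max_bytes")
        candidate = f"{current} {sentence}" if current else sentence
        if len(candidate.encode("utf-8")) <= max_bytes:
            current = candidate
        else:
            # The current chunk is full; start a new one with this sentence.
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    return chunks
```

Each chunk can then be synthesized as its own request and the resulting audio segments stored or concatenated in order.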

Security, Quotas, and Pricing

Secure your API keys and service account key files; never expose them publicly. Monitor quotas and usage in the Cloud Console, and set up budget alerts to manage costs. Pricing is based on character count and voice type; review the Text-to-Speech pricing page for up-to-date rates.

Integrating with Vertex AI and Other Google Services

Vertex AI Studio enables seamless integration with multimodal and generative AI workflows. You can orchestrate speech synthesis as part of pipelines involving text, image, and video analysis. For example, generate captions with Vertex AI then synthesize audio using Text-to-Speech for accessibility. Vertex AI offers advanced model management, monitoring, and versioning, but may introduce additional complexity and cost. Use Vertex AI for large-scale, AI-driven applications or when combining TTS with other ML services.

Common Use Cases and Implementation Examples

- IVR Systems:

```python
response = client.synthesize_speech(
    input=texttospeech.SynthesisInput(text="For sales, press 1..."),
    voice=voice,
    audio_config=audio_config,
)
```

- Accessibility Tools:

```python
ssml = "<speak>Your unread messages: <break time='500ms'/>2 new emails.</speak>"
response = client.synthesize_speech(
    input=texttospeech.SynthesisInput(ssml=ssml),
    voice=voice,
    audio_config=audio_config,
)
```

- Media Narration:

```python
narration_text = "Today in tech news, Google announced..."
response = client.synthesize_speech(
    input=texttospeech.SynthesisInput(text=narration_text),
    voice=voice,
    audio_config=audio_config,
)
```

- Chatbots:

```python
chatbot_reply = "How can I help you today?"
response = client.synthesize_speech(
    input=texttospeech.SynthesisInput(text=chatbot_reply),
    voice=voice,
    audio_config=audio_config,
)
```
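
Fixed prompts such as IVR menus or common chatbot replies tend to be requested repeatedly, and since pricing is based on character count, synthesizing each one once and reusing the audio can reduce cost. A minimal in-memory sketch follows; `PromptCache` is a hypothetical class, and the `synthesize` callable is supplied by the caller (for example, a thin wrapper around `client.synthesize_speech`).

```python
import hashlib


class PromptCache:
    """In-memory cache keyed by text, voice name, and audio encoding."""

    def __init__(self, synthesize):
        # synthesize(text, voice_name, encoding) -> bytes; supplied by caller.
        self._synthesize = synthesize
        self._audio = {}

    def get(self, text, voice_name, encoding="MP3"):
        """Return cached audio, synthesizing only on the first request."""
        raw_key = "\x00".join((text, voice_name, encoding))
        key = hashlib.sha256(raw_key.encode("utf-8")).hexdigest()
        if key not in self._audio:
            self._audio[key] = self._synthesize(text, voice_name, encoding)
        return self._audio[key]
```

A production setup would more likely persist the audio in object storage and bound the cache size; the dictionary here only illustrates the keying scheme.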

For developers interested in experimenting with these features, you can try it for free to explore SDKs and APIs that complement Google Cloud Text-to-Speech.

Troubleshooting and Support

Common issues include authentication failures, quota limits, and invalid request parameters. Always check error messages and consult the API documentation. Enable verbose logging for debugging. For persistent issues, use Google Cloud support or community forums. Regularly monitor API status and updates for new features or changes.

Conclusion & Next Steps

Google Cloud Text-to-Speech API unlocks powerful, scalable speech synthesis for modern applications. Explore advanced features, review official guides, and experiment with SSML and neural voices to deliver exceptional voice experiences in 2025 and beyond.

If you're ready to enhance your applications with advanced voice and communication features, consider integrating a Voice SDK to take your projects to the next level.


FAQ