Introduction to Text to Speech Synthesis

Text to speech synthesis (TTS) is the technology that converts written text into spoken audio. At the intersection of computational linguistics, digital signal processing, and artificial intelligence, TTS has evolved from basic robotic voices to near-humanlike speech generation. Originally used in early accessibility tools, text to speech synthesis now powers everything from voice assistants to media production, automated customer service, and educational platforms. As of 2025, advancements in neural networks and deep learning have enabled TTS systems to deliver natural-sounding, expressive, and multilingual speech, making synthetic speech an essential component in modern digital experiences.

How Text to Speech Synthesis Works

The Science Behind Speech Synthesis

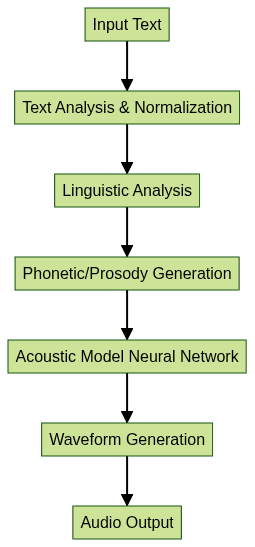

Text to speech synthesis pipelines transform input text through several technical stages, resulting in intelligible speech output. The general TTS pipeline can be visualized as:
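As a rough illustration, the text-processing front end of such a pipeline can be sketched in a few lines of Python. This is a toy under stated assumptions, not how any production engine works: the two-word lexicon, the `ProsodyMark` structure, and the pause and pitch values are all hypothetical, and the final waveform-generation stage is left out entirely.

```python
import re
from dataclasses import dataclass

# Hypothetical mini-lexicon with ARPAbet-style symbols. Real systems use large
# pronunciation dictionaries plus a learned grapheme-to-phoneme model.
LEXICON = {
    "hello": ["HH", "AH", "L", "OW"],
    "world": ["W", "ER", "L", "D"],
}


@dataclass
class ProsodyMark:
    word: str
    phonemes: list
    pause_ms: int       # silence to insert after the word (illustrative value)
    pitch_shift: float  # relative pitch change in semitones (illustrative value)


def normalize(text):
    """Lowercase and collapse whitespace."""
    return re.sub(r"\s+", " ", text.strip().lower())


def tokenize(text):
    """Split normalized text into word and punctuation tokens."""
    return re.findall(r"[a-z']+|[.,!?]", text)


def to_phonemes(word):
    """Look the word up; fall back to spelling it out letter by letter."""
    return LEXICON.get(word, list(word.upper()))


def plan_prosody(tokens):
    """Attach a pause (and a pitch rise for questions) to the word before punctuation."""
    marks = []
    for tok in tokens:
        if tok in ".,!?":
            if marks:
                marks[-1].pause_ms = 150 if tok == "," else 400
                if tok == "?":
                    marks[-1].pitch_shift = 2.0
            continue
        marks.append(ProsodyMark(tok, to_phonemes(tok), 0, 0.0))
    return marks


def front_end(text):
    """Run normalization, tokenization, phoneme lookup, and prosody planning."""
    return plan_prosody(tokenize(normalize(text)))
```

Calling `front_end("Hello, world?")` yields one `ProsodyMark` per word. A real system would pass that sequence to an acoustic model and vocoder to produce audio.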

Each stage involves complex processing: tokenizing text, mapping words to phonemes, applying prosody (rhythm, pitch), and generating speech waveforms using digital audio synthesis. For developers building interactive voice applications, integrating a Voice SDK can streamline the process of adding real-time audio features alongside TTS.

Neural Networks, Deep Learning, and SSML in TTS

The latest TTS systems leverage AI and deep learning, particularly neural network architectures like Tacotron and WaveNet, to generate more natural, context-aware, and expressive voices. These models learn from vast datasets of recorded speech, enabling them to mimic nuanced human intonation and emotion.

A key tool for fine-grained speech control is Speech Synthesis Markup Language (SSML). SSML lets developers adjust pronunciation, pitch, speed, pauses, and emphasis. Here’s an SSML example that modifies pitch and speaking rate:

```xml
<speak>
  <prosody rate="85%" pitch="+5st">This is a slow, higher-pitched TTS example.</prosody>
</speak>
```
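When the text comes from users or a database, it is usually safer to generate SSML in code than to paste strings together by hand, because characters such as `&` and `<` must be escaped. The sketch below uses only the Python standard library; the helper name `prosody_ssml` and its defaults are illustrative assumptions, not part of any TTS SDK.

```python
from xml.sax.saxutils import escape, quoteattr


def prosody_ssml(text, rate="100%", pitch="+0st"):
    """Wrap text in <speak><prosody>, escaping XML special characters.

    Hypothetical helper for illustration; rate and pitch are passed
    through unchanged, so the caller must supply values the target
    TTS engine accepts.
    """
    return (
        "<speak>"
        f"<prosody rate={quoteattr(rate)} pitch={quoteattr(pitch)}>"
        f"{escape(text)}"
        "</prosody>"
        "</speak>"
    )


print(prosody_ssml("Q&A starts in < 5 minutes.", rate="85%", pitch="+5st"))
```

The resulting string can be sent to any engine that accepts SSML input.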

Types of Text to Speech Synthesis Solutions

Browser-based TTS

Modern browsers support TTS via the Web Speech API, enabling real-time speech generation directly in the client. Browser-based TTS is ideal for accessibility features, web apps, and quick prototyping. It needs no backend of your own and supports basic voice selection and speech controls, though the available voices vary by browser and operating system. If you’re building browser-based communication tools, consider leveraging a JavaScript video and audio calling SDK to add both TTS and real-time audio/video capabilities.

Cloud-based TTS and APIs

Cloud TTS solutions, such as Google Cloud Text-to-Speech and the OpenAI Text to Speech API, offer scalable, high-fidelity, multilingual TTS services. Depending on the provider, these platforms offer large catalogs of neural voices, many language options, SSML support, and real-time streaming. For Python developers, integrating a Python video and audio calling SDK can further enhance your applications by combining TTS with robust audio/video communication features.

Here’s a Python example using Google Cloud’s TTS API:

```python
from google.cloud import texttospeech

# The client reads credentials from the environment.
client = texttospeech.TextToSpeechClient()

# The text to synthesize.
input_text = texttospeech.SynthesisInput(text="Hello, world!")

# Request a US English voice with a neutral gender.
voice = texttospeech.VoiceSelectionParams(
    language_code="en-US",
    ssml_gender=texttospeech.SsmlVoiceGender.NEUTRAL,
)

# Return the audio as MP3.
audio_config = texttospeech.AudioConfig(
    audio_encoding=texttospeech.AudioEncoding.MP3
)

response = client.synthesize_speech(
    input=input_text, voice=voice, audio_config=audio_config
)

# response.audio_content holds the encoded audio bytes.
with open("output.mp3", "wb") as out:
    out.write(response.audio_content)
```

Custom and Humanlike Voices

Leading TTS providers now enable custom voice creation for voice branding and personalization. By training on specific speaker data, organizations can deploy unique, branded, or localized voices for marketing, media, or accessibility use cases, allowing for consistent digital identity across platforms. For businesses seeking to integrate TTS into telephony, exploring a phone call API can help bridge the gap between synthetic speech and real-time voice communication.

Key Features to Look For in Text to Speech Synthesis

Voice Selection, Languages, and Accents

A robust text to speech synthesis platform offers a wide selection of voices (male/female, age, emotion) across dozens of languages and regional accents. Multilingual TTS is crucial for global applications, content localization, and serving diverse user bases. Developers aiming for interactive experiences can benefit from a Voice SDK that supports diverse voice options and seamless integration.

Control Features: Speed, Pitch, and SSML

Fine-grained control over voice output is critical. With SSML, developers can customize speech rate, pitch, volume, pauses, and more. For example:

```xml
<speak>
  <prosody rate="120%" pitch="-2st">Faster, slightly lower voice output.</prosody>
</speak>
```

This enables dynamic audio conversion tailored for specific content, audiences, or accessibility needs.
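One way to tailor output per audience is to keep a small table of presets and render SSML from it. The following is a minimal sketch under stated assumptions: the preset names and their rate, pitch, and pause values are invented for illustration and would need tuning against a real voice.

```python
from xml.sax.saxutils import escape

# Hypothetical audience presets; names and values are illustrative only.
PRESETS = {
    "default":          {"rate": "100%", "pitch": "+0st", "pause_ms": 300},
    "language_learner": {"rate": "80%",  "pitch": "+0st", "pause_ms": 700},
    "quick_review":     {"rate": "130%", "pitch": "-1st", "pause_ms": 150},
}


def render_for_audience(sentences, audience="default"):
    """Join sentences with <break> pauses and wrap them in a <prosody> element."""
    preset = PRESETS[audience]
    pause = f'<break time="{preset["pause_ms"]}ms"/>'
    body = pause.join(escape(s) for s in sentences)
    return (
        f'<speak><prosody rate="{preset["rate"]}" pitch="{preset["pitch"]}">'
        f"{body}</prosody></speak>"
    )


print(render_for_audience(["Welcome back.", "Today: verbs & tenses."], "language_learner"))
```

Keeping the presets in data rather than in hand-written markup means a new audience profile is a one-line change.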

Real-Time Processing and Streaming

Real-time TTS streaming is vital for interactive applications, such as chatbots, voice assistants, and live media, ensuring low latency and seamless user experiences. Platforms offering a Live Streaming API SDK can help developers deliver synchronized TTS and live audio/video for engaging audience interactions.

Major Use Cases for Text to Speech Synthesis

Accessibility and Assistive Technology

TTS is foundational for digital accessibility, enabling screen readers, reading aids, and voice user interfaces for visually impaired users or those with reading challenges. Automated voice solutions ensure inclusivity in software and devices.

Content Creation and Media

Synthetic speech is revolutionizing media production, powering voiceover for videos, audiobooks, podcasts, and automated dialogue generation for games and animation. TTS accelerates content creation workflows and offers cost-effective voiceover options. For creators looking to add interactive voice features, a Voice SDK can be a valuable asset for integrating TTS with live audio environments.

Education and Language Learning

TTS enhances e-learning platforms, language learning apps, and pronunciation guides. Learners benefit from consistent, multilingual audio, real-time feedback, and interactive lessons powered by natural-sounding speech generation.

Customer Service and Automation

Automated TTS powers call center IVRs, chatbots, customer notifications, and real-time translations, streamlining customer interactions and reducing operational costs with scalable synthetic voice solutions. Integrating a Video Calling API can further enable seamless transitions between automated TTS and live human support.

Hands-On Guide: Implementing Text to Speech Synthesis

Quick Start with a TTS API

To implement text to speech synthesis quickly, use a cloud TTS API. Here’s a Node.js example with OpenAI’s TTS (2025):

```javascript
const axios = require("axios");
const fs = require("fs");

(async () => {
  const response = await axios.post(
    "https://api.openai.com/v1/audio/speech",
    {
      model: "tts-1",
      input: "Hello, this is OpenAI TTS in 2025!",
      voice: "alloy"
    },
    {
      headers: { Authorization: "Bearer YOUR_API_KEY" },
      // Receive raw bytes; without this, axios decodes the audio as text and corrupts it.
      responseType: "arraybuffer"
    }
  );
  // Write the binary audio to disk.
  fs.writeFileSync("speech.mp3", Buffer.from(response.data));
})();
```
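For Python projects, the same request can be sketched with the standard library alone. The endpoint, model, and voice are taken from the Node.js example above; the function names and the split into "build" and "send" steps are assumptions made here so the request can be inspected without a network call.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/audio/speech"


def build_speech_request(text, api_key, model="tts-1", voice="alloy"):
    """Build (but do not send) a POST request for the speech endpoint."""
    payload = json.dumps({"model": model, "input": text, "voice": voice})
    return urllib.request.Request(
        API_URL,
        data=payload.encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def synthesize_to_file(text, api_key, path="speech.mp3"):
    """Send the request and write the returned audio bytes to a file."""
    request = build_speech_request(text, api_key)
    with urllib.request.urlopen(request) as response, open(path, "wb") as out:
        out.write(response.read())
```

A call such as `synthesize_to_file("Hello!", api_key)` would need a valid key and network access. In practice, read the key from an environment variable rather than hard-coding it.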

Voice Customization with SSML

Advanced TTS systems accept SSML for precise control. For example:

```xml
<speak>
  <voice name="en-US-Wavenet-D">
    <prosody volume="loud">Welcome to the future of speech technology!</prosody>
  </voice>
</speak>
```
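Because SSML is XML, a malformed document is typically rejected by the TTS service. A cheap local check before sending can catch unclosed tags early. The sketch below is a basic sanity check only, not a full SSML validator: it does not know which tags or attribute values a given provider accepts, and the function name `check_ssml` is an assumption.

```python
import xml.etree.ElementTree as ET


def check_ssml(ssml):
    """Return a list of problems found; an empty list means the basic checks passed."""
    try:
        root = ET.fromstring(ssml)
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]

    problems = []
    # Strip an XML namespace prefix such as "{http://...}speak" if present.
    tag = root.tag.rsplit("}", 1)[-1]
    if tag != "speak":
        problems.append(f"root element is <{tag}>, expected <speak>")
    if not "".join(root.itertext()).strip():
        problems.append("document contains no text to speak")
    return problems


sample = """<speak>
  <voice name="en-US-Wavenet-D">
    <prosody volume="loud">Welcome to the future of speech technology!</prosody>
  </voice>
</speak>"""
print(check_ssml(sample))
```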

Integrating TTS in Web Applications

Browsers support speech synthesis natively. Here’s a JavaScript example:

```javascript
const msg = new SpeechSynthesisUtterance("Browser-based text to speech synthesis demo.");
msg.lang = "en-US"; // BCP 47 language tag
msg.rate = 1.2;     // 1 is the default speed
msg.pitch = 1.1;    // 1 is the default pitch
window.speechSynthesis.speak(msg);
```

For developers looking to combine TTS with real-time audio rooms, a Voice SDK can simplify integration and enhance user engagement.

Choosing the Right Text to Speech Synthesis Provider

When selecting a TTS provider, consider:

- Language and accent support

- Voice quality (neural/standard)

- Pricing and usage limits

- SSML and real-time streaming

- Data privacy and custom voice options

| Provider | Languages | Neural Voices | Real-Time | SSML | Custom Voice |
|---|---|---|---|---|---|
| Google Cloud | 40+ | Yes | Yes | Yes | Yes |
| OpenAI | 10+ | Yes | Yes | Yes | Limited |
| Amazon Polly | 30+ | Yes | Yes | Yes | Yes |
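If the shortlist grows, the comparison can be kept as data and filtered against project requirements. The sketch below copies its values from the table above and is only as accurate as that table; the `Provider` class and the `shortlist` function are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Provider:
    name: str
    min_languages: int  # lower bound from the table, e.g. "40+" becomes 40
    neural: bool
    real_time: bool
    ssml: bool
    custom_voice: str   # "yes" or "limited", as listed in the table


# Values transcribed from the comparison table; verify against current provider docs.
PROVIDERS = [
    Provider("Google Cloud", 40, True, True, True, "yes"),
    Provider("OpenAI", 10, True, True, True, "limited"),
    Provider("Amazon Polly", 30, True, True, True, "yes"),
]


def shortlist(min_languages=0, need_custom_voice=False):
    """Return the names of providers that meet the given requirements."""
    return [
        p.name
        for p in PROVIDERS
        if p.min_languages >= min_languages
        and (not need_custom_voice or p.custom_voice == "yes")
    ]


print(shortlist(min_languages=30, need_custom_voice=True))
```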

Future Trends and Ethical Considerations in Text to Speech Synthesis

2025 brings breakthroughs in humanlike voices, multilingual TTS, and real-time synthesis for voice assistants, marketing, and media. However, risks such as deepfake audio, voice cloning without consent, and other forms of voice misuse call for robust policy, watermarking, and ethical frameworks. Developers must prioritize responsible deployment and transparency.

Conclusion

Text to speech synthesis in 2025 empowers developers to build inclusive, engaging, and innovative products. Start exploring TTS APIs, SSML, and custom voices to enhance your applications today.

FAQ