Introduction to Whisper Speech to Text

Automatic Speech Recognition (ASR) has become foundational in modern computing, powering everything from virtual assistants to automated meeting notes. Developers increasingly rely on accurate speech to text to drive innovation in accessibility, productivity, and content generation. As audio data explodes in volume, the demand for robust, scalable, and privacy-conscious ASR solutions is at an all-time high.

Whisper speech to text, an open source project by OpenAI, has rapidly emerged as a go-to tool for developers seeking high-quality, multilingual transcription. Its ease of integration, advanced features, and strong community support position it as a leader in the ASR landscape of 2025. Whether you’re building a transcription pipeline, enhancing user accessibility, or enabling real-time communication, Whisper offers the flexibility and power needed for modern applications.

What is Whisper Speech to Text?

Whisper speech to text is an open source, end-to-end ASR system developed by OpenAI. Released in late 2022, Whisper quickly gained traction for its remarkable accuracy across diverse languages and accents. Built on a large-scale transformer model, it has been trained on 680,000 hours of multilingual and multitask supervised data gathered from the web.

Key features of Whisper include:

- Accuracy: Robust transcription in noisy environments and across a wide range of accents.

- Multilingual support: Capable of transcribing speech in over 90 languages and translating it into English, making it ideal for global applications.

- Diarization (through companion tools): Whisper does not label speakers on its own, but wrappers such as WhisperX pair its transcripts with speaker-diarization models, which is critical for meeting and podcast transcription.

- Timestamps: Outputs start and end times for each segment (and optionally for each word), enabling subtitle generation and audio navigation.
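openai-whisper's transcribe() returns each entry in result["segments"] with start and end times in seconds plus the segment text, so turning a transcript into subtitles is mostly string formatting. The sketch below assumes that segment shape and writes SubRip (SRT) cues. format_timestamp and to_srt are illustrative helper names, not part of Whisper; the openai-whisper command-line tool can also write SRT directly with --output_format srt.

```python
def format_timestamp(seconds: float) -> str:
    """Format a time in seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments: list) -> str:
    """Build SRT text from Whisper-style segments (dicts with start, end, text)."""
    blocks = []
    for index, seg in enumerate(segments, start=1):
        start = format_timestamp(seg["start"])
        end = format_timestamp(seg["end"])
        blocks.append(f"{index}\n{start} --> {end}\n{seg['text'].strip()}\n")
    # A blank line separates consecutive cues
    return "\n".join(blocks)


if __name__ == "__main__":
    # Hand-written segments standing in for model.transcribe(...)["segments"]
    demo = [
        {"start": 0.0, "end": 2.5, "text": " Hello and welcome."},
        {"start": 2.5, "end": 61.04, "text": " Today we cover Whisper."},
    ]
    print(to_srt(demo))
```

Writing the returned string to a file with a .srt extension is enough for most video players to pick it up.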

As an open source project, Whisper speech to text has fostered a vibrant ecosystem of community-driven enhancements, wrappers, and integrations, including WhisperX, faster-whisper, and browser-based utilities. Its flexibility and extensibility keep it at the forefront of speech recognition research and application. For developers looking to add real-time communication features, integrating a Video Calling API alongside Whisper can enable seamless audio and video experiences.

How Whisper Speech to Text Works

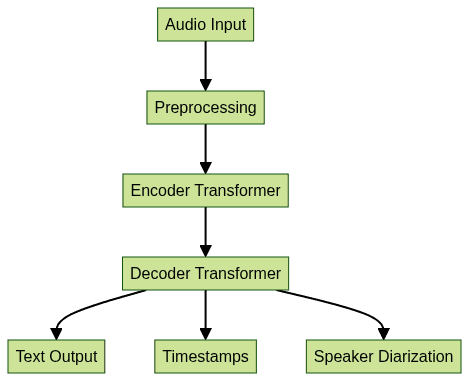

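At the core of Whisper speech to text lies a transformer-based encoder-decoder architecture, inspired by advances in Natural Language Processing (NLP). Audio is resampled to 16 kHz and converted into a log-Mel spectrogram in 30-second windows; the model passes this through a series of neural network layers and outputs transcriptions, timestamps, and a language prediction. Speaker diarization is not part of the model itself; it is added by companion tools such as WhisperX.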

Model Architecture

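- Encoder: Converts the log-Mel spectrogram into feature representations using a convolutional front-end (two convolution layers) followed by transformer blocks.

- Decoder: Autoregressively generates text tokens from the encoded features, using special tokens to indicate the language, the task (transcription or translation into English), and timestamps.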

- Training Data: Trained on large, diverse datasets to generalize across accents, languages, and environments.
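The encoder always receives a fixed-size input, and a little arithmetic shows what that means in practice. The first three constants below mirror values defined in openai-whisper's whisper/audio.py; the stride of 2 comes from the second convolution layer of the encoder in whisper/model.py. This is a worked calculation for illustration, not library code.

```python
SAMPLE_RATE = 16_000   # Hz; audio is resampled to 16 kHz mono before encoding
CHUNK_LENGTH = 30      # seconds of audio per encoder window
HOP_LENGTH = 160       # samples between consecutive log-Mel frames (10 ms)
CONV_STRIDE = 2        # the encoder's second convolution halves the frame count

samples_per_window = SAMPLE_RATE * CHUNK_LENGTH                # 480,000 samples
mel_frames = samples_per_window // HOP_LENGTH                  # 3,000 spectrogram frames
encoder_positions = mel_frames // CONV_STRIDE                  # 1,500 encoder outputs
seconds_per_position = CONV_STRIDE * HOP_LENGTH / SAMPLE_RATE  # 0.02 s per position

print(samples_per_window, mel_frames, encoder_positions, seconds_per_position)
# prints: 480000 3000 1500 0.02
```

Each 30-second window therefore becomes 1,500 encoder positions, and timestamps are predicted in steps of 0.02 seconds. Shorter clips are padded to 30 seconds, and longer recordings are processed window by window.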

Supported Languages and File Formats

Whisper supports over 90 languages, including English, Spanish, Mandarin, and Arabic. It handles a variety of audio formats—such as WAV, MP3, FLAC, and M4A—making it suitable for most modern audio workflows.
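Much of this format support comes from ffmpeg: the openai-whisper package calls the ffmpeg command-line tool to decode input into 16 kHz mono audio, so most formats ffmpeg can read will work. The sketch below follows the same approach using only the standard library. It assumes ffmpeg is on your PATH, and ffmpeg_command and load_audio_16k are hypothetical names; Whisper's own equivalent is whisper.load_audio, which returns a NumPy array.

```python
import subprocess
import sys
from array import array

SAMPLE_RATE = 16_000


def ffmpeg_command(path: str, sample_rate: int = SAMPLE_RATE) -> list:
    """Build an ffmpeg call that decodes `path` to raw 16-bit mono PCM on stdout."""
    return [
        "ffmpeg", "-nostdin", "-i", path,
        "-f", "s16le",             # raw little-endian 16-bit samples, no container
        "-ac", "1",                # downmix to mono
        "-acodec", "pcm_s16le",
        "-ar", str(sample_rate),   # resample to the model's 16 kHz rate
        "-",                       # write to stdout instead of a file
    ]


def load_audio_16k(path: str) -> list:
    """Decode `path` with ffmpeg and return samples as floats in [-1.0, 1.0)."""
    raw = subprocess.run(ffmpeg_command(path), capture_output=True, check=True).stdout
    pcm = array("h")
    pcm.frombytes(raw)
    if sys.byteorder == "big":
        pcm.byteswap()  # s16le is little-endian; fix the order on big-endian hosts
    return [sample / 32768.0 for sample in pcm]
```

Because ffmpeg does the decoding, adding support for a new container or codec is a matter of having an ffmpeg build that understands it.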

Transcription API and Integration

Whisper can be run locally via Python, deployed as a cloud API, or integrated into JavaScript/browser contexts. It is also accessible via popular wrappers like WhisperX and faster-whisper for enhanced speed and additional features. If you’re building browser-based collaboration tools, consider leveraging a JavaScript video and audio calling SDK to enable interactive communication features alongside transcription.

Implementing Whisper Speech to Text: Step-by-Step

System Requirements and Setup

To implement Whisper speech to text, you’ll need:

- Python 3.8+ (for Python environments)

- CUDA-compatible GPU (optional, for faster inference)

- Node.js (for JavaScript/browser implementation)

- Audio files in supported formats

- ffmpeg installed on the system (the openai-whisper package calls it to decode audio)
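A quick prerequisite check can save debugging time before installation. The minimal sketch below uses only the standard library: it confirms the Python version, looks for the ffmpeg and node executables on the PATH, and, only if PyTorch happens to be installed, asks whether a CUDA GPU is visible. check_environment is an illustrative name, not part of any Whisper package.

```python
import importlib.util
import shutil
import sys


def check_environment() -> dict:
    """Report Whisper-related prerequisites; CUDA is optional."""
    report = {
        "python_ok": sys.version_info >= (3, 8),
        "ffmpeg_on_path": shutil.which("ffmpeg") is not None,
        "node_on_path": shutil.which("node") is not None,
        "cuda_available": False,
    }
    # PyTorch is installed as a dependency of openai-whisper; skip the GPU check without it
    if importlib.util.find_spec("torch") is not None:
        import torch
        report["cuda_available"] = bool(torch.cuda.is_available())
    return report


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name:15} {'yes' if ok else 'no'}")
```

A "no" for cuda_available is not an error: Whisper still runs on the CPU, just more slowly.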

If you’re building cross-platform audio and video solutions, integrating a Python video and audio calling SDK can complement your Whisper-based transcription pipeline for seamless communication.

Installing Whisper

Python Installation

Whisper can be installed using pip:

```bash
pip install openai-whisper
```

JavaScript Installation

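Whisper itself has no official npm package. For Node.js environments, a common approach is to run Whisper behind an HTTP service such as whisper-asr-webservice, which is distributed as a Docker image rather than through npm, and call it from JavaScript:

```bash
docker run -d -p 9000:9000 -e ASR_MODEL=base onerahmet/openai-whisper-asr-webservice:latest
```

Browser-based Whisper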

Projects like Whisper Web and browser-based wrappers allow in-browser transcription, leveraging WebAssembly and server APIs. For developers seeking to embed video calling SDK functionality, these browser-based solutions can be combined with Whisper for a unified communication experience.

Using Whisper in Jupyter Notebooks

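For research, experimentation, and rapid prototyping, Whisper works well in Jupyter environments. faster-whisper reimplements Whisper on top of the CTranslate2 inference engine for higher speed and lower memory use, while WhisperX builds on faster-whisper and adds word-level timestamp alignment and speaker diarization.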

Installing WhisperX

```bash
pip install whisperx
```

Installing faster-whisper

```bash
pip install faster-whisper
```
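Once installed, faster-whisper is used through its WhisperModel class, whose transcribe() method returns a segments iterator plus an info object holding the detected language. Per the faster-whisper README, the segments object is a generator, so transcription only runs as you iterate over it. The sketch below assumes that API; segments_to_rows and transcribe_fast are illustrative names, and compute_type="int8" is one CPU-friendly choice rather than a requirement.

```python
def segments_to_rows(segments) -> list:
    """Collect (start, end, text) tuples from an iterable of faster-whisper segments.

    faster-whisper yields segments lazily, so decoding actually runs
    while this comprehension iterates.
    """
    return [(seg.start, seg.end, seg.text.strip()) for seg in segments]


def transcribe_fast(path: str, model_size: str = "small", device: str = "cpu"):
    """Transcribe `path` with faster-whisper; returns (detected_language, rows)."""
    # Imported here so segments_to_rows stays usable without faster-whisper installed
    from faster_whisper import WhisperModel

    # int8 keeps CPU memory use low; "float16" is the usual choice on a CUDA GPU
    model = WhisperModel(model_size, device=device, compute_type="int8")
    segments, info = model.transcribe(path, beam_size=5)
    return info.language, segments_to_rows(segments)
```

In a notebook cell, language, rows = transcribe_fast("audio.wav") returns the detected language code and a list of timed segments that can be displayed or saved.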

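Python Whisper Example

```python
import whisper

# Load the "base" checkpoint (downloaded and cached on first use)
model = whisper.load_model("base")
# transcribe() returns a dict with "text", "segments", and "language"
result = model.transcribe("audio.wav")
print(result["text"])
```

JavaScript Whisper Example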

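```javascript
// Assumes Node 18+ (global fetch, FormData, Blob) and whisper-asr-webservice listening on localhost:9000
const fs = require('fs');
const form = new FormData();
form.append('audio_file', new Blob([fs.readFileSync('audio.wav')]), 'audio.wav');
fetch('http://localhost:9000/asr?output=json', { method: 'POST', body: form })
  .then(res => res.json())
  .then(result => console.log(result.text));
```

Real-time Transcription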

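Whisper processes audio in fixed 30-second windows rather than as a continuous stream, so real-time setups typically buffer a few seconds of incoming audio at a time and transcribe each chunk with a fast backend such as faster-whisper. Shorter chunks lower latency, usually at some cost in accuracy, since the model sees less context. If your application requires live audio features, integrating a Voice SDK can provide scalable and interactive audio rooms alongside Whisper’s transcription.

Batch Processing and Long Audio Files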

Handle large files by splitting the audio into segments and transcribing them in parallel. This improves throughput when several GPUs, processes, or API workers are available; on limited hardware, processing segments one at a time keeps memory use predictable.
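openai-whisper's transcribe() already walks through long recordings in 30-second windows, but it does so sequentially; explicit splitting mainly helps when chunks can be spread across workers. The sketch below assumes audio already decoded at 16 kHz: split_with_overlap cuts the signal into fixed-length chunks that overlap slightly so words on a boundary are not lost, and transcribe_chunks fans the chunks out to a thread pool. The transcribe_fn callback is a hypothetical placeholder for whatever model or API call you use, and merging the duplicated text in the overlaps is left out.

```python
from concurrent.futures import ThreadPoolExecutor

SAMPLE_RATE = 16_000


def split_with_overlap(num_samples: int, chunk_s: float = 30.0, overlap_s: float = 1.0,
                       sample_rate: int = SAMPLE_RATE) -> list:
    """Return (start, end) sample ranges covering the signal, overlapping by overlap_s."""
    chunk = int(chunk_s * sample_rate)
    step = chunk - int(overlap_s * sample_rate)
    if step <= 0:
        raise ValueError("overlap must be shorter than the chunk length")
    ranges = []
    start = 0
    while start < num_samples:
        end = min(start + chunk, num_samples)
        ranges.append((start, end))
        if end == num_samples:
            break
        start += step
    return ranges


def transcribe_chunks(samples, transcribe_fn, max_workers: int = 4, **split_kwargs) -> list:
    """Run transcribe_fn on every chunk in parallel; results keep chunk order."""
    ranges = split_with_overlap(len(samples), **split_kwargs)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(lambda r: transcribe_fn(samples[r[0]:r[1]]), ranges))
```

A thread pool suits I/O-bound calls such as a remote transcription API; for CPU-bound local inference, separate processes or one worker per GPU are usually needed to see a real speedup.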

Example: Transcribing Audio with Whisper

Here’s a simple walkthrough for transcribing an audio file using Python Whisper:

```python
import whisper

model = whisper.load_model("small")  # downloaded and cached on first use
result = model.transcribe("meeting.mp3")  # ffmpeg decodes the MP3 behind the scenes
print(f"Transcript: {result['text']}")
```

This script loads the "small" Whisper model, transcribes "meeting.mp3", and prints the resulting text. When accuracy matters more than speed, for example with heavy accents or noisy recordings, consider the "medium" or "large" models.
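For subtitle alignment or search, openai-whisper can also return word-level timing: passing word_timestamps=True to transcribe() adds a "words" list to every segment, each entry holding word, start, end, and probability. The helper below, a hypothetical iter_words, flattens that structure; it is shown on a hand-written dict rather than real model output.

```python
def iter_words(result: dict):
    """Yield (word, start, end) from a transcribe() result made with word_timestamps=True."""
    for segment in result.get("segments", []):
        # Segments produced without word_timestamps=True have no "words" key
        for w in segment.get("words", []):
            yield w["word"].strip(), w["start"], w["end"]


# Usage with a real model (requires openai-whisper and the audio file):
#   import whisper
#   model = whisper.load_model("small")
#   result = model.transcribe("meeting.mp3", word_timestamps=True)
#   for word, start, end in iter_words(result):
#       print(f"{start:7.2f}s  {word}")
```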

Example: Speaker Diarization with Whisper

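Whisper alone does not perform diarization, but WhisperX and NVIDIA NeMo can combine Whisper transcripts with a separate speaker-diarization model to identify speakers. Here’s how to use WhisperX for diarization:

```python
import whisperx
from whisperx.diarize import DiarizationPipeline
device = "cuda"  # on CPU, also pass compute_type="int8" to load_model
audio = whisperx.load_audio("interview.wav")
result = whisperx.load_model("base", device).transcribe(audio)
# Diarization uses gated pyannote models, so a Hugging Face access token is required
diarizer = DiarizationPipeline(use_auth_token="YOUR_HF_TOKEN", device=device)
result = whisperx.assign_word_speakers(diarizer(audio), result)
for segment in result["segments"]:
    print(f"Speaker {segment.get('speaker', 'UNKNOWN')}: {segment['text']}")
```

This code transcribes "interview.wav" with WhisperX, runs a pyannote-based diarization pipeline on the same audio, and labels each transcript segment with the speaker whose turns overlap it most. WhisperX’s README also runs word-level alignment (whisperx.align) before this step so that individual words receive speaker labels; import paths and parameter names can differ between WhisperX versions, so check the README for your installed release. For mobile and Android developers, exploring WebRTC Android solutions can further enhance real-time communication capabilities in your apps.

Advantages and Limitations of Whisper Speech to Text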

Strengths

- Accuracy: Outperforms many open source ASR systems, especially in multilingual and noisy environments.

- Speed: With GPU acceleration or optimized wrappers (faster-whisper), real-time and batch processing are feasible.

- Open Source: No licensing fees, full transparency, and extensibility.

- Privacy: On-premises deployment ensures sensitive data never leaves your infrastructure.

If you’re building applications that require phone integration, consider using a phone call API to connect Whisper’s transcription capabilities with telephony features.

Weaknesses

- Resource Requirements: Larger models demand significant RAM and GPU resources.

- Noisy Audio Challenges: Performance drops in very noisy conditions or with overlapping speakers.
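To make the resource trade-off concrete: the openai-whisper README lists approximate VRAM needs of about 1 GB for tiny and base, 2 GB for small, 5 GB for medium, and 10 GB for large. The sketch below assumes those rough figures and reserves a 20% safety margin (an arbitrary choice) to pick the largest model that fits; pick_model and APPROX_VRAM_GB are illustrative names.

```python
# Approximate VRAM needs in GB, from the openai-whisper README; treat as rough guides.
APPROX_VRAM_GB = {"tiny": 1, "base": 1, "small": 2, "medium": 5, "large": 10}


def pick_model(available_gb: float, headroom: float = 0.8) -> str:
    """Return the largest model whose approximate VRAM need fits the memory budget.

    Only `headroom` (80% by default) of the available memory is counted, leaving
    room for other allocations. Falls back to "tiny" if nothing fits.
    """
    budget = available_gb * headroom
    # Dict order runs from smallest to largest, so the last fitting entry is the biggest
    fitting = [name for name, need in APPROX_VRAM_GB.items() if need <= budget]
    return fitting[-1] if fitting else "tiny"


# Usage with PyTorch on a CUDA machine:
#   import torch
#   gb = torch.cuda.get_device_properties(0).total_memory / 1024**3
#   model_size = pick_model(gb)
```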

Comparison with Other ASR Tools

Compared to Google Speech-to-Text, Microsoft Azure, and Amazon Transcribe, Whisper offers comparable accuracy and flexibility with no licensing fees (you still pay for the hardware or cloud compute that runs it), but it may lag in ease of cloud integration and advanced diarization. However, its community-driven pace means rapid improvement and plentiful alternatives. For developers seeking to add robust video conferencing, a Video Calling API can be integrated to create a comprehensive communication platform.

Real-World Use Cases

Whisper speech to text is powering a wide range of applications:

- Podcast and Video Transcription: Automate subtitle and transcript generation for media production.

- Accessibility for the Hearing Impaired: Enable live captioning and accessible content for users with hearing loss.

- Real-Time Meeting Transcription: Integrate with conferencing platforms to provide instant, searchable meeting notes.

- Multilingual Content Creation: Transcribe webinars, tutorials, and lectures in their original language, or translate them into English, for a global audience.

Developers are integrating Whisper into SaaS tools, mobile apps, and browser extensions, leveraging its open source flexibility to accelerate innovation in 2025. For those looking to add interactive audio features, integrating a Voice SDK can further enhance real-time communication within their applications.

Best Practices, Tips, and Troubleshooting

- Improve Accuracy: Use high-quality microphones and lossless audio formats; select larger Whisper models for complex tasks.

- Optimize Performance: Batch process long recordings, utilize GPU acceleration, and select the right wrapper (WhisperX, faster-whisper) for your use case.

- Error Handling: Watch for issues such as truncated outputs (split long files) or model crashes (reduce the batch size or switch to a smaller model).

- Language Selection: For best results with multilingual content, explicitly specify the spoken language of each file (for example, language="es" in the Python API) instead of relying on automatic detection, which openai-whisper runs on only the first 30 seconds of audio.
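The error-handling and language tips can be combined into one wrapper. The minimal sketch below assumes an injected load_model callable (for example whisper.load_model) and relies on the fact that PyTorch's CUDA out-of-memory error subclasses RuntimeError. It passes an explicit language code to transcribe() and steps down to smaller models only when memory runs out; other errors, such as a file that cannot be decoded, are re-raised. transcribe_with_fallback and MODEL_SIZES are hypothetical names.

```python
MODEL_SIZES = ["large", "medium", "small", "base", "tiny"]


def transcribe_with_fallback(path, load_model, start_size="medium", language=None):
    """Transcribe `path`, stepping down to smaller models after out-of-memory errors.

    `load_model` maps a size name to a model with a transcribe(path, **options)
    method, e.g. whisper.load_model. `language` (such as "en" or "es") skips
    automatic language detection when set. Returns (model_size_used, result).
    """
    options = {"language": language} if language else {}
    last_error = None
    for size in MODEL_SIZES[MODEL_SIZES.index(start_size):]:
        try:
            model = load_model(size)
            return size, model.transcribe(path, **options)
        except MemoryError as err:
            last_error = err
        except RuntimeError as err:
            # CUDA OOM surfaces as a RuntimeError; anything else will not be fixed by a smaller model
            if "out of memory" not in str(err).lower():
                raise
            last_error = err
    raise RuntimeError(f"every model size ran out of memory for {path}") from last_error
```

In practice this would be called as transcribe_with_fallback("meeting.mp3", whisper.load_model, language="en").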

If you want to quickly add video calling to your web app, consider using an embed video calling SDK for rapid deployment and seamless integration.

Conclusion

Whisper speech to text is redefining automatic speech recognition in 2025, empowering developers to build fast, accurate, and privacy-first transcription solutions. Its open source nature, community innovation, and exceptional language support make it a top choice for modern applications. As ASR technology continues to evolve, Whisper’s impact will only grow, ushering in new possibilities for communication, accessibility, and automation.

Ready to build the next generation of voice and video applications? Try it for free and start integrating Whisper speech to text with powerful communication SDKs today!

FAQ