Introduction to Speech Recognition in Python

Speech recognition in Python has changed how developers build interactive, hands-free, and accessible software. As demand grows for smart assistants, automated transcription, and voice-controlled applications, Python’s ecosystem offers robust tools for converting human speech into text. From simple scripts to advanced machine learning systems, Python speech recognition now powers real-world applications in healthcare, accessibility, customer support, and IoT.

With reliable speech-to-text libraries available for Python, developers can integrate voice interfaces, automate note-taking, transcribe meetings, or even build cross-platform voice assistants. This guide covers what you need to know about speech recognition in Python in 2025: installation, libraries, real-time recognition, error handling, and best practices for accuracy. Whether you’re a beginner or a seasoned developer, mastering Python speech recognition will open new possibilities for natural language processing and user experience.

Understanding Speech Recognition vs. Voice Recognition

Before diving into implementation, it’s crucial to differentiate speech recognition and voice recognition. Speech recognition focuses on converting spoken words into machine-readable text. It’s designed for understanding what is being said, regardless of who is speaking. In contrast, voice recognition (or speaker recognition) aims to identify or verify the identity of the speaker based on their voice characteristics.

When working on Python projects, use speech recognition for tasks like transcription, command detection, or accessibility features. Use voice recognition when authentication or personalization is needed. Understanding this difference ensures you select the right Python libraries and APIs for your application’s goals. For developers looking to add real-time communication features, integrating a Python video and audio calling SDK can further enhance the interactivity of your voice-enabled applications.

How Speech Recognition Works in Python

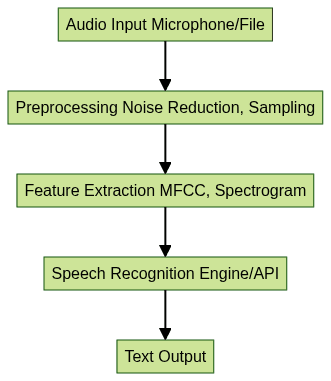

Speech recognition in Python generally follows a multi-step workflow, regardless of the engine or API. Here’s a high-level overview:

- Audio Input: Capture from a microphone or load an audio file.

- Preprocessing: Clean and standardize audio, remove noise.

- Feature Extraction: Transform audio into features for recognition (e.g., MFCCs).

- Speech Recognition Engine: Use APIs or open-source engines (Google, Sphinx, IBM, AssemblyAI, Whisper, etc.) to process and transcribe.

- Text Output: Receive and process the recognized text in Python.
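The preprocessing and feature-extraction steps above can be sketched with the standard library alone. This is an illustrative simplification, not what any particular engine does internally: it uses a synthetic sine tone in place of captured audio, and per-frame RMS energy and zero-crossing rate as stand-ins for richer features such as MFCCs. All function names here are hypothetical.

```python
import math

def synth_tone(freq_hz, duration_s, sample_rate=16000, amplitude=0.5):
    """Generate a sine tone as floats in [-1, 1] (stand-in for captured audio)."""
    n = int(duration_s * sample_rate)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate)
            for i in range(n)]

def frame_signal(samples, frame_len=400, hop_len=160):
    """Split samples into overlapping frames (25 ms window, 10 ms hop at 16 kHz)."""
    return [samples[start:start + frame_len]
            for start in range(0, len(samples) - frame_len + 1, hop_len)]

def rms_energy(frame):
    """Root-mean-square amplitude of one frame."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))

def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose sign differs."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
    return crossings / (len(frame) - 1)

def extract_features(samples, frame_len=400, hop_len=160):
    """Return one (energy, zero-crossing rate) pair per frame."""
    return [(rms_energy(f), zero_crossing_rate(f))
            for f in frame_signal(samples, frame_len, hop_len)]

audio = synth_tone(440, 0.5)
features = extract_features(audio)
print(f"{len(features)} frames, first frame features: {features[0]}")
```

Real engines compute far richer representations, but the shape of the step is the same: a long stream of samples becomes a shorter sequence of per-frame feature vectors that the recognizer consumes.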

Python’s ecosystem supports both cloud-based services (Google Web Speech API, IBM Watson, AssemblyAI) and offline engines (CMU Sphinx, Whisper). Each offers trade-offs between accuracy, privacy, latency, and cost. For those interested in building interactive audio experiences, consider exploring a Voice SDK to add live audio room capabilities to your Python applications.

Popular Python Libraries for Speech Recognition

Python boasts several libraries and APIs to make speech recognition accessible and powerful:

- SpeechRecognition: The most popular library, providing a simple interface to multiple engines (Google, Sphinx, IBM, etc.). It handles audio input, recognition requests, and error handling.

- PyAudio: Essential for microphone input, enabling real-time audio capture and streaming. Often used alongside SpeechRecognition.

- Pocketsphinx: Lightweight, offline engine from CMU Sphinx. Good for embedded or privacy-sensitive applications.

- Whisper (OpenAI): Neural network-based, robust for multiple languages and accents. Can run locally or via APIs.

- AssemblyAI, DeepSpeech, Vosk: AssemblyAI is a cloud API, while DeepSpeech and Vosk are open-source engines that run locally. Between them they cover advanced speech-to-text, diarization, and broad language support.

Pros & Cons:

- SpeechRecognition: Easy to use, supports multiple engines, but real-time performance depends on backend.

- PyAudio: Real-time capture, but installation can be tricky on some platforms.

- Pocketsphinx: Offline, but less accurate and harder to tune for some languages.

- Whisper: State-of-the-art accuracy, heavier resource requirements.
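As a rough illustration of the Whisper option, the sketch below wraps local transcription in a small helper. It assumes the `openai-whisper` package (import name `whisper`) and its `ffmpeg` dependency are installed; the helper name `transcribe_with_whisper` and the default `"base"` model choice are illustrative, not recommendations from the library.

```python
import importlib.util

def transcribe_with_whisper(path, model_name="base"):
    """Transcribe an audio file locally using the openai-whisper package."""
    if importlib.util.find_spec("whisper") is None:
        raise RuntimeError(
            "openai-whisper is not installed; run: pip install openai-whisper"
        )
    # Imported lazily so this helper can be defined without the package present.
    import whisper

    model = whisper.load_model(model_name)  # downloads model weights on first use
    result = model.transcribe(path)         # dict containing "text" among other keys
    return result["text"].strip()
```

Calling `transcribe_with_whisper("audio.wav")` would return the transcript as a string. Larger model names trade speed and memory for accuracy, which is the "heavier resource requirements" cost noted above.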

For most applications, combining SpeechRecognition with PyAudio (for live input) or Whisper (for advanced transcription) provides the best balance in 2025. If your project requires seamless integration of audio and video communication, leveraging a Python video and audio calling SDK can streamline the process and enhance user experience.

Setting Up a Speech Recognition Environment in Python

To get started with speech recognition in Python, make sure you have Python 3 installed (Python 3.8+ is recommended for best compatibility). Setting up your environment involves installing a few core libraries:

    # Install SpeechRecognition
    pip install SpeechRecognition

    # For microphone input, install PyAudio
    pip install PyAudio

    # For offline recognition (CMU Sphinx)
    pip install pocketsphinx

    # For OpenAI Whisper (optional)
    pip install openai-whisper

Troubleshooting Installation:

- On Windows, PyAudio may require pre-built wheels (`pip install pipwin && pipwin install pyaudio`).

- On Linux, you may need development headers: run `sudo apt-get install portaudio19-dev python3-pyaudio` before installing PyAudio.

- For Whisper, GPU (CUDA) support is recommended for large models.

Check your Python version:

    import sys
    print(sys.version)
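Beyond the interpreter version, it can help to confirm which of the libraries are actually importable before running any examples. The sketch below is one way to do that with the standard library; the `check_environment` helper is hypothetical, and the mapping reflects the fact that pip package names and import names differ for some of these packages.

```python
import importlib.util
import sys

# pip package name -> name used in "import ..."
PACKAGES = {
    "SpeechRecognition": "speech_recognition",
    "PyAudio": "pyaudio",
    "pocketsphinx": "pocketsphinx",
    "openai-whisper": "whisper",
}

def check_environment(packages=PACKAGES, min_version=(3, 8)):
    """Report whether Python is new enough and which packages can be imported."""
    report = {"python_ok": sys.version_info >= min_version}
    for pip_name, module_name in packages.items():
        # find_spec returns None when a top-level module cannot be located.
        report[pip_name] = importlib.util.find_spec(module_name) is not None
    return report

for name, ok in check_environment().items():
    print(f"{name:20} {'OK' if ok else 'missing'}")
```

Only SpeechRecognition is needed for file transcription with a cloud engine; the other entries matter only if you use microphone input, Sphinx, or Whisper.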

If you encounter issues, refer to each library’s documentation or search for platform-specific fixes. Keeping your environment updated ensures smoother installations and fewer compatibility issues. For those interested in adding phone-based communication features, integrating a phone call API can be a valuable addition to your Python environment.

Basic Speech Recognition Example in Python

Let’s start with a simple example: transcribing an audio file using the SpeechRecognition library. This example demonstrates best practices for error handling and clarity.

    import speech_recognition as sr

    # Initialize recognizer
    recognizer = sr.Recognizer()

    # Load audio file
    with sr.AudioFile("audio.wav") as source:
        audio_data = recognizer.record(source)

    try:
        # Recognize speech using Google Web Speech API
        text = recognizer.recognize_google(audio_data)
        print(f"Transcribed Text: {text}")
    except sr.UnknownValueError:
        print("Error: Could not understand the audio.")
    except sr.RequestError as e:
        print(f"API Error: {e}")

Explanation:

- Loads an audio file and initializes the recognizer.

- Handles `UnknownValueError` if speech isn’t recognized.

- Handles `RequestError` for API/network issues.
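`sr.AudioFile` reads uncompressed formats such as PCM WAV, so a file in an unexpected format is a common cause of failures before recognition even starts. The sketch below uses the standard-library `wave` module to write a small test file and inspect its properties. The helper names and the sine tone (a stand-in for real speech) are assumptions made for illustration.

```python
import math
import os
import struct
import tempfile
import wave

def write_test_tone(path, freq_hz=440, duration_s=1.0, sample_rate=16000):
    """Write a mono 16-bit PCM WAV file containing a sine tone."""
    n_frames = int(duration_s * sample_rate)
    frames = b"".join(
        struct.pack("<h", int(32767 * 0.5 * math.sin(2 * math.pi * freq_hz * i / sample_rate)))
        for i in range(n_frames)
    )
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)            # 2 bytes per sample = 16-bit audio
        wav.setframerate(sample_rate)
        wav.writeframes(frames)

def describe_wav(path):
    """Return channel count, sample width, sample rate, and duration of a WAV file."""
    with wave.open(path, "rb") as wav:
        rate = wav.getframerate()
        return {
            "channels": wav.getnchannels(),
            "sample_width_bytes": wav.getsampwidth(),
            "sample_rate": rate,
            "duration_s": wav.getnframes() / rate,
        }

tone_path = os.path.join(tempfile.gettempdir(), "sr_test_tone.wav")
write_test_tone(tone_path)
info = describe_wav(tone_path)
print(info)
```

Running `describe_wav` on your own recording before transcription is a quick way to spot stereo files, unusual sample rates, or unexpectedly short clips.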

This pattern forms the core of most Python speech recognition workflows. If you’re looking to build real-time voice chat or conferencing features, a Voice SDK can help you add scalable live audio rooms to your Python applications.

Real-Time Speech Recognition in Python Using Microphone

Real-time speech-to-text applications in Python use live microphone input. Here’s how to capture audio, adjust for background noise, and recognize each phrase as soon as it has been captured:

    import speech_recognition as sr

    recognizer = sr.Recognizer()

    # Use default microphone as the source
    with sr.Microphone() as source:
        print("Calibrating for ambient noise... Please wait.")
        recognizer.adjust_for_ambient_noise(source, duration=2)
        print("Listening...")
        audio_data = recognizer.listen(source)

    try:
        text = recognizer.recognize_google(audio_data)
        print(f"You said: {text}")
    except sr.UnknownValueError:
        print("Could not understand the audio.")
    except sr.RequestError as e:
        print(f"Could not request results; {e}")

Key Points:

- `adjust_for_ambient_noise` improves accuracy in noisy environments.

- Always handle errors gracefully for a robust user experience.
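One way to handle errors gracefully is to retry transient failures instead of giving up on the first one. The sketch below is a generic retry helper with exponential backoff. `recognize_with_retry` is a hypothetical name, not part of SpeechRecognition, and the flaky recognizer is a simulated stand-in so the example runs without a microphone or network.

```python
import time

def recognize_with_retry(recognize, audio, retries=3, base_delay=0.5,
                         retry_on=(ConnectionError,), sleep=time.sleep):
    """Call recognize(audio), retrying the given exception types with backoff."""
    for attempt in range(1, retries + 1):
        try:
            return recognize(audio)
        except retry_on:
            if attempt == retries:
                raise  # out of attempts: let the caller see the error
            sleep(base_delay * 2 ** (attempt - 1))  # 0.5 s, 1 s, 2 s, ...

# Simulated recognizer that fails twice before succeeding.
calls = {"n": 0}

def flaky_recognizer(audio):
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("simulated network failure")
    return "hello world"

text = recognize_with_retry(flaky_recognizer, b"fake-audio", sleep=lambda s: None)
print(f"Recognized after {calls['n']} attempts: {text}")
```

With the real library, the call would look something like `recognize_with_retry(recognizer.recognize_google, audio_data, retry_on=(sr.RequestError,))`. Retrying `UnknownValueError` is usually pointless, since the same audio will be unintelligible again.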

For developers aiming to build interactive voice chat or group audio features, integrating a Voice SDK is a powerful way to enable live audio rooms and real-time communication in your Python projects.

Working with Multiple Speech Recognition Engines in Python

One of the strengths of the SpeechRecognition library is its support for different engines. You can switch between the Google Web Speech API (used in the examples above), CMU Sphinx (offline), IBM, and others depending on your needs.

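    import speech_recognition as sr

    recognizer = sr.Recognizer()
    with sr.AudioFile("audio.wav") as source:
        audio_data = recognizer.record(source)

    # Using CMU Sphinx for offline recognition (requires pocketsphinx)
    try:
        text = recognizer.recognize_sphinx(audio_data)
        print(f"Sphinx Output: {text}")
    except sr.UnknownValueError:
        print("Sphinx could not understand the audio.")
    except sr.RequestError as e:
        print(f"Sphinx error: {e}")

    # Using IBM Speech to Text (requires API credentials). The credential
    # arguments may differ between SpeechRecognition releases; check yours.
    try:
        text = recognizer.recognize_ibm(audio_data, username="YOUR_IBM_USERNAME", password="YOUR_IBM_PASSWORD")
        print(f"IBM Output: {text}")
    except sr.UnknownValueError:
        print("IBM could not understand the audio.")
    except sr.RequestError as e:
        print(f"IBM API error: {e}")

Advantages & Limitations: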

- Google API: High accuracy, requires internet connection.

- Sphinx: Works offline, less accurate for some accents/languages.

- IBM: Good enterprise support, paid API.
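Because each engine has different failure modes, one possible pattern is to try engines in order of preference and fall back when one fails. The sketch below shows the idea with simulated engines so it runs anywhere. `transcribe_with_fallback` is a hypothetical helper, and catching `Exception` broadly is a simplification for the demo.

```python
def transcribe_with_fallback(audio, engines, catch=(Exception,)):
    """Try each (name, recognize) pair in order; return (name, text) on first success."""
    errors = {}
    for name, recognize in engines:
        try:
            return name, recognize(audio)
        except catch as exc:
            errors[name] = exc  # remember why this engine failed, then move on
    raise RuntimeError(f"All engines failed: {errors}")

# Simulated engines standing in for real recognizer methods.
def offline_engine(audio):
    raise ValueError("simulated: could not understand audio")

def cloud_engine(audio):
    return "turn on the lights"

engine_name, text = transcribe_with_fallback(
    b"fake-audio",
    [("sphinx", offline_engine), ("google", cloud_engine)],
)
print(f"{engine_name}: {text}")
```

With SpeechRecognition, the engine list might be `[("sphinx", recognizer.recognize_sphinx), ("google", recognizer.recognize_google)]` with `catch=(sr.UnknownValueError, sr.RequestError)`, which keeps audio on the device unless the offline engine cannot handle it.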

Switch engines based on privacy, cost, and deployment requirements. If your application requires robust audio and video communication, consider combining speech recognition with a Python video and audio calling SDK for a comprehensive solution.

Improving Accuracy: Tips and Best Practices

Maximizing speech recognition accuracy in Python requires a combination of strategies:

- Noise Handling: Use `adjust_for_ambient_noise`, record in quieter spaces, and preprocess audio to filter unwanted sounds.

- Language Selection: Specify the language code (e.g., `recognize_google(audio_data, language='en-US')`) for better results.

- Audio Preprocessing: Normalize volume, trim silences, or use denoising libraries before recognition.

- Engine Selection: Test different engines/APIs for your use case.

- Microphone Quality: Use high-quality microphones and sample at recommended rates.
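The audio preprocessing tip can be illustrated with two small pure-Python helpers: peak normalization and silence trimming. This is a minimal sketch operating on a list of float samples in [-1, 1]. The function names and the threshold value are assumptions, and production code would more likely use a dedicated audio library.

```python
def peak_normalize(samples, target_peak=0.9):
    """Scale samples so the loudest one reaches target_peak."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0:
        return list(samples)  # all silence: nothing to scale
    gain = target_peak / peak
    return [s * gain for s in samples]

def trim_silence(samples, threshold=0.02):
    """Drop leading and trailing samples quieter than threshold."""
    loud = [i for i, s in enumerate(samples) if abs(s) >= threshold]
    if not loud:
        return []
    return samples[loud[0]:loud[-1] + 1]

raw = [0.0, 0.001, 0.0, 0.1, -0.3, 0.2, 0.0, 0.001]
clean = peak_normalize(trim_silence(raw))
print(f"{len(raw)} samples in, {len(clean)} samples out")
```

Trimming before normalizing means the gain is computed only from the retained speech region, not from noise spikes in the discarded edges.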

By following these best practices, your Python speech recognition projects will achieve more reliable and accurate results, especially in real-time or noisy settings. For those looking to add phone-based features, integrating a phone call API can help you enable calling capabilities directly within your Python applications.

Advanced Use Cases: Voice Commands and Accessibility

Speech recognition in Python opens the door to innovative applications:

- Voice Assistants: Build custom Python voice assistants for home automation, chatbots, or productivity tools. Combine with Python text-to-speech libraries for interactive dialogue.

- Accessibility: Enable voice navigation and dictation for users with disabilities, making your apps more widely accessible.

- Real-World Example: Integrate speech-to-text into meeting transcription, voice-controlled robots, or smart IoT devices.
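For the voice command use case, the recognized text still has to be mapped to an action. A minimal sketch of that step is shown below, using simple substring matching on the transcript. The `make_dispatcher` helper, the command phrases, and the handlers are all hypothetical.

```python
def make_dispatcher(commands):
    """Build a function that runs the handler for the first phrase found in a transcript."""
    def dispatch(transcript):
        text = transcript.lower().strip()
        for phrase, handler in commands.items():
            if phrase in text:
                return handler()
        return None  # no known command in the transcript
    return dispatch

state = {"lights": "off"}

def lights_on():
    state["lights"] = "on"
    return "Lights turned on"

def lights_off():
    state["lights"] = "off"
    return "Lights turned off"

dispatch = make_dispatcher({
    "turn on the lights": lights_on,
    "turn off the lights": lights_off,
})

print(dispatch("Please turn on the lights"))
```

In an assistant, the string passed to `dispatch` would be the text returned by the recognizer. Substring matching is brittle against recognition errors, so real systems often add fuzzy matching or an intent classifier.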

These advanced use cases demonstrate Python’s versatility in delivering speech-driven solutions. For large-scale or interactive audio environments, a Voice SDK can help you implement live audio rooms and voice chat features for accessibility and collaboration.

Conclusion and Next Steps for Speech Recognition in Python

Speech recognition in Python empowers developers to create intuitive, voice-enabled applications in 2025 and beyond. By choosing the right libraries and following best practices, you can achieve high accuracy in both offline and real-time scenarios. Continue exploring API documentation, experiment with preprocessing techniques, and contribute to open-source Python speech recognition projects to stay at the forefront of this field.


FAQ