Introduction to Speech Recognition and Synthesis from Google

Speech recognition and synthesis have revolutionized human-computer interaction, enabling seamless communication between users and digital systems through natural spoken language. In 2025, these technologies underpin countless real-world applications, from real-time transcription for meetings to AI-driven voice assistants and accessibility tools.

Google stands at the forefront of this transformation, offering state-of-the-art solutions for both speech recognition (Speech-to-Text) and speech synthesis (Text-to-Speech). These services leverage advanced deep learning models and vast multilingual datasets to deliver high accuracy, natural-sounding voices, and robust scalability. In this post, we explore the core capabilities, implementation strategies, and innovations powering speech recognition and synthesis from Google.

Understanding Google Speech Recognition (Speech-to-Text)

What is Google Speech-to-Text?

Google Speech-to-Text is a cloud-based API that converts spoken language from audio files or streams into written text. It supports real-time and batch transcription, making it ideal for applications like voice search, call analytics, and live captioning. By leveraging Google's AI expertise and global infrastructure, developers can build robust speech recognition solutions that scale effortlessly. For those developing interactive voice applications, integrating a Voice SDK can further enhance real-time audio experiences.

Core Features and Capabilities

- Multilingual Support: Recognizes over 125 languages and variants, enabling truly global applications.

- Real-time & Batch Transcription: Offers both streaming APIs for instant transcription and batch processing for large volumes of audio.

- Chirp Model: Utilizes Google’s advanced Chirp model for improved accuracy across accents and noisy environments.

- Security & Compliance: Provides enterprise-grade security, data residency options, and compliance with regulations like GDPR and HIPAA.

- Speaker Diarization: Distinguishes between multiple speakers in a conversation.

- Automatic Punctuation & Formatting: Adds punctuation, capitalization, and formatting for more readable transcripts.
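
As a rough illustration of what speaker diarization output makes possible, the sketch below groups word-level results into speaker turns. It assumes each recognized word arrives with a numeric speaker tag; the `(word, speaker_tag)` tuples and the `group_turns` helper are hypothetical stand-ins for illustration, not part of Google's client library.

```python
from itertools import groupby


def group_turns(words):
    """Collapse (word, speaker_tag) pairs into consecutive speaker turns."""
    turns = []
    # groupby only merges adjacent items, so conversation order is preserved.
    for tag, group in groupby(words, key=lambda pair: pair[1]):
        turns.append((tag, " ".join(word for word, _ in group)))
    return turns


# Hypothetical diarized words, shaped like (word, speaker_tag).
sample = [("hello", 1), ("there", 1), ("hi", 2), ("how", 1), ("are", 1), ("you", 1)]
for tag, text in group_turns(sample):
    print(f"Speaker {tag}: {text}")
```

Grouping only adjacent words keeps the back-and-forth of the conversation intact instead of lumping everything one speaker said into a single block.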

How Google Speech Recognition Works

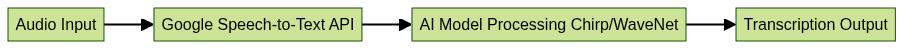

The Google Speech-to-Text workflow can be visualized as follows:

Audio data, either as a file or stream, is sent to the Google API endpoint. The audio is processed by deep learning models such as Chirp or WaveNet, which decode the spoken words and output accurate, punctuated text. For developers looking to add real-time communication features, exploring a

phone call api

can be beneficial for integrating voice capabilities into their platforms.Use Cases for Google Speech Recognition

Common use cases include voice assistants, meeting transcription, media captioning, customer support analytics, and accessibility tools. Many of these applications benefit from integrating a Video Calling API, enabling seamless audio and video communication alongside speech recognition.

Exploring Google Speech Synthesis (Text-to-Speech)

What is Google Text-to-Speech?

Google Text-to-Speech is a powerful API that transforms written text into natural-sounding spoken audio using deep neural networks. It serves a wide range of industries, powering virtual agents, IVR systems, audiobook production, and accessible content for visually impaired users. Google’s ongoing AI research ensures continuous improvements in voice quality and language coverage. For those building interactive audio experiences, leveraging a Live Streaming API SDK can help deliver high-quality, scalable live audio content.

WaveNet and Gemini 2.5: Underlying Technology

Google’s WaveNet, developed by DeepMind, marked a significant leap in realistic speech synthesis, modeling raw audio waveforms to produce highly natural voices. In 2025, Gemini 2.5 expands on this foundation, integrating multimodal AI to interpret not only text but also contextual cues from images and audio, resulting in more expressive, context-aware speech synthesis. These innovations drive Google's leadership in voice quality, flexibility, and expressive capabilities.

Key Features and Languages Supported

- Over 220 voices across 50+ languages and variants

- Custom voice models through Vertex AI

- Support for SSML (Speech Synthesis Markup Language) for advanced control

- Real-time streaming and batch synthesis
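
Batch synthesis of long documents usually means sending the text as several requests. The sketch below splits text at sentence boundaries so that each piece stays under a byte budget. The `split_for_synthesis` helper is hypothetical, and the 5000-byte default is an assumption about the per-request input limit that should be checked against the current Text-to-Speech quotas.

```python
import re


def split_for_synthesis(text, max_bytes=5000):
    """Split text at sentence boundaries so each chunk fits in max_bytes of UTF-8."""
    # max_bytes is an assumed limit; confirm it against current service quotas.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks, current = [], ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if len(candidate.encode("utf-8")) <= max_bytes:
            current = candidate
        else:
            if current:
                chunks.append(current)
            # A single oversized sentence is kept whole here; a real pipeline
            # would need to split it further.
            current = sentence
    if current:
        chunks.append(current)
    return chunks


print(split_for_synthesis("One. Two. Three.", max_bytes=9))
```

Measuring encoded bytes rather than characters matters for non-ASCII text, where one character can occupy several bytes.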

Common Applications for Google Speech Synthesis

Common applications include voice UIs, screen readers, IVR, audiobooks, language learning tools, and dynamic media content generation. Developers working with Python can take advantage of a Python video and audio calling SDK to add robust audio and video features to their applications.

Implementation: Using Google Speech APIs

Getting Started with Google Speech-to-Text API

To use Google Speech-to-Text, enable the API in Google Cloud Console, create a service account, and install the client library. Here’s a basic Python example for transcribing an audio file:

    import os

    from google.cloud import speech

    # Point the client library at a service account key file.
    os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = "path/to/credentials.json"

    client = speech.SpeechClient()

    # Read the whole audio file into memory (suitable for short clips).
    with open("audio.wav", "rb") as audio_file:
        content = audio_file.read()

    audio = speech.RecognitionAudio(content=content)
    config = speech.RecognitionConfig(
        encoding=speech.RecognitionConfig.AudioEncoding.LINEAR16,
        sample_rate_hertz=16000,  # must match the file's actual sample rate
        language_code="en-US",
    )

    # Synchronous recognition; blocks until the transcript is ready.
    response = client.recognize(config=config, audio=audio)

    # Each result covers a consecutive portion of the audio; print the top alternative.
    for result in response.results:
        print(f"Transcript: {result.alternatives[0].transcript}")
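
The example above hard-codes a 16 kHz sample rate and uses the synchronous call. As a hedged sketch, the standard-library code below reads the real sample rate and duration from a WAV header and picks a client method name by length. The helper names are hypothetical, and the 60-second cutoff is an assumption about the synchronous request limit that should be verified against current quotas; longer audio is typically handled with `long_running_recognize`.

```python
import io
import wave

# Assumed cutoff for synchronous requests; verify against current quotas.
SYNC_LIMIT_SECONDS = 60


def inspect_wav(data):
    """Return (sample_rate_hertz, channels, duration_seconds) from WAV bytes."""
    with wave.open(io.BytesIO(data), "rb") as wav:
        rate = wav.getframerate()
        return rate, wav.getnchannels(), wav.getnframes() / rate


def choose_method(duration_seconds, limit=SYNC_LIMIT_SECONDS):
    """Pick the client method name based on audio length."""
    return "recognize" if duration_seconds <= limit else "long_running_recognize"


def make_silent_wav(seconds, rate=16000):
    """Build an in-memory mono 16-bit WAV of silence for demonstration."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(rate)
        wav.writeframes(b"\x00\x00" * int(rate * seconds))
    return buffer.getvalue()


rate, channels, duration = inspect_wav(make_silent_wav(2))
print(rate, channels, duration, choose_method(duration))
```

Passing the detected rate into `sample_rate_hertz` avoids a mismatch between the configuration and the file's actual sample rate.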

For Android developers, integrating WebRTC Android technology can further enhance real-time communication and audio processing capabilities in mobile applications.

Getting Started with Google Text-to-Speech API

First, enable the Text-to-Speech API, set up billing, and authenticate. Here’s a Python script to synthesize speech from text:

    import os

    from google.cloud import texttospeech

    # Point the client library at a service account key file.
    os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = "path/to/credentials.json"

    client = texttospeech.TextToSpeechClient()

    synthesis_input = texttospeech.SynthesisInput(text="Hello, world!")

    # Select a voice by language and gender; the service picks a matching voice.
    voice = texttospeech.VoiceSelectionParams(
        language_code="en-US",
        ssml_gender=texttospeech.SsmlVoiceGender.NEUTRAL,
    )
    audio_config = texttospeech.AudioConfig(
        audio_encoding=texttospeech.AudioEncoding.MP3,
    )

    response = client.synthesize_speech(
        input=synthesis_input, voice=voice, audio_config=audio_config
    )

    # The response carries the encoded audio as raw bytes.
    with open("output.mp3", "wb") as out:
        out.write(response.audio_content)

Advanced Features: Customization, SSML, Streaming, Speaker Diarization

Google’s speech APIs offer customization features such as:

- Custom phrase hints to improve recognition of domain-specific terminology

- Streaming recognition and synthesis for real-time applications

- Speaker diarization to separate speakers in a transcript

- Speech Synthesis Markup Language (SSML) to control pronunciation, pitch, emphasis, and pauses
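
To make these options concrete, here is a minimal sketch that assembles a JSON request body combining phrase hints, automatic punctuation, and diarization. The field names follow the Speech-to-Text v1 REST request format as I understand it and should be checked against the current API reference. The `build_recognize_body` helper and its defaults are hypothetical.

```python
import base64
import json


def build_recognize_body(audio_bytes, phrases, language_code="en-US",
                         sample_rate_hertz=16000, max_speakers=2):
    """Assemble a recognize request body as a plain dict (assumed v1 REST shape)."""
    return {
        "config": {
            "encoding": "LINEAR16",
            "sampleRateHertz": sample_rate_hertz,
            "languageCode": language_code,
            "enableAutomaticPunctuation": True,
            # Phrase hints bias recognition toward domain-specific terms.
            "speechContexts": [{"phrases": list(phrases)}],
            "diarizationConfig": {
                "enableSpeakerDiarization": True,
                "minSpeakerCount": 1,
                "maxSpeakerCount": max_speakers,
            },
        },
        # Inline audio content is sent base64-encoded in JSON requests.
        "audio": {"content": base64.b64encode(audio_bytes).decode("ascii")},
    }


body = build_recognize_body(b"\x00\x01", ["Vertex AI", "Chirp"])
print(json.dumps(body["config"], indent=2))
```

Building the body as a plain dictionary keeps the sketch independent of any client library version; the Python client accepts equivalent settings through `RecognitionConfig`.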

For developers seeking to build interactive voice applications, integrating a Voice SDK can streamline the process of adding live audio rooms and real-time communication features.

Example: SSML for Text-to-Speech

    # Emphasis and prosody tags must wrap the text they apply to.
    ssml = '''<speak>
      Hello, <break time="500ms"/> this is an <emphasis level="strong">example</emphasis> of <prosody pitch="+6st">SSML</prosody>.
    </speak>'''

    synthesis_input = texttospeech.SynthesisInput(ssml=ssml)
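
When SSML is generated from user-supplied text, characters such as `&` and `<` need to be escaped or the markup becomes invalid XML. The sketch below shows one way to do that with the standard library; `build_ssml` and its pause default are hypothetical names chosen for illustration.

```python
from xml.sax.saxutils import escape


def build_ssml(sentences, pause_ms=300):
    """Join sentences into an SSML document with a fixed pause between them."""
    pause = f'<break time="{pause_ms}ms"/>'
    # escape() replaces &, <, and > so plain text cannot break the markup.
    body = pause.join(f"<s>{escape(sentence)}</s>" for sentence in sentences)
    return f"<speak>{body}</speak>"


print(build_ssml(["Q&A starts now.", "Costs are < $5."]))
```

The resulting string can be passed as the `ssml` argument of `SynthesisInput`.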

Security, Compliance, and Data Residency

Speech recognition and synthesis from Google prioritize security at every step. All data is encrypted in transit and at rest, with options for data residency in specific geographic regions. Google Cloud speech services adhere to industry standards including ISO 27001, GDPR, and HIPAA. Access controls, audit logging, and service account management further ensure compliance for sensitive and regulated workloads.

Innovations: AI and Multimodal Advances

Gemini 2.5 and Multimodal Audio

Gemini 2.5 represents Google’s latest leap in AI-driven speech processing. By fusing audio analysis with visual and language models, Gemini 2.5 enables multimodal understanding, interpreting not just spoken words but also contextual cues from images, video, and environmental sounds. This advance paves the way for smarter virtual agents, accessibility tools, and creative AI audio dialog systems in 2025. For platforms aiming to support live, interactive audio experiences, a Voice SDK can be a valuable addition to the tech stack.

Research Innovations: Chirp, WaveNet, and Beyond

Google’s Chirp model sets new benchmarks for speech recognition accuracy, especially in adverse acoustic conditions. WaveNet, meanwhile, continues to redefine speech synthesis realism. Ongoing research in multilingual speech recognition, on-device capabilities, and low-resource language support ensures Google AI speech innovations remain at the cutting edge. The integration of these technologies into Vertex AI speech solutions further accelerates enterprise adoption.

Roadmap: The Future of Google Speech Processing

Expect continued advances in real-time, on-device speech processing, larger and more expressive voice models, and deeper integration with the broader Google AI ecosystem.

Best Practices and Use Cases for Google Speech Recognition and Synthesis

Building Accessible, Multilingual, and Scalable Solutions

To maximize the value of speech recognition and synthesis from Google, design systems with:

- Accessibility: Use speech APIs to generate captions, transcriptions, and spoken feedback for users of all abilities.

- Multilingual Support: Leverage Google’s extensive language models to reach global audiences.

- Scalability: Employ batch and streaming APIs to handle workloads ranging from single requests to millions of interactions daily.
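
Streaming workloads send audio in small pieces rather than as one upload. As a minimal sketch, the generator below slices raw audio bytes into fixed-size chunks. The `iter_chunks` name and the 3200-byte default (about 100 ms of 16 kHz, 16-bit mono audio) are illustrative assumptions, not values prescribed by the API.

```python
def iter_chunks(data, chunk_size=3200):
    """Yield successive chunk_size slices of data; the last one may be shorter."""
    for start in range(0, len(data), chunk_size):
        yield data[start:start + chunk_size]


# 3200 bytes is roughly 100 ms of 16 kHz, 16-bit mono PCM (assumed format).
one_second_of_silence = b"\x00\x00" * 16000
chunks = list(iter_chunks(one_second_of_silence))
print(len(chunks), len(chunks[0]))
```

Each chunk would then be wrapped in a streaming request by whichever client is in use.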

Real-world Examples and Case Studies

- Media companies automate captioning and translation for global content delivery.

- Healthcare providers use real-time transcription for clinical documentation.

- EdTech platforms create interactive voice-based learning experiences.

Tips for Optimizing API Usage and Costs

- Choose real-time streaming only when necessary; prefer batch for large files.

- Use custom phrase hints to reduce errors and reprocessing costs.

- Monitor quotas and usage in Google Cloud Console for efficient scaling.
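
For quick budgeting before a large batch job, a rough estimate can be computed from audio durations. In the sketch below, the per-minute rate and the billing increment are placeholders supplied by the caller, not Google's actual pricing; `estimate_cost` is a hypothetical helper.

```python
import math


def estimate_cost(durations_seconds, rate_per_minute, increment_seconds=1):
    """Return (billable_minutes, cost) with each file rounded up to the increment."""
    # rate_per_minute and increment_seconds are caller-supplied assumptions;
    # take real values from the current pricing page.
    billable_seconds = sum(
        math.ceil(duration / increment_seconds) * increment_seconds
        for duration in durations_seconds
    )
    minutes = billable_seconds / 60
    return minutes, minutes * rate_per_minute


minutes, cost = estimate_cost([30, 90], rate_per_minute=0.02)
print(f"{minutes:.1f} billable minutes, estimated cost {cost:.2f}")
```

Comparing this estimate with the usage reported in Google Cloud Console is a simple way to spot unexpected reprocessing.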

Conclusion

In 2025, Google’s leadership in speech recognition and synthesis empowers developers to build smarter, more inclusive, and globally scalable applications. With advanced models like Chirp, WaveNet, and Gemini 2.5, and flexible APIs for both speech-to-text and text-to-speech, the possibilities are vast. Experiment with Google’s speech APIs today to unlock new experiences in audio, accessibility, and AI-powered dialog. If you’re ready to get started, try it for free and explore the full potential of voice technology.
