Introduction to Speech Recognition and Synthesis

Speech recognition and synthesis have become pivotal technologies in the digital world of 2025. At their core, these technologies empower machines to understand and generate human speech, bridging the gap between humans and computers. Their rapid adoption is transforming everything from accessibility tools to enterprise applications, unlocking new interaction paradigms and user experiences.

The impact of speech recognition and synthesis is visible in everyday life: virtual assistants, real-time transcription in meetings, automated customer support, and hands-free interfaces. As speech AI matures, it not only enhances convenience but also drives inclusivity and productivity across domains.

What is Speech Recognition and Synthesis?

Speech recognition and synthesis, often referred to as ASR (Automatic Speech Recognition) and TTS (Text-to-Speech), are complementary technologies within the speech AI landscape.

- ASR (Speech Recognition): Converts spoken language into written text. Used in applications like voice dictation, transcription services, and voice interfaces.

- TTS (Speech Synthesis): Converts textual information into natural-sounding spoken audio. Powers virtual assistants, audio books, and accessibility tools.

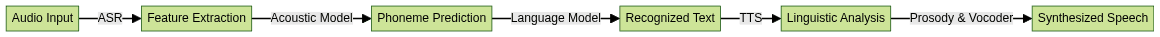

Core Technologies & Terminology:

- Feature Extraction: Transforming raw audio into acoustic features.

- Acoustic Model: Maps features to phonetic units or words.

- Language Model: Provides context to improve recognition accuracy.

- Vocoder: In synthesis, generates the audio waveform from intermediate acoustic features (such as a mel spectrogram) that encode the linguistic and prosodic content.

- Prosody: The rhythm, stress, and intonation of speech.

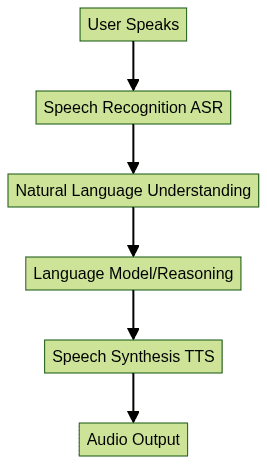
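To make the language model's role concrete, here is a minimal sketch of how context can separate two similar-sounding hypotheses. It assumes a toy bigram model with add-one smoothing; the corpus, the function names, and the candidate phrases are all invented for illustration, and production systems typically use far larger statistical or neural models.

```python
import math
from collections import Counter

# Toy training corpus (made up for illustration only).
CORPUS = [
    "please recognize speech",
    "systems recognize speech",
    "recognize speech quickly",
    "a nice beach",
]

def train_bigram_counts(sentences):
    """Count unigrams and bigrams, with <s> marking the sentence start."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split()
        unigrams.update(tokens)
        bigrams.update(zip(tokens, tokens[1:]))
    return unigrams, bigrams

def log_prob(sentence, unigrams, bigrams):
    """Log-probability of a sentence under an add-one smoothed bigram model."""
    vocab_size = len(unigrams)
    tokens = ["<s>"] + sentence.split()
    total = 0.0
    for prev, word in zip(tokens, tokens[1:]):
        numerator = bigrams[(prev, word)] + 1
        denominator = unigrams[prev] + vocab_size
        total += math.log(numerator / denominator)
    return total

def pick_best(hypotheses, unigrams, bigrams):
    """Return the hypothesis the language model finds most probable."""
    return max(hypotheses, key=lambda h: log_prob(h, unigrams, bigrams))

unigrams, bigrams = train_bigram_counts(CORPUS)
candidates = ["recognize speech", "wreck a nice beach"]
best = pick_best(candidates, unigrams, bigrams)
print(best)
```

In a real recognizer, this language model score would be combined with the acoustic model's score for each hypothesis rather than used on its own.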

How Speech Recognition Works

Speech recognition (ASR) transforms spoken language into machine-readable text through a multi-stage process:

- Audio Capture: Microphones or audio files are used to collect speech.

- Feature Extraction: The audio signal is converted into feature vectors (e.g., MFCCs, spectrograms) representing the sound's characteristics.

- Acoustic Modeling: Machine learning models, often deep neural networks, map features to phonemes or subword units.

- Language Modeling: Statistical or neural models predict the most probable word sequence given the context.

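- Decoding: A search procedure (for example, beam search) combines the acoustic and language model scores to select the most likely transcript, which is then output for further processing.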
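The feature extraction step above can be sketched in a few lines. This is a simplified illustration, not a full MFCC or spectrogram pipeline: it only splits audio into overlapping frames, applies a Hamming window, and computes one log-energy value per frame. The 25 ms frame and 10 ms hop are common choices assumed here, and the input is a synthetic signal rather than recorded speech.

```python
import math

def frame_signal(samples, frame_len, hop_len):
    """Split samples into overlapping frames; a tail shorter than one frame is dropped."""
    frames = []
    for start in range(0, len(samples) - frame_len + 1, hop_len):
        frames.append(samples[start:start + frame_len])
    return frames

def hamming(n):
    """Hamming window of length n, used to taper the edges of each frame."""
    return [0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]

def log_energy(frame, window):
    """Log of the windowed frame energy; the small floor avoids log(0) on silence."""
    energy = sum((s * w) ** 2 for s, w in zip(frame, window))
    return math.log(energy + 1e-10)

# Synthetic input: 0.5 s of silence followed by 0.5 s of a 440 Hz tone at 16 kHz.
SAMPLE_RATE = 16000
silence = [0.0] * (SAMPLE_RATE // 2)
tone = [math.sin(2 * math.pi * 440 * t / SAMPLE_RATE) for t in range(SAMPLE_RATE // 2)]
signal = silence + tone

frame_len = SAMPLE_RATE * 25 // 1000  # 25 ms -> 400 samples
hop_len = SAMPLE_RATE * 10 // 1000    # 10 ms -> 160 samples

frames = frame_signal(signal, frame_len, hop_len)
window = hamming(frame_len)
features = [log_energy(frame, window) for frame in frames]

print(len(frames), "frames;", "first:", round(features[0], 2), "last:", round(features[-1], 2))
```

Frames from the silent half produce a very low log energy, while frames from the tone produce a much higher one. Real front ends extend the same framing idea with a Fourier transform and mel filter banks to obtain spectrograms or MFCCs.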

For developers looking to add real-time voice features to their applications, integrating a Voice SDK can streamline the process of capturing and processing audio data for speech recognition.

Example: Using OpenAI Whisper for ASR in Python

```python
# Requires: pip install openai-whisper (plus ffmpeg on the system path)
import whisper

model = whisper.load_model("base")  # downloads the "base" checkpoint on first use
result = model.transcribe("audio_sample.wav")  # returns a dict with the transcript
print(result["text"])
```

This code loads the Whisper ASR model and transcribes an audio file, showcasing modern, developer-friendly ASR workflows. If you want to build similar workflows in Python, consider using a Python video and audio calling SDK to enable seamless audio and video communication alongside speech recognition.

How Speech Synthesis Works

Speech synthesis (TTS) generates lifelike speech from text, enabling natural machine-human communication.

- Text Preprocessing: Normalization and tokenization of input text.

- Linguistic Analysis: Identifies phonemes, syllables, and prosodic features.

- Prosody Prediction: Assigns rhythm, intonation, and emphasis to speech.

- Vocoder Synthesis: Converts the predicted acoustic representation of the text into audible speech, typically with a neural vocoder.
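As a rough illustration of the text preprocessing step above, the sketch below normalizes and tokenizes a sentence before it would be handed to linguistic analysis. The abbreviation table, the digit-by-digit number reading, and the function names are assumptions made for this example; real TTS front ends use much richer, context-sensitive rules (for instance, deciding whether "St." means "street" or "saint").

```python
import re

# Hypothetical, deliberately tiny lookup tables; real systems use far larger rule sets.
ABBREVIATIONS = {"dr.": "doctor", "st.": "street", "mr.": "mister"}
DIGITS = ["zero", "one", "two", "three", "four", "five", "six", "seven", "eight", "nine"]

def spell_digits(match):
    """Read a number digit by digit, e.g. '42' -> 'four two'."""
    return " ".join(DIGITS[int(ch)] for ch in match.group())

def normalize(text):
    """Lowercase, expand known abbreviations, and spell out digits."""
    words = [ABBREVIATIONS.get(token, token) for token in text.lower().split()]
    expanded = " ".join(words)
    return re.sub(r"[0-9]+", spell_digits, expanded)

def tokenize(text):
    """Split normalized text into word tokens, dropping punctuation."""
    return re.findall(r"[a-z']+", text)

tokens = tokenize(normalize("Dr. Smith lives at 221 Baker St."))
print(tokens)
```

The resulting word tokens are what later stages would map to phonemes and prosodic features.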

For applications that require both voice input and output, leveraging a Voice SDK can provide robust support for real-time audio streaming and synthesis.

Example: Text-to-Speech with Python (ElevenLabs API)

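```python
import requests

api_key = "<YOUR_API_KEY>"
voice_id = "<YOUR_VOICE_ID>"  # placeholder: the endpoint is addressed per voice
url = f"https://api.elevenlabs.io/v1/text-to-speech/{voice_id}"
headers = {"xi-api-key": api_key}  # ElevenLabs reads the key from this header
data = {"text": "Speech recognition and synthesis are transforming AI!"}

response = requests.post(url, headers=headers, json=data)
response.raise_for_status()  # fail loudly instead of saving an error body as audio

# The API returns MP3 audio by default, so save with an .mp3 extension
with open("output.mp3", "wb") as f:
    f.write(response.content)
```

This example demonstrates how to convert text to speech using a modern API. The request targets a specific voice, and the response body is the encoded, natural-sounding audio.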

Modern Applications of Speech Recognition and Synthesis

The integration of speech recognition and synthesis into software applications is rapidly expanding:

- Virtual Assistants: Assistants such as Google Assistant, Alexa, and Gemini rely on ASR and TTS for seamless voice interactions.

- Call Centers: Automated agents handle customer queries with real-time transcription and dynamic speech synthesis. Businesses can enhance these solutions by integrating a phone call API for reliable and scalable voice communication.

- Accessibility Tools: TTS empowers visually impaired users, while ASR supports real-time captioning.

- Healthcare: Medical transcription, hands-free EMR navigation, and patient interaction are revolutionized by speech AI.

- Gaming: NPC dialog and voice-based controls enhance immersion and accessibility. Developers can use an embed video calling SDK to add interactive audio and video features to gaming platforms.

- Generative AI: LLM-powered chatbots and multimodal AIs use speech for conversational experiences. Integrating a Voice SDK can help deliver high-quality, real-time voice interactions in these AI-driven environments.

Case Studies:

- Healthcare: Hospitals use NVIDIA Riva for real-time patient documentation.

- Customer Service: Call centers deploy Whisper for transcription and ElevenLabs for rapid TTS responses.

- Gaming: Digital avatars with expressive voices powered by generative AI models. For cross-platform development, a React Native video and audio calling SDK enables seamless integration of voice and video features in mobile games.

Building with Speech Recognition and Synthesis APIs

Numerous APIs and SDKs streamline the integration of speech recognition and synthesis into applications:

- Microsoft Azure Speech SDK: Supports ASR, TTS, speaker identification, and translation.

- Google Cloud Speech-to-Text & Text-to-Speech: Multilingual, accurate, scalable.

- OpenAI Whisper: Open-source ASR for flexible, on-prem or cloud deployment.

- ElevenLabs API: High-fidelity, expressive TTS.

- NVIDIA Riva: Real-time, GPU-accelerated speech AI for enterprise use.

- For web-based solutions, a JavaScript video and audio calling SDK offers developers a fast way to add real-time voice and video features to browser apps.

- If your application requires robust conferencing, consider a Video Calling API to enable high-quality, multi-participant audio and video calls.

- For scalable and interactive voice experiences, integrating a Voice SDK can provide advanced features such as live audio rooms and real-time audio processing.

Integration Tips

- Latency: Optimize for low-latency streaming where real-time interaction is critical.

- Multilingual Support: Leverage APIs that support a wide range of languages and dialects.

- Customization: Train or fine-tune models for domain-specific vocabulary and pronunciation.
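The latency tip can be illustrated with a small sketch: instead of uploading a whole recording, audio is cut into short fixed-duration chunks that can be sent as soon as they are captured. The 20 ms chunk size, the PCM parameters, and the `send_to_recognizer` function are assumptions for this example; the latter is a hypothetical stand-in for whatever streaming client your chosen API provides.

```python
def iter_chunks(pcm_bytes, sample_rate=16000, sample_width=2, channels=1, chunk_ms=20):
    """Yield fixed-duration chunks of raw PCM audio; the last chunk may be shorter."""
    frames_per_chunk = sample_rate * chunk_ms // 1000
    chunk_size = frames_per_chunk * sample_width * channels
    for start in range(0, len(pcm_bytes), chunk_size):
        yield pcm_bytes[start:start + chunk_size]

def send_to_recognizer(chunk):
    """Hypothetical stand-in for a streaming ASR client call; here it only reports the size."""
    return len(chunk)

# One second of 16 kHz, 16-bit mono silence as placeholder audio.
audio = bytes(16000 * 2)
sizes = [send_to_recognizer(chunk) for chunk in iter_chunks(audio)]
print(len(sizes), "chunks of", sizes[0], "bytes")
```

Smaller chunks reduce the delay before the recognizer sees new audio, at the cost of more network messages, so the chunk duration is usually tuned per application.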

Security & Privacy

- Data Encryption: Ensure voice data is encrypted in transit and at rest.

- On-Prem Deployment: For sensitive data, prefer tools (such as Whisper or NVIDIA Riva) that can run locally, so audio never leaves your own infrastructure.

- Compliance: Adhere to industry regulations (GDPR, HIPAA) when handling user speech data.

Future Trends in Speech Recognition and Synthesis

Looking ahead to 2025 and beyond, speech recognition and synthesis are rapidly advancing:

- Multilingual & Expressive Synthesis: TTS systems now produce highly natural, emotionally rich speech across languages.

- Real-Time Transcription & Speaker Diarization: Enable live multilingual conversations and accurate labeling of who spoke when.

- LLM & RAG Integration: Large Language Models and Retrieval-Augmented Generation power context-aware, conversational speech AI.

- AI Avatars & Digital Humans: Speech technologies are core to lifelike, interactive avatars in virtual environments.

As generative and multimodal AI evolve, speech recognition and synthesis will underpin the next generation of intelligent, voice-driven applications.

Conclusion

Speech recognition and synthesis are revolutionizing how humans interact with technology. These powerful, accessible tools are driving efficiency, accessibility, and innovation. For developers and enterprises, leveraging speech AI in 2025 opens the door to more natural, inclusive, and intelligent digital experiences. Now is the time to explore, build, and innovate with speech technologies.

FAQ