Introduction to Speech Recognition

Speech recognition, also known as automatic speech recognition (ASR) or speech-to-text, is a transformative technology that enables computers to interpret and transcribe spoken language into written text. In 2024, the proliferation of voice interfaces, virtual assistants, and real-time transcription tools has made speech recognition a core component of modern applications. From accessibility to productivity and automation, speech recognition is driving new user experiences and fundamentally changing how humans interact with machines. Developers leverage this technology to build smarter, more inclusive, and hands-free solutions across devices, platforms, and industries.

What is Speech Recognition?

Speech recognition is the process by which computers convert spoken language into readable text. At its core, it involves capturing audio signals, processing them, and using models to transcribe words with high accuracy. Key terms include "speech-to-text" and "automatic speech recognition" (ASR), which are often used interchangeably. The technology underpins a range of applications, from voice assistants and dictation software to real-time transcription services. For developers looking to add real-time voice features to their applications, integrating a Voice SDK can streamline the process and enhance user experience.

Difference between Speech Recognition and Voice Recognition

While speech recognition focuses on transcribing spoken words into text, voice recognition is about identifying or verifying a speaker's identity. Speech recognition answers "what was said?", whereas voice recognition answers "who said it?". Both employ sophisticated audio processing and machine learning techniques, but serve different use cases in software engineering. Solutions like a Voice SDK can support both speech and voice recognition functionalities, making them versatile for a variety of applications.

How Does Speech Recognition Work?

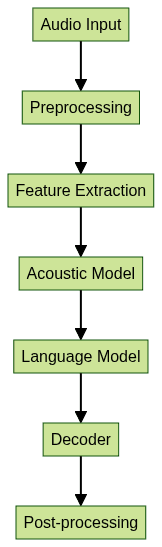

Speech recognition systems are built on a pipeline of components that transform raw audio into structured text. The process typically includes:

- Audio Input: Capturing spoken language via microphone or audio file.

- Preprocessing: Cleaning and normalizing audio (noise reduction, silence removal).

- Feature Extraction: Converting audio waveforms to numerical features (like MFCCs).

- Acoustic Model: Mapping features to phonetic units.

- Language Model: Determining word probabilities and context.

- Decoder: Generating the most likely text output.

- Post-processing: Refining output, correcting errors, and formatting text.
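To make the preprocessing stage from the list above more concrete, here is a minimal sketch in plain Python. It assumes audio is already available as a list of floating-point samples in the range -1.0 to 1.0. The function names, the 160-sample frame length, and the 0.01 energy threshold are illustrative choices, not values taken from any particular toolkit.

```python
import math

def peak_normalize(samples, target_peak=0.9):
    """Scale samples so the largest absolute value equals target_peak."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # all-silent input: nothing to scale
    gain = target_peak / peak
    return [s * gain for s in samples]

def trim_silence(samples, frame_len=160, threshold=0.01):
    """Drop leading and trailing frames whose RMS energy is below threshold."""
    frames = [samples[i:i + frame_len] for i in range(0, len(samples), frame_len)]

    def rms(frame):
        return math.sqrt(sum(s * s for s in frame) / len(frame))

    voiced = [i for i, frame in enumerate(frames) if rms(frame) >= threshold]
    if not voiced:
        return []  # no frame exceeded the threshold
    start = voiced[0] * frame_len
    end = min((voiced[-1] + 1) * frame_len, len(samples))
    return samples[start:end]

# Synthetic signal at 16 kHz: 0.1 s silence, 0.2 s of a 440 Hz tone, 0.1 s silence
sample_rate = 16000
silence = [0.0] * 1600
tone = [0.3 * math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(3200)]
signal = silence + tone + silence

cleaned = peak_normalize(trim_silence(signal))
print(len(signal), "->", len(cleaned), "samples")
```

Real systems usually add noise reduction and resampling on top of this, and a fixed energy threshold is only a rough stand-in for a proper voice activity detector.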

For developers building communication platforms, leveraging a Python video and audio calling SDK can simplify the integration of speech recognition with real-time audio and video features.

Acoustic Models and Language Models

Acoustic models interpret the relationship between audio features and phonemes (distinct units of sound). Language models, on the other hand, use statistical or neural methods to predict word sequences, ensuring that the transcribed text is both accurate and natural. Both are essential for robust speech recognition.
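One way to picture how the two models cooperate is hypothesis rescoring: the acoustic model proposes candidate transcripts with scores, and the language model shifts the ranking toward word sequences that are more plausible. The sketch below uses made-up log-probabilities and a tiny hand-written bigram table. All numbers, the `lm_weight` parameter, and the function names are assumptions for illustration only.

```python
import math

# Hypothetical acoustic log-probabilities for two candidates that sound alike
ACOUSTIC_LOGPROB = {
    ("recognize", "speech"): -4.1,
    ("wreck", "a", "nice", "beach"): -3.9,
}

# Hypothetical bigram probabilities P(word | previous word); "<s>" marks sentence start
BIGRAM_PROB = {
    ("<s>", "recognize"): 0.02,
    ("recognize", "speech"): 0.30,
    ("<s>", "wreck"): 0.001,
    ("wreck", "a"): 0.20,
    ("a", "nice"): 0.05,
    ("nice", "beach"): 0.01,
}

def lm_logprob(words, floor=1e-6):
    """Sum of log bigram probabilities; unseen bigrams get a small floor value."""
    total = 0.0
    prev = "<s>"
    for word in words:
        total += math.log(BIGRAM_PROB.get((prev, word), floor))
        prev = word
    return total

def best_hypothesis(lm_weight=0.8):
    """Combine acoustic and language model scores and return the top candidate."""
    scored = {
        hyp: acoustic + lm_weight * lm_logprob(hyp)
        for hyp, acoustic in ACOUSTIC_LOGPROB.items()
    }
    return max(scored, key=scored.get), scored

best, scores = best_hypothesis()
print(" ".join(best))
```

With `lm_weight=0.0` the acoustically stronger candidate wins. With the language model included, the more plausible word sequence ranks first, which is the effect the paragraph above describes.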

Role of Deep Learning and NLP

Deep learning has substantially improved speech recognition accuracy since around 2010. Architectures such as recurrent neural networks (RNNs), convolutional neural networks (CNNs), and transformers enable models to learn complex speech patterns. Natural language processing (NLP) further enhances understanding, enabling context-aware and semantically meaningful transcriptions. For those developing browser-based solutions, a JavaScript video and audio calling SDK can be instrumental in building seamless, interactive experiences that incorporate speech recognition.

Evolution and History of Speech Recognition

The journey of speech recognition began in the 1950s, with early systems like Bell Labs' "Audrey" recognizing digits. The 1970s saw the introduction of Hidden Markov Models (HMMs), which became foundational for acoustic modeling. In the 2000s, statistical methods and larger datasets improved performance.

The real breakthrough came post-2010, with deep learning models massively boosting accuracy. Open source frameworks (e.g., Kaldi, DeepSpeech) and public benchmarks (like LibriSpeech) democratized research and development. In 2024, speech recognition is embedded in devices from smartphones to cars, and continues to evolve rapidly. Modern communication platforms often utilize a Video Calling API to enable real-time interactions, further enhancing the capabilities of speech-enabled applications.

Real-World Applications of Speech Recognition

Speech recognition powers a wide array of applications across industries:

- Virtual Assistants: Siri, Google Assistant, and Alexa use ASR for natural user interaction.

- Transcription Services: Automated meeting notes and real-time captioning.

- Accessibility: Voice-driven controls and dictation enhance access for people with disabilities.

- Healthcare: Medical dictation, patient records, and hands-free interfaces.

- Automotive: Voice-based navigation and infotainment systems.

For businesses aiming to add calling capabilities, integrating a phone call API can provide robust voice communication features that complement speech recognition technology.

Use Case: Accessibility for People with Disabilities

ASR enables users with mobility or vision impairments to interact with technology using speech. Voice commands and dictation reduce reliance on keyboards and touchscreens, real-time transcription supports users who are deaf or hard of hearing, and ASR works alongside assistive tools such as screen readers to increase digital accessibility and inclusivity across modern software and devices. Developers can further enhance accessibility by embedding a Voice SDK into their applications, enabling hands-free and voice-driven interactions.

Building a Speech Recognition System: Step-by-Step Guide

Implementing a speech recognition system involves several key steps. Here's a practical guide for developers in 2024:

Requirements and Tools:

- Programming language: Python

- Libraries: SpeechRecognition, PyDub, librosa, TensorFlow/PyTorch (for custom models)

- Datasets: LibriSpeech, Common Voice, proprietary datasets

- Hardware: Microphone or pre-recorded audio files

For those looking to quickly integrate video and audio calling alongside speech recognition, consider using an embeddable video calling SDK to streamline development and deployment.

Step 1: Collecting and Preparing Audio Data

Gather diverse audio samples representing your target user base. Clean and label the data, ensuring balanced representation of accents, languages, and environments.

Step 2: Feature Extraction

Convert raw audio into numerical features such as Mel-frequency cepstral coefficients (MFCCs) or spectrograms. These features help models learn relevant speech patterns and phonetic structures.
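Before features such as MFCCs or spectrograms are computed, the waveform is typically cut into short overlapping frames so that each frame covers a roughly stationary slice of speech. The sketch below shows that framing step in plain Python. The 25 ms window and 10 ms hop are common choices rather than requirements, and `frame_signal` is a hypothetical helper name.

```python
def frame_signal(samples, sample_rate, frame_ms=25, hop_ms=10):
    """Split samples into overlapping frames; a trailing partial frame is dropped."""
    frame_len = int(sample_rate * frame_ms / 1000)
    hop_len = int(sample_rate * hop_ms / 1000)
    frames = []
    for start in range(0, len(samples) - frame_len + 1, hop_len):
        frames.append(samples[start:start + frame_len])
    return frames

# One second of placeholder audio at 16 kHz
samples = [0.0] * 16000
frames = frame_signal(samples, sample_rate=16000)
print(len(frames), "frames of", len(frames[0]), "samples each")
```

In practice each frame is also multiplied by a window function (for example a Hamming window) before the frequency transform. Feature extraction libraries generally handle framing and windowing internally.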

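With librosa, MFCC extraction takes only a few lines:

```python
import librosa

# Load the audio file; librosa resamples to 22,050 Hz by default
y, sr = librosa.load("audio.wav")

# Compute 13 MFCCs per frame; the result has shape (n_mfcc, n_frames)
mfcc = librosa.feature.mfcc(y=y, sr=sr, n_mfcc=13)
print(mfcc.shape)
```

Step 3: Training Acoustic and Language Models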

Train an acoustic model (e.g., using deep neural networks) to map features to phonemes. Simultaneously, build or fine-tune a language model to predict word sequences based on context.
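Training an acoustic model needs labeled audio and a deep learning framework, which is beyond a short snippet. The language model side can be illustrated more compactly. The following is a minimal sketch of a bigram language model with add-one (Laplace) smoothing, trained on a three-sentence toy corpus. The corpus and function names are illustrative assumptions, and production systems generally use far larger corpora and neural or higher-order models.

```python
from collections import Counter

def train_bigram_lm(sentences):
    """Count context words and bigrams over whitespace-tokenized sentences."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        unigrams.update(tokens[:-1])             # every token that acts as a context
        bigrams.update(zip(tokens, tokens[1:]))  # adjacent word pairs
    # Words the model can predict, including the end-of-sentence marker
    vocab = {w for s in sentences for w in s.lower().split()} | {"</s>"}
    return unigrams, bigrams, vocab

def bigram_prob(prev, word, unigrams, bigrams, vocab):
    """Add-one smoothed estimate of P(word | prev)."""
    return (bigrams[(prev, word)] + 1) / (unigrams[prev] + len(vocab))

corpus = [
    "turn on the light",
    "turn off the light",
    "turn on the radio",
]
unigrams, bigrams, vocab = train_bigram_lm(corpus)

p_on = bigram_prob("turn", "on", unigrams, bigrams, vocab)
p_radio = bigram_prob("turn", "radio", unigrams, bigrams, vocab)
print(f"P(on | turn) = {p_on:.2f}, P(radio | turn) = {p_radio:.2f}")
```

Smoothing keeps unseen word pairs from receiving zero probability, so a decoder can still consider them when the acoustic evidence is strong.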

Step 4: Decoding and Transcription

Integrate the acoustic and language models with a decoder to generate the most probable text output for a given audio input. Decoding algorithms (like beam search) optimize transcription accuracy. For scalable, real-time applications, leveraging a Voice SDK can help facilitate seamless audio processing and transcription.

Step 5: Post-processing and Evaluation

Refine the output by correcting errors, formatting text, and applying domain-specific rules. Evaluate system performance using metrics like word error rate (WER), and iterate on model improvements.
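Word error rate is the word-level edit distance between the reference transcript and the system output, divided by the number of reference words: (substitutions + deletions + insertions) / reference length. A minimal sketch in plain Python follows. It assumes simple lowercase whitespace tokenization, whereas real evaluations usually normalize punctuation and numbers first.

```python
def word_error_rate(reference, hypothesis):
    """Compute WER as word-level Levenshtein distance over reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the processed reference prefix and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # substitution or exact match
            )
        prev = curr
    return prev[-1] / len(ref)

wer = word_error_rate("turn on the kitchen light", "turn on kitchen lights")
print(f"WER: {wer:.2f}")
```

In this example one word is deleted ("the") and one is substituted ("light" becomes "lights"), giving 2 errors over 5 reference words. Because insertions count as errors, WER can exceed 1.0 when the hypothesis is much longer than the reference.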

Example: Using Python SpeechRecognition Library

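```python
import speech_recognition as sr

recognizer = sr.Recognizer()

# Read the entire audio file into memory
with sr.AudioFile("audio.wav") as source:
    audio = recognizer.record(source)

try:
    # Send the audio to the Google Web Speech API (requires internet access)
    text = recognizer.recognize_google(audio)
    print("Transcribed Text:", text)
except sr.UnknownValueError:
    print("Could not understand audio")
except sr.RequestError as e:
    # Raised when the API is unreachable or the request fails
    print("Could not request results:", e)
```

Challenges and Limitations of Speech Recognition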

Despite significant progress, speech recognition faces ongoing challenges. Accents, dialects, and background noise can reduce accuracy. Domain adaptation is difficult, as systems trained on general speech may falter in specialized contexts (e.g., medical or legal terminology). Word error rate (WER) remains a crucial metric, and minimizing it requires continual improvements in data diversity, model robustness, and algorithmic innovation.
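One common way to probe or improve robustness to background noise is to mix clean speech with noise at a controlled signal-to-noise ratio (SNR), then evaluate or train on the result. The sketch below is an illustrative assumption about how such mixing could be done in plain Python. The synthetic tone and uniform random noise stand in for real recordings, and `mix_at_snr` is a hypothetical helper.

```python
import math
import random

def mix_at_snr(speech, noise, snr_db):
    """Scale noise so speech power / noise power matches snr_db, then add it."""
    if len(noise) < len(speech):
        raise ValueError("noise must be at least as long as speech")
    noise = noise[:len(speech)]
    speech_power = sum(s * s for s in speech) / len(speech)
    noise_power = sum(n * n for n in noise) / len(noise)
    if speech_power == 0 or noise_power == 0:
        raise ValueError("speech and noise must both be non-silent")
    # SNR in dB is 10 * log10(speech_power / noise_power)
    target_noise_power = speech_power / (10 ** (snr_db / 10))
    gain = math.sqrt(target_noise_power / noise_power)
    return [s + gain * n for s, n in zip(speech, noise)]

random.seed(0)
speech = [0.5 * math.sin(2 * math.pi * 220 * n / 16000) for n in range(16000)]
noise = [random.uniform(-1.0, 1.0) for _ in range(16000)]

noisy = mix_at_snr(speech, noise, snr_db=10)
print(len(noisy), "samples mixed at 10 dB SNR")
```

Measuring WER on the same utterances at several SNR levels gives a rough picture of how quickly accuracy degrades as conditions get noisier.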

Future Trends in Speech Recognition

Looking ahead to 2025 and beyond, speech recognition will be shaped by several trends:

- Multilingual and Code-Switching Models: Seamless ASR across languages and dialects.

- Edge Computing: Running ASR locally on mobile and IoT devices for privacy and low-latency responses.

- Improved Accuracy: Larger datasets and advanced neural architectures will further reduce word error rates.

- Context Awareness: Integrating speech recognition with context from user profiles, application state, and environment for smarter transcriptions and command execution.

Conclusion

Speech recognition is a cornerstone of modern human-computer interaction. In 2024, it empowers accessibility, productivity, and automation across countless domains. By leveraging deep learning, NLP, and robust software tools, developers can build powerful ASR systems that drive innovation and inclusivity. The future holds even greater promise as technology continues to advance.


FAQ