Introduction to Open Source Speech Recognition

Open source speech recognition refers to freely available software toolkits and libraries that convert spoken language into text using transparent, modifiable codebases. As demand for voice-enabled applications and digital assistants grows, open source automatic speech recognition (ASR) has become a cornerstone for developers seeking cost-effective, privacy-respecting, and customizable solutions. Adoption has grown significantly in recent years, led by projects such as Vosk, Kaldi, and PocketSphinx. These tools let developers add offline speech recognition, multi-language support, and real-time transcription to applications ranging from mobile devices to enterprise systems.

Why Choose Open Source Speech Recognition?

Opting for open source speech recognition offers compelling advantages for developers and organizations. First, there are no licensing fees, and the community-driven development model encourages rapid innovation. Privacy is another major factor. Unlike cloud-based speech-to-text APIs, open source ASR can run entirely on-device, so audio and transcripts never have to leave your own infrastructure. Flexibility is a third benefit: open source solutions allow deep customization, letting teams adapt models to specialized vocabularies, accents, or acoustic environments. Proprietary and cloud-based solutions, by contrast, may lock users into subscription fees, require a network connection, or limit model customization. For developers who value transparency, control, and the ability to audit or contribute to the code, open source speech recognition is a strong choice. For those building voice-enabled applications, integrating a Voice SDK can further streamline development and add real-time communication features.

Key Features of Open Source Speech Recognition Tools

Open source speech recognition tools are defined by several critical features:

- Offline Functionality: Many toolkits, such as Vosk and PocketSphinx, offer true offline speech recognition, making them ideal for privacy-sensitive and low-connectivity environments.

- Multi-Language and Custom Model Support: Leading open source ASR projects support dozens of languages and allow developers to train custom language and acoustic models for specialized domains.

- Cross-Platform Compatibility: These toolkits run on a wide range of platforms, from Linux servers and Windows desktops to Android, iOS, Raspberry Pi, and other embedded systems. This flexibility opens opportunities for innovation across device types, especially when paired with a Python video and audio calling SDK for integrating speech and communication features.

Leading Open Source Speech Recognition Projects

The open source speech recognition ecosystem has matured rapidly, offering robust toolkits for diverse use cases. Let’s explore the most prominent projects as of 2025.

Vosk: Versatile Offline Speech Recognition

Vosk is a modern, lightweight speech recognition toolkit designed for offline use, which makes it well suited to privacy-focused and on-device applications. Vosk supports more than 20 languages and dialects, including English, Spanish, Chinese, and Russian. It runs on the major desktop operating systems (Linux, Windows, macOS), on mobile platforms (Android, iOS), and on the Raspberry Pi. Vosk stands out for its low resource requirements and its bindings for popular languages such as Python, Java, and Node.js. Developers choose it for rapid prototyping, cross-platform speech-to-text, embedded speech recognition, and real-time transcription. If you are adding voice features to a web app, a JavaScript video and audio calling SDK can complement Vosk when building interactive experiences.

Basic Vosk Usage in Python

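```python
import json
import queue
import sys

import sounddevice as sd
import vosk

# Path to an unpacked Vosk model directory (download one from the Vosk models page)
model = vosk.Model("model")
rec = vosk.KaldiRecognizer(model, 16000)

audio_queue = queue.Queue()

def callback(indata, frames, time, status):
    # Runs on the audio thread: only hand the raw bytes over to the main loop
    if status:
        print(status, file=sys.stderr)
    audio_queue.put(bytes(indata))

with sd.RawInputStream(samplerate=16000, blocksize=8000, dtype='int16',
                       channels=1, callback=callback):
    print('Listening... press Ctrl+C to stop')
    while True:
        data = audio_queue.get()  # blocks instead of busy-waiting
        if rec.AcceptWaveform(data):
            # A complete utterance was recognized; Result() returns a JSON string
            print(json.loads(rec.Result())["text"])
```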
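The microphone loop above handles live audio. For recorded files, a common pattern is to read a WAV file in fixed-size chunks and pass each chunk to `AcceptWaveform`. The sketch below uses only Python's standard `wave` module. It checks that a file is 16-bit mono PCM, the format the microphone examples capture, and then yields raw byte chunks. The helper name `iter_pcm_chunks` and the 4000-frame default are illustrative choices, not part of the Vosk API.

```python
import wave
from typing import BinaryIO, Iterator, Union


def iter_pcm_chunks(source: Union[str, BinaryIO], frames_per_chunk: int = 4000) -> Iterator[bytes]:
    """Yield raw audio chunks from a 16-bit mono PCM WAV file (hypothetical helper).

    Raises ValueError for other formats, because the recognizer setups in
    this article are configured for 16-bit mono PCM input.
    """
    with wave.open(source, "rb") as wf:
        if wf.getnchannels() != 1 or wf.getsampwidth() != 2 or wf.getcomptype() != "NONE":
            raise ValueError("expected a 16-bit mono PCM WAV file")
        while True:
            data = wf.readframes(frames_per_chunk)
            if not data:  # readframes() returns b"" at end of file
                break
            yield data


# Usage with Vosk (needs the vosk package and a downloaded model):
#   with wave.open("sample.wav", "rb") as wf:
#       rec = vosk.KaldiRecognizer(vosk.Model("model"), wf.getframerate())
#   for chunk in iter_pcm_chunks("sample.wav"):
#       rec.AcceptWaveform(chunk)
#   print(json.loads(rec.FinalResult())["text"])
```

The recognizer is created with the file's own sample rate. Files recorded at a different rate or in stereo should be converted first, for example with a tool such as ffmpeg.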

Kaldi: High-Performance Open Source Speech Recognition

Kaldi is a long-established choice for researchers and engineers seeking accuracy and extensibility in open source speech recognition. It supports advanced acoustic modeling, including neural network (nnet3 and chain) models, as well as speaker adaptation and decoding with custom language models. Its modular architecture makes it highly extensible for research, academic, and production use cases. Kaldi is driven primarily from the command line: its recipes are Bash scripts that call compiled C++ tools, supported by Perl and Python helpers. Major use cases include ASR research, custom enterprise transcription systems, and voice-driven analytics. For projects that need communication features alongside speech recognition, a Video Calling API can help you build scalable, real-time audio and video solutions.

Running Kaldi with a Sample Model

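```bash
# Decode sample.wav (16-bit mono, at the model's sample rate) with a chain model.
# Paths are placeholders for a prepared model directory.
online2-wav-nnet3-latgen-faster \
  --online=false --do-endpointing=false \
  --frame-subsampling-factor=3 --acoustic-scale=1.0 \
  --config=conf/online.conf \
  --word-symbol-table=words.txt \
  final.mdl HCLG.fst \
  'ark:echo utt1 utt1|' \
  'scp:echo utt1 sample.wav|' \
  'ark:/dev/null' 2> decode.log
# The 1-best transcription is written to stderr as "utt1 <words>", captured in decode.log.
```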
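To decode more than one file, Kaldi tools read their inputs through "rspecifiers". Kaldi recipes conventionally keep those inputs in a data directory containing three files, each sorted by ID:

- `wav.scp`: utterance ID followed by an audio path
- `utt2spk`: utterance ID followed by a speaker ID
- `spk2utt`: speaker ID followed by that speaker's utterance IDs

The sketch below writes those three files. It assumes each utterance is its own speaker, the simplest setup for online decoding. The function name `write_kaldi_data_dir` is hypothetical. Utterance IDs and paths must not contain spaces, because these files are whitespace-separated.

```python
from pathlib import Path
from typing import Dict


def write_kaldi_data_dir(data_dir: str, wavs: Dict[str, str]) -> None:
    """Write wav.scp, utt2spk and spk2utt for a set of WAV files (hypothetical helper).

    `wavs` maps utterance IDs to WAV paths. Each utterance is treated as its
    own speaker, so utt2spk and spk2utt are identity mappings.
    """
    out = Path(data_dir)
    out.mkdir(parents=True, exist_ok=True)
    utt_ids = sorted(wavs)  # Kaldi expects lines sorted by ID (C-locale byte order)
    for utt in utt_ids:
        if any(ch.isspace() for ch in utt + wavs[utt]):
            raise ValueError(f"whitespace in ID or path: {utt!r}")
    (out / "wav.scp").write_text("".join(f"{u} {wavs[u]}\n" for u in utt_ids))
    (out / "utt2spk").write_text("".join(f"{u} {u}\n" for u in utt_ids))
    (out / "spk2utt").write_text("".join(f"{u} {u}\n" for u in utt_ids))
```

With a directory such as `data/decode` written this way, you would pass `ark:data/decode/spk2utt` as the speaker-to-utterance input and `scp:data/decode/wav.scp` as the audio input to `online2-wav-nnet3-latgen-faster`.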

PocketSphinx: Lightweight Open Source Speech Recognition

PocketSphinx, part of the CMU Sphinx project, is designed for embedded and low-resource environments. It is a C library with Python bindings, which makes it a good fit for IoT devices, mobile apps, and hardware-constrained systems. PocketSphinx supports live audio input and runs entirely offline. It is generally less accurate than Vosk or Kaldi in complex acoustic conditions. Its minimal resource usage, however, makes it well suited to always-on voice triggers, command recognition, and simple on-device transcription. Android developers can also explore WebRTC on Android to combine real-time audio and video communication with speech recognition on mobile devices.

PocketSphinx Microphone Example (Python)

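```python
# Uses the SpeechRecognition wrapper: pip install SpeechRecognition pocketsphinx pyaudio
import speech_recognition as sr

recognizer = sr.Recognizer()
with sr.Microphone() as source:
    # Sample background noise for about 1 s to calibrate the energy threshold
    recognizer.adjust_for_ambient_noise(source, duration=1)
    print("Say something...")
    audio = recognizer.listen(source)

try:
    # recognize_sphinx() runs PocketSphinx locally; no network call is made
    print("You said: " + recognizer.recognize_sphinx(audio))
except sr.UnknownValueError:
    # Audio was captured but could not be decoded into words
    print("PocketSphinx could not understand audio")
except sr.RequestError as e:
    # Raised for installation problems, e.g. the pocketsphinx package is missing
    print("PocketSphinx error; {0}".format(e))
```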
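For the command-recognition use case mentioned above, the recognized string still has to be mapped to an action. The sketch below is recognizer-agnostic: it normalizes the transcript and looks for known command phrases on whole-word boundaries. The command table, `normalize`, and `match_command` are hypothetical names. In practice you would usually also constrain the recognizer itself, for example through the `keyword_entries` or `grammar` parameters of `recognize_sphinx`, so that it listens mainly for the phrases you care about.

```python
import re
from typing import Callable, Dict, Optional


def normalize(text: str) -> str:
    """Lowercase and drop punctuation, so 'Lights ON!' becomes 'lights on'."""
    return " ".join(re.findall(r"[a-z0-9']+", text.lower()))


def match_command(transcript: str, commands: Dict[str, Callable[[], str]]) -> Optional[str]:
    """Run the first command phrase found in the transcript and return its result.

    Longer phrases are tried first, and matching is on whole words, so
    'stop' does not fire on 'stopwatch'.
    """
    padded = f" {normalize(transcript)} "
    for phrase in sorted(commands, key=len, reverse=True):
        if f" {normalize(phrase)} " in padded:
            return commands[phrase]()
    return None


# Hypothetical command table; each action just returns a status string here.
COMMANDS: Dict[str, Callable[[], str]] = {
    "lights on": lambda: "turning lights on",
    "lights off": lambda: "turning lights off",
    "stop": lambda: "stopping",
}
```

In the microphone example, you would call `match_command(recognizer.recognize_sphinx(audio), COMMANDS)` instead of printing the raw text.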

How to Implement Open Source Speech Recognition in Your Project

Integrating open source speech recognition into your application involves several steps, from choosing the right toolkit to code-level integration. For cross-platform apps, Flutter WebRTC provides a way to add real-time audio and video communication alongside speech features on both Android and iOS.

Choosing the Right Toolkit

Begin by assessing your project requirements:

- Language Support: Does your application need multi-language or domain-specific vocabulary?

- Platform Compatibility: Will you deploy on mobile, embedded, or desktop systems?

- Resource Constraints: Are CPU, RAM, or storage limited?

- Real-Time Needs: Do you require real-time speech recognition or can you process audio in batches?
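The checklist above can be encoded as a rough first-pass heuristic. The sketch below only restates this article's own descriptions:

- PocketSphinx for tight resource budgets and simple commands.
- Kaldi when you need to train models from scratch.
- Vosk as the general-purpose offline choice.

The `Requirements` fields and the decision order are illustrative assumptions, not benchmark results. Treat the output as a starting point for your own testing.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    """Project constraints from the checklist above (field names are illustrative)."""
    train_from_scratch: bool = False    # need full acoustic/language model training
    constrained_device: bool = False    # tight CPU, RAM, or storage budget
    simple_commands_only: bool = False  # wake words or a small fixed command set


def suggest_toolkit(req: Requirements) -> str:
    """Rough first pick based on this article's descriptions, not on benchmarks."""
    if req.train_from_scratch:
        return "Kaldi"         # most complete training toolchain, highest setup cost
    if req.constrained_device and req.simple_commands_only:
        return "PocketSphinx"  # smallest footprint, lower accuracy on open speech
    return "Vosk"              # offline, multi-language, real-time general default
```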

If your application involves telephony or call features, a phone call API can help you build audio calling experiences that incorporate speech recognition.

Basic Implementation Workflow

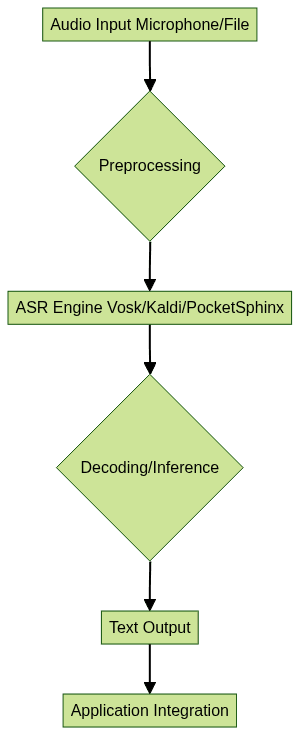

Once you’ve selected a toolkit (e.g., Vosk, Kaldi, or PocketSphinx), the typical workflow is as follows:

- Install the speech recognition library and dependencies

- Download or train the appropriate language and acoustic models

- Integrate the ASR engine into your application code

- Process audio input and handle transcription output
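The last step, handling transcription output, depends on the engine's result format. Vosk returns JSON strings with three shapes:

- `Result()` and `FinalResult()` carry a `"text"` field.
- `PartialResult()` carries a `"partial"` field.
- After `rec.SetWords(True)`, final results also include a `"result"` list of per-word objects with `word`, `start`, `end`, and `conf` keys.

The sketch below converts such strings into plain Python values. The sample JSON in the test is hand-written for illustration, not captured output, and the `Word` class and function names are hypothetical.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class Word:
    word: str
    start: float  # seconds from the start of the audio
    end: float
    conf: float   # recognizer confidence score


def text_of(raw: str) -> str:
    """Return 'text' from Result()/FinalResult(), or 'partial' from PartialResult()."""
    data = json.loads(raw)
    return data.get("text", data.get("partial", ""))


def words_of(raw: str) -> List[Word]:
    """Return per-word timings; the 'result' list is present only after rec.SetWords(True)."""
    data = json.loads(raw)
    return [Word(w["word"], w["start"], w["end"], w["conf"]) for w in data.get("result", [])]
```

Per-word timings are useful for subtitles or for highlighting low-confidence words for review.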

For richer voice experiences, consider a dedicated Voice SDK to add scalable, interactive audio features to your application.

Example: Integrating Vosk in a Python App

Here’s how you can transcribe live audio using Vosk in a simple Python application:

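```python
import json

import sounddevice as sd
import vosk

# "model" is the path to an unpacked Vosk model directory
model = vosk.Model("model")
rec = vosk.KaldiRecognizer(model, 16000)

# Blocking mode: no callback, so audio is pulled with stream.read()
with sd.RawInputStream(samplerate=16000, blocksize=8000, dtype='int16', channels=1) as stream:
    print('Listening... press Ctrl+C to stop')
    try:
        while True:
            # read() returns (buffer, overflowed); 4000 frames = 0.25 s at 16 kHz
            data, overflowed = stream.read(4000)
            if rec.AcceptWaveform(bytes(data)):
                result = json.loads(rec.Result())
                print("Recognized:", result["text"])
    except KeyboardInterrupt:
        # Flush any speech still buffered in the recognizer
        print("Final:", json.loads(rec.FinalResult())["text"])
```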

Customizing and Training Language Models

Custom models are essential when you need high accuracy for domain-specific terms, unique accents, or underrepresented languages. Vosk and Kaldi both support custom language and acoustic model training, though the workflow varies in complexity.

- Vosk: Allows you to adapt and fine-tune existing models with new vocabulary or datasets. The official Vosk documentation provides resources for model adaptation.

- Kaldi: Offers a comprehensive toolchain for building and training models from scratch using your own audio and text corpora. Extensive guides are available in the Kaldi docs.
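Short of retraining, Vosk can also restrict recognition to a fixed phrase list at runtime. `KaldiRecognizer` accepts an optional third argument: a JSON array of phrases, where `"[unk]"` can be included to absorb speech outside the list. According to the Vosk documentation, this works only with models that support runtime vocabulary changes, typically the small models, and the phrases must use words already in the model's vocabulary. The helper name `build_grammar` is hypothetical.

```python
import json
from typing import Iterable


def build_grammar(phrases: Iterable[str], allow_unknown: bool = True) -> str:
    """Return a Vosk grammar string: a JSON array of unique, lowercased phrases."""
    seen = []
    for p in phrases:
        norm = " ".join(p.lower().split())  # collapse repeated whitespace
        if norm and norm not in seen:
            seen.append(norm)
    if allow_unknown:
        seen.append("[unk]")  # lets out-of-grammar speech map to an unknown token
    return json.dumps(seen)


# Usage (needs the vosk package and a compatible small model):
#   rec = vosk.KaldiRecognizer(vosk.Model("model"), 16000,
#                              build_grammar(["turn on", "turn off", "volume up"]))
```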

For both Vosk and Kaldi, you’ll need:

- Clean, labeled audio and transcript data

- Knowledge of model training pipelines

- Compute resources for model training and validation
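A practical first check on that data is vocabulary coverage. Words in your transcripts that are missing from the model's word list cannot be recognized until they are added to the lexicon and the decoding graph is rebuilt. For Kaldi-style models, that list is the `words.txt` symbol table, with one "word integer-id" pair per line. A minimal sketch of the check follows. It case-folds both sides and splits transcripts on whitespace only, which is a simplifying assumption. The function names are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Set


def load_symbol_table(lines: Iterable[str]) -> Set[str]:
    """Read words from a words.txt-style table ('word integer-id' per line)."""
    return {line.split()[0].lower() for line in lines if line.strip()}


def find_oov(transcripts: Iterable[str], vocab: Set[str]) -> Dict[str, int]:
    """Count transcript words that are not in the vocabulary, most frequent first."""
    counts = Counter(w for t in transcripts for w in t.lower().split() if w not in vocab)
    return dict(counts.most_common())
```

You would typically call `load_symbol_table(open("words.txt"))` and review the most frequent out-of-vocabulary words first.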

If you want to prototype quickly or enhance your voice-enabled applications, a Voice SDK can accelerate development and provide advanced features out of the box.

Challenges and Considerations

While open source speech recognition is powerful, developers should be aware of certain challenges:

- Accuracy: Open source models may lag behind commercial APIs in noisy or complex audio environments.

- Noise & Environment: Robustness to ambient noise varies by toolkit and model quality.

- Language Availability: Some languages or dialects have limited official model support.

- Setup Complexity: Training custom models, especially in Kaldi, can require significant expertise and computational resources.
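To quantify the accuracy concern on your own audio, the standard metric is word error rate (WER). WER is the word-level edit distance between a reference transcript and the recognizer's output (substitutions + deletions + insertions), divided by the number of reference words. Below is a minimal dynamic-programming sketch. It does no text normalization beyond lowercasing and whitespace splitting, so punctuation and number formatting should be normalized before comparing.

```python
from typing import List


def wer(reference: str, hypothesis: str) -> float:
    """Word error rate: word-level edit distance divided by reference length."""
    ref: List[str] = reference.lower().split()
    hyp: List[str] = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edit distance between the first i-1 reference words and first j hypothesis words
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[len(hyp)] / len(ref)
```

For example, `wer("turn the lights on", "turn lights on")` is 0.25: one deleted word out of four reference words. Running the same test set through each candidate toolkit gives a like-for-like comparison.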

The Future of Open Source Speech Recognition

In 2025 and beyond, open source speech recognition is poised for rapid evolution. Key trends include AI-driven improvements in accuracy, growth of edge and on-device speech recognition for privacy, expansion to new languages and dialects, and easier workflows for training and deploying custom models. As voice interfaces proliferate, open source ASR will play a major role in democratizing speech technology.

Conclusion

Open source speech recognition empowers developers to build innovative, privacy-friendly voice applications across platforms. Explore Vosk, Kaldi, and PocketSphinx to start integrating robust, customizable ASR into your projects today. If you're ready to get started, try it for free and experience the benefits of open source speech recognition in your next project.


FAQ