Introduction to GStreamer WebRTC Technology

What is GStreamer WebRTC?

GStreamer is a powerful and versatile pipeline-based multimedia framework designed for constructing graphs of media-handling components. It allows developers to build a variety of media applications, from simple audio playback to complex video streaming systems. GStreamer supports a wide range of formats, making it an ideal choice for multimedia application development and real-time communication.

WebRTC (Web Real-Time Communication) is a technology that enables peer-to-peer communication between browsers and mobile applications. It allows for real-time audio, video, and data sharing without the need for plugins or external software. WebRTC is widely used in applications such as video conferencing, online gaming, and live streaming.

Combining GStreamer with WebRTC leverages the strengths of both technologies, providing a robust solution for real-time media streaming and processing. GStreamer handles the multimedia processing, while WebRTC facilitates seamless peer-to-peer communication. This combination is particularly useful for applications requiring advanced media processing capabilities within a WebRTC context, such as applying filters or transcoding video streams. For more information and the latest updates, you can visit the GStreamer GitHub repository.

GStreamer WebRTC opens doors to various use cases. For example, in telehealth applications, it can facilitate secure and high-quality video consultations between doctors and patients. Remote collaboration tools can benefit from GStreamer's media processing capabilities, enabling features like screen sharing with annotations. These applications highlight the power of GStreamer WebRTC in enabling seamless and efficient real-time media experiences.

GStreamer WebRTC Architecture

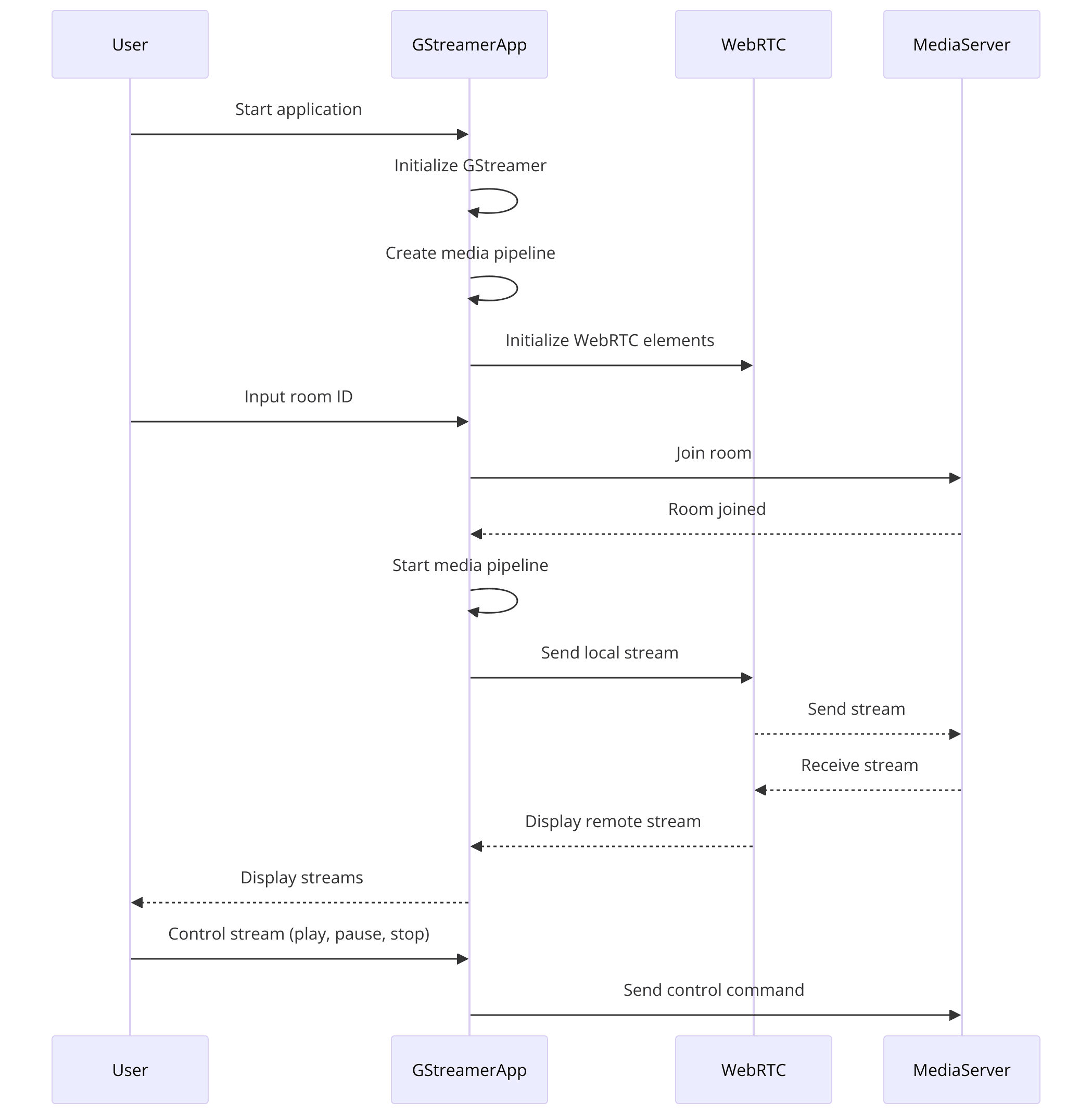

Understanding the architecture of a GStreamer WebRTC application is key to building successful applications. The following diagram illustrates how GStreamer and WebRTC interact:

Note: Replace the above fake image URL with a proper architecture diagram, showing the data flow from the media source, through the GStreamer pipeline for processing, to the WebRTC bin for real-time transmission.

This architecture diagram shows how a media source (e.g., camera, microphone) feeds data into a GStreamer pipeline. The pipeline performs various media processing tasks, such as encoding, filtering, and mixing. Finally, the processed media is passed to the WebRTC bin, which handles the real-time communication with other peers.
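The flow described above can also be written as a gst-launch style pipeline description, which is the text format that gst_parse_launch() accepts. The sketch below only builds that string in plain C. The helper name build_pipeline_description and the element name "sendrecv" are illustrative assumptions, and the RTP payloaders with distinct payload types follow the pattern commonly used with webrtcbin rather than anything this article prescribes.

```c
#include <stdio.h>
#include <stddef.h>

/*
 * Builds a textual pipeline description: source -> processing -> webrtcbin.
 * build_pipeline_description is a hypothetical helper, not a GStreamer API.
 * Returns 0 on success, -1 if the output buffer is too small.
 */
int build_pipeline_description(char *out, size_t size,
                               const char *video_source,
                               const char *audio_source) {
    int written = snprintf(
        out, size,
        "webrtcbin name=sendrecv "
        /* video branch: convert, encode as VP8, packetize as RTP */
        "%s ! videoconvert ! vp8enc deadline=1 ! rtpvp8pay pt=96 ! queue ! sendrecv. "
        /* audio branch: convert, encode as Opus, packetize as RTP */
        "%s ! audioconvert ! audioresample ! opusenc ! rtpopuspay pt=97 ! queue ! sendrecv.",
        video_source, audio_source);

    /* snprintf reports truncation by returning a length >= size */
    return (written < 0 || (size_t)written >= size) ? -1 : 0;
}
```

Calling build_pipeline_description(buf, sizeof buf, "videotestsrc", "audiotestsrc") with a buffer of a few hundred bytes should produce a description that could then be handed to gst_parse_launch() in a real application.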

Getting Started with the Code

Create a New GStreamer WebRTC App

To start building a GStreamer WebRTC application for media streaming, you first need to set up your development environment. Ensure you have the necessary tools and libraries installed on your system. This section provides a detailed guide on installing GStreamer and WebRTC.

Prerequisites and Dependencies

- GStreamer: Make sure you have GStreamer installed on your system. You can download it from the official GStreamer website.

- WebRTC Libraries: Download and install the WebRTC libraries from WebRTC.org.

Install GStreamer and WebRTC

[a] Installing GStreamer

These installation instructions cover Linux, macOS, and Windows operating systems.

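For Linux:

bash

sudo apt-get install gstreamer1.0-tools gstreamer1.0-plugins-base gstreamer1.0-plugins-good gstreamer1.0-plugins-bad gstreamer1.0-plugins-ugly gstreamer1.0-nice libgstreamer1.0-dev libgstreamer-plugins-base1.0-dev libgstreamer-plugins-bad1.0-dev libgtk-3-dev

This command installs the essential GStreamer tools and plugins, the libnice plugin that webrtcbin relies on for ICE, and the development headers needed to compile the C and GTK examples in this guide.

For macOS:

bash

brew install gstreamer gst-plugins-base gst-plugins-good gst-plugins-bad gst-plugins-ugly

Ensure you have Homebrew installed before running this command.

For Windows, download the installer from the GStreamer website. Follow the installation wizard, and ensure that the GStreamer binaries are added to your system's PATH environment variable.

[b] Installing WebRTC

Follow the instructions on WebRTC.org to download and build the WebRTC libraries for your platform. This typically involves Google's depot_tools together with the GN and Ninja build tools, plus a compiler toolchain such as Clang or Visual Studio. Note that the webrtcbin element used in this guide ships with GStreamer's gst-plugins-bad set, so the pipeline code below does not link against the standalone WebRTC libraries.

Structure of the Project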

Organizing your project directory is crucial for managing code effectively. A typical GStreamer WebRTC project structure might look like this:

gstreamer-webrtc-app/
│
├── src/
│   ├── main.c
│   ├── webrtc.c
│   └── webrtc.h
├── build/
│   └── Makefile
└── README.md

Important Files and Folders

- src/: Contains the source code for your application.

- build/: Contains build scripts and makefiles.

- README.md: Provides documentation and instructions for your project.

App Architecture

- Media Source: Captures audio and video data.

- Media Pipeline: Processes and encodes media data.

- WebRTC Bin: Manages WebRTC sessions and connections.

- Controls: Allows user interaction with the media stream.

The architecture ensures that media data flows seamlessly from the source through the pipeline and is transmitted via WebRTC to the end users.

With this setup, you're ready to start coding your GStreamer WebRTC application. The next sections will guide you through the step-by-step implementation, beginning with the main application file and setting up the necessary components.

Step 1: Get Started with main.c

Creating the main.c File

To kick off your GStreamer WebRTC application, you need to set up the main entry point of your program. This is where you'll initialize GStreamer, create the media pipeline, and set up WebRTC elements.

Setting Up the main Function

Start by creating a new file named main.c in the src directory. In this file, you'll define the main function and initialize GStreamer.

C

#include <gst/gst.h>

int main(int argc, char *argv[]) {
    gst_init(&argc, &argv);

    GMainLoop *main_loop = g_main_loop_new(NULL, FALSE);

    // Create and set up the pipeline here

    g_main_loop_run(main_loop);

    // Release the main loop before shutting GStreamer down
    g_main_loop_unref(main_loop);
    gst_deinit();
    return 0;
}

In this code snippet, you initialize GStreamer with gst_init(), create a GMainLoop to keep the application running, and call g_main_loop_run() to start the main loop. Because nothing quits the loop yet, the cleanup code after g_main_loop_run() only executes once you add a call to g_main_loop_quit().

Pipeline Creation

The next step is to create a simple GStreamer pipeline that will serve as the backbone of your application. This pipeline will handle the media flow and processing.

Code Snippet for Creating a Simple GStreamer Pipeline

C

#include <gst/gst.h>

int main(int argc, char *argv[]) {
    gst_init(&argc, &argv);

    GMainLoop *main_loop = g_main_loop_new(NULL, FALSE);

    GstElement *pipeline = gst_pipeline_new("webrtc-pipeline");
    GstElement *source = gst_element_factory_make("videotestsrc", "source");
    GstElement *sink = gst_element_factory_make("autovideosink", "sink");

    if (!pipeline || !source || !sink) {
        g_printerr("Failed to create elements\n");
        return -1;
    }

    gst_bin_add_many(GST_BIN(pipeline), source, sink, NULL);
    if (gst_element_link(source, sink) != TRUE) {
        g_printerr("Failed to link elements\n");
        gst_object_unref(pipeline);
        return -1;
    }

    gst_element_set_state(pipeline, GST_STATE_PLAYING);

    g_main_loop_run(main_loop);

    gst_element_set_state(pipeline, GST_STATE_NULL);
    gst_object_unref(pipeline);
    g_main_loop_unref(main_loop);
    gst_deinit();

    return 0;
}

In this snippet, you create a pipeline named webrtc-pipeline, add a video source (videotestsrc), and a video sink (autovideosink). The elements are linked together to form a complete pipeline. The pipeline is set to the PLAYING state, and the main loop runs to keep the application active.

This basic setup lays the groundwork for adding WebRTC components and further developing your application in the next steps.

Step 2: Wireframe All the Components

Setting Up the Components

In this step, you'll extend the basic pipeline created in main.c to include WebRTC elements. This will enable the application to handle real-time media streaming.

Adding and Linking WebRTC Elements to the Pipeline

To start, you need to add the necessary WebRTC elements to your pipeline. This typically includes elements for encoding and decoding video and audio, as well as the WebRTC bin that manages the WebRTC connections.

Code Snippets for Components

Adding WebRTC Bin

C

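GstElement *webrtcbin = gst_element_factory_make("webrtcbin", "webrtcbin");
if (!webrtcbin) {
    g_printerr("Failed to create webrtcbin\n");
    return -1;
}

Adding Video Encoder

C

GstElement *videoconvert = gst_element_factory_make("videoconvert", "videoconvert");
GstElement *vp8enc = gst_element_factory_make("vp8enc", "vp8enc");
/* webrtcbin accepts RTP packets, so the encoded video needs a payloader */
GstElement *rtpvp8pay = gst_element_factory_make("rtpvp8pay", "rtpvp8pay");
if (!videoconvert || !vp8enc || !rtpvp8pay) {
    g_printerr("Failed to create video elements\n");
    return -1;
}

Adding Audio Encoder

C

/* A test tone stands in for a microphone, mirroring videotestsrc on the video side */
GstElement *audiosrc = gst_element_factory_make("audiotestsrc", "audiosrc");
GstElement *audioconvert = gst_element_factory_make("audioconvert", "audioconvert");
GstElement *opusenc = gst_element_factory_make("opusenc", "opusenc");
GstElement *rtpopuspay = gst_element_factory_make("rtpopuspay", "rtpopuspay");
if (!audiosrc || !audioconvert || !opusenc || !rtpopuspay) {
    g_printerr("Failed to create audio elements\n");
    return -1;
}

Linking the Components

Now, link these components into the pipeline. Ensure the data flows from the source, through the encoders and RTP payloaders, and into the WebRTC bin. In this step the video branch ends in webrtcbin instead of the autovideosink used in Step 1.

C

gst_bin_add_many(GST_BIN(pipeline), source, videoconvert, vp8enc, rtpvp8pay, webrtcbin, NULL);
if (!gst_element_link_many(source, videoconvert, vp8enc, rtpvp8pay, webrtcbin, NULL)) {
    g_printerr("Failed to link video elements\n");
    gst_object_unref(pipeline);
    return -1;
}

/* webrtcbin is already in the pipeline, so only the audio elements are added here */
gst_bin_add_many(GST_BIN(pipeline), audiosrc, audioconvert, opusenc, rtpopuspay, NULL);
if (!gst_element_link_many(audiosrc, audioconvert, opusenc, rtpopuspay, webrtcbin, NULL)) {
    g_printerr("Failed to link audio elements\n");
    gst_object_unref(pipeline);
    return -1;
}

Explanation of the Role of Each Component

- webrtcbin: Manages the WebRTC peer connection, including SDP negotiation, ICE, and encrypted media transport. Delivering the resulting SDP and ICE candidates to the remote peer is left to your own signaling channel.

- videoconvert: Converts video formats to ensure compatibility with the encoder.

- vp8enc: Encodes video streams using the VP8 codec.

- rtpvp8pay: Packs the encoded VP8 video into RTP packets, the form in which webrtcbin expects media.

- audiotestsrc: Generates a test tone so the audio branch has a source.

- audioconvert: Converts audio formats for compatibility with the encoder.

- opusenc: Encodes audio streams using the Opus codec.

- rtpopuspay: Packs the encoded Opus audio into RTP packets.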

By adding these components and linking them, you've prepared the pipeline to handle WebRTC streams. The next step involves designing the join screen, which provides a user interface for connecting to WebRTC sessions.

Step 3: Implement Join Screen

Designing the Join Screen

The join screen is the user interface where users can enter connection details and initiate the WebRTC session. It typically includes fields for entering a room ID or URL and buttons to join or leave the session.

Basic Layout and Elements Required

- Text Input Field: Allows users to input the room ID or connection URL.

- Join Button: Initiates the WebRTC connection using the provided details.

- Leave Button: Ends the WebRTC session and disconnects the user.

Code for Join Screen

Creating the Join Screen UI

For simplicity, we'll use GTK, a multi-platform toolkit for creating graphical user interfaces, to design the join screen.

Code Snippet for Creating the Join Screen

C

#include <gtk/gtk.h>

static void on_join_button_clicked(GtkWidget *widget, gpointer data) {
    const gchar *room_id = gtk_entry_get_text(GTK_ENTRY(data));
    g_print("Joining room: %s\n", room_id);
    // Implement WebRTC connection logic here
}

static void on_leave_button_clicked(GtkWidget *widget, gpointer data) {
    g_print("Leaving room\n");
    // Implement WebRTC disconnection logic here
}

int main(int argc, char *argv[]) {
    gtk_init(&argc, &argv);

    GtkWidget *window = gtk_window_new(GTK_WINDOW_TOPLEVEL);
    gtk_window_set_title(GTK_WINDOW(window), "GStreamer WebRTC Join Screen");
    gtk_window_set_default_size(GTK_WINDOW(window), 300, 200);

    GtkWidget *vbox = gtk_box_new(GTK_ORIENTATION_VERTICAL, 5);
    gtk_container_add(GTK_CONTAINER(window), vbox);

    GtkWidget *entry = gtk_entry_new();
    gtk_box_pack_start(GTK_BOX(vbox), entry, TRUE, TRUE, 0);

    GtkWidget *join_button = gtk_button_new_with_label("Join");
    g_signal_connect(join_button, "clicked", G_CALLBACK(on_join_button_clicked), entry);
    gtk_box_pack_start(GTK_BOX(vbox), join_button, TRUE, TRUE, 0);

    GtkWidget *leave_button = gtk_button_new_with_label("Leave");
    g_signal_connect(leave_button, "clicked", G_CALLBACK(on_leave_button_clicked), NULL);
    gtk_box_pack_start(GTK_BOX(vbox), leave_button, TRUE, TRUE, 0);

    g_signal_connect(window, "destroy", G_CALLBACK(gtk_main_quit), NULL);

    gtk_widget_show_all(window);
    gtk_main();

    return 0;
}

Handling User Input and Events

- on_join_button_clicked: Captures the room ID from the input field and initiates the WebRTC connection logic.

- on_leave_button_clicked: Ends the WebRTC session when the leave button is clicked.

By implementing this join screen, you provide a simple and interactive interface for users to connect to WebRTC sessions, making the application more user-friendly. The next step will focus on implementing the control functionalities.
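Since the room ID comes straight from a text field, it is worth checking it before passing it to any connection logic. The sketch below shows one possible check in plain C. The function name is_valid_room_id, the 64-character limit, and the allowed character set are all assumptions made for illustration; the rules your signaling server actually enforces may differ.

```c
#include <ctype.h>
#include <stdbool.h>
#include <stddef.h>

#define MAX_ROOM_ID_LENGTH 64 /* assumed limit, adjust to your signaling server */

/*
 * Hypothetical validation rule: a room ID is non-empty, at most
 * MAX_ROOM_ID_LENGTH characters, and uses only letters, digits, '-' and '_'.
 */
bool is_valid_room_id(const char *room_id) {
    if (room_id == NULL || room_id[0] == '\0') {
        return false;
    }
    size_t length = 0;
    for (const char *p = room_id; *p != '\0'; ++p) {
        unsigned char c = (unsigned char)*p;
        if (!isalnum(c) && c != '-' && c != '_') {
            return false; /* reject spaces, punctuation, and non-ASCII bytes */
        }
        if (++length > MAX_ROOM_ID_LENGTH) {
            return false;
        }
    }
    return true;
}
```

In on_join_button_clicked, the value returned by gtk_entry_get_text() could be passed to is_valid_room_id() and the join attempt skipped when it returns false.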

Step 4: Implement Controls

Setting Up Controls

In this step, you'll add basic control functionalities to your GStreamer WebRTC application, such as play, pause, and stop. These controls will allow users to manage the media stream during the WebRTC session.

Implementing Basic Controls

- Play Button: Starts or resumes the media stream.

- Pause Button: Pauses the media stream.

- Stop Button: Stops the media stream and resets the pipeline.

Code Snippets for Controls

Adding Control Buttons to the UI

Expand your existing GTK-based join screen to include buttons for play, pause, and stop.

C

#include <gtk/gtk.h>
#include <gst/gst.h>

GstElement *pipeline;

/* Join and leave handlers carried over from Step 3 so this file compiles on its own */
static void on_join_button_clicked(GtkWidget *widget, gpointer data) {
    const gchar *room_id = gtk_entry_get_text(GTK_ENTRY(data));
    g_print("Joining room: %s\n", room_id);
    // Implement WebRTC connection logic here
}

static void on_leave_button_clicked(GtkWidget *widget, gpointer data) {
    g_print("Leaving room\n");
    // Implement WebRTC disconnection logic here
}

static void on_play_button_clicked(GtkWidget *widget, gpointer data) {
    gst_element_set_state(pipeline, GST_STATE_PLAYING);
    g_print("Stream started\n");
}

static void on_pause_button_clicked(GtkWidget *widget, gpointer data) {
    gst_element_set_state(pipeline, GST_STATE_PAUSED);
    g_print("Stream paused\n");
}

static void on_stop_button_clicked(GtkWidget *widget, gpointer data) {
    gst_element_set_state(pipeline, GST_STATE_READY);
    g_print("Stream stopped\n");
}

int main(int argc, char *argv[]) {
    gst_init(&argc, &argv);
    gtk_init(&argc, &argv);

    pipeline = gst_pipeline_new("webrtc-pipeline");

    GtkWidget *window = gtk_window_new(GTK_WINDOW_TOPLEVEL);
    gtk_window_set_title(GTK_WINDOW(window), "GStreamer WebRTC Controls");
    gtk_window_set_default_size(GTK_WINDOW(window), 300, 200);

    GtkWidget *vbox = gtk_box_new(GTK_ORIENTATION_VERTICAL, 5);
    gtk_container_add(GTK_CONTAINER(window), vbox);

    GtkWidget *entry = gtk_entry_new();
    gtk_box_pack_start(GTK_BOX(vbox), entry, TRUE, TRUE, 0);

    GtkWidget *join_button = gtk_button_new_with_label("Join");
    g_signal_connect(join_button, "clicked", G_CALLBACK(on_join_button_clicked), entry);
    gtk_box_pack_start(GTK_BOX(vbox), join_button, TRUE, TRUE, 0);

    GtkWidget *leave_button = gtk_button_new_with_label("Leave");
    g_signal_connect(leave_button, "clicked", G_CALLBACK(on_leave_button_clicked), NULL);
    gtk_box_pack_start(GTK_BOX(vbox), leave_button, TRUE, TRUE, 0);

    GtkWidget *play_button = gtk_button_new_with_label("Play");
    g_signal_connect(play_button, "clicked", G_CALLBACK(on_play_button_clicked), NULL);
    gtk_box_pack_start(GTK_BOX(vbox), play_button, TRUE, TRUE, 0);

    GtkWidget *pause_button = gtk_button_new_with_label("Pause");
    g_signal_connect(pause_button, "clicked", G_CALLBACK(on_pause_button_clicked), NULL);
    gtk_box_pack_start(GTK_BOX(vbox), pause_button, TRUE, TRUE, 0);

    GtkWidget *stop_button = gtk_button_new_with_label("Stop");
    g_signal_connect(stop_button, "clicked", G_CALLBACK(on_stop_button_clicked), NULL);
    gtk_box_pack_start(GTK_BOX(vbox), stop_button, TRUE, TRUE, 0);

    g_signal_connect(window, "destroy", G_CALLBACK(gtk_main_quit), NULL);

    gtk_widget_show_all(window);
    gtk_main();

    /* Return the pipeline to NULL before releasing it */
    gst_element_set_state(pipeline, GST_STATE_NULL);
    gst_object_unref(pipeline);
    return 0;
}

Integrating Controls with the GStreamer Pipeline

- on_play_button_clicked: Sets the pipeline state to PLAYING to start or resume the stream.

- on_pause_button_clicked: Sets the pipeline state to PAUSED to pause the stream.

- on_stop_button_clicked: Sets the pipeline state to READY to stop the stream and reset the pipeline.

These controls provide users with basic functionality to manage the media stream during the WebRTC session, enhancing the user experience and making the application more interactive. The next step involves creating the participant view to display active participants in the session.
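GStreamer moves an element through its states in order (NULL, READY, PAUSED, PLAYING), one step at a time, so a single gst_element_set_state() call may pass through intermediate states. The plain-C sketch below models that ordering to show what each button implies. PipelineState and state_path are stand-ins invented for this illustration, not GStreamer types or functions.

```c
#include <stddef.h>

/* Mirrors the order of GStreamer's states; this enum is only a stand-in. */
typedef enum {
    STATE_NULL = 1,
    STATE_READY = 2,
    STATE_PAUSED = 3,
    STATE_PLAYING = 4
} PipelineState;

/*
 * Writes the states visited when moving from `from` to `to`, excluding the
 * starting state. Returns the number of states written (at most `max`).
 */
size_t state_path(PipelineState from, PipelineState to,
                  PipelineState *path, size_t max) {
    size_t count = 0;
    int step = (to > from) ? 1 : -1; /* move up or down the state ladder */
    int current = (int)from;
    while (current != (int)to && count < max) {
        current += step;
        path[count++] = (PipelineState)current;
    }
    return count;
}
```

For example, the Stop button's request to go from PLAYING to READY visits PAUSED first and then READY, while starting a fresh pipeline from NULL to PLAYING visits READY, PAUSED, and PLAYING.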

Troubleshooting GStreamer WebRTC Applications

When building GStreamer WebRTC applications, you might encounter several common issues. Here are some troubleshooting tips to help you resolve them:

- Installation Issues:

  - Problem: GStreamer or WebRTC libraries not found.

  - Solution: Double-check that you've followed the installation instructions correctly. Ensure that the GStreamer binaries are added to your system's PATH environment variable. For WebRTC libraries, verify that the build process completed successfully and that the libraries are installed in the correct location.

- Pipeline Problems:

  - Problem: Pipeline fails to create or link elements.

  - Solution: Examine the error messages closely. Ensure that all the required plugins are installed. Verify that the element names are correct and that the elements are compatible with each other. Use gst-inspect-1.0 to check the capabilities of each element.

- WebRTC Connectivity Challenges:

  - Problem: Unable to establish a WebRTC connection.

  - Solution: Check your firewall settings to ensure that WebRTC traffic is not blocked. Verify that the signaling server is working correctly and that the ICE candidates are being exchanged successfully. Use a tool like Wireshark to capture and analyze the network traffic.

Security Considerations for GStreamer WebRTC

When developing GStreamer WebRTC applications, security should be a primary concern. Here are some key security considerations:

- Encryption: Ensure that all WebRTC traffic stays encrypted. Media travels as SRTP with keys negotiated over DTLS (Datagram Transport Layer Security), and data channels run over DTLS. This protects the media streams from eavesdropping and tampering.

- Authentication: Implement robust authentication mechanisms to verify the identity of the users. This prevents unauthorized access to the WebRTC sessions.

- Signaling Security: Secure the signaling channel used to exchange WebRTC session information. Use HTTPS or secure WebSockets (WSS) to encrypt the signaling traffic and protect against man-in-the-middle attacks.

- Permissions: Limit the permissions granted to the GStreamer WebRTC application. Only request the necessary permissions for accessing the camera, microphone, and network.
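One small way to act on the signaling advice is to refuse to connect when the configured signaling URL is not encrypted. The sketch below is a simple prefix check in plain C. The function name is_secure_signaling_url is an assumption for this example, and the check only looks at the scheme in lowercase; it does not parse or otherwise validate the URL.

```c
#include <stdbool.h>
#include <string.h>

/*
 * Returns true when the URL starts with an encrypted scheme (https:// or
 * wss://) and has something after it. Comparison is case-sensitive, so
 * "WSS://..." is rejected by this simplified check.
 */
bool is_secure_signaling_url(const char *url) {
    static const char *const secure_schemes[] = { "https://", "wss://" };
    if (url == NULL) {
        return false;
    }
    for (size_t i = 0; i < sizeof secure_schemes / sizeof secure_schemes[0]; ++i) {
        size_t n = strlen(secure_schemes[i]);
        if (strncmp(url, secure_schemes[i], n) == 0 && url[n] != '\0') {
            return true;
        }
    }
    return false;
}
```

A join handler could call this with the signaling server address and report an error instead of connecting when it returns false.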

Frequently Asked Questions (FAQs)

Q: How do I build a GStreamer WebRTC application for video conferencing?

A: Follow the steps outlined in this blog post, ensuring that you have correctly installed GStreamer and the WebRTC libraries. Pay close attention to the pipeline creation and component linking steps.

Q: Can I use GStreamer WebRTC for live streaming?

A: Yes, GStreamer WebRTC is well-suited for live streaming applications. You can use GStreamer to capture and process the media stream, and WebRTC to transmit it to the viewers in real-time.

Q: What are the advantages of using GStreamer with WebRTC?

A: GStreamer provides a powerful and flexible framework for media processing, while WebRTC enables seamless real-time communication. Combining these technologies allows you to build robust and feature-rich real-time media applications.

We encourage you to try out the examples provided in this blog and provide feedback. Your input is valuable in improving this guide and making GStreamer WebRTC technology more accessible to developers.

Step 5: Implement Participant View

Creating the Participant View

The participant view is crucial for displaying the streams of all active participants in a WebRTC session. This allows users to see and interact with each other in real-time.

Layout Design for Displaying Participants

To implement the participant view, you need a layout that can dynamically display multiple video streams. A grid layout is often a suitable choice for this purpose.

Code for Participant View

Expanding the GTK-based UI

Enhance the existing GTK interface to include a drawing area or a grid where video streams can be displayed.

C

#include <gtk/gtk.h>
#include <gst/gst.h>

GstElement *pipeline;

static void on_join_button_clicked(GtkWidget *widget, gpointer data) {
    const gchar *room_id = gtk_entry_get_text(GTK_ENTRY(data));
    g_print("Joining room: %s\n", room_id);
    // Implement WebRTC connection logic here
}

static void on_leave_button_clicked(GtkWidget *widget, gpointer data) {
    g_print("Leaving room\n");
    // Implement WebRTC disconnection logic here
}

static void on_play_button_clicked(GtkWidget *widget, gpointer data) {
    gst_element_set_state(pipeline, GST_STATE_PLAYING);
    g_print("Stream started\n");
}

static void on_pause_button_clicked(GtkWidget *widget, gpointer data) {
    gst_element_set_state(pipeline, GST_STATE_PAUSED);
    g_print("Stream paused\n");
}

static void on_stop_button_clicked(GtkWidget *widget, gpointer data) {
    gst_element_set_state(pipeline, GST_STATE_READY);
    g_print("Stream stopped\n");
}

int main(int argc, char *argv[]) {
    gst_init(&argc, &argv);
    gtk_init(&argc, &argv);

    pipeline = gst_pipeline_new("webrtc-pipeline");

    GtkWidget *window = gtk_window_new(GTK_WINDOW_TOPLEVEL);
    gtk_window_set_title(GTK_WINDOW(window), "GStreamer WebRTC Participant View");
    gtk_window_set_default_size(GTK_WINDOW(window), 600, 400);

    GtkWidget *vbox = gtk_box_new(GTK_ORIENTATION_VERTICAL, 5);
    gtk_container_add(GTK_CONTAINER(window), vbox);

    GtkWidget *entry = gtk_entry_new();
    gtk_box_pack_start(GTK_BOX(vbox), entry, FALSE, FALSE, 0);

    GtkWidget *join_button = gtk_button_new_with_label("Join");
    g_signal_connect(join_button, "clicked", G_CALLBACK(on_join_button_clicked), entry);
    gtk_box_pack_start(GTK_BOX(vbox), join_button, FALSE, FALSE, 0);

    GtkWidget *leave_button = gtk_button_new_with_label("Leave");
    g_signal_connect(leave_button, "clicked", G_CALLBACK(on_leave_button_clicked), NULL);
    gtk_box_pack_start(GTK_BOX(vbox), leave_button, FALSE, FALSE, 0);

    GtkWidget *play_button = gtk_button_new_with_label("Play");
    g_signal_connect(play_button, "clicked", G_CALLBACK(on_play_button_clicked), NULL);
    gtk_box_pack_start(GTK_BOX(vbox), play_button, FALSE, FALSE, 0);

    GtkWidget *pause_button = gtk_button_new_with_label("Pause");
    g_signal_connect(pause_button, "clicked", G_CALLBACK(on_pause_button_clicked), NULL);
    gtk_box_pack_start(GTK_BOX(vbox), pause_button, FALSE, FALSE, 0);

    GtkWidget *stop_button = gtk_button_new_with_label("Stop");
    g_signal_connect(stop_button, "clicked", G_CALLBACK(on_stop_button_clicked), NULL);
    gtk_box_pack_start(GTK_BOX(vbox), stop_button, FALSE, FALSE, 0);

    GtkWidget *grid = gtk_grid_new();
    gtk_box_pack_start(GTK_BOX(vbox), grid, TRUE, TRUE, 0);

    // Function to add video streams to the grid will be implemented here

    g_signal_connect(window, "destroy", G_CALLBACK(gtk_main_quit), NULL);

    gtk_widget_show_all(window);
    gtk_main();

    gst_object_unref(pipeline);
    return 0;
}

Managing Multiple Streams

Implement a function to add video streams to the grid dynamically. This function will be called whenever a new participant joins the session.

C

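#include <gtk/gtk.h>
#include <gdk/gdkx.h>                 /* GDK_WINDOW_XID, available on X11 only */
#include <gst/video/videooverlay.h>   /* GstVideoOverlay interface */

void add_video_stream(GtkWidget *grid, GstElement *video_sink, int participant_id) {
    GtkWidget *drawing_area = gtk_drawing_area_new();
    gtk_widget_set_size_request(drawing_area, 160, 120);

    /* Four streams per row: column = id % 4, row = id / 4 */
    gtk_grid_attach(GTK_GRID(grid), drawing_area, participant_id % 4, participant_id / 4, 1, 1);
    gtk_widget_show(drawing_area);

    /* The drawing area needs a native window before its handle can be read */
    gtk_widget_realize(drawing_area);

    // Connect the video_sink to the drawing_area for rendering the stream
    gst_video_overlay_set_window_handle(GST_VIDEO_OVERLAY(video_sink), GDK_WINDOW_XID(gtk_widget_get_window(drawing_area)));
}

add_video_stream: This function adds a drawing area for each video stream to the grid and connects the video sink to the drawing area for rendering the video. As written, it relies on GDK_WINDOW_XID and therefore targets X11; other windowing systems need their own way of obtaining the window handle.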

By implementing the participant view, your application can display multiple video streams, allowing users to see all active participants in the WebRTC session. This step enhances the interactivity and usability of your GStreamer WebRTC application.
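The grid placement used in add_video_stream is plain integer arithmetic, which can be checked on its own without GTK. The sketch below isolates it. GridCell, GRID_COLUMNS, and grid_cell_for_participant are names introduced here for illustration, and rejecting negative IDs is an added assumption, since the remainder and quotient of a negative index would produce negative grid coordinates.

```c
#include <stdbool.h>
#include <stddef.h>

#define GRID_COLUMNS 4 /* matches the participant_id % 4 layout used above */

typedef struct {
    int column;
    int row;
} GridCell;

/*
 * Maps a zero-based participant index to its grid cell, filling rows left to
 * right. Returns false (and leaves *cell untouched) for invalid input.
 */
bool grid_cell_for_participant(int participant_id, GridCell *cell) {
    if (participant_id < 0 || cell == NULL) {
        return false;
    }
    cell->column = participant_id % GRID_COLUMNS;
    cell->row = participant_id / GRID_COLUMNS;
    return true;
}
```

With four columns, participant 0 lands in column 0 of row 0, participant 5 in column 1 of row 1, and participant 7 in column 3 of row 1.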

Step 6: Run Your Code Now

Compiling and Running the Application

Now that you have implemented the main components of your GStreamer WebRTC application, it’s time to compile and run the code. Follow these steps to ensure your application is ready for use.

Steps to Compile the Code

[a] Create a Makefile

Create a Makefile in the build directory to simplify the compilation process.

CC=gcc
CFLAGS=`pkg-config --cflags gstreamer-1.0 gstreamer-video-1.0 gtk+-3.0`
LIBS=`pkg-config --libs gstreamer-1.0 gstreamer-video-1.0 gtk+-3.0`
SOURCES=../src/main.c
TARGET=gstreamer-webrtc

$(TARGET): $(SOURCES)
	$(CC) -o $(TARGET) $(SOURCES) $(CFLAGS) $(LIBS)

The recipe line under $(TARGET) must begin with a tab character. The gstreamer-video-1.0 module is listed because the participant view uses the GstVideoOverlay interface.

[b] Compile the Code

Navigate to the build directory and run the make command.

bash

cd build
make

[c] Running the Application

Once the code is compiled, you can run the application:

bash

./gstreamer-webrtc

This command starts your GStreamer WebRTC application, launching the UI with the join screen and controls.

Troubleshooting Common Issues

- Missing Dependencies: Ensure all GStreamer and GTK dependencies are installed.

- Pipeline Errors: Check for errors in the pipeline setup and linking of elements.

By following these steps, you can successfully compile and run your GStreamer WebRTC application, allowing you to test and use the functionalities implemented so far.

Conclusion

In this article, we explored the integration of GStreamer with WebRTC, providing a robust solution for real-time media streaming and processing. Starting with setting up the development environment, we progressed through creating the main application, adding essential components, implementing the join screen, controls, and participant view, and finally running the application. This comprehensive guide, complete with practical code snippets and detailed explanations, aims to equip you with the knowledge needed to develop your own GStreamer WebRTC applications.
