Introduction to Gesture Recognition

Gesture recognition is a transformative technology in modern human-computer interaction (HCI), enabling computers to interpret and respond to human gestures. By leveraging machine learning, computer vision, and advanced signal processing, gesture recognition bridges the gap between humans and digital devices through intuitive, touchless interfaces. From manipulating virtual environments to controlling smart devices, gesture recognition has become a cornerstone of seamless, natural user experiences across industries in 2025.

What is Gesture Recognition?

At its core, gesture recognition refers to the computational process of detecting, tracking, and interpreting human gestures. Gestures can be as simple as a wave or as complex as a sequence of hand movements, and are typically classified into three categories:

- Hand gestures: Finger movements, hand poses, and dynamic motions

- Body gestures: Full-body or upper-body postures and actions

- Facial gestures: Expressions, blinks, and head movements

Gesture recognition enables natural user interfaces (NUIs) that respond to physical movements, reducing the need for traditional input devices. This technology is essential for creating immersive, accessible, and intuitive systems in gaming, AR/VR, healthcare, and industrial automation. For developers building interactive applications, integrating a Video Calling API can further enhance user engagement by enabling real-time communication alongside gesture-based controls.

How Gesture Recognition Works

Tracking and Interpreting Gestures

Gesture recognition systems rely on accurately tracking the position, orientation, and motion of body parts. This process utilizes computer vision algorithms and various sensors (e.g., cameras, inertial measurement units) to capture movement data. Key steps include:

- Detection: Locating relevant body parts (e.g., hands, face) in video streams

- Tracking: Following their movement and changes over time

- Feature Extraction: Deriving key points, angles, or trajectories for interpretation
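As a rough illustration of the feature-extraction step, the sketch below derives one common feature, the angle at a joint, from three 2D key points. The function name `joint_angle` and the sample coordinates are hypothetical; in a real system the points would come from whatever detector and tracker the pipeline uses.

```python
import math

def joint_angle(a, b, c):
    """Return the angle in degrees at point b between segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("key points must be distinct from the joint")
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to guard against tiny floating-point overshoot before acos
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))

# Hypothetical key points: a fully extended joint and a right-angle bend
straight = joint_angle((0.0, 0.0), (1.0, 0.0), (2.0, 0.0))
bent = joint_angle((0.0, 0.0), (1.0, 0.0), (1.0, 1.0))
```

Angles like these are unaffected by where the hand sits in the frame, which is one reason they are often preferred over raw coordinates as classifier input.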

Sensors such as depth cameras, LiDAR, and even Wi-Fi signals enable device-free gesture recognition, while wearables like data gloves provide highly accurate readings for hand gesture recognition. Developers working with cross-platform solutions may also consider frameworks like Flutter WebRTC to facilitate real-time video and gesture data transmission in their applications.

Gesture Recognition Algorithms

Gesture recognition algorithms leverage machine learning, deep learning, and signal processing techniques to classify gestures. Traditional approaches utilize handcrafted features and classifiers (e.g., SVM, HMM), while modern systems employ deep neural networks for robust performance.

Below is a Python example using MediaPipe to detect hands in a webcam stream and draw their landmarks, the first stage of a hand gesture recognition pipeline:

```python
import cv2
import mediapipe as mp

mp_hands = mp.solutions.hands
mp_drawing = mp.solutions.drawing_utils
hands = mp_hands.Hands()  # default settings: video mode, up to two hands

cap = cv2.VideoCapture(0)  # open the default webcam
while cap.isOpened():
    ret, frame = cap.read()
    if not ret:
        break
    # MediaPipe expects RGB input; OpenCV delivers frames in BGR order
    frame_rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
    results = hands.process(frame_rgb)
    if results.multi_hand_landmarks:
        # Draw the 21 landmarks and their connections for each detected hand
        for hand_landmarks in results.multi_hand_landmarks:
            mp_drawing.draw_landmarks(frame, hand_landmarks, mp_hands.HAND_CONNECTIONS)
    cv2.imshow('Hand Gesture Recognition', frame)
    if cv2.waitKey(1) & 0xFF == ord('q'):  # press q to quit
        break

hands.close()
cap.release()
cv2.destroyAllWindows()
```
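Landmark coordinates produced frame by frame tend to jitter, and a simple low-pass filter is one common way to steady them before classification. The sketch below applies an exponential moving average to a list of (x, y) points. The class name `LandmarkSmoother` and the `alpha` value are illustrative assumptions, not part of MediaPipe.

```python
class LandmarkSmoother:
    """Exponential moving average over a list of (x, y) landmark positions."""

    def __init__(self, alpha=0.5):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha  # higher alpha follows new data faster, smooths less
        self._state = None

    def update(self, points):
        """Blend the new points into the running estimate and return it."""
        if self._state is None:
            # First frame: nothing to blend with yet
            self._state = [tuple(p) for p in points]
        else:
            a = self.alpha
            self._state = [
                (a * x + (1 - a) * sx, a * y + (1 - a) * sy)
                for (x, y), (sx, sy) in zip(points, self._state)
            ]
        return self._state

smoother = LandmarkSmoother(alpha=0.5)
first = smoother.update([(0.0, 0.0)])
second = smoother.update([(1.0, 1.0)])  # moves halfway toward the new sample
```

A lower `alpha` gives steadier output at the cost of extra lag, so the value is usually tuned against the latency the application can tolerate.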

Signal processing techniques (e.g., filtering, time-series analysis) support gesture recognition in cases where sensor noise or complex environments are present. For those looking to integrate gesture recognition with real-time communication in Python, leveraging a Python video and audio calling SDK can streamline development and add robust audio/video features.

Types of Gesture Recognition Systems

Device-based vs. Device-free Systems

Device-based gesture recognition systems employ physical devices, like data gloves or wearables, to capture movement data with high precision. Device-free systems rely on external sensors (such as RGB/depth cameras, radar, or Wi-Fi) to detect gestures without requiring users to wear equipment, enabling more natural interactions.

For Android developers, implementing device-free solutions is made easier with WebRTC for Android, which supports seamless real-time video and audio streaming for gesture-enabled apps.

Real-Time vs. Offline Gesture Recognition

Real-time gesture recognition processes input instantly, often utilizing edge computing to reduce latency and ensure responsive feedback. Offline recognition, on the other hand, analyzes recorded data, making it suitable for post-processing or research applications.
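One way to decide whether a recognizer is fast enough for real-time use is to time it per frame against a latency budget. The sketch below does that for any callable. The helper `timed_process`, the `dummy_recognize` stand-in, and the 33 ms budget (roughly 30 frames per second) are all assumptions chosen for illustration.

```python
import time

def timed_process(frames, recognize, budget_ms=33.0):
    """Run `recognize` on each frame and record how long each call takes.

    Returns (results, latencies_ms, over_budget_count).
    """
    results, latencies = [], []
    for frame in frames:
        start = time.perf_counter()
        results.append(recognize(frame))
        latencies.append((time.perf_counter() - start) * 1000.0)
    over_budget = sum(1 for ms in latencies if ms > budget_ms)
    return results, latencies, over_budget

def dummy_recognize(frame):
    # Hypothetical stand-in for a real model: counts "extended fingers"
    return "open" if sum(frame) > 1 else "fist"

frames = [[0, 0, 1], [1, 1, 1], [0, 1, 1]]
results, latencies, over = timed_process(frames, dummy_recognize)
```

The same function works for offline analysis of recorded frames, where a large `over` count matters less because no user is waiting on the result.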

If you want to quickly add video calling and gesture recognition to your web or mobile apps, an

embed video calling sdk

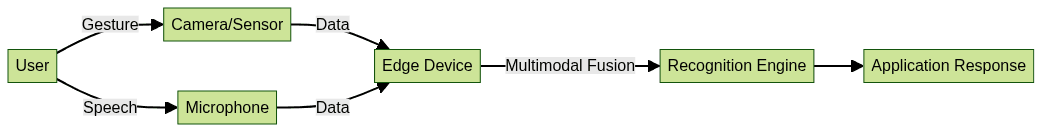

offers a plug-and-play solution for rapid deployment.Multimodal Gesture Recognition

Multimodal gesture recognition systems combine gesture input with other modalities, such as speech or gaze, creating robust and context-aware interfaces. For React developers, integrating React video call functionality can further enhance multimodal experiences by combining gesture controls with live video interactions.
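A simple way to combine modalities is late fusion: each recognizer scores the candidate commands independently, and the scores are merged with a weighted sum. The sketch below assumes two hypothetical score dictionaries (gesture and speech) and an arbitrary weight of 0.6 for the gesture channel; none of these names or values come from a specific library.

```python
def fuse_modalities(gesture_scores, speech_scores, gesture_weight=0.6):
    """Weighted late fusion of per-command confidence scores.

    Returns (best_command, fused_scores). A command missing from one
    modality is treated as having a score of 0.0 there.
    """
    commands = set(gesture_scores) | set(speech_scores)
    if not commands:
        raise ValueError("at least one modality must propose a command")
    fused = {
        cmd: gesture_weight * gesture_scores.get(cmd, 0.0)
        + (1.0 - gesture_weight) * speech_scores.get(cmd, 0.0)
        for cmd in commands
    }
    best = max(fused, key=fused.get)
    return best, fused

# Hypothetical scores: the gesture is ambiguous, the speech input is clear
gesture = {"volume_up": 0.55, "next_track": 0.45}
speech = {"volume_up": 0.20, "next_track": 0.80}
command, scores = fuse_modalities(gesture, speech)
```

Here the speech channel resolves a gesture that is nearly a coin flip on its own, which is the kind of robustness multimodal systems aim for.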

Applications of Gesture Recognition

Consumer Electronics

Gesture recognition has revolutionized consumer electronics, powering smart TVs, gaming consoles, and AR/VR devices. Users can navigate menus, interact with virtual environments, or play games using intuitive hand and body gestures, making interfaces more engaging and accessible. Developers working with JavaScript can utilize a JavaScript video and audio calling SDK to add real-time communication features to gesture-driven web applications.

Healthcare and Accessibility

In healthcare, gesture recognition supports rehabilitation, remote monitoring, and touchless control of medical devices. Assistive technologies leverage gesture recognition to empower users with limited mobility, providing alternative ways to interact with software and hardware systems. For cross-platform mobile solutions, a React Native video and audio calling SDK can facilitate secure, high-quality video calls and gesture-based controls for patients and caregivers.

Industrial and IoT

Industries utilize gesture recognition for touchless interfaces in hazardous or sterile environments, enhancing safety and efficiency. In IoT, gesture recognition enables process control, robotics, and smart home automation, offering seamless, context-aware command execution. For large-scale, interactive broadcasts, integrating a Live Streaming API SDK allows organizations to combine gesture recognition with live streaming for remote monitoring and real-time collaboration.

Challenges in Gesture Recognition

Despite its advancements, gesture recognition faces several technical challenges:

- Accuracy: Achieving high recognition rates across users and environments

- Speed: Maintaining low latency for real-time interaction

- Environmental Factors: Varying lighting, backgrounds, or sensor occlusion

- User Variability: Differences in gesture execution across individuals

- Dataset Limitations: Need for large, diverse gesture datasets

- Privacy: Managing and securing sensitive user data

Overcoming these challenges requires sophisticated algorithms, robust hardware, and privacy-aware design principles.
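User variability can be reduced in part by normalizing landmarks before classification, so that hand size and position in the frame do not affect the features. The sketch below is one such normalization under stated assumptions: the first point is treated as the wrist, and the sample coordinates are made up.

```python
import math

def normalize_landmarks(points):
    """Translate so the first point (assumed wrist) is the origin, then
    scale so the farthest landmark lies at distance 1."""
    ox, oy = points[0]
    shifted = [(x - ox, y - oy) for x, y in points]
    scale = max(math.hypot(x, y) for x, y in shifted)
    if scale == 0:
        return shifted  # all points coincide; nothing to scale
    return [(x / scale, y / scale) for x, y in shifted]

# The same pose from a small hand and from a large hand elsewhere in the frame
small_hand = [(0.5, 0.5), (0.6, 0.5), (0.5, 0.7)]
large_hand = [(0.2, 0.2), (0.4, 0.2), (0.2, 0.6)]
norm_small = normalize_landmarks(small_hand)
norm_large = normalize_landmarks(large_hand)
```

After normalization the two hands produce nearly identical feature vectors, so a classifier sees the pose rather than the person.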

Implementing Gesture Recognition: A Practical Guide

Choosing the Right Sensors and Tools

Selecting appropriate sensors and frameworks is crucial. Popular sensors include DFRobot's gesture sensors (for embedded/IoT), while MediaPipe offers a robust, open-source framework for hand gesture recognition in Python. Each tool varies in accuracy, latency, and ease of integration, so consider application requirements and development resources. For those eager to experiment, you can try it for free and explore SDKs that support gesture recognition and video calling integration.

Example: Building a Simple Hand Gesture Recognition System in Python

Below is a step-by-step guide to implementing a basic hand gesture recognition pipeline using MediaPipe:

- Install MediaPipe and OpenCV

```bash
pip install mediapipe opencv-python
```

- Capture Video Input and Detect Hands

```python
import cv2
import mediapipe as mp

mp_hands = mp.solutions.hands
mp_drawing = mp.solutions.drawing_utils
# A higher detection threshold reduces false positives at the cost of missed hands
hands = mp_hands.Hands(min_detection_confidence=0.7)

cap = cv2.VideoCapture(0)  # open the default webcam
while True:
    ret, frame = cap.read()
    if not ret:
        break
    # MediaPipe expects RGB input; OpenCV delivers frames in BGR order
    frame_rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
    results = hands.process(frame_rgb)
    if results.multi_hand_landmarks:
        for hand_landmarks in results.multi_hand_landmarks:
            mp_drawing.draw_landmarks(frame, hand_landmarks, mp_hands.HAND_CONNECTIONS)
    cv2.imshow("Hand Gesture Recognition", frame)
    if cv2.waitKey(1) & 0xFF == ord('q'):  # press q to quit
        break

hands.close()
cap.release()
cv2.destroyAllWindows()
```

- Classify Gestures (Extend with ML model)

- Collect landmark data

- Train a classifier on labeled gesture data

- Integrate prediction logic into the real-time loop
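The classification steps above can be sketched with a nearest-centroid classifier in plain Python. This is a deliberately simple stand-in for whatever model a real project would train, and the four-value feature vectors and the labels `open_palm` and `fist` are hypothetical; in practice the features would be derived from collected landmark data.

```python
import math
from collections import defaultdict

def train_centroids(samples):
    """samples: iterable of (feature_vector, label). Returns {label: centroid}."""
    grouped = defaultdict(list)
    for features, label in samples:
        grouped[label].append(features)
    return {
        label: [sum(column) / len(vectors) for column in zip(*vectors)]
        for label, vectors in grouped.items()
    }

def predict(centroids, features):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))

# Hypothetical training data: one "extension" value per finger, 0 = curled, 1 = straight
training = [
    ([0.9, 0.9, 0.8, 0.9], "open_palm"),
    ([1.0, 0.8, 0.9, 1.0], "open_palm"),
    ([0.1, 0.2, 0.1, 0.1], "fist"),
    ([0.2, 0.1, 0.2, 0.0], "fist"),
]
centroids = train_centroids(training)
label = predict(centroids, [0.85, 0.9, 0.9, 0.95])
```

To integrate this into the real-time loop, `predict` would be called once per frame on features computed from the detected landmarks, and the returned label drawn on the frame or mapped to an action.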

Testing and Improving Accuracy

Improve gesture recognition accuracy by augmenting datasets, testing under diverse conditions, and refining model parameters through continual learning.
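Dataset augmentation for landmark data can be as simple as small random rotations, jitter, and mirroring. The sketch below shows one possible version; the function name `augment`, the 15-degree rotation limit, and the jitter size are assumptions, and it expects landmarks already centered on the origin so that rotation happens about the wrist.

```python
import math
import random

def augment(points, max_rotation_deg=15.0, jitter=0.01, mirror=False, rng=None):
    """Return a rotated, jittered, optionally mirrored copy of 2D landmarks."""
    rng = rng or random.Random()
    theta = math.radians(rng.uniform(-max_rotation_deg, max_rotation_deg))
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    out = []
    for x, y in points:
        if mirror:
            x = -x  # flip horizontally to simulate the other hand
        rx = x * cos_t - y * sin_t
        ry = x * sin_t + y * cos_t
        out.append((rx + rng.uniform(-jitter, jitter),
                    ry + rng.uniform(-jitter, jitter)))
    return out

rng = random.Random(0)  # seeded so the generated variants are reproducible
original = [(0.0, 0.0), (0.5, 0.0), (0.0, 1.0)]
variants = [augment(original, mirror=(i % 2 == 1), rng=rng) for i in range(4)]
```

Each variant keeps the label of the sample it was generated from, which multiplies the effective size of a small hand-collected dataset.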

The Future of Gesture Recognition

Gesture recognition is poised for rapid growth in 2025 and beyond, driven by advances in deep learning, edge computing, and sensor technology. Future trends include:

- AI-powered multimodal systems

- Ultra-fast, on-device recognition for wearables and IoT

- Expanded applications in smart environments, automotive, and telemedicine

As gesture recognition matures, expect ever more seamless, intuitive, and secure human-computer interactions across devices and platforms.

Conclusion

Gesture recognition stands at the forefront of HCI innovation in 2025, enabling natural, touchless interactions across consumer, healthcare, and industrial domains. By harnessing advanced algorithms and accessible tools like MediaPipe, developers can deliver responsive, accessible, and immersive experiences. As technology evolves, gesture recognition will play an increasingly vital role in shaping the future of digital interaction.


FAQ