Introduction to LLMs

Large Language Models (LLMs) have transformed artificial intelligence and natural language processing (NLP) in recent years, powering everything from chatbots to advanced code generation tools. Their ability to process and generate human-like text at scale has made them central to modern AI systems. As we move into 2025, LLMs are not just research curiosities; they are foundational technology impacting software engineering, business, and daily life. In this post, we will unravel what an LLM is, how LLMs work, their core architectures, training regimes, real-world applications, and the challenges and ethical considerations surrounding their rapid adoption.

What is an LLM?

A Large Language Model (LLM) is a type of artificial intelligence model designed to understand and generate human language. Built on deep neural networks—primarily transformer architectures—LLMs are trained on vast datasets, often containing hundreds of billions of words. Their scale, both in terms of data and parameter size (sometimes numbering in the hundreds of billions), enables them to perform a wide range of natural language processing (NLP) tasks with minimal or even zero task-specific training.

The significance of LLMs lies in their ability to generalize across domains and perform complex reasoning, making them powerful tools for text generation, translation, summarization, code generation, and more. Unlike earlier models, LLMs can adapt to new prompts and contexts, demonstrating few-shot or zero-shot learning. When asking what an LLM is, it is essential to recognize its role as a flexible, general-purpose AI model that leverages large-scale data and neural network innovations.

LLMs vs Traditional Language Models

Traditional language models, such as n-gram models or early RNNs, were limited by their shallow architectures and reliance on smaller datasets. In contrast, LLMs use deep, transformer-based neural networks, which allow them to capture long-range dependencies and contextual information. This innovation enables LLMs to outperform older models in virtually every NLP task, from understanding nuanced language to generating coherent, contextually relevant text.
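To make the contrast concrete, here is a minimal sketch of a bigram model, the simplest n-gram variant. The toy corpus and the helper names (`train_bigram`, `predict_next`) are made up for illustration. Because the model conditions only on the previous word, it cannot use any earlier context, which is the limitation that transformer-based LLMs address.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def predict_next(counts, prev):
    """Return the most frequent follower of `prev`, or None if unseen."""
    followers = counts.get(prev)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Toy corpus (an assumption for illustration only)
corpus = "the cat sat on the mat . the cat ate the fish .".split()
model = train_bigram(corpus)

print(predict_next(model, "the"))  # most common word seen after "the"
print(predict_next(model, "dog"))  # "dog" never appears, so there is no prediction
```

Note that the prediction after "the" is the same no matter what came before it; the model has no way to tell "the cat sat on the" apart from "the cat ate the".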

Key Components and Architecture of LLMs

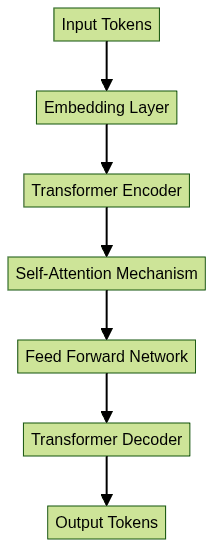

Transformer Architecture and Its Importance

The transformer architecture, introduced in 2017, is the backbone of most modern LLMs. Transformers use self-attention mechanisms to weigh the importance of each word in a sequence relative to others, enabling parallel processing and superior performance on long sequences compared to RNNs or LSTMs.
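The self-attention computation can be sketched in a few lines of plain Python. This is a simplified illustration, not a production implementation: it assumes the token vectors are used directly as queries, keys, and values, whereas real transformers first apply learned projection matrices and use several attention heads. The input numbers are made up.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a list of token vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this token's query with every token's key
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Weighted average of the value vectors
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy 2-dimensional token vectors (made-up numbers)
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(x, x, x)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how much one token attends to every token in the sequence. Every row is computed independently of the others, which is why the operation parallelizes well.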

The key innovation is the attention mechanism, which allows the model to focus on relevant parts of the input text at each step of processing. This architecture is highly scalable and forms the basis for models like GPT-3, GPT-4, and other state-of-the-art LLMs. Developers building communication tools can leverage technologies like the Video Calling API to integrate real-time video and audio features into their applications, complementing the capabilities of LLM-powered chatbots and assistants.

Encoder-Decoder and Attention Mechanism

LLMs use an encoder, a decoder, or both (encoder-decoder) in their architecture. For example, BERT is encoder-only, the GPT family is decoder-only, and T5 is an encoder-decoder model. Encoders process input sequences into contextual representations, while decoders generate outputs from these representations. In encoder-decoder models, the attention mechanism bridges these components, allowing the decoder to selectively attend to parts of the encoded input.

Tokenization and Attention Example in Python

Tokenization and attention are central to how LLMs process language. Here's a simple Python example using Hugging Face Transformers:

```python
from transformers import AutoTokenizer, AutoModel
import torch

# Load pretrained tokenizer and model
model_name = "bert-base-uncased"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModel.from_pretrained(model_name)

# Tokenize input text
inputs = tokenizer("What is a LLM?", return_tensors="pt")

# Forward pass to get attention weights
with torch.no_grad():
    outputs = model(**inputs, output_attentions=True)
    # attentions is a tuple with one tensor per layer; take the first layer
    attention = outputs.attentions[0]

# Shape: (batch_size, num_heads, sequence_length, sequence_length)
print(f"Attention shape: {attention.shape}")
```

This code demonstrates how input text is tokenized and how attention weights are extracted from a transformer model, illustrating core concepts behind LLM processing. If you're developing AI-powered communication tools in Python, consider exploring the Python video and audio calling SDK for seamless integration of real-time media features.

How LLMs are Trained

The training process for LLMs is what sets them apart from traditional models. It involves several stages and massive amounts of data and compute resources.

Pretraining on Massive Datasets

LLMs are initially pretrained on diverse and large-scale corpora, including books, web pages, code repositories, and more. Pretraining is typically self-supervised (often loosely called unsupervised): the training signal comes from the text itself rather than from human labels. The model learns to predict the next word (more precisely, the next token) in a sequence, enabling it to build a broad understanding of language structure, semantics, and context.
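The next-word objective is usually expressed as a cross-entropy loss: the model is penalized by the negative log of the probability it assigned to the word that actually came next. Here is a minimal sketch of that calculation. The probabilities and the helper name `next_token_loss` are hypothetical, standing in for the output of a real model.

```python
import math

def next_token_loss(predicted_probs, target_token):
    """Cross-entropy loss for one prediction: -log p(correct next token)."""
    return -math.log(predicted_probs[target_token])

# Hypothetical model output for the context "the cat sat on the"
predicted_probs = {"mat": 0.7, "sofa": 0.2, "moon": 0.1}

loss_mat = next_token_loss(predicted_probs, "mat")
loss_moon = next_token_loss(predicted_probs, "moon")

print(f"loss if the true next word is 'mat':  {loss_mat:.3f}")
print(f"loss if the true next word is 'moon': {loss_moon:.3f}")
```

The loss is small when the model gave high probability to the true next word and large when it did not. Pretraining adjusts the model's parameters to reduce this loss averaged over the whole corpus.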

Supervised Finetuning and Transfer Learning

After pretraining, LLMs undergo supervised finetuning on smaller, task-specific datasets. This transfer learning approach allows the model to adapt its general knowledge to tasks like question answering, translation, or summarization. Finetuning is crucial for aligning the model's outputs with desired behaviors and improving performance on specific applications.

For developers working with cross-platform apps, leveraging the Flutter video and audio calling API can help integrate real-time communication features alongside LLM-powered functionalities.

Zero-Shot and Few-Shot Learning

One remarkable feature of LLMs is their ability to perform zero-shot and few-shot learning. In zero-shot learning, the model can tackle new tasks without explicit retraining, simply by being given instructions in the prompt. Few-shot learning further improves performance by providing a handful of examples within the prompt. This flexibility is a direct result of the scale and generality of LLMs.
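The difference between the two is easiest to see in the prompts themselves. The sketch below builds a zero-shot and a few-shot prompt for a sentiment task. The `build_prompt` helper, the prompt layout, and the example reviews are all assumptions made for illustration; no particular model or API is implied.

```python
def build_prompt(instruction, query, examples=()):
    """Assemble a prompt; with no examples it is zero-shot, otherwise few-shot."""
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Review: {text}", f"Sentiment: {label}", ""]
    lines += [f"Review: {query}", "Sentiment:"]
    return "\n".join(lines)

instruction = "Classify the sentiment of each review as positive or negative."
query = "The battery died after two days."

# Zero-shot: instructions only
zero_shot = build_prompt(instruction, query)

# Few-shot: the same instructions plus a handful of worked examples
few_shot = build_prompt(
    instruction,
    query,
    examples=[
        ("Great screen and fast shipping.", "positive"),
        ("Stopped working within a week.", "negative"),
    ],
)

print(zero_shot)
print("---")
print(few_shot)
```

In both cases the model's weights stay unchanged; only the text it is asked to continue differs.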

If you are building applications in JavaScript, the JavaScript video and audio calling SDK offers an efficient way to add real-time audio and video capabilities, which can be enhanced by LLM-driven user experiences.

This diagram summarizes the end-to-end pipeline, from ingesting raw data to deploying a fine-tuned LLM.

Applications of LLMs

LLMs have unlocked a wide range of applications in both consumer and enterprise technology. Their ability to generalize and adapt has led to rapid adoption across industries.

- Text Generation: LLMs can generate coherent and contextually relevant text, powering applications like creative writing, content creation, and automated reports.

- Machine Translation: Modern translation tools leverage LLMs to produce accurate and fluent translations between languages.

- Summarization: LLMs can condense long documents into concise summaries, aiding information retrieval and research.

- Sentiment Analysis: Businesses use LLMs to analyze customer feedback and social media, extracting sentiment and trends in real time.

- Code Generation: Tools like GitHub Copilot use LLMs to assist developers by generating code snippets, improving productivity and code quality.

- Chatbots and Virtual Assistants: LLMs power conversational agents that understand and respond to user queries naturally.

For those building mobile applications, the React Native video and audio calling SDK enables seamless integration of real-time communication, which can be further enhanced by LLM-driven conversational interfaces. Additionally, if you want to quickly embed video calling SDK features into your web or mobile app, prebuilt solutions can save significant development time.

These applications demonstrate the practical, real-world impact of LLM technology. For live events and broadcasts, integrating a Live Streaming API SDK can help deliver interactive experiences, while a Voice SDK is ideal for building live audio rooms and discussions, all of which can benefit from LLM-powered moderation or content generation.

If you're working with WebRTC in Flutter, check out this Flutter WebRTC resource to learn how to implement real-time video and audio communication, which pairs well with LLM-driven features.

Challenges and Limitations

Despite their transformative potential, LLMs present significant challenges and limitations:

- Computational Cost: Training and running LLMs is resource-intensive, requiring powerful hardware and significant energy consumption.

- Bias and Fairness: LLMs can inherit and even amplify biases present in training data, leading to ethical concerns and unreliable outputs.

- Hallucination: LLMs sometimes generate plausible but incorrect or nonsensical information, a phenomenon known as hallucination.

- Data Privacy: Using sensitive or proprietary data in training can raise privacy and security risks.

Addressing these issues is critical for the responsible deployment of LLMs.
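To put the computational cost point in rough numbers, the memory needed just to hold a model's weights is the parameter count multiplied by the bytes used per parameter. The sketch below works through that arithmetic. The model sizes are hypothetical, and the estimate deliberately ignores activations, caches, and optimizer state, so real requirements are higher.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter):
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9

# Bytes per parameter for common numeric formats
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}

# Hypothetical model sizes, chosen only for illustration
for params in (7e9, 70e9):
    for fmt, nbytes in BYTES_PER_PARAM.items():
        gb = weight_memory_gb(params, nbytes)
        print(f"{params / 1e9:.0f}B parameters in {fmt}: ~{gb:.0f} GB")
```

Halving the precision halves the weight memory, which is one reason lower-precision formats are popular for serving large models.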

Ethical Considerations for LLMs

With great power comes great responsibility. The societal impact of LLMs is profound: they can influence public discourse, automate decision-making, and even create convincing misinformation. Developers and organizations must prioritize transparency, accountability, and fairness when deploying LLM-powered systems. Establishing robust oversight and ethical guidelines is essential for ensuring that LLM technology benefits society as a whole.

Future Trends in LLMs

The landscape of LLMs continues to evolve rapidly in 2025:

- Open-Source Models: Community-driven LLMs are making advanced AI accessible to more developers.

- Multimodal LLMs: Models that integrate text, images, and other modalities are expanding the capabilities of LLMs.

- Scaling Laws: Ongoing research into scaling laws guides the design of ever-larger and more capable models.

Staying informed about these trends is crucial for developers and organizations leveraging LLM technology.

Conclusion: What is an LLM and Why it Matters

In summary, understanding what an LLM is and how it works is essential for anyone working in AI, software engineering, or technology today. LLMs have redefined what's possible in NLP, thanks to their transformer-based architectures, massive pretraining, and ability to generalize across tasks. As their applications and influence grow, developers must balance innovation with responsibility, ensuring that LLMs are used ethically and effectively. The future of LLMs promises even greater advances, along with new challenges, for the tech community in 2025 and beyond. If you're interested in exploring these technologies further, try it for free and start building your own AI-powered communication solutions.


FAQ