Introduction

Voice agents have rapidly reshaped how humans interact with computers, evolving from simple keyword-based assistants into sophisticated, context-aware AI companions. OpenAI's recent advances in speech-to-speech technology have ushered in a new era of real-time, agentic voice workflows. In this post, we explore how OpenAI is transforming voice agents, focusing on its SDKs, real-time orchestration, and technical best practices for building robust voice AI solutions in 2025.

What are Voice Agents?

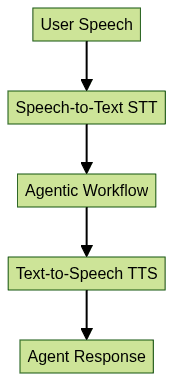

Voice agents are software-driven entities capable of understanding, processing, and responding to human speech using AI and natural language processing (NLP). They have evolved from rule-based IVR systems into end-to-end neural speech-to-speech pipelines, enabling agentic workflows that can manage complex conversations, multi-turn dialogues, and adaptive behavior.

Modern voice agents use advanced speech recognition, natural language understanding (NLU), and dynamic speech synthesis to emulate human-like interactions. These agentic voice assistants can hand off tasks, manage context, and interact with external tools and APIs, making them powerful components for real-time voice AI applications across industries. For developers building these solutions, integrating a Voice SDK can accelerate development and enhance real-time capabilities.

OpenAI's Role in Transforming Voice Agents

The Emergence of OpenAI Voice Mode

OpenAI’s innovations in the voice domain have set new standards for natural and expressive AI-powered conversations. The introduction of OpenAI Voice Mode brought:

- Accents and Emotions: Support for a wide range of accents and emotional tones, enabling more personalized and relatable interactions.

- Multi-Character Support: The ability to simulate multiple distinct voices or personas within a single conversation, a leap forward for storytelling, learning, and entertainment applications.
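Multi-character scenes are typically driven by a per-character voice configuration. The sketch below is a hypothetical helper, not part of any OpenAI SDK: it turns a short script into one text-to-speech request per line. The voice names fable and onyx are OpenAI TTS preset voices and gpt-4o-mini-tts is an OpenAI TTS model, but the request layout (model, voice, input, instructions) is an assumption modeled on the speech endpoint and should be checked against the current API reference.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Persona:
    """A character's voice settings (field names are illustrative)."""
    voice: str  # a TTS preset voice name, e.g. "fable"
    style: str  # free-text delivery notes: accent, emotion, pace


# Hypothetical cast for a two-character story
CAST = {
    "Narrator": Persona(voice="fable", style="Calm, warm storytelling tone."),
    "Dragon": Persona(voice="onyx", style="Deep, slow, and menacing."),
}


def build_tts_requests(script, cast, model="gpt-4o-mini-tts"):
    """Turn (speaker, line) pairs into one synthesis request per line.

    The dict layout is an assumption; adapt it to the client you call.
    """
    requests = []
    for speaker, text in script:
        persona = cast[speaker]  # KeyError surfaces unknown speakers early
        requests.append({
            "model": model,
            "voice": persona.voice,
            "input": text,
            "instructions": persona.style,
        })
    return requests


script = [
    ("Narrator", "Long ago, a dragon guarded the mountain pass."),
    ("Dragon", "Who dares to wake me?"),
]

for req in build_tts_requests(script, CAST):
    print(req["voice"], "->", req["input"])
```

Keeping personas as data means adding a character is a one-line change, and the same script can be re-rendered with different voices.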

Voice Recognition and Speaker Differentiation

OpenAI's voice models use deep learning for high-accuracy speech recognition and speaker differentiation. These models can distinguish speakers in multi-user environments, maintain conversational context, and support agentic workflows such as real-time task switching and multi-agent orchestration. The result is a natural voice experience that adapts dynamically to user intent and environmental cues. For applications requiring real-time communication, integrating a phone call API can further streamline voice interactions and connectivity.

Core Components: OpenAI Voice Agents SDK

Python SDK: Architecture & Workflow

OpenAI's Voice Agents Python SDK provides a modular, extensible architecture for building and deploying voice AI solutions. Developers looking for cross-platform support may also consider a Python video and audio calling SDK to add audio and video integration to their voice agent applications. The SDK's two core building blocks are:

- VoicePipeline: Orchestrates speech-to-text (STT), language understanding, and text-to-speech (TTS) in a unified flow.

- SingleAgentVoiceWorkflow: Encapsulates agent logic, state management, and tool integration for individual voice agents.
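The division of labor between these two pieces can be illustrated without the SDK. In this minimal sketch, a pipeline owns the audio-facing stages (transcribe, then synthesize) and delegates turn logic to a workflow object that keeps conversation state. All class and method names are hypothetical stand-ins, and the "audio" is plain UTF-8 bytes so the example runs anywhere; it does not reproduce the SDK's actual API.

```python
from dataclasses import dataclass, field


@dataclass
class EchoWorkflow:
    """Hypothetical single-agent workflow: owns state and turn logic."""
    history: list = field(default_factory=list)

    def respond(self, user_text: str) -> str:
        self.history.append(("user", user_text))
        reply = f"You said: {user_text}"
        self.history.append(("agent", reply))
        return reply


class TextPipeline:
    """Hypothetical pipeline: STT -> workflow -> TTS, with stubbed stages."""

    def __init__(self, workflow):
        self.workflow = workflow

    def transcribe(self, audio: bytes) -> str:
        # Stand-in for speech-to-text: treat the bytes as UTF-8 text
        return audio.decode("utf-8").strip()

    def synthesize(self, text: str) -> bytes:
        # Stand-in for text-to-speech: encode the reply back to bytes
        return text.encode("utf-8")

    def run_turn(self, audio: bytes) -> bytes:
        text = self.transcribe(audio)
        reply = self.workflow.respond(text)
        return self.synthesize(reply)


pipeline = TextPipeline(EchoWorkflow())
print(pipeline.run_turn(b"  hello there ").decode())  # You said: hello there
print(len(pipeline.workflow.history))  # 2
```

Keeping state in the workflow rather than the pipeline means the same pipeline can host a different agent, and the workflow can be unit-tested with plain strings.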

Code Example: Basic VoicePipeline Setup (Python)

```python
from openai_voice_sdk.voice_pipeline import VoicePipeline
from openai_voice_sdk.workflows import SingleAgentVoiceWorkflow


def main():
    # Initialize the voice pipeline
    pipeline = VoicePipeline(
        agent=SingleAgentVoiceWorkflow(),
        stt_model="openai-stt-2025",
        tts_model="openai-tts-2025",
    )
    pipeline.run()


if __name__ == "__main__":
    main()
```

JavaScript SDK: Real-Time Applications

The JavaScript SDK for OpenAI voice agents is tailored for real-time, browser-based, and backend applications with support for:

- OpenAI Realtime API: Low-latency streaming for speech recognition and synthesis.

- WebSocket/WebRTC Integration: Enables real-time audio streaming, callbacks, and event-driven tool calls.

For developers working in JavaScript, a JavaScript video and audio calling SDK can simplify adding robust audio and video features to voice agent workflows.

Example: Real-Time Streaming with Callbacks (JavaScript)

```javascript
import { VoiceAgent, RealtimeAPI } from "openai-voice-sdk-js";

const agent = new VoiceAgent({
  agentWorkflow: "single-agent",
  sttModel: "openai-stt-2025",
  ttsModel: "openai-tts-2025"
});

const realtime = new RealtimeAPI({
  agent,
  onPartialResult: (data) => console.log("Partial:", data),
  onFinalResult: (data) => console.log("Final:", data)
});

realtime.startStreaming();
```
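The callback pattern in the JavaScript example (onPartialResult while the user is still speaking, onFinalResult at the end of the utterance) is not tied to any one SDK. Here is a Python asyncio sketch of the same idea; the chunk source and the word-by-word "recognizer" are hypothetical stubs standing in for a real streaming speech API.

```python
import asyncio


async def fake_audio_stream(words, delay=0.01):
    """Hypothetical audio source: yields one 'chunk' (a word) at a time."""
    for word in words:
        await asyncio.sleep(delay)  # simulate network/audio pacing
        yield word


async def stream_transcription(chunks, on_partial, on_final):
    """Accumulate chunks, firing on_partial per chunk and on_final at the end."""
    transcript = []
    async for chunk in chunks:
        transcript.append(chunk)
        on_partial(" ".join(transcript))
    final = " ".join(transcript)
    on_final(final)
    return final


async def main():
    partials = []
    final = await stream_transcription(
        fake_audio_stream(["book", "a", "table"]),
        on_partial=partials.append,
        on_final=lambda text: print("Final:", text),
    )
    return partials, final


partials, final = asyncio.run(main())
print(partials)  # ['book', 'book a', 'book a table']
```

Partial results let the UI show live captions or let the agent start planning early, while only the final result should trigger tool calls or other irreversible actions.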

Building a Voice Assistant with OpenAI

Step-by-Step Implementation Guide

1. Prerequisites & Setup

- Obtain API keys from OpenAI

- Install the relevant SDK (openai-voice-sdk for Python or openai-voice-sdk-js for JavaScript)

- Ensure microphone and audio permissions are configured
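Before wiring up audio, it helps to fail fast when a prerequisite is missing. A minimal sketch: it checks the OPENAI_API_KEY environment variable (the one the official OpenAI client libraries read by default) and verifies that required Python modules are importable. The function name and the module list are placeholders; substitute the SDK and audio libraries your project actually uses.

```python
import importlib.util
import os


def preflight(required_modules, env_var="OPENAI_API_KEY", environ=None):
    """Return a list of human-readable problems; empty means ready to go."""
    environ = os.environ if environ is None else environ
    problems = []
    if not environ.get(env_var):
        problems.append(f"Missing environment variable {env_var}")
    for name in required_modules:
        # find_spec returns None when a top-level module cannot be found
        if importlib.util.find_spec(name) is None:
            problems.append(f"Module not installed: {name}")
    return problems


if __name__ == "__main__":
    # Placeholder module list: replace with your SDK and audio dependencies
    issues = preflight(["json", "wave"])
    for issue in issues:
        print("Preflight:", issue)
    if not issues:
        print("Preflight OK")
```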

For those building mobile or cross-platform voice agents, Flutter WebRTC offers a powerful solution for real-time audio and video streaming, making it easier to deploy voice AI across devices.

2. Creating an Agent (Python Example)

```python
from openai_voice_sdk.workflows import SingleAgentVoiceWorkflow


class MyVoiceAgent(SingleAgentVoiceWorkflow):
    def handle_intent(self, intent, context):
        # Route recognized intents to a tool call or a handoff
        if intent == "get_weather":
            return self.tool_call("weather_api", context)
        elif intent == "handoff":
            return self.handoff("support_agent", context)
        # Fallback for unrecognized intents
        return "Sorry, I didn't understand."
```
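The handle_intent method above assumes an intent label has already been extracted from the user's speech. As a stand-in for that NLU step, here is a deliberately simple, hypothetical keyword-based detector that produces the intent and context arguments. The intent names match the example, and the extracted city is what the weather tool in the next step reads. Real deployments would more likely let the language model choose the intent, for example through function calling.

```python
import re

# Hypothetical keyword rules, checked in order: handoff wins over weather
INTENT_PATTERNS = {
    "handoff": re.compile(r"\b(human|agent|representative|support)\b", re.I),
    "get_weather": re.compile(r"\bweather\b", re.I),
}

# Naive city extraction: capitalized words following "in"
CITY_PATTERN = re.compile(r"\bin ([A-Z][a-zA-Z]+(?: [A-Z][a-zA-Z]+)*)")


def detect_intent(transcript):
    """Map a transcript to (intent, context) using simple keyword rules."""
    context = {"transcript": transcript}
    match = CITY_PATTERN.search(transcript)
    if match:
        context["city"] = match.group(1)
    for intent, pattern in INTENT_PATTERNS.items():
        if pattern.search(transcript):
            return intent, context
    return "unknown", context


print(detect_intent("What's the weather in San Francisco?"))
```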

3. Tool Integration

```python
def weather_api(context):
    # Mock API call to get weather
    return f"The weather in {context['city']} is sunny."


agent = MyVoiceAgent()
agent.register_tool("weather_api", weather_api)
```
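As written, weather_api raises a KeyError when the user never mentioned a city. One common remedy is to declare each tool's required inputs and check them before dispatching, so the agent can ask a follow-up question instead. The sketch below is a hypothetical, framework-free registry illustrating that idea; it does not reproduce the SDK's register_tool behavior.

```python
from typing import Callable, Dict, Tuple

Tool = Callable[[dict], str]


class ToolRegistry:
    """Hypothetical tool registry: name -> (function, required context keys)."""

    def __init__(self):
        self._tools: Dict[str, Tuple[Tool, tuple]] = {}

    def register(self, name: str, func: Tool, required=()):
        self._tools[name] = (func, tuple(required))

    def call(self, name: str, context: dict) -> str:
        if name not in self._tools:
            return f"Sorry, I can't do '{name}' yet."
        func, required = self._tools[name]
        missing = [key for key in required if key not in context]
        if missing:
            # Ask a follow-up question instead of crashing with KeyError
            return f"Could you tell me your {', '.join(missing)}?"
        return func(context)


def weather_api(context):
    # Mock API call, same as in the step above
    return f"The weather in {context['city']} is sunny."


tools = ToolRegistry()
tools.register("weather_api", weather_api, required=["city"])

print(tools.call("weather_api", {"city": "Paris"}))  # The weather in Paris is sunny.
print(tools.call("weather_api", {}))                 # Could you tell me your city?
```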

4. Handoff Logic

```python
def support_agent(context):
    return "You are now connected to a support specialist."


agent.register_handoff("support_agent", support_agent)
```
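How useful a handoff is depends on what travels with it. The sketch below uses hypothetical names and a made-up "HANDOFF:" reply convention rather than the SDK's register_handoff mechanism. It shows a router that switches the active agent mid-turn and passes the full conversation history to the specialist, so the user does not have to repeat themselves.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Agent = Callable[[str, List[str]], str]  # (user_text, history) -> reply


def triage_agent(text, history):
    if "refund" in text.lower():
        return "HANDOFF:support_agent"  # hypothetical handoff signal
    return "How can I help you today?"


def support_agent(text, history):
    # The specialist sees the full transcript, starting with the first message
    return f"I see you asked about: {history[0]!r}. Let's sort that out."


@dataclass
class Router:
    agents: Dict[str, Agent]
    active: str
    history: List[str] = field(default_factory=list)

    def handle(self, text):
        self.history.append(text)
        reply = self.agents[self.active](text, self.history)
        if reply.startswith("HANDOFF:"):
            # Switch the active agent and let it answer the same turn
            self.active = reply.split(":", 1)[1]
            reply = self.agents[self.active](text, self.history)
        return reply


router = Router({"triage": triage_agent, "support_agent": support_agent}, "triage")
print(router.handle("I need a refund for my order"))
print(router.active)  # support_agent
```

Passing the raw history is the simplest option; a production system might pass a summary instead to stay within context limits.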

5. Running the Voice Pipeline

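```python
from openai_voice_sdk.voice_pipeline import VoicePipeline

# Wire the configured agent (with its tools and handoffs) into the pipeline
pipeline = VoicePipeline(agent=agent)
pipeline.run()
```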

Orchestrating Multi-Agent Workflows

OpenAI's voice agent tooling enables orchestration of multiple agents for complex task management. With a state machine, tasks can be routed, escalated, or handed off between agents. For advanced orchestration and real-time audio experiences, a Voice SDK can help manage multiple audio streams and agent interactions efficiently.

State Machine Example (Python)

```python
class MultiAgentStateMachine:
    def __init__(self):
        # Every conversation starts in the greeting state
        self.state = "greeting"

    def transition(self, event):
        # Advance only on recognized (state, event) pairs; otherwise stay put
        if self.state == "greeting" and event == "request_info":
            self.state = "info_collection"
        elif self.state == "info_collection" and event == "handoff":
            self.state = "support_handoff"
        return self.state
```
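As the number of states grows, chained if/elif branches become hard to audit. A common alternative is a transition table keyed by (state, event). This sketch encodes the same two transitions as the class above plus hypothetical escalation and closing paths (human_escalation, closing), showing how new routes are added as data rather than code.

```python
# (current_state, event) -> next_state
# The first two entries mirror the example above; the rest are hypothetical.
TRANSITIONS = {
    ("greeting", "request_info"): "info_collection",
    ("info_collection", "handoff"): "support_handoff",
    ("info_collection", "frustrated"): "human_escalation",
    ("support_handoff", "frustrated"): "human_escalation",
    ("support_handoff", "resolved"): "closing",
}


class TableStateMachine:
    def __init__(self, transitions, start="greeting"):
        self.transitions = transitions
        self.state = start
        self.trail = [start]  # audit trail, handy for tracing conversations

    def transition(self, event):
        # Unknown (state, event) pairs leave the state unchanged
        self.state = self.transitions.get((self.state, event), self.state)
        self.trail.append(self.state)
        return self.state


sm = TableStateMachine(TRANSITIONS)
for event in ["request_info", "handoff", "frustrated"]:
    sm.transition(event)
print(sm.trail)
```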

Key Use Cases for OpenAI Voice Agents

Customer Support

OpenAI-powered voice agents can deliver 24/7 intelligent customer support, automating issue resolution and triage and escalating to human agents when needed. For live audio experiences in support scenarios, a Voice SDK can provide the infrastructure for scalable, real-time communication.

Language Learning

AI voice agents deliver personalized language coaching, pronunciation feedback, and interactive lessons in real time, adapting to the learner's proficiency. For mobile learning applications, Flutter WebRTC can enable smooth, high-quality audio and video sessions across platforms.

Storytelling & Entertainment

Multi-character, emotionally expressive agents provide immersive storytelling experiences, voice-driven games, and interactive fiction.

Workflow Automation

Voice-driven automation agents handle scheduling, reminders, and data entry, and integrate with enterprise tools for streamlined operations. Developers can try these tools for free to explore how the SDKs and APIs can power their next voice automation project.

Advanced Features and Best Practices

- Real-Time Monitoring & Debugging:
  - Use built-in logging and tracing features to monitor agent performance and conversation flows.
  - Integrate with real-time monitoring solutions for proactive issue detection.

- Performance Optimization:
  - Minimize latency by using streaming APIs and optimizing model selection.
  - Employ batching and asynchronous processing for scale.

- Security and Privacy:
  - Ensure end-to-end encryption for all audio and metadata streams.
  - Comply with data protection regulations (GDPR, CCPA) and implement anonymization where required.
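Two of the practices above, per-stage latency tracing and anonymizing transcripts before they reach the logs, can be combined in a few lines of standard-library Python. This is a minimal sketch: the stage names are hypothetical, and the regular expressions are illustrative only, far from complete PII coverage and not a substitute for a compliance review.

```python
import logging
import re
import time
from contextlib import contextmanager

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("voice-agent")

# Illustrative patterns only; real anonymization needs far broader coverage
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Mask emails and phone-like digit runs before text is logged."""
    return PHONE.sub("[PHONE]", EMAIL.sub("[EMAIL]", text))


@contextmanager
def traced_stage(name, timings):
    """Time a pipeline stage and record its latency in milliseconds."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed_ms = (time.perf_counter() - start) * 1000
        timings[name] = elapsed_ms
        log.info("stage=%s latency_ms=%.1f", name, elapsed_ms)


timings = {}
with traced_stage("stt", timings):
    transcript = "Call me at +1 415 555 0100 or mail jane@example.com"
with traced_stage("agent", timings):
    reply = "Thanks, I'll follow up."

log.info("transcript=%s", redact(transcript))
```

Recording latency per stage, rather than only end to end, shows whether delays come from recognition, the agent, or synthesis.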

For developers building scalable, real-time voice solutions, a Voice SDK can provide robust performance and flexibility across diverse use cases.

Challenges and Limitations

While OpenAI's voice agent tooling offers powerful capabilities, challenges remain:

- Latency in real-time speech processing can impact user experience.

- Complexity in multi-agent orchestration and state management requires careful design.

- Integration with legacy systems and third-party tools can be non-trivial.

Future Trends in OpenAI Voice Agents

Looking ahead to 2025 and beyond, OpenAI's voice agents are likely to evolve toward:

- Multi-modal interactions (voice, vision, text)

- Proactive, context-aware agents that anticipate user needs

- Enhanced personalization and on-device inference for privacy and speed

Conclusion

OpenAI is accelerating the future of human-computer interaction through voice agents, making voice AI smarter, more expressive, and more adaptable than ever. By leveraging robust SDKs, real-time APIs, and best practices, developers can build advanced voice assistants and agentic workflows that redefine digital experiences in 2025 and beyond.


FAQ