Introduction to Protocols for Streaming

The term protocol for streaming refers to the standardized set of rules that govern how media, such as video and audio, is delivered over the internet. With streaming now the dominant way to consume digital media in 2025, understanding these protocols is critical for developers, engineers, and architects who want to deliver seamless, high-quality content to users worldwide.

Over the past decade, the demand for robust streaming protocols has surged due to the proliferation of live events, on-demand content, and interactive applications. Streaming protocols like HLS (HTTP Live Streaming), RTMP (Real-Time Messaging Protocol), SRT (Secure Reliable Transport), MPEG-DASH (Dynamic Adaptive Streaming over HTTP), WebRTC (Web Real-Time Communication), and RTSP (Real-Time Streaming Protocol) now form the backbone of modern streaming architecture, supporting everything from global OTT platforms to IP surveillance systems.

What is a Streaming Protocol?

A streaming protocol is a set of rules and conventions that dictate how media data is transmitted from a server to a client, either in real time or on demand. Unlike codecs (e.g., H.264, AAC), which encode and decode the media itself, and container formats (e.g., MP4, MKV), which package the encoded streams, a streaming protocol governs how that packaged data moves across the network.

For example, when you watch a live sports event online, the video is often encoded with H.264 (codec), wrapped in an MPEG-TS container, and then delivered using HLS (streaming protocol). The protocol handles segmenting, playlist creation, and adaptive bitrate switching to optimize the user experience across varying network conditions.

In essence, the streaming protocol governs delivery and playback control, and it ensures compatibility between streaming servers, CDNs, and client devices.

Key Streaming Protocols Explained

HTTP Live Streaming (HLS) Protocol for Streaming

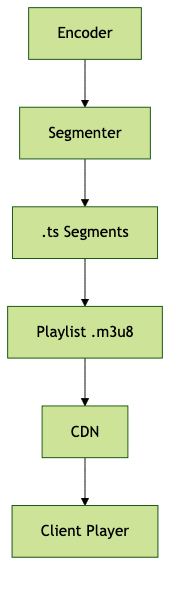

HLS, developed by Apple, is the most widely adopted protocol for streaming video content in 2025. It works by dividing video into small HTTP-based segments, which are listed in a playlist file (

.m3u8). This architecture enables adaptive bitrate streaming, allowing clients to dynamically adjust video quality based on real-time network conditions.HLS Architecture
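To make the adaptive bitrate idea concrete, here is a minimal sketch of one simple rule a player could apply: choose the highest-bitrate rendition whose advertised bandwidth fits within a safety fraction of the measured throughput. The rendition ladder, the `select_rendition` helper, and the 0.8 safety factor are hypothetical; production players such as hls.js use more elaborate heuristics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    """One rung of a hypothetical bitrate ladder."""
    name: str
    bandwidth_bps: int  # advertised peak bitrate in bits per second


def select_rendition(ladder, measured_bps, safety=0.8):
    """Pick the highest-bandwidth rendition that fits within
    safety * measured throughput; fall back to the lowest rung."""
    if not ladder:
        raise ValueError("ladder must contain at least one rendition")
    budget = measured_bps * safety
    ordered = sorted(ladder, key=lambda r: r.bandwidth_bps)
    chosen = ordered[0]
    for rendition in ordered:
        if rendition.bandwidth_bps <= budget:
            chosen = rendition  # keep the best rung that still fits
    return chosen


# Illustrative ladder; the bitrates are not recommendations.
LADDER = [
    Rendition("360p", 800_000),
    Rendition("720p", 2_800_000),
    Rendition("1080p", 5_000_000),
]

if __name__ == "__main__":
    for throughput in (600_000, 4_000_000, 10_000_000):
        print(throughput, select_rendition(LADDER, throughput).name)
```

With these values, 4 Mbit/s of measured throughput leaves a 3.2 Mbit/s budget, so the sketch picks 720p; the safety margin leaves headroom for short throughput dips.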

Sample HLS Playlist (.m3u8)

```
#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:10
#EXT-X-MEDIA-SEQUENCE:0
#EXTINF:10.0,
segment0.ts
#EXTINF:10.0,
segment1.ts
#EXT-X-ENDLIST
```

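HLS supports encryption and DRM, and it plays on nearly all browsers, mobile devices, and smart TVs: natively in Safari and on Apple devices, and through Media Source Extensions (MSE) based players such as hls.js in most other browsers. Its main drawback is higher latency than protocols like WebRTC, largely because players buffer several segments before playback starts.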
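A playlist like the sample above can also be written programmatically. The sketch below assumes the segments already exist and their durations are known (for example, from the packager's output), and it emits the same tags as the sample. `build_vod_playlist` is a hypothetical helper; the rounding rule in its comment comes from the HLS specification, RFC 8216.

```python
import math


def build_vod_playlist(segments):
    """Build an HLS VOD media playlist.

    segments: list of (uri, duration_seconds) tuples in playback order.
    """
    if not segments:
        raise ValueError("a playlist needs at least one segment")
    # RFC 8216: every EXTINF duration, rounded to the nearest integer, must not
    # exceed EXT-X-TARGETDURATION. ceil() is a conservative choice.
    target = max(math.ceil(duration) for _, duration in segments)
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",  # version 3+ allows decimal EXTINF durations
        f"#EXT-X-TARGETDURATION:{target}",
        "#EXT-X-MEDIA-SEQUENCE:0",
    ]
    for uri, duration in segments:
        lines.append(f"#EXTINF:{duration:.3f},")
        lines.append(uri)
    lines.append("#EXT-X-ENDLIST")  # no more segments will be added
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_vod_playlist([("segment0.ts", 10.0), ("segment1.ts", 10.0)]), end="")
```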

RTMP Protocol for Streaming

Originally developed by Macromedia (later acquired by Adobe), RTMP was the standard protocol for streaming to Flash Player. Today, RTMP is used primarily for ingesting live streams into media servers such as Wowza, Red5, or Nimble. It offers low latency and robust real-time capabilities, but browsers cannot play RTMP natively, and since Flash Player reached end of life in 2020 it has largely disappeared as a playback format.
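When RTMP is used for ingest, encoders are usually given a server URL of the form `rtmp://host[:port]/app` plus a stream key, and RTMP's default TCP port is 1935. The sketch below splits such a combined URL into its parts. The `parse_rtmp_ingest_url` helper and the assumption that the last path segment is the stream key are illustrative only, since servers such as Wowza or Red5 can lay out application paths differently.

```python
from urllib.parse import urlsplit

RTMP_DEFAULT_PORT = 1935  # RTMP's standard TCP port


def parse_rtmp_ingest_url(url):
    """Split 'rtmp://host[:port]/app/stream_key' into its parts.

    Assumes the last path segment is the stream key and everything before it
    is the application name, a common but not universal server convention.
    """
    parts = urlsplit(url)
    if parts.scheme != "rtmp":
        raise ValueError(f"not an rtmp:// URL: {url!r}")
    path = [segment for segment in parts.path.split("/") if segment]
    if len(path) < 2:
        raise ValueError("expected an application name and a stream key")
    return {
        "host": parts.hostname,
        "port": parts.port or RTMP_DEFAULT_PORT,
        "app": "/".join(path[:-1]),
        "stream_key": path[-1],
    }


if __name__ == "__main__":
    # Hypothetical ingest URL and stream key.
    print(parse_rtmp_ingest_url("rtmp://ingest.example.com/live/abc123"))
```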

SRT Protocol for Streaming

Secure Reliable Transport (SRT) is an open-source protocol designed to deliver high-quality, low-latency video across unpredictable networks. Its strengths include built-in AES encryption, packet-loss recovery, and NAT traversal, which make it well suited to professional live contribution and remote production workflows.
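SRT's packet-loss recovery is retransmission-based: the receiver notices gaps in packet sequence numbers and asks the sender to resend the missing packets, which must still arrive within the configured latency window. The following is a simplified illustration of the gap-detection step only. It assumes plain integer sequence numbers and ignores SRT's 31-bit wraparound, wire format, and timers; `find_missing` is a hypothetical helper, not part of the SRT library.

```python
def find_missing(received, expected_next=0):
    """Return the sequence numbers to request again (a loss list).

    received: sequence numbers that actually arrived, in any order.
    expected_next: first sequence number the receiver was waiting for.
    Only gaps below the highest received number are reported; later numbers
    may simply not have been sent yet.
    """
    seen = set(received)
    if not seen:
        return []
    highest = max(seen)
    return [seq for seq in range(expected_next, highest) if seq not in seen]


if __name__ == "__main__":
    # Packets 3 and 6 were lost in transit.
    print(find_missing([0, 1, 2, 4, 5, 7]))  # -> [3, 6]
```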

MPEG-DASH Protocol for Streaming

MPEG-DASH is an international standard (ISO/IEC 23009-1) for adaptive streaming over HTTP. Unlike HLS, it is codec-agnostic, and modern browsers play it through JavaScript players built on Media Source Extensions, such as dash.js or Shaka Player. It enables adaptive bitrate switching for both live and on-demand content but lacks HLS's native support on Apple devices.
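A DASH manifest (MPD) is an XML document in which each `Representation` element advertises one encoding, with its bitrate in a `bandwidth` attribute, grouped under `AdaptationSet` and `Period` elements. The sketch below parses a minimal, hand-written MPD (hypothetical; real manifests also carry segment templates, timing, and many more attributes) and lists the video representations a player could switch between. It assumes `mimeType` is set on each `AdaptationSet`.

```python
import xml.etree.ElementTree as ET

NS = {"mpd": "urn:mpeg:dash:schema:mpd:2011"}  # default namespace of DASH MPDs

SAMPLE_MPD = """<MPD xmlns="urn:mpeg:dash:schema:mpd:2011" type="static"
     mediaPresentationDuration="PT20S" minBufferTime="PT2S"
     profiles="urn:mpeg:dash:profile:isoff-on-demand:2011">
  <Period>
    <AdaptationSet mimeType="video/mp4">
      <Representation id="720p" bandwidth="2800000" width="1280" height="720" codecs="avc1.64001f"/>
      <Representation id="360p" bandwidth="800000" width="640" height="360" codecs="avc1.64001e"/>
    </AdaptationSet>
    <AdaptationSet mimeType="audio/mp4">
      <Representation id="audio" bandwidth="128000" codecs="mp4a.40.2"/>
    </AdaptationSet>
  </Period>
</MPD>"""


def list_video_representations(mpd_xml):
    """Return (id, bandwidth, height) for each video Representation, lowest bitrate first."""
    root = ET.fromstring(mpd_xml)
    reps = []
    for aset in root.iterfind("mpd:Period/mpd:AdaptationSet", NS):
        if not aset.get("mimeType", "").startswith("video/"):
            continue  # skip audio and subtitle adaptation sets
        for rep in aset.iterfind("mpd:Representation", NS):
            reps.append((rep.get("id"), int(rep.get("bandwidth")), int(rep.get("height"))))
    return sorted(reps, key=lambda r: r[1])


if __name__ == "__main__":
    for rep in list_video_representations(SAMPLE_MPD):
        print(rep)
```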

WebRTC Protocol for Streaming

WebRTC is a set of browser APIs and protocols for real-time, typically peer-to-peer streaming of audio, video, and arbitrary data between browsers and mobile apps. It uses ICE, with STUN and TURN servers, to connect peers across NATs, and it is optimized for ultra-low latency, which makes it key to applications such as video conferencing, live chat, and online gaming.
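Before media flows, WebRTC peers negotiate codecs and transports by exchanging SDP (Session Description Protocol) offers and answers over a signaling channel that the application itself provides. The sketch below extracts the media sections (`m=` lines) from an SDP blob. The sample offer is a trimmed, hypothetical example, and `media_sections` is an illustrative helper rather than a browser API.

```python
def media_sections(sdp):
    """Return (media, port, proto, formats) for every m= line in an SDP blob."""
    sections = []
    for line in sdp.splitlines():
        if not line.startswith("m="):
            continue
        media, port, proto, *formats = line[2:].split()
        # The port field may be written as <port>/<count>; keep the port only.
        sections.append((media, int(port.split("/")[0]), proto, formats))
    return sections


# Trimmed, hypothetical offer; real offers also carry ICE candidates,
# DTLS fingerprints, and many more attributes.
SAMPLE_OFFER = "\r\n".join([
    "v=0",
    "o=- 4611731400430051336 2 IN IP4 127.0.0.1",
    "s=-",
    "t=0 0",
    "m=audio 9 UDP/TLS/RTP/SAVPF 111",
    "a=rtpmap:111 opus/48000/2",
    "m=video 9 UDP/TLS/RTP/SAVPF 96",
    "a=rtpmap:96 VP8/90000",
    "m=application 9 UDP/DTLS/SCTP webrtc-datachannel",
]) + "\r\n"

if __name__ == "__main__":
    for section in media_sections(SAMPLE_OFFER):
        print(section)
```

The `application` section is the one that carries WebRTC data channels, the "arbitrary data" mentioned above.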

RTSP Protocol for Streaming

RTSP is a control protocol used primarily for IP cameras and surveillance systems. RTSP itself only controls the session (for example, starting and pausing playback), while RTP (Real-Time Transport Protocol) carries the media. Together they enable low-latency, real-time streaming, but they require specialized players and are less suitable for large-scale OTT or browser-based delivery.
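RTSP requests are text messages modeled on HTTP: a method, the stream URL, the protocol version, and headers including a `CSeq` sequence number that the server echoes in its response. A session typically runs `OPTIONS`, `DESCRIBE` (whose response carries an SDP description of the stream), `SETUP`, and `PLAY`. The sketch below only formats RTSP/1.0 requests and does not open a socket; the camera URL is hypothetical, and real clients also handle authentication and the `Session` header returned by `SETUP`.

```python
def rtsp_request(method, url, cseq, headers=None):
    """Format an RTSP/1.0 request (RFC 2326) as bytes ready to send over TCP."""
    lines = [f"{method} {url} RTSP/1.0", f"CSeq: {cseq}"]
    for name, value in (headers or {}).items():
        lines.append(f"{name}: {value}")
    # Every line ends with CRLF, and an empty line terminates the headers.
    return ("\r\n".join(lines) + "\r\n\r\n").encode("ascii")


if __name__ == "__main__":
    camera = "rtsp://192.0.2.10:554/stream1"  # hypothetical camera (554 is RTSP's default port)
    print(rtsp_request("OPTIONS", camera, 1).decode())
    print(rtsp_request("DESCRIBE", camera, 2, {"Accept": "application/sdp"}).decode())
```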

Streaming Protocol Architecture

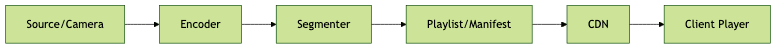

The delivery architecture of HTTP-based streaming protocols such as HLS and MPEG-DASH typically follows a modular workflow:

- Encoder: Compresses raw audio/video using codecs (e.g., H.264, AAC)

- Segmenter: Splits encoded media into small segments

- Playlist Generator: Creates a manifest file (e.g., .m3u8 or .mpd)

- CDN: Distributes segments globally for scalability

- Client Player: Downloads playlist and segments for playback
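The encoder and segmenter stages are often scripted together. The sketch below builds an ffmpeg argument list for HLS output with one addition that reflects a common practice rather than a requirement: it sets the H.264 keyframe interval (`-g`) to frame rate times segment length and disables scene-cut keyframes (`-sc_threshold 0`), so each segment can start on a regularly spaced keyframe. The `hls_ffmpeg_args` helper, the 30 fps frame rate, and the file names are assumptions.

```python
def hls_ffmpeg_args(src, playlist, segment_seconds=10, fps=30):
    """Build ffmpeg arguments that encode src to H.264/AAC and segment it for HLS.

    fps must match the source frame rate for the keyframe spacing to line up.
    """
    gop = fps * segment_seconds  # one keyframe per segment duration
    return [
        "ffmpeg", "-i", src,
        "-c:v", "libx264",
        "-g", str(gop),
        "-sc_threshold", "0",  # no extra keyframes on scene cuts
        "-c:a", "aac",
        "-f", "hls",
        "-hls_time", str(segment_seconds),
        "-hls_playlist_type", "vod",
        playlist,
    ]


if __name__ == "__main__":
    print(" ".join(hls_ffmpeg_args("input.mp4", "output.m3u8")))
```

On a machine with ffmpeg installed, the list can be passed directly to `subprocess.run(args, check=True)`, which avoids shell-quoting problems with file names.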

Example ffmpeg Command for Segmenting Video

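```shell
ffmpeg -i input.mp4 -c:v libx264 -c:a aac -f hls -hls_time 10 -hls_playlist_type vod output.m3u8
```

This command encodes input.mp4 to H.264 video and AAC audio and segments it into chunks of roughly 10 seconds for HLS delivery. Because ffmpeg can only start a new segment at a keyframe, actual segment durations depend on the encoder's keyframe interval.

Choosing the Right Protocol for Streaming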

Selecting a protocol for streaming depends on several factors, including:

- Latency: Required speed of delivery (e.g., live sports vs. VOD)

- Scalability: Expected audience size and distribution

- Device Compatibility: Target devices and platforms

- Security: Need for encryption and DRM support

Comparative Table of Streaming Protocols

| Protocol | Typical Latency | Compatibility | Security | Typical Use Cases |
|---|---|---|---|---|
| HLS | 5-30 sec | Very High | DRM, AES | OTT, VOD, Live Events |
| RTMP | <5 sec | Low (no native HTML5 playback) | Basic | Ingest, Live Production |
| SRT | <2 sec | Medium | Strong (AES) | Remote Production, Contribution Links |
| MPEG-DASH | 3-10 sec | High | DRM, AES | Web, VOD, Live |
| WebRTC | <1 sec | Medium | DTLS, SRTP | Conferencing, Gaming |
| RTSP | <2 sec | Low | Basic | Surveillance, Cameras |
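As a rough first-pass filter, the table can be encoded as data. The sketch below conservatively uses the upper end of each latency range (in seconds) and adds a simplified flag for whether a standard browser can play the protocol without plugins (true for HLS and MPEG-DASH through native support or Media Source Extensions players, and for WebRTC). The `shortlist` helper and these flags are assumptions for illustration; the final choice still depends on scalability, security, and device targets.

```python
# Upper latency bounds (seconds) from the comparison table, plus a simplified
# flag for plugin-free playback in a standard web browser.
PROTOCOLS = {
    "HLS": {"max_latency_s": 30, "browser_playback": True},
    "RTMP": {"max_latency_s": 5, "browser_playback": False},
    "SRT": {"max_latency_s": 2, "browser_playback": False},
    "MPEG-DASH": {"max_latency_s": 10, "browser_playback": True},
    "WebRTC": {"max_latency_s": 1, "browser_playback": True},
    "RTSP": {"max_latency_s": 2, "browser_playback": False},
}


def shortlist(latency_budget_s, need_browser_playback):
    """Protocols whose worst-case table latency fits the budget, lowest latency first."""
    fits = [
        name
        for name, props in PROTOCOLS.items()
        if props["max_latency_s"] <= latency_budget_s
        and (props["browser_playback"] or not need_browser_playback)
    ]
    return sorted(fits, key=lambda name: PROTOCOLS[name]["max_latency_s"])


if __name__ == "__main__":
    print(shortlist(1, True))
    print(shortlist(10, True))
```

For example, `shortlist(1, True)` returns only WebRTC, while relaxing the budget to 10 seconds adds MPEG-DASH.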

Implementing Protocols for Streaming: Practical Guide

To implement an HTTP-based streaming protocol such as HLS or MPEG-DASH, follow these steps:

- Encoding: Use an encoder (e.g., ffmpeg) to compress your media.

- Segmenting: Split media into segments (e.g., .ts files for HLS).

- Hosting: Store segments and playlist files on a web server or CDN.

- Player Integration: Use a compatible player (e.g., hls.js, Shaka Player).

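```html
<!-- HTML5 video tag for HLS playback. This plays natively only in browsers
     with built-in HLS support (e.g., Safari); elsewhere, attach the stream
     with an MSE-based player such as hls.js. -->
<video controls>
  <source src="https://example.com/stream/output.m3u8" type="application/vnd.apple.mpegurl">
  Your browser does not support the video tag.
</video>
```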
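Browsers fetch the playlist and segments over plain HTTP, so for local testing any static file server works as long as it sends sensible MIME types; players such as hls.js also need a CORS header when the page and the stream come from different origins. The sketch below uses Python's standard `http.server` module and assumes the HLS output sits in the directory being served. It is a development aid only, not a production origin or CDN.

```python
import functools
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer


class HLSRequestHandler(SimpleHTTPRequestHandler):
    """Static file handler with explicit HLS MIME types and a permissive CORS header."""

    # Map HLS extensions explicitly; system MIME tables do not always map
    # .ts to MPEG-TS.
    extensions_map = {
        **SimpleHTTPRequestHandler.extensions_map,
        ".m3u8": "application/vnd.apple.mpegurl",
        ".ts": "video/mp2t",
    }

    def end_headers(self):
        # Let players on other local origins (e.g. another port) fetch the stream.
        self.send_header("Access-Control-Allow-Origin", "*")
        super().end_headers()


def serve(directory=".", port=8000):
    """Serve directory over HTTP until interrupted (blocks the caller)."""
    handler = functools.partial(HLSRequestHandler, directory=directory)
    with ThreadingHTTPServer(("", port), handler) as httpd:
        print(f"Serving {directory} at http://localhost:{port}/")
        httpd.serve_forever()
```

Calling `serve("hls_output")` (a hypothetical output directory) would expose the playlist at `http://localhost:8000/output.m3u8` for a page using hls.js.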

Open-source tools like Bento4 and ffmpeg simplify encoding, segmenting, and packaging workflows. For testing, tools like hls.js and Shaka Player provide browser-based playback.

Security and Optimization in Protocols for Streaming

Security is paramount in any streaming deployment. Protect content with encryption, such as AES-128 segment encryption in HLS, or with a DRM system such as Widevine. Adaptive bitrate streaming keeps playback smooth across varying network conditions. Regularly monitor streams for latency, buffering, and failures using analytics and troubleshooting tools.
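For HLS's AES-128 method specifically, the playlist announces the key with an `EXT-X-KEY` tag. RFC 8216 states that when the tag has no `IV` attribute, each segment's media sequence number, written as a big-endian 128-bit value, serves as the IV. The sketch below derives that IV and formats the tag. The key URI is hypothetical, and the AES-128-CBC encryption of the segments themselves (usually done by the packager) is not shown.

```python
def default_iv(media_sequence):
    """IV used when EXT-X-KEY has no IV attribute (RFC 8216, section 5.2):
    the segment's media sequence number as a 16-byte big-endian integer."""
    return media_sequence.to_bytes(16, "big")


def ext_x_key(key_uri, iv=None):
    """Format an EXT-X-KEY tag for METHOD=AES-128; pass a 16-byte iv to make it explicit."""
    tag = f'#EXT-X-KEY:METHOD=AES-128,URI="{key_uri}"'
    if iv is not None:
        if len(iv) != 16:
            raise ValueError("an AES-128 IV must be 16 bytes")
        # The spec's hexadecimal-sequence uses 0x followed by 0-9 and A-F.
        tag += f",IV=0x{iv.hex().upper()}"
    return tag


if __name__ == "__main__":
    print(ext_x_key("https://keys.example.com/key1"))
    print(ext_x_key("https://keys.example.com/key1", default_iv(5)))
```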

Future Trends in Protocols for Streaming

Looking beyond 2025, streaming protocols are evolving to support AI-driven encoding, ultra-low latency, immersive 360°/VR content, and cloud-native workflows. Expect increasing adoption of open, interoperable standards and tighter integration with edge computing for global scalability.

Conclusion

Choosing the right protocol for streaming is essential for delivering high-quality, secure, and scalable video experiences. By understanding each protocol's strengths, architecture, and implementation nuances, developers can optimize for latency, compatibility, and future trends. Select wisely based on your project's needs in 2025, and stay updated as streaming technology continues to advance.


FAQ