Introduction to LLM for Voice Chatbot

Large Language Models (LLMs) have reshaped artificial intelligence by enabling machines to understand, generate, and reason over human language far more capably than earlier systems. Combined with rapid progress in voice AI and conversational AI, LLMs have made voice chatbots a practical tool for businesses in 2025. These chatbots combine speech recognition, natural language processing, and text-to-speech to deliver human-like, context-aware interactions at scale. Demand for real-time, multilingual voice agents that can engage customers, automate tasks, and improve productivity has driven adoption of LLM-powered voice chatbots across industries.

What is an LLM for Voice Chatbot?

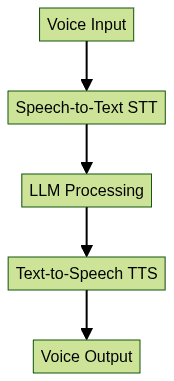

An LLM (Large Language Model) is a neural network trained on massive datasets to understand and generate human-like text. When used in a voice chatbot, the LLM processes transcribed speech, interprets intent, and generates context-aware responses. The core technologies in an LLM-powered voice chatbot are:

- Speech-to-Text (STT): Converts spoken input into text.

- Large Language Model (LLM): Interprets the transcribed text and generates a response.

- Text-to-Speech (TTS): Translates the LLM's response back into natural-sounding voice.

- Agentic AI: Enables autonomous, decision-making behavior.

The integration of these components transforms voice chatbots from rigid scripts to dynamic, conversational agents that can handle complex scenarios, manage context, and express emotion. For developers looking to build such solutions, leveraging a robust Voice SDK can significantly streamline the process of integrating real-time audio capabilities.
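The way these components hand off to one another can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: `VoiceAgent`, `handle_turn`, and the three stub functions are hypothetical names, the `max_messages` value is an assumption, and the stubs stand in for real STT, LLM, and TTS services so the example runs without any API keys.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Message = Dict[str, str]


@dataclass
class VoiceAgent:
    """Wires STT -> LLM -> TTS together and keeps the conversation history."""
    stt: Callable[[bytes], str]            # audio in, transcript out
    llm: Callable[[List[Message]], str]    # chat history in, reply text out
    tts: Callable[[str], bytes]            # reply text in, audio out
    max_messages: int = 20                 # cap on remembered messages (assumed value)
    history: List[Message] = field(default_factory=list)

    def handle_turn(self, audio: bytes) -> bytes:
        user_text = self.stt(audio)
        self.history.append({"role": "user", "content": user_text})
        reply = self.llm(self.history)
        self.history.append({"role": "assistant", "content": reply})
        # Drop the oldest messages so the prompt stays bounded.
        del self.history[:-self.max_messages]
        return self.tts(reply)


# Stand-in components (hypothetical); swap these for real service calls.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(messages: List[Message]) -> str:
    return f"You said: {messages[-1]['content']} (turn {len(messages) // 2 + 1})"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


agent = VoiceAgent(stt=fake_stt, llm=fake_llm, tts=fake_tts)
first = agent.handle_turn(b"hello")
second = agent.handle_turn(b"book a table")
print(first.decode(), "|", second.decode())
```

Because each stage is just a callable, a real STT, LLM, or TTS client can replace a stub without changing `handle_turn`, and the shared `history` list is what lets the model see earlier turns.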

Core Components of a Voice Chatbot using LLM

Speech-to-Text (STT)

STT engines, such as Google Speech-to-Text and Azure Cognitive Services, convert spoken language into text data. This step is critical for capturing user input with high accuracy, supporting multilingual conversations, and providing a seamless voice experience. The quality of STT directly impacts the overall performance of an LLM-powered voice chatbot. Integrating a phone call API can further enhance the ability to capture and process voice input from various channels, including traditional telephony.

Large Language Model (LLM) Processing

After STT, the transcribed text is processed by an LLM, such as OpenAI's GPT models, Google's PaLM, or open-source models like Llama. The LLM interprets user intent, maintains conversation context, and generates relevant, natural responses. Its deep understanding of language enables context-aware, emotionally intelligent, and human-like dialog. For those developing in Python, a Python video and audio calling SDK can facilitate seamless integration of audio and video features alongside LLM processing.

Text-to-Speech (TTS)

The final step is converting the LLM's textual response back into voice using TTS engines like Amazon Polly, Google Cloud TTS, or ElevenLabs. Modern TTS solutions deliver expressive, lifelike speech across multiple languages, making the chatbot sound more human and engaging. If you're building web-based applications, a JavaScript video and audio calling SDK can help you implement real-time communication features efficiently.

Example: Integrating LLM with STT and TTS APIs in Python

```python
import speech_recognition as sr
import pyttsx3
from openai import OpenAI

# The client reads the API key from the OPENAI_API_KEY environment variable
client = OpenAI()

# STT: capture audio from the default microphone and transcribe it
recognizer = sr.Recognizer()
with sr.Microphone() as source:
    print("Say something...")
    audio = recognizer.listen(source)
text = recognizer.recognize_google(audio)

# LLM: generate a response to the transcribed text
response = client.chat.completions.create(
    model="gpt-4",
    messages=[{"role": "user", "content": text}],
)
llm_reply = response.choices[0].message.content

# TTS: speak the response aloud
engine = pyttsx3.init()
engine.say(llm_reply)
engine.runAndWait()
```

Key Features of Modern LLM-powered Voice Chatbots

Real-time Multilingual Support

Today's LLM-powered voice chatbot platforms leverage multilingual LLMs and advanced STT/TTS engines to support dozens of languages and dialects in real time. This empowers businesses to engage a global audience, automate customer interactions across geographies, and deliver consistent experiences regardless of language barriers. Leveraging a Voice SDK can make it easier to deploy scalable, real-time multilingual voice solutions.

Expressive Human-like Emotions

Modern LLMs and TTS engines can detect and express a range of emotions, from empathy to excitement, enabling voice chatbots to build rapport, de-escalate customer frustration, and hold more engaging, human-like conversations. Emotion recognition and synthesis help interactions feel authentic and personalized.
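On the synthesis side, one common way to convey emotion is to wrap the reply in SSML `<prosody>` markup before sending it to a TTS engine that accepts SSML. The sketch below is illustrative only: the emotion labels and the rate and pitch values in `PROSODY_BY_EMOTION` are assumptions chosen for the example, `to_ssml` is a hypothetical helper, and the attribute values a given TTS vendor supports may differ.

```python
from xml.sax.saxutils import escape, quoteattr

# Assumed mapping from a detected emotion label to SSML prosody settings.
PROSODY_BY_EMOTION = {
    "neutral": {"rate": "medium", "pitch": "medium"},
    "empathetic": {"rate": "slow", "pitch": "low"},
    "excited": {"rate": "fast", "pitch": "high"},
}


def to_ssml(text: str, emotion: str = "neutral") -> str:
    """Wrap text in an SSML <prosody> element for the given emotion."""
    # Unknown labels fall back to neutral delivery.
    prosody = PROSODY_BY_EMOTION.get(emotion, PROSODY_BY_EMOTION["neutral"])
    attrs = " ".join(f"{name}={quoteattr(value)}" for name, value in prosody.items())
    # escape() keeps &, < and > in the reply from breaking the markup.
    return f"<speak><prosody {attrs}>{escape(text)}</prosody></speak>"


print(to_ssml("I'm sorry about the delay & the confusion.", "empathetic"))
```

The emotion label itself would come from an upstream step, such as a classifier or the LLM's own output, which is outside the scope of this sketch.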

Contextual Memory and Persistent Sessions

An effective LLM-powered voice chatbot maintains contextual memory, tracking user preferences, conversation history, and intent across multiple turns and sessions. This persistent context enables the bot to deliver relevant, personalized responses and ensures a seamless experience, even as users switch channels or revisit topics. For businesses managing high call volumes, integrating a phone call API can help maintain session continuity across different communication platforms.

Popular Use Cases for LLM Voice Chatbots

Customer Support Automation

Enterprises deploy LLM-powered voice chatbots to provide 24/7 customer support, answer FAQs, resolve issues, and handle routine queries. These bots can escalate complex cases to human agents and integrate with CRMs, reducing wait times and improving customer satisfaction. Utilizing a Voice SDK allows organizations to easily add live voice support to their chatbot systems.

Sales and Lead Qualification

Voice chatbots powered by LLMs can autonomously conduct outbound sales calls, qualify leads, and capture customer intent. By integrating with sales platforms, these bots automate prospecting, manage follow-ups, and improve conversion rates, all while delivering a natural, conversational experience. For teams looking to enhance their communication stack, a Video Calling API can provide high-quality video and audio interactions alongside voice chatbots.

Scheduling, Booking, and Productivity Tasks

Businesses use LLM-powered voice chatbot platforms to automate appointment scheduling, booking, reminders, and workflow management. These bots can access calendars, send notifications, and coordinate tasks, freeing up valuable time for both customers and staff. Developers can further enrich these solutions by integrating a Voice SDK for seamless voice interactions.

How to Implement an LLM for Your Voice Chatbot

Choosing the Right LLM and Voice Tech Stack

When building an LLM-powered voice chatbot, evaluate LLMs (e.g., OpenAI GPT-4, Google Gemini, Anthropic Claude) alongside compatible STT/TTS engines. Consider factors like language support, latency, API pricing, and ease of integration. For enterprise-grade deployments, prioritize solutions with strong security and compliance certifications.

Integration Steps and APIs

A typical LLM voice chatbot integration involves:

- Capturing user audio

- Transcribing with STT

- Processing with LLM

- Synthesizing response with TTS

- Streaming output to user
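The last step, streaming, matters because waiting for the full LLM reply before synthesizing adds noticeable delay. One common approach is to cut the model's output stream into sentences and hand each one to TTS as soon as it is complete. The sketch below assumes the LLM yields text fragments; `sentences_from_stream` and `fake_token_stream` are hypothetical names, and the punctuation-based splitting rule is deliberately naive.

```python
import re
from typing import Iterable, Iterator

# A sentence ends at ., ! or ? followed by whitespace.
# Naive rule: abbreviations such as "Dr. Smith" will be split too.
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentences_from_stream(fragments: Iterable[str]) -> Iterator[str]:
    """Yield complete sentences as soon as they appear in a stream of text fragments."""
    buffer = ""
    for fragment in fragments:
        buffer += fragment
        parts = _SENTENCE_END.split(buffer)
        # Everything except the last part is a finished sentence.
        for sentence in parts[:-1]:
            yield sentence
        buffer = parts[-1]
    if buffer.strip():
        yield buffer.strip()  # flush whatever remains when the stream ends


def fake_token_stream() -> Iterator[str]:
    # Stand-in for a streaming LLM response.
    yield from ["Your order ", "has shipped. It ", "arrives Friday! ", "Anything else?"]


for sentence in sentences_from_stream(fake_token_stream()):
    print("-> TTS:", sentence)
```

With this shape, the first sentence can be synthesized and played while the model is still generating the rest of the reply.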

Example: LLM Voice Chatbot API Integration in Node.js

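```javascript
const OpenAI = require('openai');
const speech = require('@google-cloud/speech');
const textToSpeech = require('@google-cloud/text-to-speech');

// Clients read credentials from the environment
// (OPENAI_API_KEY and GOOGLE_APPLICATION_CREDENTIALS).
const openai = new OpenAI();
const sttClient = new speech.SpeechClient();
const ttsClient = new textToSpeech.TextToSpeechClient();

// Simplified sketch: inputAudio is assumed to be a Buffer of
// 16 kHz LINEAR16 (PCM) audio; adjust `config` to match your source.
async function processVoice(inputAudio) {
  // STT
  const [sttResponse] = await sttClient.recognize({
    config: {encoding: 'LINEAR16', sampleRateHertz: 16000, languageCode: 'en-US'},
    audio: {content: inputAudio.toString('base64')},
  });
  const userText = sttResponse.results[0].alternatives[0].transcript;

  // LLM
  const gptResponse = await openai.chat.completions.create({
    model: 'gpt-4',
    messages: [{role: 'user', content: userText}],
  });
  const llmReply = gptResponse.choices[0].message.content;

  // TTS
  const [ttsResponse] = await ttsClient.synthesizeSpeech({
    input: {text: llmReply},
    voice: {languageCode: 'en-US'},
    audioConfig: {audioEncoding: 'MP3'},
  });
  return ttsResponse.audioContent;
}
```

No-Code and Low-Code Options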

Platforms like Voiceflow, Twilio Studio, and Google Dialogflow offer no-code and low-code environments for building LLM-powered voice chatbots. These tools allow teams to design conversational flows, connect LLMs through drag-and-drop integrations, and deploy bots across phone, web, and mobile channels without extensive coding. For those seeking to add live audio functionality without heavy development, a Voice SDK can be a valuable addition to these platforms.

Security and Compliance Considerations

When deploying LLM-powered voice chatbot systems, ensure strict data privacy controls, encryption, and compliance with applicable regulations and standards such as GDPR, HIPAA, or SOC 2. Choose vendors that provide robust security, audit logging, and enterprise-grade support.
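As one concrete privacy control, transcripts can be scrubbed of obvious personal data before they reach logs or analytics. The sketch below is a simplified illustration, not a compliance solution: `redact_transcript` is a hypothetical helper, and the two regular expressions are assumptions that catch only common email and phone formats.

```python
import re

# Assumed patterns; real deployments need broader, locale-aware detection.
_EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
_PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_transcript(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = _EMAIL.sub("[EMAIL]", text)
    return _PHONE.sub("[PHONE]", text)


print(redact_transcript("Call me at +1 (555) 010-9999 or mail jane.doe@example.com."))
```

Redaction of this kind complements, rather than replaces, encryption at rest and in transit and explicit user consent for storing audio.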

Challenges and Best Practices

Building and scaling an LLM-powered voice chatbot brings unique challenges. Scalability requires robust cloud infrastructure to handle spikes in traffic and real-time processing. Achieving high accuracy and low latency demands careful optimization of the STT, LLM, and TTS stages, since their delays add up on every turn. Handling multilingual conversations and emotional nuances requires fine-tuning models and leveraging multilingual or multimodal LLMs. Data privacy must be prioritized, with strict controls over voice and audio storage and user consent. Best practices include continuous monitoring, user feedback loops, and regular model updates to ensure safe, reliable, and engaging conversational experiences.
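For the monitoring side of these practices, a useful first step is timing each pipeline stage separately so that latency regressions can be attributed to STT, the LLM, or TTS. The sketch below uses only the standard library; `StageTimer` and the stage names are hypothetical, and the `time.sleep` calls merely simulate work.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class StageTimer:
    """Collects wall-clock durations (in seconds) per named pipeline stage."""

    def __init__(self):
        self.samples = defaultdict(list)

    @contextmanager
    def measure(self, stage: str):
        start = time.perf_counter()
        try:
            yield
        finally:
            # Record the duration even if the stage raised an exception.
            self.samples[stage].append(time.perf_counter() - start)

    def report(self) -> dict:
        # Average duration per stage, in milliseconds.
        return {s: 1000 * sum(v) / len(v) for s, v in self.samples.items()}


timer = StageTimer()
for _ in range(3):
    with timer.measure("stt"):
        time.sleep(0.01)   # simulated transcription
    with timer.measure("llm"):
        time.sleep(0.02)   # simulated generation
    with timer.measure("tts"):
        time.sleep(0.01)   # simulated synthesis

for stage, avg_ms in timer.report().items():
    print(f"{stage}: {avg_ms:.1f} ms average")
```

In production, the same measurements would typically be exported to a metrics system and tracked as percentiles rather than averages, since occasional slow turns are what users notice.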

Future Trends in LLM Voice Chatbots

Looking ahead to 2025 and beyond, LLM voice chatbot technology will increasingly feature multimodal reasoning, integrating voice, vision, and context for richer interactions. Agentic AI will enable bots to autonomously complete tasks, adapt to user preferences, and drive productivity gains across industries. Ongoing advancements in LLM architectures, STT/TTS fidelity, and security will unlock new possibilities for enterprise voice AI.

Conclusion

LLM-powered voice chatbots are redefining conversational AI in 2025, enabling smarter, more natural, and scalable voice interactions. As enterprises embrace these innovations, the future of customer engagement and automation is here.


FAQ