OpenAI Speech to Transcription: The Ultimate Guide (2025)

Introduction to OpenAI Speech to Transcription

OpenAI continues to set the standard in speech recognition and audio transcription with its advanced speech-to-text (STT) solutions. Leveraging state-of-the-art models like Whisper ASR and GPT-4o-transcribe, OpenAI enables developers to convert spoken language into accurate, readable text across a variety of platforms and use cases.

In 2025, the demand for reliable, real-time transcription has never been higher. From virtual meetings to live captioning and multilingual accessibility, accurate speech-to-text is vital in modern software workflows. This guide covers everything developers need to know about OpenAI speech to transcription: the technology stack, comparing methods, real-world integration, best practices, and limitations—complete with hands-on code and diagrams.

How OpenAI Speech to Transcription Works

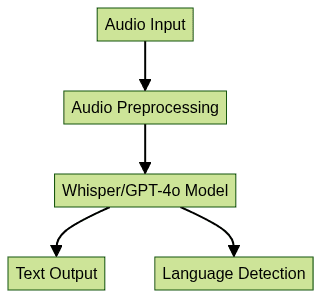

The Technology Behind OpenAI's Speech-to-Text

OpenAI’s speech-to-text pipeline is built on powerful neural architectures. Whisper ASR is a multilingual, multitask model designed for both transcription and translation. GPT-4o-transcribe, together with the smaller GPT-4o-mini-transcribe, is a newer speech-to-text model built on GPT-4o, which OpenAI reports achieves lower word error rates than Whisper.

These models combine large-scale supervised training on massive multilingual datasets with transformer architectures to achieve state-of-the-art speech recognition. Whisper, for example, was trained on 680,000 hours of multilingual and multitask supervised data collected from the web. For developers looking to add interactive audio features, integrating a Voice SDK can further enhance real-time audio experiences in your applications.

Key Features and Capabilities

- Multilingual transcription: Whisper and GPT-4o-transcribe support dozens of languages, enabling global accessibility.

- Real-time and batch modes: Developers can choose between streaming (for live captions) and file upload (for post-processing) depending on latency and throughput needs.

- Translation support: The translations endpoint transcribes speech in a supported language and returns English text, expanding the reach of your applications.

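- Turn detection and session management: The real-time API can detect when a speaker stops talking and manages long-running streaming sessions, which is essential for meetings and real-time collaboration.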

- For advanced communication features, consider exploring a Video Calling API to enable seamless video and audio interactions alongside transcription.

Setting Up OpenAI Speech to Transcription

Prerequisites and Environment Setup

Before you begin, ensure you have:

- An OpenAI API key (sign up)

- Python 3.8+ installed

- Pip for package management

- Basic knowledge of Python

If your project requires robust audio and video communication, you might also want to try a Python video and audio calling SDK to streamline integration with your Python applications.

Create and activate a virtual environment for best isolation:

```bash
python3 -m venv venv
source venv/bin/activate
```

Installation Steps

Install the necessary OpenAI packages and audio dependencies:

```bash
pip install openai-whisper openai "openai-agents[voice]" websockets sounddevice numpy
```

For real-time audio capture on Windows, you may need extra dependencies:

```bash
pip install pyaudio
```

Authentication and Basic Usage

Set your OpenAI API key as an environment variable:

```bash
export OPENAI_API_KEY="sk-..."
```

Basic transcription of an audio file with Whisper:

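```python
import os

from openai import OpenAI

# openai>=1.0 client interface; the older openai.Audio.transcribe helper was removed in 1.0
client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

with open("audio_sample.wav", "rb") as audio_file:
    transcript = client.audio.transcriptions.create(model="whisper-1", file=audio_file)

print(transcript.text)
```

Comparing OpenAI Speech Transcription Methods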

File Upload Mode

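OpenAI’s API allows transcription via file upload. Two modes are available:

- stream=True: Returns transcript text incrementally as it is produced, so you can show partial results before the whole file is processed. Streaming works with gpt-4o-transcribe and gpt-4o-mini-transcribe; it is not supported for whisper-1.

- stream=False: Returns the transcription only when processing is complete.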

1response = openai.Audio.transcribe(

2 model=\"whisper-1\",

3 file=open(\"audio_sample.wav\", 'rb'),

4 stream=True

5)

6for chunk in response:

7 print(chunk['text'])

8For developers building telephony or customer support solutions, integrating a

phone call api

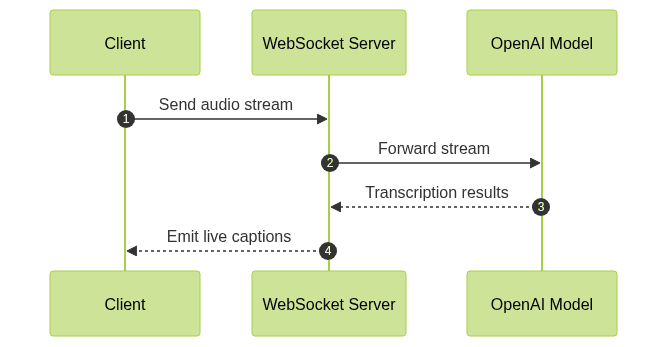

can help you manage and transcribe calls efficiently alongside OpenAI's transcription capabilities.Real-Time WebSocket Transcription

OpenAI’s Realtime API provides a WebSocket interface for real-time, low-latency transcription, ideal for live captioning and interactive apps. Features include turn detection and session management.

- Live captions: Results streamed back in near real-time.

- Session limits: Each session has a maximum duration, so long recordings need reconnect logic; check OpenAI's documentation for the current limit.

- Turn detection: The API can use voice activity detection (VAD) to decide when a speaker has finished talking and commit that audio for transcription.

- For live audio events and broadcasts, you can leverage a Live Streaming API SDK to deliver interactive, scalable streaming experiences with integrated transcription.
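Turn detection in the real-time API happens on OpenAI's servers, and this guide does not describe its internals. To illustrate the general idea only, the sketch below swaps in a toy client-side detector based on frame energy: a turn ends once the audio stays quiet for a set duration after some speech. The function name, threshold, and frame sizes are illustrative assumptions, not OpenAI settings.

```python
import numpy as np


def detect_turn_ends(samples, samplerate, frame_ms=20, threshold=0.02, silence_ms=500):
    """Toy energy-based VAD (hypothetical helper): return sample offsets where a turn ends.

    A turn ends once `silence_ms` of consecutive frames fall below `threshold` RMS
    after at least one voiced frame. All values here are illustrative.
    """
    frame_len = int(samplerate * frame_ms / 1000)
    frames_needed = max(1, silence_ms // frame_ms)  # quiet frames that close a turn
    ends, in_speech, quiet_run = [], False, 0
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        rms = float(np.sqrt(np.mean(frame ** 2)))
        if rms >= threshold:
            in_speech, quiet_run = True, 0  # voiced frame: (re)open the turn
        elif in_speech:
            quiet_run += 1
            if quiet_run == frames_needed:
                ends.append(start + frame_len)  # turn ends after the silence window
                in_speech, quiet_run = False, 0
    return ends


sr = 16000


def tone(n):
    """n samples of a 440 Hz sine at amplitude 0.5, standing in for speech."""
    return 0.5 * np.sin(2 * np.pi * 440 * np.arange(n) / sr)


# 0.5 s speech, 1.0 s silence, 0.3 s speech, 0.6 s silence
audio = np.concatenate([tone(8000), np.zeros(16000), tone(4800), np.zeros(9600)])
print(detect_turn_ends(audio, sr))  # [16000, 36800]
```

A fixed energy threshold breaks down with background noise, which is one reason production systems often rely on trained VAD models instead.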

Using Agents SDK VoicePipeline

OpenAI’s Agents SDK offers a VoicePipeline for integrating speech transcription directly into agent workflows. As of 2025, this feature is Python-only and best suited for advanced, agent-driven applications. If you want to add live audio room capabilities, integrating a Voice SDK can provide flexible options for real-time voice communication.

Implementing Real-Time Speech to Transcription with OpenAI

Capturing and Streaming Audio

To transcribe live audio, capture microphone input and stream it to the API. The real-time API expects raw audio (by default 16-bit PCM, 24 kHz, mono), while file uploads accept formats such as WAV, MP3, FLAC, and OGG.

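```python
import queue

import sounddevice as sd

q = queue.Queue()

def callback(indata, frames, time, status):
    # Runs on the audio thread: report over/underflows, then hand a copy of the block to q
    if status:
        print(status)
    q.put(indata.copy())

# 24 kHz mono float32; converted to 16-bit PCM before sending to the real-time API
stream = sd.InputStream(samplerate=24000, channels=1, dtype="float32", callback=callback)
stream.start()
# Audio chunks are now in q; stream them to the OpenAI API
```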
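The callback receives audio in small blocks whose size depends on the device, while streaming works best with chunks of a predictable length (the sending guidance below suggests about one second). A minimal sketch of regrouping blocks into fixed-size chunks, assuming mono float32 input; Rechunker is a hypothetical helper, not part of sounddevice or the OpenAI SDK.

```python
from typing import List

import numpy as np


class Rechunker:
    """Hypothetical helper: regroups variable-size audio blocks into fixed-size chunks."""

    def __init__(self, samplerate: int, chunk_seconds: float = 1.0):
        self.chunk_size = int(samplerate * chunk_seconds)  # samples per output chunk
        self._pending = np.zeros(0, dtype=np.float32)

    def push(self, block: np.ndarray) -> List[np.ndarray]:
        """Append one block, shape (n,) or (n, 1), and return every complete chunk."""
        flat = block.reshape(-1).astype(np.float32)
        self._pending = np.concatenate([self._pending, flat])
        chunks = []
        while len(self._pending) >= self.chunk_size:
            chunks.append(self._pending[:self.chunk_size])
            self._pending = self._pending[self.chunk_size:]
        return chunks

    def flush(self) -> np.ndarray:
        """Return the leftover samples (less than one chunk) and reset."""
        rest, self._pending = self._pending, np.zeros(0, dtype=np.float32)
        return rest


# Blocks of 1,000 samples at 24 kHz regrouped into 1 s chunks
rechunker = Rechunker(samplerate=24000)
out = []
for _ in range(50):
    out.extend(rechunker.push(np.zeros((1000, 1), dtype=np.float32)))
print(len(out), len(rechunker.flush()))  # 2 2000
```

In a capture loop you would call rechunker.push(q.get()), send each returned chunk, and send whatever flush() returns when recording stops.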

For group calls or collaborative environments, integrating a Voice SDK can help manage multiple participants and enhance your app's real-time audio capabilities.

Integrating with WebSocket API

Async code lets you send audio and receive transcription results concurrently, without one blocking the other.

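```python
import asyncio, base64, json, os
import websockets  # additional_headers= needs websockets>=14 (older releases: extra_headers=)

URI = "wss://api.openai.com/v1/realtime?intent=transcription"  # assumption: beta interface
HEADERS = {"Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}", "OpenAI-Beta": "realtime=v1"}

async def send_audio(ws):
    # Choose the transcription model, then stream captured audio as base64 16-bit PCM
    await ws.send(json.dumps({"type": "transcription_session.update",
                              "session": {"input_audio_transcription": {"model": "gpt-4o-transcribe"}}}))
    while True:
        chunk = await asyncio.to_thread(q.get)  # q from the capture step; don't block the loop
        pcm16 = (chunk * 32767).astype("int16").tobytes()  # float32 in [-1, 1] -> int16
        await ws.send(json.dumps({"type": "input_audio_buffer.append", "audio": base64.b64encode(pcm16).decode()}))

async def main():
    async with websockets.connect(URI, additional_headers=HEADERS) as ws:
        sender = asyncio.create_task(send_audio(ws))  # keep a reference so the task survives
        async for message in ws:  # results arrive independently of each send
            event = json.loads(message)
            if event["type"] == "conversation.item.input_audio_transcription.delta":
                print("Partial transcription:", event["delta"])

asyncio.run(main())
```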

- Sending: Stream small audio chunks (~1s) for lower latency.

- Receiving: Listen for incremental transcription results.

- For voice-enabled applications, a Voice SDK can simplify the process of capturing and transmitting audio streams in real time.
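On the receiving side, incremental results have to be stitched together into per-utterance text. A minimal sketch, assuming the real-time API's transcription events conversation.item.input_audio_transcription.delta (partial text in delta) and conversation.item.input_audio_transcription.completed (final text in transcript), both carrying an item_id; check these names against the current API reference. TranscriptAssembler is a hypothetical helper.

```python
import json


class TranscriptAssembler:
    """Hypothetical helper: merges streamed transcription events into final text per item."""

    # Assumed event names; verify against the current Realtime API reference
    DELTA = "conversation.item.input_audio_transcription.delta"
    DONE = "conversation.item.input_audio_transcription.completed"

    def __init__(self):
        self.partial = {}  # item_id -> text received so far
        self.final = []    # completed transcripts, in completion order

    def handle(self, message: str):
        """Process one raw WebSocket message; return the final text if an item just completed."""
        event = json.loads(message)
        item_id = event.get("item_id")
        if event.get("type") == self.DELTA:
            self.partial[item_id] = self.partial.get(item_id, "") + event.get("delta", "")
        elif event.get("type") == self.DONE:
            # Prefer the server's final transcript; fall back to the concatenated deltas
            text = event.get("transcript", self.partial.get(item_id, ""))
            self.partial.pop(item_id, None)
            self.final.append(text)
            return text
        return None


assembler = TranscriptAssembler()
for msg in [
    '{"type": "conversation.item.input_audio_transcription.delta", "item_id": "a", "delta": "Hello"}',
    '{"type": "conversation.item.input_audio_transcription.delta", "item_id": "a", "delta": " world"}',
    '{"type": "conversation.item.input_audio_transcription.completed", "item_id": "a", "transcript": "Hello world."}',
]:
    assembler.handle(msg)
print(assembler.final)  # ['Hello world.']
```

Keying partial text by item_id keeps overlapping utterances apart if a new one starts before the previous one is finalized.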

Handling Results and Errors

Add user feedback and robust error handling to your pipeline.

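```python
import openai
from tqdm import tqdm  # pip install tqdm

# `response` is the streaming transcription from the file-upload example
try:
    for event in tqdm(response, desc="Transcription events"):
        if event.type == "transcript.text.delta":
            print(event.delta, end="", flush=True)
except openai.APIError as e:
    # Errors surface while iterating the stream, not when printing
    print(f"Error: {e}")
    # Attempt to reconnect or log error
```

- Handle API rate limits and session drops gracefully.

- Implement reconnect logic to resume transcription sessions.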

- If your use case involves phone-based communication, integrating a phone call API can help you automate call handling and transcription workflows.
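Rate-limit errors and dropped sessions are usually transient, so a common pattern is to retry with exponential backoff and a little random jitter. A minimal sketch; with_retries is a hypothetical helper and its delays are arbitrary defaults. The official openai Python client already retries some failed requests on its own (see its max_retries option), so a wrapper like this is most useful around your own reconnect logic, such as re-opening a WebSocket session. In practice you would pass retry_on=(openai.RateLimitError, openai.APIConnectionError) rather than retrying on every exception.

```python
import random
import time


def with_retries(fn, max_attempts=5, base_delay=1.0, max_delay=30.0,
                 retry_on=(Exception,), sleep=time.sleep):
    """Hypothetical helper: call fn(), retrying retryable errors with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))  # 1 s, 2 s, 4 s, ...
            sleep(delay + random.uniform(0, delay / 10))  # jitter spreads out retries


# Simulated session that drops twice before succeeding
calls = {"n": 0}

def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("session dropped")
    return "transcript"

delays = []  # record delays instead of actually sleeping
print(with_retries(flaky, sleep=delays.append), calls["n"])  # transcript 3
```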

Best Practices for OpenAI Speech to Transcription

- Chunk long audio: Split lengthy recordings into smaller segments for better performance and lower latency.

- Language detection: Let the model auto-detect the language, or set it explicitly with the language parameter (an ISO-639-1 code such as "en") for improved accuracy and latency.

- Improving accuracy: Use high-quality microphones, minimize background noise, and choose appropriate audio formats (16kHz mono WAV recommended).

- Security and privacy: Never log sensitive audio data. Secure API keys and use encrypted channels (HTTPS/WSS) for transmission.

- Monitor usage: Track API limits and monitor latency for optimal user experience.
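Chunking also keeps file uploads under the transcription endpoint's 25 MB per-file limit. A minimal sketch using only Python's standard-library wave module; it assumes uncompressed PCM WAV input, and split_wav is a hypothetical helper. Cutting at fixed times can split a word across two segments; cutting at pauses avoids that at the cost of more code.

```python
import io
import wave


def split_wav(data: bytes, seconds: float) -> list:
    """Hypothetical helper: split PCM WAV bytes into WAV segments of at most `seconds` each."""
    segments = []
    with wave.open(io.BytesIO(data), "rb") as src:
        params = src.getparams()
        frames_per_segment = int(params.framerate * seconds)
        while True:
            frames = src.readframes(frames_per_segment)
            if not frames:
                break
            out = io.BytesIO()
            with wave.open(out, "wb") as dst:  # copies the source format into each segment
                dst.setnchannels(params.nchannels)
                dst.setsampwidth(params.sampwidth)
                dst.setframerate(params.framerate)
                dst.writeframes(frames)
            segments.append(out.getvalue())
    return segments


# Build 2.5 s of 16 kHz mono 16-bit silence in memory, then split it into 1 s pieces
source = io.BytesIO()
with wave.open(source, "wb") as w:
    w.setnchannels(1)
    w.setsampwidth(2)
    w.setframerate(16000)
    w.writeframes(b"\x00\x00" * 40000)
parts = split_wav(source.getvalue(), seconds=1.0)
print(len(parts))  # 3
```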

Use Cases for OpenAI Speech to Transcription

OpenAI speech-to-text powers a range of real-world applications:

- Meeting transcription: Auto-generate meeting minutes, searchable notes, and summaries.

- Live captioning: Enhance accessibility for webinars, streams, and in-person events.

- Voice assistants: Enable hands-free commands and seamless voice interactions.

- Customer support: Analyze and log support calls for quality assurance and automation.

If you’re looking to build these features into your product, you can try it for free and explore the full capabilities of modern audio and video SDKs.

Limitations and Considerations

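- Audio format restrictions: File uploads accept a fixed set of formats (flac, mp3, mp4, mpeg, mpga, m4a, ogg, wav, webm) and are limited to 25 MB per file; the real-time API expects raw audio such as 16-bit PCM.

- Session timeouts: Real-time sessions have a maximum duration, so long-running captures need to reconnect and start a new session.

- API rate limits: Exceeding limits returns HTTP 429 errors; back off and retry rather than resending immediately.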

- Latency: Network and model latency may vary based on load and audio length.
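Checking a file before upload avoids a request that is bound to fail. A minimal sketch, assuming the format list and 25 MB limit from OpenAI's transcription API reference at the time of writing (verify both against current docs); check_upload is a hypothetical helper, and treating 25 MB as 25 * 1024 * 1024 bytes is an assumption.

```python
from pathlib import Path

# Assumption: formats and size limit as documented for the transcription endpoint
SUPPORTED_EXTENSIONS = {".flac", ".m4a", ".mp3", ".mp4", ".mpeg", ".mpga", ".ogg", ".wav", ".webm"}
MAX_UPLOAD_BYTES = 25 * 1024 * 1024  # assumed byte value of "25 MB"


def check_upload(path: str, size_bytes: int) -> list:
    """Hypothetical helper: list problems that would make an upload fail (empty means OK)."""
    problems = []
    ext = Path(path).suffix.lower()
    if ext not in SUPPORTED_EXTENSIONS:
        problems.append(f"unsupported format {ext or '(none)'}")
    if size_bytes > MAX_UPLOAD_BYTES:
        problems.append(f"file is {size_bytes / 1024 / 1024:.1f} MB; split it below 25 MB")
    return problems


print(check_upload("meeting.aiff", 30 * 1024 * 1024))
# ['unsupported format .aiff', 'file is 30.0 MB; split it below 25 MB']
```

Taking the size as an argument keeps the check independent of where the file lives; for a local file you would pass Path(path).stat().st_size.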

Conclusion

OpenAI speech to transcription in 2025 gives developers high accuracy and flexibility. By integrating the latest APIs and best practices, you can build robust, multilingual, real-time transcription pipelines for virtually any application. Get started today and unlock the power of voice in your software.


FAQ