How to Build an AI Voice Agent: The Ultimate 2024 Guide

Introduction

AI voice agents have rapidly evolved from simple voice assistants to sophisticated, context-aware systems capable of engaging in natural, multi-turn conversations. As the demand for seamless, hands-free, and intelligent digital interactions grows, learning how to build an AI voice agent has become a valuable skill for developers, engineers, and businesses alike. Today, organizations are leveraging these agents for everything from customer support to workflow automation and accessibility, making them a cornerstone of modern software solutions. This guide walks you through the essential technologies, architectures, and practical steps to build your own AI voice agent using the latest tools and frameworks.

What is an AI Voice Agent?

An AI voice agent is an intelligent software entity capable of understanding, processing, and responding to human speech in real time. Unlike traditional voice assistants that rely on predefined scripts and limited natural language processing (NLP), AI voice agents leverage advanced conversational AI, large language models (LLMs), and real-time speech technologies for deeper, context-aware interactions. They are designed to perform tasks, automate workflows, and serve as multimodal interfaces for applications. The distinction lies in their ability to maintain context, adapt to the user, and autonomously handle complex dialogues, a leap beyond classic voice assistant capabilities.

Why Build an AI Voice Agent?

Building an AI voice agent opens up transformative possibilities across industries. In accessibility, these agents enable hands-free computing for users with disabilities. In customer support, voicebots can handle high volumes of inquiries 24/7, providing personalized and efficient service. Hands-free AI interfaces are revolutionizing the automotive, healthcare, and smart home sectors. The opportunity for innovation is vast: from proactive agentic AI that anticipates user needs to multi-agent systems enabling agent-to-agent communication and workflow orchestration. By mastering how to build an AI voice agent, you position yourself at the forefront of conversational and agentic AI development. For developers looking to enable real-time voice interactions in their applications, integrating a Voice API can significantly streamline the process and enhance the user experience.

Key Technologies and Architectures to Build an AI Voice Agent

Speech-to-Text & Text-to-Speech

To build an AI voice agent, you need two foundational capabilities: converting spoken language into text (speech-to-text, STT) and converting text back into natural-sounding speech (text-to-speech, TTS). Modern tools deliver high accuracy and low latency. Examples include Vocode, an open-source Python library that connects STT, LLM, and TTS providers into a voice pipeline, and ElevenLabs for TTS. The OpenAI Voice API and Whisper models are leading choices for robust speech recognition, supporting multiple languages and accents. If you want to add live audio capabilities or build interactive audio rooms, leveraging a Voice API is a practical solution for scalable, real-time communication.

Speech-Native vs. Chained Approach

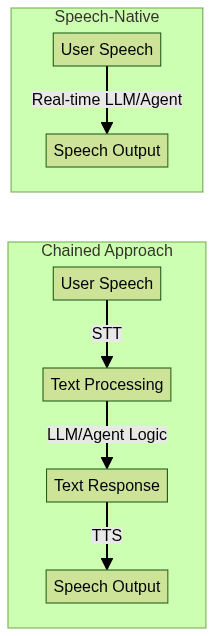

There are two primary architectural patterns when you build an AI voice agent:

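- Speech-Native Architecture: A speech-to-speech model takes audio in and produces audio out directly, without a separate text transcription step. The agent logic is optimized for real-time speech, which reduces latency.

- Chained Approach: Voice input is transcribed (STT), processed as text by the agent logic (typically an LLM), then synthesized back to speech (TTS). This design is easier to build and debug, since each stage can be swapped independently. However, every stage adds delay to each conversational turn.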
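The chained approach can be sketched as three plain Python callables run in sequence. This is a minimal sketch: the `ChainedVoicePipeline` class and the stand-in lambdas are hypothetical and not part of Vocode or any other library. Each stage is timed to show why chaining adds latency, since the stage times add up on every turn.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ChainedVoicePipeline:
    """Chained approach: STT -> agent logic -> TTS, with per-stage timing."""
    stt: Callable[[bytes], str]    # audio in, transcript out
    agent: Callable[[str], str]    # transcript in, reply text out
    tts: Callable[[str], bytes]    # reply text in, audio out
    timings: dict = field(default_factory=dict)

    def _timed(self, name, fn, arg):
        # Run one stage and record how long it took, in seconds
        start = time.perf_counter()
        result = fn(arg)
        self.timings[name] = time.perf_counter() - start
        return result

    def handle_turn(self, audio: bytes) -> bytes:
        text = self._timed("stt", self.stt, audio)
        reply = self._timed("agent", self.agent, text)
        return self._timed("tts", self.tts, reply)

    def total_latency(self) -> float:
        # In a chained pipeline, stage latencies add up for each turn
        return sum(self.timings.values())


# Hypothetical stand-ins; replace them with real STT, LLM, and TTS calls
pipeline = ChainedVoicePipeline(
    stt=lambda audio: audio.decode("utf-8"),
    agent=lambda text: f"You said: {text}",
    tts=lambda reply: reply.encode("utf-8"),
)
out = pipeline.handle_turn(b"hello")
print(out)                       # b'You said: hello'
print(sorted(pipeline.timings))  # ['agent', 'stt', 'tts']
```

Keeping each stage behind a simple callable makes it easy to swap providers and to see which stage dominates the latency budget.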

AI Agent Logic & Workflow Orchestration

Modern AI voice agents often use multi-agent systems and agentic AI paradigms. In these designs, multiple specialized agents (e.g., one for conversation, another for data lookup) work together to deliver a seamless experience. Orchestration frameworks such as Microsoft's AutoGen, or custom Python workflows, coordinate these agents to produce proactive, context-aware responses. Agent-responsive design lets each agent adapt to changing user needs, while agent-to-agent communication enables complex, multi-step workflows. If your application requires both video and audio communication, consider integrating a Python video and audio calling SDK to streamline development and ensure robust performance.

Step-by-Step Guide to Build an AI Voice Agent

1. Define the Use Case & Requirements

Start by specifying the purpose of your AI voice agent. Is it for customer support, accessibility, workflow automation, or something else? Identify user needs, required integrations (APIs, databases), and conversation complexity. Clear requirements will guide your architecture and technology choices. For scenarios involving telephony or direct calling features, exploring a phone call API can help you quickly add reliable phone call capabilities to your agent.

2. Choose Your Tech Stack: No-Code vs. Custom Development

- No-Code AI Platforms: Solutions like Vapi AI and Bland AI allow rapid prototyping and deployment without deep coding, ideal for straightforward use cases.

- Custom Development: For flexibility and advanced workflows, use Python with libraries such as Vocode (STT/TTS orchestration), the OpenAI API (LLM logic and Whisper transcription), and ElevenLabs (premium TTS). Custom stacks enable agentic, multi-agent, and agent-to-agent communication scenarios. If you need to support both video and audio conferencing, integrating a Video Calling API can provide a seamless communication experience for your users.

3. Setting Up the Environment

To build an AI voice agent with Python, set up your environment and install dependencies:

```bash
pip install vocode elevenlabs openai requests
```

This command installs:

- Vocode, for orchestrating speech pipelines
- the ElevenLabs SDK, for TTS
- the OpenAI SDK, for LLM-powered logic and Whisper transcription
- Requests, for calling external APIs such as Airtable

If you want to quickly add video and audio calling features to your application, you can embed video calling SDK solutions for a faster and more reliable setup.

4. Capture and Transcribe Voice Input

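Use Vocode or Whisper (by OpenAI) to capture and transcribe user speech to text. The following example uses OpenAI's hosted Whisper model through the `openai` Python SDK (v1 or later):

```python
from openai import OpenAI

client = OpenAI()  # reads the OPENAI_API_KEY environment variable

def transcribe_audio(audio_file_path):
    """Transcribe an audio file (e.g., WAV or MP3) to text with Whisper."""
    with open(audio_file_path, "rb") as audio_file:
        transcript = client.audio.transcriptions.create(
            model="whisper-1",
            file=audio_file,
        )
    return transcript.text
```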

This function takes the path to an audio file and returns the transcribed text. For developers looking to enable seamless phone call functionality, integrating a phone call API can help you manage call flows and telephony integration efficiently.

5. Process Input with AI Agent Logic

Once you have the user's transcribed input, route it to an LLM (like OpenAI's GPT models) for processing. You can design complex workflows by chaining agents or integrating with orchestration frameworks like AutoGen:

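```python
from openai import OpenAI

client = OpenAI()  # reads the OPENAI_API_KEY environment variable

def get_ai_response(prompt):
    # Send the user's transcribed text to the chat model and return its reply
    response = client.chat.completions.create(
        model="gpt-4",
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content
```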
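`get_ai_response` sends only the latest utterance, so the model has no memory of earlier turns. This minimal sketch adds bounded conversation memory, assuming the same chat-message format (`role`/`content` dictionaries) used in the request above. The `ConversationMemory` class is hypothetical, not part of the OpenAI SDK.

```python
from collections import deque


class ConversationMemory:
    """Keep a system prompt plus the most recent turns in chat-message format."""

    def __init__(self, system_prompt: str, max_turns: int = 10):
        self.system = {"role": "system", "content": system_prompt}
        # One turn = one user message + one assistant message; the deque drops
        # the oldest messages automatically once it is full.
        self.history = deque(maxlen=max_turns * 2)

    def add_user(self, text: str) -> None:
        self.history.append({"role": "user", "content": text})

    def add_assistant(self, text: str) -> None:
        self.history.append({"role": "assistant", "content": text})

    def messages(self) -> list:
        # The system prompt always stays first, followed by the retained turns
        return [self.system, *self.history]


memory = ConversationMemory("You are a helpful phone support agent.", max_turns=1)
memory.add_user("Hi, I need help with my order.")
memory.add_assistant("Sure, what is your order number?")
memory.add_user("It's 1234.")
print([m["role"] for m in memory.messages()])  # ['system', 'assistant', 'user']
```

To use it:

1. Call `add_user()` with each transcript.
2. Pass `memory.messages()` as the `messages` argument of the chat completion call.
3. Record the model's reply with `add_assistant()`.

Capping the history keeps prompt size, and therefore cost and latency, bounded, at the price of forgetting the oldest turns.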

For advanced agentic workflows, you can design agents for specific tasks (e.g., booking, information retrieval) and compose them for a seamless experience.
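Composing task-specific agents usually means putting them behind a router. This minimal sketch uses simple keyword matching for intent detection, which is an assumption made for brevity; a production system would more likely ask an LLM to classify intent. The agent functions, the `ROUTES` table, and `route` are hypothetical and not part of AutoGen or any other library.

```python
from typing import Callable, Dict


# Each specialized "agent" maps user text to reply text. These are hypothetical
# stand-ins; in practice each could wrap its own LLM prompt or tools.
def booking_agent(text: str) -> str:
    return "Sure, what date would you like to book?"


def info_agent(text: str) -> str:
    return "Here is the information I found."


def fallback_agent(text: str) -> str:
    return "Sorry, could you rephrase that?"


# Keyword-based intent table (illustrative assumption only)
ROUTES: Dict[str, Callable[[str], str]] = {
    "book": booking_agent,
    "reserve": booking_agent,
    "what": info_agent,
    "when": info_agent,
}


def route(text: str) -> str:
    """Send the transcript to the first agent whose keyword appears in it."""
    words = [w.strip("?.!,") for w in text.lower().split()]
    for keyword, agent in ROUTES.items():
        if keyword in words:
            return agent(text)
    return fallback_agent(text)


print(route("I want to book a table"))  # Sure, what date would you like to book?
print(route("What are your hours?"))    # Here is the information I found.
print(route("Hmm"))                     # Sorry, could you rephrase that?
```

Keeping the router separate from the agents lets you replace the keyword table with an LLM classifier later without touching the agents themselves.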

6. Generate and Synthesize Voice Output

Convert the AI's text response back to speech using ElevenLabs for natural-sounding output:

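```python
import os

from elevenlabs import play
from elevenlabs.client import ElevenLabs

client = ElevenLabs(api_key=os.environ["ELEVENLABS_API_KEY"])
VOICE_ID = "YOUR_VOICE_ID"  # placeholder: pick a voice from your ElevenLabs account

def speak(text):
    # Synthesize the reply and play it (playback requires ffmpeg to be installed)
    audio = client.text_to_speech.convert(text=text, voice_id=VOICE_ID, model_id="eleven_multilingual_v2")
    play(audio)
```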

This snippet generates and plays voice output directly from your agent's text response.
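Synthesizing a long reply in a single request means the user hears nothing until the whole clip is ready. A common way to cut perceived latency is to split the reply into sentences and synthesize each one as soon as it is complete. The splitting can run on the finished reply or on a token stream from the LLM.

This minimal sketch assumes a simple regex sentence splitter, which will also split after abbreviations such as "Dr.". The helper names `sentence_chunks` and `stream_sentences` are hypothetical.

```python
import re
from typing import Iterable, Iterator, List

# Sentence boundary: ".", "!" or "?" followed by whitespace (a simplification)
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentence_chunks(text: str) -> List[str]:
    """Split a complete reply into sentences that can be synthesized one at a time."""
    return [s for s in _SENTENCE_END.split(text.strip()) if s]


def stream_sentences(tokens: Iterable[str]) -> Iterator[str]:
    """Yield complete sentences as soon as they appear in a stream of LLM tokens."""
    buffer = ""
    for token in tokens:
        buffer += token
        parts = _SENTENCE_END.split(buffer)
        # Every part except the last is a finished sentence
        for sentence in parts[:-1]:
            if sentence:
                yield sentence
        buffer = parts[-1]
    # Flush whatever is left when the stream ends
    if buffer.strip():
        yield buffer.strip()


print(sentence_chunks("Hi! How are you? Great."))
# ['Hi!', 'How are you?', 'Great.']
print(list(stream_sentences(["Hello there. ", "How are", " you? Fine."])))
# ['Hello there.', 'How are you?', 'Fine.']
```

With the `speak` function above, `for sentence in sentence_chunks(reply): speak(sentence)` starts playback after the first sentence instead of after the whole reply.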

7. Integrate with Applications/Workflows

Connect your AI voice agent to external services for real-world utility. For instance, you can integrate with Airtable to store user data or Make.com for automation. A customer support agent might fetch ticket statuses or update records:

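```python
import os

import requests

AIRTABLE_BASE_ID = "YOUR_BASE_ID"  # placeholder for your Airtable base ID

def fetch_ticket_status(ticket_id):
    # Airtable record endpoint: /v0/{baseId}/{tableName}/{recordId}; ticket_id is the record ID.
    # AIRTABLE_TOKEN is an assumed environment variable holding a personal access token.
    url = f"https://api.airtable.com/v0/{AIRTABLE_BASE_ID}/Tickets/{ticket_id}"
    headers = {"Authorization": f"Bearer {os.environ['AIRTABLE_TOKEN']}"}
    response = requests.get(url, headers=headers, timeout=10)
    response.raise_for_status()  # surface HTTP errors instead of silently returning "Unknown"
    return response.json().get("fields", {}).get("Status", "Unknown")
```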
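When the LLM decides which integration to call, the agent needs a safe way to map that choice onto Python code. With OpenAI-style function calling, for example, the model returns a function name plus JSON-encoded arguments.

This minimal sketch of a tool registry assumes that name-plus-JSON-arguments format. `lookup_ticket` is a hypothetical offline stand-in for `fetch_ticket_status`, and nothing here is part of the OpenAI SDK.

```python
import json
from typing import Any, Callable, Dict

TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the agent can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def lookup_ticket(ticket_id: str) -> str:
    # Hypothetical stand-in for fetch_ticket_status(); canned data, no network call
    fake_db = {"rec123": "Open", "rec456": "Resolved"}
    return fake_db.get(ticket_id, "Unknown")


def dispatch(name: str, arguments_json: str) -> str:
    """Run a registered tool given its name and JSON-encoded keyword arguments."""
    if name not in TOOLS:
        return f"Error: unknown tool '{name}'"
    try:
        kwargs = json.loads(arguments_json)
        return str(TOOLS[name](**kwargs))
    except (json.JSONDecodeError, TypeError) as exc:
        # Report bad arguments back to the agent instead of crashing the turn
        return f"Error: {exc}"


print(dispatch("lookup_ticket", '{"ticket_id": "rec123"}'))  # Open
print(dispatch("lookup_ticket", '{"id": "rec123"}'))         # an "Error: ..." message
print(dispatch("delete_everything", "{}"))                   # Error: unknown tool 'delete_everything'
```

Returning errors as text lets the agent respond sensibly, for example by asking the caller for a valid ticket number, rather than failing mid-call. Only registered functions can ever run.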

If your workflow requires integrating real-time voice communication, using a Voice API can help you add live audio features without extensive backend setup.

8. Testing & Iteration

Test your AI voice agent for low latency, natural conversation flow, and error handling. Simulate edge cases (e.g., noisy input, ambiguous queries) and iterate based on user feedback. Tools like Chainlit can help visualize flows and debug interactions.
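Edge cases are easiest to iterate on when they live in a repeatable test table. This minimal sketch assumes the text stage of the agent can be called directly with a transcript. `agent_reply` is a hypothetical stand-in with canned fallback behavior, and the 0.5-second budget is only an illustrative number.

```python
import time


# Hypothetical agent under test; replace it with your real transcript -> reply path
def agent_reply(transcript: str) -> str:
    if not transcript.strip():
        return "Sorry, I didn't catch that. Could you repeat it?"
    if len(transcript.split()) < 2:
        return f"Did you mean something about '{transcript}'? Please say a bit more."
    return f"Okay, handling: {transcript}"


# (transcript, text the reply must contain)
# Silence or heavy noise often yields an empty transcript; one word is ambiguous
EDGE_CASES = [
    ("", "didn't catch"),
    ("   ", "didn't catch"),
    ("tickets", "say a bit more"),
    ("check my ticket status", "handling"),
]

LATENCY_BUDGET_S = 0.5  # assumed per-turn budget for this text-only stage


def run_edge_cases():
    """Return a list of failures; an empty list means every case passed."""
    failures = []
    for transcript, expected_fragment in EDGE_CASES:
        start = time.perf_counter()
        reply = agent_reply(transcript)
        elapsed = time.perf_counter() - start
        if expected_fragment not in reply:
            failures.append((transcript, "unexpected reply", reply))
        if elapsed > LATENCY_BUDGET_S:
            failures.append((transcript, "too slow", elapsed))
    return failures


print(run_edge_cases())  # []
```

Each new failure observed in user feedback can become one more row in `EDGE_CASES`, so regressions are caught on the next iteration.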

9. Deploy and Monitor

Deploy your AI voice agent on scalable cloud platforms (AWS, GCP). Monitor for uptime, performance, and errors using observability tools. Consider horizontal scaling for high-concurrency scenarios and implement logging/alerting for production reliability.
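Per-stage latency and error logging is the raw material for that monitoring. This minimal sketch uses only the standard `logging` module and emits key-value log lines that log aggregators can parse. The `monitored` decorator and `fake_llm` are hypothetical names, not part of any observability product.

```python
import functools
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("voice_agent")


def monitored(stage: str):
    """Log each call's duration and any exception, tagged with a stage name."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return fn(*args, **kwargs)
            except Exception:
                # Log the traceback for alerting, then let the caller handle the error
                log.exception("stage=%s failed", stage)
                raise
            finally:
                elapsed_ms = (time.perf_counter() - start) * 1000
                log.info("stage=%s latency_ms=%.1f", stage, elapsed_ms)
        return wrapper
    return decorator


@monitored("llm")
def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for get_ai_response()
    return prompt[::-1]


fake_llm("hello")  # logs a line like: stage=llm latency_ms=0.0
```

Decorating the STT, LLM, and TTS functions the same way produces comparable per-stage numbers for dashboards and alert thresholds.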

Best Practices and Tips for Building Effective AI Voice Agents

- Design for Natural Conversation: Use context tracking and memory to handle multi-turn dialogues. Avoid robotic responses by leveraging expressive TTS and robust NLP.

- Error Handling: Gracefully manage misrecognitions, ambiguous input, and system failures. Provide fallback prompts and confirmations.

- Accessibility & Compliance: Ensure your agent is usable with assistive technologies and complies with privacy/security standards (e.g., GDPR, HIPAA for healthcare).
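Fallback prompts and confirmations can be driven by the recognizer's confidence. This minimal sketch assumes your STT provider returns a per-utterance confidence between 0 and 1, which not every provider does. The thresholds, retry limit, and action names are hypothetical values to tune against real calls.

```python
from dataclasses import dataclass
from typing import Tuple

CONFIRM_THRESHOLD = 0.85  # assumed: below this, read the request back for confirmation
REPROMPT_THRESHOLD = 0.5  # assumed: below this, ask the user to repeat
MAX_RETRIES = 2           # assumed: after this many failed attempts, escalate


@dataclass
class TurnState:
    retries: int = 0


def next_action(transcript: str, confidence: float, state: TurnState) -> Tuple[str, str]:
    """Return (action, prompt) for one recognized utterance."""
    if not transcript.strip() or confidence < REPROMPT_THRESHOLD:
        state.retries += 1
        if state.retries > MAX_RETRIES:
            return "handoff", "Let me connect you with someone who can help."
        return "reprompt", "Sorry, I didn't catch that. Could you say it again?"
    state.retries = 0  # a usable utterance resets the retry counter
    if confidence < CONFIRM_THRESHOLD:
        return "confirm", f"Just to check, did you say: {transcript}?"
    return "proceed", ""


state = TurnState()
print(next_action("", 0.0, state)[0])                  # reprompt
print(next_action("cancel my order", 0.7, state)[0])   # confirm
print(next_action("cancel my order", 0.95, state)[0])  # proceed
```

Capping retries before a handoff keeps a misrecognition from trapping the caller in a loop.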

Future Trends in AI Voice Agents

In 2025, expect real-time multimodal models that blend voice, vision, and touch for rich interactions. Industry adoption is accelerating, with agent-to-agent communication and workflow automation at the forefront. Open standards and frameworks will further democratize how to build an AI voice agent for any application.

Conclusion

The ability to build an AI voice agent empowers you to create intelligent, accessible, and interactive experiences. With the right tools and best practices, you can shape the future of conversational AI.


FAQ