Introduction to AI Video Calls

AI video calls are revolutionizing the way we connect, collaborate, and communicate across digital platforms. By integrating artificial intelligence into video conferencing solutions, developers are enabling smarter, more natural, and efficient conversations, regardless of physical distance. As remote work and global teams become the new norm, the demand for seamless, high-quality video calls has surged. AI-driven enhancements are now central to overcoming traditional barriers such as poor lighting, background noise, bandwidth constraints, and even the lack of genuine eye contact. Modern AI video calls are not just about transmitting audio and video; they are about creating an immersive, lifelike digital presence that fosters trust, engagement, and productivity for developers, teams, and end users alike.

How AI is Revolutionizing Video Calls

The integration of AI technologies into video conferencing platforms is driving a paradigm shift in how virtual meetings are conducted. Key advancements include deep learning, computer vision, natural language processing, and neural audio/video codecs. These technologies enable features such as real-time gaze correction, background noise suppression, and bandwidth optimization, making video calls more efficient and accessible worldwide.

AI-enhanced video conferencing delivers tangible benefits:

- Efficiency: AI automates mundane tasks like background blurring and lighting correction, letting participants focus on the conversation.

- Rapport and Trust: Features like AI eye contact and facial expression enhancement foster a sense of presence and reliability, even when participants are thousands of miles apart.

- Realism: GANs (Generative Adversarial Networks) and 3D transformation technologies create more natural, immersive interactions, narrowing the gap between virtual and in-person communication.

With AI, video calls are evolving from basic digital meetings into sophisticated, interactive experiences that drive deeper engagement and collaboration.

Core AI Features in Modern Video Calls

AI Eye Contact and Gaze Correction

One of the most impactful applications of AI in video calls is automatic eye contact correction. Computer vision models detect the user’s gaze and subtly adjust the video feed so it appears as if participants are looking directly at each other—regardless of camera placement. This simple tweak greatly enhances trust and improves engagement, making digital conversations feel more personal and authentic.
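
To get a feel for why camera placement breaks eye contact in the first place, the apparent gaze offset can be estimated with basic trigonometry. The sketch below is only illustrative: the function name and the example distances are assumptions, not values taken from any product.

```python
import math

def gaze_offset_degrees(camera_offset_cm: float, viewing_distance_cm: float) -> float:
    """Angle between looking at the camera and looking at a point on screen.

    camera_offset_cm: distance between the camera lens and the on-screen
        point the user is looking at (hypothetical example input).
    viewing_distance_cm: distance from the user's eyes to the screen.
    """
    if viewing_distance_cm <= 0:
        raise ValueError("viewing distance must be positive")
    return math.degrees(math.atan2(camera_offset_cm, viewing_distance_cm))

# Assumed setup: the other person's face is shown 10 cm below the webcam,
# and the user sits 60 cm away from the screen.
angle = gaze_offset_degrees(10.0, 60.0)
print(f"Apparent gaze offset: {angle:.1f} degrees")
```

A gaze correction model has to compensate for roughly this angle, which grows as the user sits closer to the screen or as the camera sits farther from the displayed face.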

AI Video Compression and Bandwidth Optimization

AI-based video compression leverages GANs and neural codecs to significantly reduce the amount of data required for high-quality video transmission. For example, NVIDIA Maxine uses deep learning models to transmit key face points and reconstruct high-fidelity video on the recipient’s end. The result is lower bandwidth consumption while maintaining sharp, clear visuals—even in low-connectivity environments.
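
A back-of-the-envelope calculation shows why sending keypoints instead of pixels saves so much bandwidth. Every number here is an illustrative assumption (68 keypoints as in a common facial landmark model, 2 bytes per coordinate, and a 1500 kbps baseline for a conventional call); none of them are NVIDIA Maxine specifications.

```python
def keypoint_stream_kbps(num_keypoints: int, bytes_per_coord: int, fps: int) -> float:
    """Raw bitrate of sending 2D keypoints every frame, in kilobits per second."""
    bytes_per_frame = num_keypoints * 2 * bytes_per_coord  # x and y per keypoint
    return bytes_per_frame * fps * 8 / 1000

# Illustrative assumptions, not measurements.
keypoints_kbps = keypoint_stream_kbps(num_keypoints=68, bytes_per_coord=2, fps=30)
conventional_kbps = 1500.0  # assumed bitrate for a conventional video call

print(f"Keypoints only: {keypoints_kbps:.2f} kbps")
print(f"Reduction vs. assumed baseline: {conventional_kbps / keypoints_kbps:.0f}x")
```

A real system would also need to send a reference image from time to time so the receiver has something to animate, so the practical saving is smaller than this raw ratio suggests.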

AI Audio Enhancement

AI-powered audio processing can eliminate background noise, simulate studio-quality microphones, and even modify speaker voices in real time. Deep learning models identify and suppress unwanted sounds, while voice font technology can clone or modify vocal characteristics. These enhancements ensure crystal clear communication, even in noisy or suboptimal acoustic environments.
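
Production noise suppression relies on trained models, but the basic idea of attenuating audio that does not look like speech can be sketched with a classical noise gate. This is a simplified stand-in rather than a deep learning approach, and the frame size, threshold, and attenuation values are assumptions chosen for the example.

```python
import math

def rms(frame):
    """Root-mean-square level of one frame of samples."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))

def noise_gate(samples, frame_size=160, threshold=0.05, attenuation=0.1):
    """Attenuate frames whose RMS level falls below a threshold.

    A classical noise gate, used here as a simple stand-in for a learned
    noise-suppression model. All parameter values are assumptions.
    """
    out = []
    for start in range(0, len(samples), frame_size):
        frame = samples[start:start + frame_size]
        gain = 1.0 if rms(frame) >= threshold else attenuation
        out.extend(s * gain for s in frame)
    return out

# Synthetic signal at a 16 kHz sample rate: quiet hiss, then a louder 440 Hz tone.
hiss = [0.01 * math.sin(2 * math.pi * 3000 * n / 16000) for n in range(160)]
tone = [0.5 * math.sin(2 * math.pi * 440 * n / 16000) for n in range(160)]
cleaned = noise_gate(hiss + tone)
```

The quiet frame is scaled down while the louder frame passes through unchanged. A learned model replaces the fixed threshold with a per-frequency decision about what is speech and what is noise.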

Advanced AI Capabilities

AI-Powered Avatars and Real-Time Effects

AI-driven avatars and real-time visual effects are rapidly gaining traction in video conferencing. Users can represent themselves with 3D avatars that mimic facial expressions and movements, enabling privacy and creative self-expression. AR overlays and digital makeovers add another layer of engagement, allowing for professional or playful customization on the fly.
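
As a rough sketch of how an avatar can mimic an expression, the snippet below turns four mouth landmarks into a single "jaw open" weight between 0 and 1. The function name, the choice of landmarks, and the max_ratio tuning value are all hypothetical; real avatar systems drive many such weights from a trained model.

```python
import math

def jaw_open_weight(upper_lip, lower_lip, left_corner, right_corner, max_ratio=0.6):
    """Map mouth openness to a 0..1 avatar blendshape weight.

    Each argument is an (x, y) landmark in pixels. Dividing the lip gap by
    the mouth width makes the result independent of how close the user is
    to the camera. max_ratio (the ratio treated as fully open) is an
    assumed tuning value.
    """
    gap = math.dist(upper_lip, lower_lip)
    width = math.dist(left_corner, right_corner)
    if width == 0:
        return 0.0
    return max(0.0, min(1.0, (gap / width) / max_ratio))

# Hypothetical landmark positions for a nearly closed and an open mouth.
closed = jaw_open_weight((100, 200), (100, 202), (70, 201), (130, 201))
opened = jaw_open_weight((100, 200), (100, 230), (70, 201), (130, 201))
print(f"closed={closed:.2f}, open={opened:.2f}")
```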

AI-Driven Lighting and Visual Enhancements

Lighting conditions can make or break the quality of a video call. AI algorithms can analyze and relight scenes, correct color balance, and optimize brightness in real time. This not only improves visual clarity but also ensures that participants appear professional and well-lit regardless of their environment.
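
The simplest form of automatic brightness correction is a gamma curve chosen from the image statistics. The sketch below works on a flat list of normalized luminance values and is a classical stand-in for learned relighting; the function name and the target_mean default are assumptions.

```python
import math

def auto_gamma(pixels, target_mean=0.5):
    """Brighten or darken normalized luminance values (0..1) with a gamma curve.

    Gamma is chosen so that a pixel at the current mean level maps to
    target_mean:  mean ** gamma == target_mean, so
    gamma = log(target_mean) / log(mean).
    """
    mean = sum(pixels) / len(pixels)
    if mean <= 0.0 or mean >= 1.0:
        return list(pixels)  # fully black or fully white: leave unchanged
    gamma = math.log(target_mean) / math.log(mean)
    return [p ** gamma for p in pixels]

# Hypothetical underexposed pixels.
dark = [0.1, 0.2, 0.3]
corrected = auto_gamma(dark)
print([round(p, 3) for p in corrected])
```

Because the curve is applied to every pixel equally, it cannot fix uneven lighting such as a bright window behind the speaker, which is where scene-aware AI relighting comes in.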

AI for 3D Video Conferencing

Advanced AI can convert 2D video feeds into 3D representations, enabling immersive conferencing experiences. With depth estimation and pose detection, participants can interact in virtual 3D spaces, enhancing presence and collaboration. These technologies are paving the way for fully interactive AR and VR meetings.
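
Once a depth value has been estimated for a pixel, lifting it into 3D is a matter of applying the standard pinhole camera model. The sketch below shows that step only; the camera intrinsics (fx, fy, cx, cy) are made-up example values for a 640x480 image, and the depth would come from a depth estimation model in practice.

```python
def backproject(u, v, depth, fx, fy, cx, cy):
    """Pinhole camera model: pixel (u, v) with depth Z -> 3D point (X, Y, Z).

    fx, fy are focal lengths in pixels; cx, cy is the principal point.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)

# Assumed intrinsics for a 640x480 camera and an assumed depth of 2.0 meters.
point = backproject(u=420, v=240, depth=2.0, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(point)
```

Applying this to every pixel of a depth map yields a point cloud of the participant that can be placed in a shared virtual space.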

Security and Privacy in AI Video Calls

AI video calls require robust privacy and security measures. End-to-end encryption, secure recording, and compliance with data protection regulations help keep sensitive information protected while AI-driven features process audio and video.

Implementing AI Video Calls: Tools and Platforms

Popular AI Video Call SDKs and APIs

For developers looking to build or enhance AI video call functionality, a variety of SDKs and APIs are available:

- NVIDIA Maxine: Offers SDKs for AI-powered video, audio, and augmented reality effects, including gaze correction and noise suppression.

- AICONTACT: Specializes in AI-driven eye contact and gaze correction APIs.

- Agora, Twilio, Daily.co: Provide programmable video call SDKs with AI-powered features such as noise cancellation and video quality optimization.

These platforms allow teams to integrate AI features into their applications, accelerating development and ensuring high performance.

Integration with Existing Video Platforms

Many mainstream video conferencing tools—such as Zoom, Microsoft Teams, and Google Meet—have started incorporating AI-driven features. These include real-time background blur, voice enhancement, and even live translation. Developers can extend these platforms using APIs, bots, and plugins to add custom AI functionalities.

Example Implementation: Gaze Correction

Below is a sample Python starting point for AI-based gaze correction using OpenCV and dlib. The code detects facial landmarks and extracts the eye regions; the transformation that adjusts them to simulate direct eye contact is left out:

    import cv2
    import dlib
    import numpy as np

    # Load the models once rather than on every frame. The landmark model
    # file must be downloaded separately and placed next to the script.
    detector = dlib.get_frontal_face_detector()
    predictor = dlib.shape_predictor('shape_predictor_68_face_landmarks.dat')

    def correct_gaze(frame):
        """Locate the eye regions in a BGR frame; the warp itself is omitted."""
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        faces = detector(gray)
        for face in faces:
            landmarks = predictor(gray, face)
            # Eye regions in the 68-point model: landmarks 36-41 and 42-47.
            # "left" and "right" here mean as seen in the image.
            left_eye_pts = np.array([(landmarks.part(n).x, landmarks.part(n).y) for n in range(36, 42)])
            right_eye_pts = np.array([(landmarks.part(n).x, landmarks.part(n).y) for n in range(42, 48)])
            # Apply transformation for gaze correction (details omitted)
        return frame

This snippet demonstrates the foundation for gaze correction and can be expanded using AI models for more sophisticated adjustments.

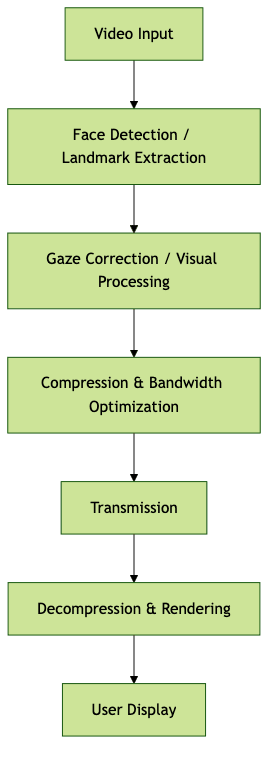

Using Mermaid Diagrams to Visualize AI Video Call Architecture

Below is a Mermaid diagram illustrating the typical AI video call pipeline:

Challenges and Considerations

Despite the rapid advancement of AI video calls, several challenges remain. Technically, real-time processing demands substantial computational resources, especially for high-resolution video and complex effects. Network latency and hardware limitations can impact performance and user experience.
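
The real-time constraint is easy to quantify: at a given frame rate, every per-frame processing stage has to fit inside one frame interval. The sketch below checks a pipeline against that budget. The stage names and timings are hypothetical and would need to be measured on real hardware.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame at the given frame rate."""
    return 1000.0 / fps

def check_pipeline(stage_ms: dict, fps: float):
    """Return (total latency in ms, True if the stages fit in one frame interval)."""
    total = sum(stage_ms.values())
    return total, total <= frame_budget_ms(fps)

# Hypothetical per-frame stage timings in milliseconds.
stages = {
    "face_detection": 8.0,
    "gaze_correction": 12.0,
    "relighting": 6.0,
    "encode": 5.0,
}

for fps in (30, 60):
    total, ok = check_pipeline(stages, fps)
    print(f"{fps} fps: {total:.1f} ms used of {frame_budget_ms(fps):.1f} ms budget, fits={ok}")
```

With these assumed numbers the pipeline fits at 30 fps but not at 60 fps, which is the kind of trade-off that forces teams to drop effects, lower resolution, or move work to a GPU.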

On the ethical front, AI-generated content—such as deepfakes or synthetic avatars—raises concerns about authenticity and trust. Privacy issues are paramount, particularly in recording, storing, or analyzing video and audio data. Ensuring transparency, user consent, and compliance with data laws is critical for responsible deployment.

User acceptance is another hurdle. Some participants may feel uncomfortable with AI-altered visuals or voices. Thoughtful implementation, clear communication, and opt-in features can help drive adoption and trust.
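
One way to make opt-in concrete is to model each AI alteration as a per-user switch that defaults to off, and to derive a disclosure message from whatever is enabled. This is a minimal sketch; the class, field names, and label wording are hypothetical and not tied to any platform.

```python
from dataclasses import dataclass, asdict

@dataclass
class AIFeatureConsent:
    """Per-user switches for AI alterations; everything is off until enabled."""
    gaze_correction: bool = False
    voice_modification: bool = False
    avatar: bool = False
    relighting: bool = False

def enabled_features(consent: AIFeatureConsent) -> list:
    """Names of the features the user has explicitly turned on."""
    return [name for name, on in asdict(consent).items() if on]

def disclosure_label(consent: AIFeatureConsent) -> str:
    """Text to show other participants so alterations are transparent."""
    features = enabled_features(consent)
    if not features:
        return "No AI alterations active"
    return "AI alterations active: " + ", ".join(features)

print(disclosure_label(AIFeatureConsent(gaze_correction=True, relighting=True)))
```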

Future Trends in AI Video Calls

Looking ahead, AI video calls are set to become even more immersive and intelligent. Expect rapid growth in 3D video conferencing, with realistic avatars and spatial audio creating lifelike virtual environments. AR overlays will enable interactive collaboration—think shared whiteboards or 3D models floating in space.

Real-time multilingual translation and emotion recognition will break down language and cultural barriers, making global collaboration seamless. AI will increasingly automate meeting logistics—scheduling, note-taking, action tracking—freeing up participants to focus on meaningful interaction. As these trends accelerate, AI’s role in remote work and global communication will only deepen.

Conclusion

AI video calls are fundamentally changing how we connect and collaborate. Through innovations in eye contact correction, compression, avatars, and security, developers are building video conferencing solutions that are more engaging, efficient, and secure. As adoption grows, embracing best practices in privacy, transparency, and user experience will be key to unlocking the full potential of AI-driven virtual communication.
