Why Human-Like Synthetic Audio Matters in 2025

In 2025, businesses are witnessing an unprecedented rise in demand for lifelike digital interactions. Whether it’s customer support, content creation, or virtual assistants, the way brands sound matters more than ever. Enter wavenet—a breakthrough in voice technology that empowers organizations to deliver truly human-like synthetic audio. For business leaders, product managers, and entrepreneurs, embracing wavenet isn’t just about keeping up with trends; it’s a strategic move to differentiate your digital products, boost customer engagement, and unlock new revenue streams.

The Problem with Old-School Speech Synthesis

Traditional approaches to text-to-speech (TTS) technology have long struggled to deliver natural, engaging voices. Concatenative TTS systems stitch together pre-recorded speech fragments, resulting in audio that’s mechanical, rigid, and costly to update. Parametric TTS and vocoder-based methods offer more control over speech characteristics, but their output often lacks the richness and nuance of real human speech. The result: digital voices that alienate users, limit brand expression, and hamper product adoption.

| Technology | Flexibility | Cost | Naturalness |
|---|---|---|---|
| Concatenative TTS | Low | High | Low |
| Parametric TTS | Moderate | Moderate | Moderate |
| Wavenet-based TTS | High | Low (at scale) | High |

These limitations highlight why forward-thinking companies are now prioritizing wavenet-powered solutions for their next generation of digital audio experiences.

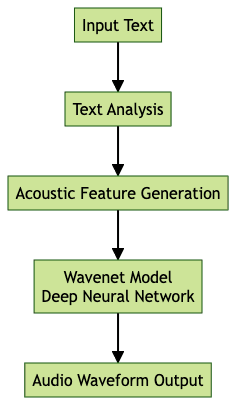

Inside Wavenet: How It Works and Why It’s Different

Wavenet represents a paradigm shift in synthetic speech generation. Unlike earlier technologies, wavenet uses a generative neural network to produce raw audio waveforms directly, predicting one sample at a time, with each sample conditioned on the samples that came before it. This approach enables a level of expressiveness, inflection, and realism previously unattainable by machines. At its core, wavenet relies on deep learning, specifically a stack of dilated causal convolutions, trained on large datasets of human speech to capture complex audio patterns.
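To make sample-by-sample generation concrete, here is a minimal Python sketch of an autoregressive loop: each new sample is computed from the samples already produced, using only a fixed window of recent history. The predictor is a hypothetical stand-in (a fixed two-tap linear filter), not a trained network; a real wavenet model predicts a probability distribution over the next sample value and draws from it. The function names are illustrative and do not come from any library.

```python
from typing import Callable, List, Sequence


def generate_autoregressive(
    predict_next: Callable[[Sequence[float]], float],
    seed: Sequence[float],
    num_samples: int,
    receptive_field: int,
) -> List[float]:
    """Generate `num_samples` new samples, one at a time.

    Each prediction sees only the most recent `receptive_field` samples,
    mirroring the fixed window of history a WaveNet-style network uses.
    """
    if receptive_field < 1:
        raise ValueError("receptive_field must be at least 1")
    audio = list(seed)
    for _ in range(num_samples):
        context = audio[-receptive_field:]  # most recent history only
        audio.append(predict_next(context))  # new sample becomes future context
    return audio[len(seed):]


def toy_predictor(context: Sequence[float]) -> float:
    """Hypothetical stand-in for a trained network: a fixed 2-tap linear filter."""
    if len(context) < 2:
        return 0.0
    return 1.8 * context[-1] - 0.9 * context[-2]


samples = generate_autoregressive(toy_predictor, seed=[0.0, 1.0], num_samples=5, receptive_field=2)
print([round(s, 3) for s in samples])
```

The loop is inherently sequential: sample t cannot be computed until sample t - 1 exists, which is the root of the real-time performance challenge discussed later.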

Here’s how wavenet stands apart:

- Generative Power: Models audio directly, producing fluid, human-like voices.

- Deep Learning Backbone: Learns from huge, diverse voice datasets to capture subtle speech features.

- Dilated Convolutions: By doubling the spacing between the samples each layer looks at, the network covers long stretches of preceding audio with only a few layers, enabling smoothness and continuity.
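The dilated convolutions described above can be sketched in plain Python. This is a minimal, unoptimized sketch rather than a production implementation: it applies a single causal convolution with a given dilation and computes the receptive field of a stack of such layers. It assumes a kernel size of 2 and the doubling dilation schedule (1, 2, 4, ..., 512) described in the original WaveNet paper; the function names are hypothetical.

```python
from typing import List, Sequence


def dilated_causal_conv(x: Sequence[float], weights: Sequence[float], dilation: int) -> List[float]:
    """Causal 1-D convolution with dilation.

    output[t] = sum_k weights[k] * x[t - k * dilation], so each output depends
    only on the current and earlier inputs. Indices before the start count as zero.
    """
    out = []
    for t in range(len(x)):
        acc = 0.0
        for k, w in enumerate(weights):
            idx = t - k * dilation
            if idx >= 0:
                acc += w * x[idx]
        out.append(acc)
    return out


def receptive_field(kernel_size: int, dilations: Sequence[int]) -> int:
    """Number of input samples (current plus past) that can influence one output of the stack."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


dilations = [2 ** i for i in range(10)]  # 1, 2, 4, ..., 512
print(receptive_field(kernel_size=2, dilations=dilations))  # 1024
```

Ten layers that each look at only two inputs reach 1,024 samples of context, because the receptive field grows with the sum of the dilations, which doubles at every layer.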

For those interested in the technical underpinnings, the AI voice Agent core components overview provides an in-depth look at the essential building blocks that power advanced synthetic audio systems.

The result? A voice that doesn’t just read words but delivers them with natural pauses, emotion, and clarity—transforming digital interactions into memorable experiences.

Tangible Business Benefits: Where Wavenet Is a Game-Changer

Investing in wavenet-based audio unlocks new business value across multiple dimensions:

- Enhanced Customer Experience: Customers now expect digital voices to sound trustworthy, relatable, and engaging. Wavenet delivers on these expectations, deepening brand connections and reducing user frustration.

- Multi-Language & Multi-Speaker Flexibility: Quickly deploy voices in numerous languages and styles, scaling your reach and personalizing every interaction.

- Faster Iteration: Unlike older TTS systems, wavenet allows rapid prototyping, A/B testing, and deployment, speeding up innovation cycles.

- Diverse Use Cases: From virtual assistants and accessibility tools to branded voice experiences and content automation, the applications are vast and growing. For those looking to get started quickly, the Voice Agent Quick Start Guide offers step-by-step instructions to launch your own AI-powered voice agent.
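As an illustration of multi-language voices combined with A/B testing, the sketch below builds a request body for Google Cloud Text-to-Speech's v1 `text:synthesize` REST method, which offers WaveNet voices, and deterministically assigns each user to one of two voice variants. The voice names, the experiment name, and the 50/50 split are assumptions chosen for the example; confirm voice names against the provider's current voice list. The code only builds the JSON body; sending it would require an authenticated POST to `https://texttospeech.googleapis.com/v1/text:synthesize`.

```python
import hashlib
import json
from typing import Dict

# Hypothetical catalogue: names follow Google Cloud TTS's WaveNet voice naming
# pattern; confirm them against the provider's current voice list.
VOICES: Dict[str, Dict[str, str]] = {
    "en-US": {"A": "en-US-Wavenet-D", "B": "en-US-Wavenet-F"},
    "de-DE": {"A": "de-DE-Wavenet-B", "B": "de-DE-Wavenet-A"},
}


def ab_variant(user_id: str, experiment: str, percent_a: int = 50) -> str:
    """Deterministically assign a user to variant "A" or "B".

    The same (experiment, user_id) pair always hashes to the same bucket,
    so a user hears a consistent voice across sessions.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # 0..99
    return "A" if bucket < percent_a else "B"


def build_synthesize_request(text: str, language_code: str, user_id: str,
                             experiment: str = "voice-style-test") -> dict:
    """Build a JSON body for the v1 text:synthesize REST method (not sent here)."""
    variant = ab_variant(user_id, experiment)
    return {
        "input": {"text": text},
        "voice": {"languageCode": language_code, "name": VOICES[language_code][variant]},
        "audioConfig": {"audioEncoding": "MP3"},
    }


body = build_synthesize_request("Your order has shipped.", "de-DE", user_id="user-42")
print(json.dumps(body, indent=2))
```

Hashing the experiment name together with the user ID keeps assignments stable without storing them, so engagement metrics for each voice variant stay cleanly separated.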

For businesses, the adoption of wavenet is not just a technical upgrade—it’s a strategic lever for customer loyalty, market differentiation, and operational agility.

Real-World Applications and ROI

Wavenet’s impact is already visible across industries:

- Google Assistant: Delivers lifelike responses, increasing user satisfaction and engagement.

- Google Maps: Provides more natural navigation prompts, improving trust and usability.

- Accessibility Solutions: Empowers visually impaired users with voices that are pleasant and understandable, fostering inclusivity.

To further enhance these experiences, integrating TTS plugins such as the Google TTS Plugin for voice agent, ElevenLabs TTS Plugin for voice agent, and OpenAI TTS Plugin for voice agent allows businesses to tailor voice outputs to specific needs and use cases.

The measurable outcomes are compelling:

- Higher Engagement: Users interact more frequently and for longer durations with lifelike voices.

- Improved Satisfaction: Natural-sounding audio reduces cognitive load and frustration.

- Greater Inclusivity: Expands access to digital services for a broader audience.

| ROI Metric | Wavenet Impact |
|---|---|
| User Session Duration | +20% (average uplift) |
| Customer Satisfaction Score | +15 points |
| Time to Market for Voice Apps | -40% (faster deployment) |
| Accessibility Adoption Rate | 2x increase |
| Brand Recall | +25% improvement |

These metrics underscore the business case for investing in wavenet-powered experiences—delivering returns that extend beyond the bottom line to brand equity and customer advocacy.

Key Challenges: What’s Hard About Building Wavenet-Quality Audio

Despite its promise, building wavenet-quality audio is a complex endeavor. The primary hurdles include:

- Data Requirements: Training high-fidelity models demands massive, high-quality voice datasets.

- Computational Load: Real-time audio generation is resource-intensive, requiring advanced hardware and smart optimization.

- Pipeline Orchestration: Seamlessly integrating wavenet voices into modern apps, while managing latency and reliability, can be daunting. Understanding the Realtime pipeline in AI voice Agents is crucial for delivering low-latency, high-quality audio at scale.

- Security & Privacy: Safeguarding sensitive user data and ensuring compliant voice modeling are non-negotiable.
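The computational-load point can be quantified with simple arithmetic. A model that generates audio one sample at a time must finish each sample within 1 / sample_rate seconds to keep pace with playback; at 16 kHz that is 62.5 microseconds per sample, and two seconds of speech require 32,000 sequential model evaluations. The sketch below computes this per-sample budget and the real-time factor (RTF), the ratio of synthesis time to audio duration. The function names are hypothetical and the timings in the example are assumed, not measured.

```python
def per_sample_budget_us(sample_rate_hz: int) -> float:
    """Microseconds available per sample if one-at-a-time generation must keep up with playback."""
    if sample_rate_hz <= 0:
        raise ValueError("sample_rate_hz must be positive")
    return 1_000_000 / sample_rate_hz


def sequential_steps(audio_seconds: float, sample_rate_hz: int) -> int:
    """Sequential model evaluations needed to generate audio_seconds of audio sample by sample."""
    return round(audio_seconds * sample_rate_hz)


def real_time_factor(synthesis_seconds: float, audio_seconds: float) -> float:
    """Synthesis time divided by audio duration; below 1.0 means faster than real time."""
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return synthesis_seconds / audio_seconds


print(per_sample_budget_us(16_000))   # 62.5
print(sequential_steps(2.0, 16_000))  # 32000
# Hypothetical measurement: 3.0 s of compute to produce 2.0 s of speech.
print(real_time_factor(3.0, 2.0))     # 1.5 (too slow for live playback)
```

An RTF below 1.0 means synthesis outpaces playback; for live conversations, the delay before the first audio is ready matters as much as the overall ratio.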

For organizations, overcoming these challenges means not just technical expertise but also robust infrastructure and end-to-end orchestration.
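One common orchestration technique for reducing perceived latency is to stream synthesized audio in short, fixed-size frames instead of waiting for a whole utterance, so playback can begin as soon as the first frame is ready. The following is a minimal sketch, assuming raw 16-bit mono PCM at 16 kHz and 20 ms frames (a common choice in real-time voice pipelines, not a requirement of any particular framework); `pcm_frames` is a hypothetical helper.

```python
from typing import Iterator


def pcm_frames(pcm: bytes, sample_rate_hz: int = 16_000, frame_ms: int = 20,
               sample_width_bytes: int = 2, channels: int = 1) -> Iterator[bytes]:
    """Yield fixed-duration frames of raw PCM audio for streaming playback.

    The final partial frame is zero-padded (silence for signed 16-bit PCM),
    so the consumer always receives frames of identical size.
    """
    samples_per_frame = sample_rate_hz * frame_ms // 1000
    frame_bytes = samples_per_frame * sample_width_bytes * channels
    if frame_bytes <= 0:
        raise ValueError("frame size must be positive")
    for start in range(0, len(pcm), frame_bytes):
        frame = pcm[start:start + frame_bytes]
        if len(frame) < frame_bytes:
            frame += b"\x00" * (frame_bytes - len(frame))
        yield frame


# Hypothetical usage: forward each frame as soon as it exists instead of
# waiting for the full utterance to be synthesized.
one_second_of_silence = b"\x00\x00" * 16_000
frames = list(pcm_frames(one_second_of_silence))
print(len(frames), len(frames[0]))  # 50 640
```

At these settings each frame holds 320 samples, or 640 bytes, so a listener can start hearing audio after roughly 20 ms of synthesized speech rather than after the full response.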

The Builder’s Blueprint: Bringing Wavenet-Quality Audio to Your Product

To harness wavenet’s full potential, product innovators must approach development strategically. Here’s a blueprint for success:

The Core Components You’ll Need

- Data Pipelines: Continuous ingestion and curation of diverse, high-quality voice data.

- Model Training & Tuning: Leveraging state-of-the-art AI frameworks to train, validate, and optimize wavenet models.

- Deployment Infrastructure: Scalable, resilient systems to serve audio on demand, globally and securely. For a seamless rollout, consult the AI voice Agent deployment documentation for best practices and deployment strategies.

- Seamless Integration: Embedding synthetic voices deeply within your product experiences, from chatbots to content platforms.
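For the data-pipeline component, a first curation pass often rejects clips that do not match the training format. The sketch below uses Python's standard `wave` module to accept only mono, 16-bit WAV files at an expected sample rate and within a duration range. The 24 kHz rate, the 1 to 20 second bounds, and the names `check_clip` and `curate` are hypothetical; a real pipeline would need further quality checks beyond file format.

```python
import wave
from dataclasses import dataclass
from pathlib import Path
from typing import List


@dataclass
class ClipCheck:
    path: Path
    ok: bool
    reason: str


def check_clip(path: Path, expected_rate_hz: int = 24_000,
               min_seconds: float = 1.0, max_seconds: float = 20.0) -> ClipCheck:
    """Accept only mono, 16-bit WAV clips at the expected rate and within a duration range."""
    try:
        with wave.open(str(path), "rb") as wav:
            channels = wav.getnchannels()
            width_bytes = wav.getsampwidth()
            rate_hz = wav.getframerate()
            seconds = wav.getnframes() / rate_hz
    except (wave.Error, EOFError, OSError, ZeroDivisionError) as exc:
        return ClipCheck(path, False, f"unreadable: {exc}")
    if channels != 1:
        return ClipCheck(path, False, f"expected mono, got {channels} channels")
    if width_bytes != 2:
        return ClipCheck(path, False, f"expected 16-bit, got {8 * width_bytes}-bit")
    if rate_hz != expected_rate_hz:
        return ClipCheck(path, False, f"expected {expected_rate_hz} Hz, got {rate_hz} Hz")
    if not min_seconds <= seconds <= max_seconds:
        return ClipCheck(path, False, f"{seconds:.2f} s is outside [{min_seconds}, {max_seconds}] s")
    return ClipCheck(path, True, "ok")


def curate(directory: Path) -> List[Path]:
    """Return the WAV files in `directory` that pass every check, in sorted order."""
    return [p for p in sorted(directory.glob("*.wav")) if check_clip(p).ok]
```

Returning a reason alongside the verdict makes it easy to log why clips were dropped, which helps when tuning the thresholds.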

Additionally, robust analytics and monitoring are essential. Leveraging AI voice Agent Session Analytics and AI voice Agent tracing and observability lets you track performance and user engagement and troubleshoot issues effectively.

The Critical Challenge: Real-Time Orchestration

Real-world applications demand more than just great audio—they require flawless, real-time experiences. Managing latency, scaling to millions of users, and delivering consistent quality are formidable challenges. User expectations are sky-high, and any lag or glitch can undermine trust and brand value.
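To keep latency visible, teams commonly track tail percentiles rather than averages, since the occasional slow turn is what users notice. The sketch below summarizes per-turn latency samples (for example, time to first audio) with Python's `statistics.quantiles` and reports the share of turns that exceed a latency budget. The metric, the 800 ms budget, and the sample values are assumptions for illustration; in practice an analytics or tracing tool would supply the measurements.

```python
import statistics
from typing import Dict, Sequence


def latency_summary(samples_ms: Sequence[float]) -> Dict[str, float]:
    """Summarize per-turn latencies in milliseconds (e.g. time to first audio)."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two latency samples")
    # 99 cut points; index 94 is the 95th percentile, index 98 the 99th.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {
        "p50": statistics.median(samples_ms),
        "p95": cuts[94],
        "p99": cuts[98],
        "max": max(samples_ms),
    }


def budget_breach_rate(samples_ms: Sequence[float], budget_ms: float) -> float:
    """Fraction of turns whose latency exceeded the budget."""
    if not samples_ms:
        raise ValueError("no latency samples")
    return sum(1 for s in samples_ms if s > budget_ms) / len(samples_ms)


# Hypothetical measurements from one monitoring window.
turn_latencies_ms = [420, 380, 510, 450, 1900, 400, 470, 390, 430, 2600]
print(latency_summary(turn_latencies_ms))
print(budget_breach_rate(turn_latencies_ms, budget_ms=800))  # 0.2
```

In this example the median looks healthy while one turn in five blows the budget, which is exactly the kind of glitch the average would hide.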

For teams looking to capture and analyze interactions, AI voice Agent recording provides a reliable way to archive and review voice sessions, supporting compliance and quality assurance.

The Solution: The VideoSDK Agents Framework

This is where the VideoSDK Agents Framework becomes a game-changer. Designed specifically to abstract the complexity of real-time audio orchestration, it empowers your team to focus on creating value, not wrangling infrastructure.

Key advantages include:

- Real-Time Orchestration: Route, process, and deliver wavenet-quality audio with sub-second latency.

- Scalability: Grow from pilot to planet-scale deployments without reengineering your stack.

- Developer-First Tooling: Streamlined APIs, monitoring, and workflows accelerate build cycles and reduce operational headaches.

By leveraging VideoSDK, your roadmap to human-like audio becomes faster, more predictable, and inherently scalable. The framework handles the heavy lifting, so your team can innovate—confident that every customer touchpoint showcases the best your brand has to offer.

Conclusion: The Future of Voice and Why It’s Within Reach

The next frontier of digital experience is voice—natural, expressive, and universally accessible. For business leaders and product innovators, the opportunity is now. With wavenet and the VideoSDK Agents Framework, building world-class audio is no longer a dream but a strategic reality—one that sets your product apart and shapes the future of digital engagement.


FAQ