Introduction to OpenAI WebRTC

What is OpenAI WebRTC?

OpenAI WebRTC refers to the integration of OpenAI's AI models with WebRTC (Web Real-Time Communication), the browser standard for low-latency audio, video, and data streaming. This combination enables developers to build real-time applications that leverage AI capabilities, such as voice assistants, interactive voice response (IVR) systems, and real-time language translation services. Think of it as a bridge connecting the world of AI to the immediacy of real-time communication, creating richer and more interactive user experiences.

Why use OpenAI WebRTC?

Using OpenAI WebRTC unlocks a range of possibilities for developers. It allows you to create applications that respond instantly to user input, providing a seamless and engaging experience. By leveraging OpenAI's models, you can add intelligent features like natural language understanding, speech synthesis, and sentiment analysis to your real-time communication applications. This integration opens doors to creating truly innovative and interactive experiences that were previously difficult to achieve.

Key benefits of OpenAI WebRTC

The key benefits of using OpenAI WebRTC include:

- Low Latency: WebRTC's real-time capabilities ensure minimal delay in communication, crucial for interactive applications.

- AI-Powered Intelligence: Integrate OpenAI's advanced models for natural language understanding, speech synthesis, and more.

- Enhanced User Experience: Create engaging and interactive experiences with real-time AI processing.

- Scalability: With supporting infrastructure such as media servers, WebRTC applications can scale to a large number of concurrent users.

- Versatility: Suitable for building various applications, from voice assistants to real-time translation services.

Setting up OpenAI WebRTC

Prerequisites and Installation

Before diving into OpenAI WebRTC, you'll need to ensure you have the following prerequisites:

- Node.js and npm: Required for running JavaScript-based WebRTC applications.

- WebRTC Library: A WebRTC library such as `simple-peer` or `peerjs` for handling the WebRTC connection.

- OpenAI Account: Access to the OpenAI API and an API key.

Installation typically involves using npm to install the necessary packages. For example:

```bash
npm install simple-peer
npm install openai
```

API Key and Authentication

To access OpenAI's models, you'll need an API key. You can obtain an API key from the OpenAI website after creating an account. Ensure you store your API key securely and avoid exposing it in client-side code. Use environment variables or a secure configuration file to manage your API key.

```javascript
// Example using Node.js and dotenv
require('dotenv').config();
const OpenAI = require('openai');

const openai = new OpenAI({
  apiKey: process.env.OPENAI_API_KEY, // This is also the default, can be omitted
});

async function main() {
  const completion = await openai.chat.completions.create({
    messages: [
      { role: "system", content: "You are a helpful assistant." },
      { role: "user", content: "Hello!" },
    ],
    model: "gpt-3.5-turbo",
  });

  console.log(completion.choices[0].message);
}

main();
```

Choosing the right OpenAI model

OpenAI offers a variety of models suitable for different tasks. For voice-based applications, consider models like `gpt-3.5-turbo` or `gpt-4` for natural language understanding and models specifically designed for text-to-speech and speech-to-text. The choice of model will depend on the complexity of your application and the desired level of accuracy. Experiment with different models to find the best fit for your needs.

```javascript
// Basic JavaScript setup for OpenAI WebRTC connection
// This is a simplified example and would need to be integrated with a WebRTC library.
// Run it on the server (Node.js 18+ provides fetch) so the API key never reaches the browser.

const apiKey = process.env.OPENAI_API_KEY; // Read the key from the environment instead of hardcoding it
const model = 'gpt-3.5-turbo'; // Choose your desired OpenAI model

// Function to send text to OpenAI and receive a response
async function getOpenAIResponse(text) {
  try {
    const response = await fetch('https://api.openai.com/v1/chat/completions', {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        'Authorization': `Bearer ${apiKey}`
      },
      body: JSON.stringify({
        model: model,
        messages: [{ role: 'user', content: text }]
      })
    });

    // fetch only rejects on network failures, so check the HTTP status explicitly
    if (!response.ok) {
      throw new Error(`OpenAI API returned HTTP ${response.status}`);
    }

    const data = await response.json();
    return data.choices[0].message.content;
  } catch (error) {
    console.error('Error calling OpenAI API:', error);
    return 'Error processing your request.';
  }
}

// Example usage (This would be called from your WebRTC audio processing code)
// getOpenAIResponse('Hello, OpenAI!').then(response => console.log(response));
```

Building a Real-time Voice Assistant with OpenAI WebRTC

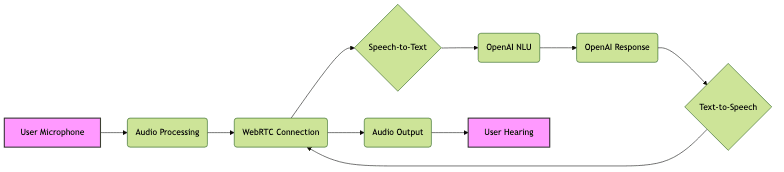

Conceptual Overview: Architecture and Components

Building a real-time voice assistant with OpenAI WebRTC involves several key components:

- WebRTC Connection: Establishes a peer-to-peer connection for real-time audio streaming.

- Audio Processing: Captures and processes audio from the user's microphone.

- Speech-to-Text: Converts the audio stream into text using OpenAI's speech recognition models.

- Natural Language Understanding: Processes the text input using OpenAI's language models to understand the user's intent.

- Text-to-Speech: Converts the AI-generated response back into an audio stream using OpenAI's text-to-speech models.

- Audio Output: Plays the audio response back to the user.

Step-by-step guide: Connecting to the OpenAI Realtime API via WebRTC

- Establish a WebRTC connection: Use a WebRTC library to create a peer-to-peer connection between the client and server.

- Capture Audio: Capture audio from the user's microphone using the `getUserMedia` API.

- Stream Audio: Send the audio stream to the server via the WebRTC connection.

- Process Audio: On the server, receive the audio stream and convert it to text using OpenAI's Speech-to-Text API.

- Send Text to OpenAI: Send the transcribed text to OpenAI's language model for processing.

- Receive Response: Receive the AI-generated response from OpenAI.

- Convert Text to Speech: Convert the response to audio using OpenAI's Text-to-Speech API.

- Stream Audio Back: Send the audio stream back to the client via the WebRTC connection.

- Play Audio: Play the audio response to the user.
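The server-side portion of the steps above (transcribe the audio, send the text to a language model, convert the reply to speech) can be sketched as one function that chains three stages. This is a minimal sketch rather than a definitive implementation: `handleVoiceTurn` and the stage names `transcribe`, `respond`, and `synthesize` are hypothetical, and each stage is injected so you can plug in whichever OpenAI API calls you choose.

```javascript
// Hypothetical helper: runs one conversational turn through three injected stages.
// Each stage is an async function you supply (for example, wrappers around
// OpenAI's speech-to-text, chat, and text-to-speech APIs).
async function handleVoiceTurn(audioChunk, stages) {
  const { transcribe, respond, synthesize } = stages;

  // Audio -> text
  const userText = await transcribe(audioChunk);
  if (!userText || userText.trim() === '') {
    // Nothing intelligible was said, so skip the remaining stages.
    return { userText: '', replyText: '', replyAudio: null };
  }

  // Text -> AI-generated reply
  const replyText = await respond(userText);

  // Reply text -> audio to stream back to the client
  const replyAudio = await synthesize(replyText);

  return { userText, replyText, replyAudio };
}
```

Keeping the stages injectable makes it easy to swap models or to test the flow with stub functions before wiring in real API calls.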

Handling Audio Streams: Sending and Receiving

Handling audio streams involves capturing audio from the user's microphone, encoding it, and sending it over the WebRTC connection. On the receiving end, you need to decode the audio stream and play it back to the user.

```javascript
// JavaScript function to handle audio streaming (runs in the browser)

function handleAudioStream(stream) {
  const audioContext = new AudioContext();
  const source = audioContext.createMediaStreamSource(stream);
  // Note: ScriptProcessorNode is deprecated in favor of AudioWorklet,
  // but it keeps this example short.
  const processor = audioContext.createScriptProcessor(1024, 1, 1);

  source.connect(processor);
  processor.connect(audioContext.destination);

  processor.onaudioprocess = function(e) {
    // e.inputBuffer contains the audio data
    const audioData = e.inputBuffer.getChannelData(0);
    // Send audioData to the server via WebRTC
    // Implement your WebRTC sending logic here
    console.log('Sending audio data:', audioData.length);
  };
}

navigator.mediaDevices.getUserMedia({ audio: true, video: false })
  .then(handleAudioStream)
  .catch(function(err) {
    console.error('Error getting audio stream:', err);
  });
```
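Raw samples from `getChannelData` are floats between -1 and 1, so they usually need to be encoded before being sent. The article does not specify a wire format; the sketch below assumes 16-bit signed PCM, a common choice for speech. `floatTo16BitPCM` is a hypothetical helper name.

```javascript
// Convert Web Audio samples (floats in [-1, 1]) to 16-bit signed PCM.
function floatTo16BitPCM(float32Samples) {
  const pcm = new Int16Array(float32Samples.length);
  for (let i = 0; i < float32Samples.length; i++) {
    // Clamp first so out-of-range samples do not wrap around.
    const s = Math.max(-1, Math.min(1, float32Samples[i]));
    // Negative values scale toward -32768, positive values toward 32767.
    pcm[i] = s < 0 ? s * 0x8000 : s * 0x7fff;
  }
  return pcm;
}
```

Inside an `onaudioprocess` handler you could call `floatTo16BitPCM(audioData)` and send the resulting `buffer` over your connection.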

Implementing Speech-to-Text and Text-to-Speech

OpenAI provides APIs for both speech-to-text and text-to-speech functionality. You can use these APIs to convert audio streams into text and vice versa. For speech-to-text, OpenAI's Whisper model is a common choice. Refer to the OpenAI documentation for detailed instructions on using these APIs.
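As a rough illustration, the two conversions might look like this with the official `openai` Node.js SDK. This is a sketch under assumptions: the helper names `transcribeAudio` and `synthesizeSpeech` are hypothetical, the `whisper-1` and `tts-1` models and the `alloy` voice are just one possible choice, and the client is passed in as a parameter rather than created here.

```javascript
// Sketch only: `client` is expected to be an instance of the official `openai`
// Node.js SDK (new OpenAI()), passed in so the helpers stay easy to test.

// Speech-to-text: `file` is an audio file the SDK accepts, for example
// fs.createReadStream('question.wav').
async function transcribeAudio(client, file) {
  const result = await client.audio.transcriptions.create({
    file,
    model: 'whisper-1',
  });
  return result.text;
}

// Text-to-speech: resolves to a Buffer of encoded audio (MP3 by default).
async function synthesizeSpeech(client, text) {
  const response = await client.audio.speech.create({
    model: 'tts-1',
    voice: 'alloy',
    input: text,
  });
  return Buffer.from(await response.arrayBuffer());
}
```

Both helpers make network calls, so in a real-time setting you would typically run them on the server and stream the resulting audio back to the client.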

Advanced OpenAI WebRTC Techniques

Optimizing for Low Latency

Latency is a critical factor in real-time applications. To optimize for low latency, consider the following techniques:

- Reduce Network Hops: Minimize the number of network hops between the client and server.

- Optimize Audio Encoding: Use efficient audio codecs that minimize encoding and decoding time.

- Reduce Buffer Size: Reduce the buffer size to minimize delay, but be mindful of potential audio dropouts.

- Prioritize Traffic: Prioritize WebRTC traffic to ensure it receives preferential treatment on the network.
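To make the buffer-size trade-off concrete, the delay a buffer adds is its length in sample frames divided by the sample rate. The helper below is a small sketch (`bufferLatencyMs` is a hypothetical name, and the 48 kHz rate is an assumption; check `audioContext.sampleRate` for the real value).

```javascript
// Delay added by buffering `bufferSize` sample frames at `sampleRate` Hz.
function bufferLatencyMs(bufferSize, sampleRate) {
  return (bufferSize / sampleRate) * 1000;
}

// Compare a few buffer sizes at an assumed 48 kHz sample rate.
for (const size of [256, 1024, 4096]) {
  console.log(`${size} frames -> ${bufferLatencyMs(size, 48000).toFixed(1)} ms`);
}
```

Smaller buffers cut delay but leave less headroom for processing each chunk, which is where the audio dropouts mentioned above come from.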

Error Handling and Robustness

Error handling is crucial for building robust applications. Implement error handling to gracefully handle network disruptions, API errors, and other unexpected issues. Use try-catch blocks to catch exceptions and provide informative error messages to the user.
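For transient failures such as brief network disruptions or rate-limited API calls, one common approach is to retry with exponential backoff. The sketch below is illustrative: `withRetry` is a hypothetical helper, and the default retry count and delay are assumptions you should tune for your latency budget.

```javascript
// Hypothetical helper: retry an async operation with exponential backoff.
async function withRetry(operation, { retries = 3, baseDelayMs = 200 } = {}) {
  let lastError;
  for (let attempt = 0; attempt <= retries; attempt++) {
    try {
      return await operation(attempt);
    } catch (error) {
      lastError = error;
      if (attempt === retries) break; // no attempts left
      // Wait baseDelayMs, then double the wait after each failed attempt.
      const delay = baseDelayMs * 2 ** attempt;
      await new Promise((resolve) => setTimeout(resolve, delay));
    }
  }
  // Surface the last failure so the caller can show an informative message.
  throw lastError;
}

// Usage sketch: withRetry(() => getOpenAIResponse('Hello')).catch(showErrorToUser);
// (`showErrorToUser` is a placeholder for your own UI code.)
```

In a voice application, long retry chains can feel worse than a quick failure message, so keep the total wait short.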

Data Channel Implementation for Enhanced Communication

WebRTC data channels allow you to send arbitrary data between peers. You can use data channels to send metadata, control signals, or other non-audio data. Data channels can enhance communication and enable features like real-time collaboration and data synchronization.
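Data channels carry strings or binary data, so control signals are often wrapped in a small JSON envelope. The sketch below assumes a made-up envelope shape (`type`, `payload`, `sentAt`); `encodeControlMessage` and `decodeControlMessage` are hypothetical helpers, not part of WebRTC.

```javascript
// Hypothetical message envelope for a control channel.
function encodeControlMessage(type, payload = {}) {
  return JSON.stringify({ type, payload, sentAt: Date.now() });
}

// Returns the parsed message, or null if the input is not a valid envelope.
function decodeControlMessage(raw) {
  try {
    const message = JSON.parse(raw);
    if (message === null || typeof message !== 'object' || typeof message.type !== 'string') {
      return null;
    }
    return message;
  } catch {
    return null; // not valid JSON
  }
}

// Browser usage (assumes `pc` is an existing RTCPeerConnection):
// const channel = pc.createDataChannel('control');
// channel.onopen = () => channel.send(encodeControlMessage('mute', { muted: true }));
// channel.onmessage = (event) => console.log(decodeControlMessage(event.data));
```

Validating on receipt matters because the remote peer controls what arrives on the channel.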

Scaling your OpenAI WebRTC application

Scaling an OpenAI WebRTC application requires careful planning and infrastructure design. Consider using a load balancer to distribute traffic across multiple servers. Implement caching to reduce the load on the OpenAI API. Use a distributed architecture to ensure high availability and fault tolerance.
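As one illustration of the caching idea, a small in-memory cache with a size cap and a time-to-live can absorb repeated identical requests. This is a sketch under assumptions: `ResponseCache` is a hypothetical class, the defaults are arbitrary, and a multi-server deployment would more likely use a shared cache.

```javascript
// Hypothetical in-memory cache with a size cap and a time-to-live.
class ResponseCache {
  constructor({ maxEntries = 100, ttlMs = 60000 } = {}) {
    this.maxEntries = maxEntries;
    this.ttlMs = ttlMs;
    this.entries = new Map(); // Map preserves insertion order
  }

  get(key, now = Date.now()) {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (now - entry.storedAt > this.ttlMs) {
      this.entries.delete(key); // expired
      return undefined;
    }
    return entry.value;
  }

  set(key, value, now = Date.now()) {
    this.entries.delete(key); // re-inserting moves the key to the newest position
    this.entries.set(key, { value, storedAt: now });
    if (this.entries.size > this.maxEntries) {
      // Evict the oldest entry.
      const oldestKey = this.entries.keys().next().value;
      this.entries.delete(oldestKey);
    }
  }
}
```

Caching only helps when the same prompt recurs, such as fixed greetings or menu prompts; free-form conversation rarely repeats exactly.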

Measuring and Improving OpenAI WebRTC Performance

Measuring Latency: Tools and Techniques

Measuring latency is essential for identifying performance bottlenecks. Use tools like `ping` and `traceroute` to measure network latency. Implement client-side and server-side logging to track processing times and identify areas for optimization. Chrome's WebRTC Internals page (chrome://webrtc-internals) is also useful for inspecting live connection statistics.

Analyzing Network Conditions

Analyzing network conditions can help you identify potential issues that may be affecting performance. Monitor network bandwidth, packet loss, and jitter. Use network monitoring tools to gain insights into network performance and identify areas for improvement.
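In the browser, these metrics are available from `RTCPeerConnection.getStats()`. The sketch below condenses the stats entries into three numbers. `summarizeAudioStats` is a hypothetical helper; it reads the standard `inbound-rtp` and `candidate-pair` entries, and it assumes a single inbound audio stream.

```javascript
// Summarize network health from WebRTC stats entries.
// `reports` is any iterable of stats objects, such as the values of the
// RTCStatsReport returned by RTCPeerConnection.getStats().
function summarizeAudioStats(reports) {
  const summary = { packetLossPercent: null, jitterMs: null, roundTripTimeMs: null };

  for (const report of reports) {
    if (report.type === 'inbound-rtp' && report.kind === 'audio') {
      const total = report.packetsReceived + report.packetsLost;
      summary.packetLossPercent = total > 0 ? (report.packetsLost / total) * 100 : 0;
      summary.jitterMs = report.jitter * 1000; // jitter is reported in seconds
    }
    if (report.type === 'candidate-pair' && report.nominated &&
        typeof report.currentRoundTripTime === 'number') {
      summary.roundTripTimeMs = report.currentRoundTripTime * 1000; // seconds -> ms
    }
  }
  return summary;
}

// Browser usage (assumes `pc` is an existing RTCPeerConnection):
// const stats = await pc.getStats();
// console.log(summarizeAudioStats(stats.values()));
```

Polling this every few seconds and logging the result gives you a time series to correlate with user-reported audio problems.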

Troubleshooting and Optimization Strategies

Troubleshooting performance issues requires a systematic approach. Start by identifying the symptoms and gathering data. Use logging and monitoring tools to pinpoint the source of the problem. Experiment with different optimization techniques to improve performance. For example, adjusting the bitrate of the audio stream can help improve performance under poor network conditions.
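The bitrate adjustment mentioned above can be sketched with the standard `RTCRtpSender.getParameters()` and `setParameters()` methods. The policy function `chooseAudioBitrate` and its thresholds are hypothetical and purely illustrative; `applyMaxBitrate` is likewise an assumed helper name.

```javascript
// Hypothetical policy: pick an audio bitrate (bits per second) from packet loss.
// The thresholds are illustrative, not tuned values.
function chooseAudioBitrate(packetLossPercent) {
  if (packetLossPercent > 10) return 16000;
  if (packetLossPercent > 3) return 24000;
  return 32000;
}

// Cap the bitrate on an RTCRtpSender via getParameters/setParameters.
async function applyMaxBitrate(sender, maxBitrate) {
  const params = sender.getParameters();
  if (!params.encodings || params.encodings.length === 0) {
    params.encodings = [{}]; // some browsers return no encodings before negotiation
  }
  params.encodings[0].maxBitrate = maxBitrate;
  await sender.setParameters(params);
  return params;
}

// Browser usage (assumes `pc` is an existing RTCPeerConnection sending audio):
// const sender = pc.getSenders().find((s) => s.track && s.track.kind === 'audio');
// await applyMaxBitrate(sender, chooseAudioBitrate(7));
```

Change one setting at a time and compare measurements before and after, so you can tell which adjustment actually helped.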

Security Considerations for OpenAI WebRTC

Protecting API Keys and User Data

Protecting API keys and user data is paramount. Never expose API keys in client-side code. Store API keys securely in environment variables or configuration files. Encrypt user data both in transit and at rest. Implement access controls to restrict access to sensitive data.
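On the server, a small startup check can enforce the environment-variable approach and keep the key out of logs. This is a sketch: `loadApiKey` and `maskSecret` are hypothetical helpers, and `OPENAI_API_KEY` is the variable name used earlier in this article.

```javascript
// Hypothetical helper: read the key from the environment and fail fast if missing.
function loadApiKey(env = process.env) {
  const key = env.OPENAI_API_KEY;
  if (!key || key.trim() === '') {
    throw new Error('OPENAI_API_KEY is not set; refusing to start.');
  }
  return key.trim();
}

// Mask a secret for logging so the full value never reaches log files.
function maskSecret(secret) {
  if (secret.length <= 8) return '*'.repeat(secret.length);
  return `${secret.slice(0, 3)}...${secret.slice(-4)}`;
}

// Usage sketch:
// const apiKey = loadApiKey();
// console.log('Using OpenAI key', maskSecret(apiKey));
```

Failing at startup is usually easier to diagnose than a stream of authentication errors once users are connected.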

Secure Communication Channels

WebRTC provides built-in security features: media streams and data channels are encrypted by default using DTLS and SRTP. Ensure that the rest of your stack is equally protected against eavesdropping and tampering by using HTTPS for all web traffic, including the signaling channel that sets up WebRTC connections.

Mitigating Potential Vulnerabilities

Be aware of potential vulnerabilities in WebRTC and OpenAI APIs. Stay up-to-date with security patches and best practices. Implement input validation and sanitization to prevent injection attacks. Regularly audit your code for security vulnerabilities.
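As a starting point for input validation, user text (typed or transcribed) can be checked before it is forwarded to any API. The sketch below is deliberately simple: `sanitizeUserText` is a hypothetical helper, the 2000-character default is an arbitrary assumption, and it does not replace context-specific escaping where the text is later used.

```javascript
// Hypothetical validator for transcribed or typed user text.
function sanitizeUserText(input, maxLength = 2000) {
  if (typeof input !== 'string') {
    throw new TypeError('Input must be a string.');
  }
  // Remove ASCII control characters except tab, newline, and carriage return.
  const cleaned = input
    .replace(/[\u0000-\u0008\u000B\u000C\u000E-\u001F\u007F]/g, '')
    .trim();
  if (cleaned.length === 0) {
    throw new RangeError('Input is empty.');
  }
  // Enforce a length limit to bound API cost and reduce abuse.
  return cleaned.slice(0, maxLength);
}
```

For example, `sanitizeUserText(transcript)` could run on the server just before the text is sent to the language model.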

Real-World Applications of OpenAI WebRTC

Voice-enabled Chatbots

OpenAI WebRTC can be used to create voice-enabled chatbots that provide real-time assistance and support to users. These chatbots can understand natural language, answer questions, and perform tasks using voice commands.

Interactive Voice Response (IVR) Systems

IVR systems can be enhanced with OpenAI WebRTC to provide more natural and intuitive voice interactions. Users can speak to the system using natural language, and the system can respond using AI-generated speech.

Real-time Language Translation

Real-time language translation applications can be built using OpenAI WebRTC. These applications can translate spoken language in real-time, enabling seamless communication between people who speak different languages.

Accessibility Features

OpenAI WebRTC can be used to create accessibility features for people with disabilities. For example, it can be used to provide real-time transcription of spoken language for people who are deaf or hard of hearing.


FAQ