What is Automatic Speech Recognition (ASR)? Technology, History, and Modern Strategies in 2025

Explore the fundamentals of automatic speech recognition (ASR), its historical evolution, core technologies, modern deep learning approaches, real-world applications, challenges, and future outlook—all tailored for developers.

Introduction to Automatic Speech Recognition (ASR)

Automatic Speech Recognition (ASR) is a technology that converts spoken language into written text using computational methods. In 2025, ASR is foundational to many modern software applications, making human-computer interaction more natural and inclusive. From powering voice assistants like Google Assistant and Siri to enabling real-time transcription and accessibility features, ASR systems have become indispensable in both consumer and enterprise technology. The surge in digital communication and the proliferation of smart devices demand highly accurate and robust speech recognition systems. Developers and engineers now use ASR to build solutions across domains, from healthcare to customer service. Understanding what automatic speech recognition is and how it works is crucial for software professionals aiming to create voice-driven applications and interfaces.

The Evolution and History of Automatic Speech Recognition (ASR)

The history of automatic speech recognition dates back to the 1950s, with early systems such as Audrey, developed by Bell Labs, capable of recognizing digits spoken by a single voice. These pioneering systems operated on rudimentary acoustic models and were limited in vocabulary and speaker adaptability. The progression from template-based methods to statistical models fueled the first wave of commercial ASR systems. From the 1980s onward, hidden Markov models (HMMs), typically paired with Gaussian mixture models (GMMs), became the dominant approach, enabling broader vocabularies and improved accuracy.

The past decade accelerated the evolution of ASR through deep learning, with neural networks significantly outperforming traditional models. Open-source frameworks like Mozilla DeepSpeech and open models such as Whisper have democratized access to high-quality ASR. The emergence of multilingual ASR and universal speech models, including Google’s Universal Speech Model, highlights the rapid pace of innovation. Today’s ASR systems are far more adaptable, supporting dozens of languages and dialects, and are integral to cloud-based services and edge computing solutions. Developers seeking to integrate speech recognition into their applications can leverage tools like Voice SDK to streamline the process and enhance user experiences.

How Does Automatic Speech Recognition Work?

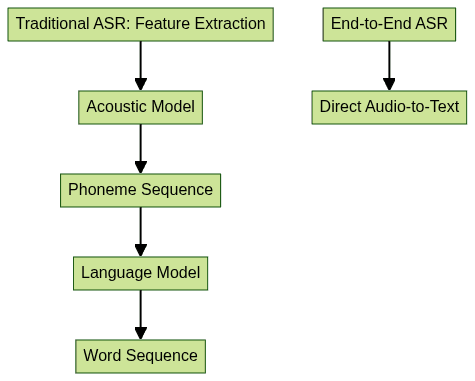

Automatic speech recognition is a multi-stage process that translates audio signals into readable text. Its core components are audio capture, feature extraction, acoustic modeling, language modeling, and decoding. Each stage plays a critical role in the accuracy and efficiency of the ASR pipeline.

Step 1: Audio Capture and Feature Extraction

ASR systems begin by capturing audio through microphones. The raw audio is then processed to extract meaningful features, such as Mel-frequency cepstral coefficients (MFCCs), which compactly represent the spectral properties of speech. Such features are typically derived from a spectrogram, a time-frequency representation of the audio signal. For developers building real-time communication tools, integrating a JavaScript video and audio calling SDK can facilitate seamless audio capture and transmission within web applications.
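
To make the front end of the pipeline more concrete, here is a minimal sketch of the steps that usually precede feature extraction: splitting the signal into short overlapping frames, applying a window, and mapping frequencies to the mel scale. It uses only the Python standard library. The 25 ms window, 10 ms hop, synthetic 440 Hz test tone, and all function names are assumptions chosen for illustration, not details from any particular ASR system.

```python
import math

def hz_to_mel(freq_hz):
    """Convert Hz to mels using one common (HTK-style) formula."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)

def frame_signal(samples, frame_len, hop_len):
    """Split a 1-D signal into overlapping frames; a trailing partial frame is dropped."""
    last_start = len(samples) - frame_len
    return [samples[s:s + frame_len] for s in range(0, last_start + 1, hop_len)]

def hamming(frame):
    """Apply a Hamming window to reduce spectral leakage at the frame edges."""
    n = len(frame)
    return [x * (0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1)))
            for i, x in enumerate(frame)]

def log_energy(frame):
    """Log of the frame energy; the small constant avoids log(0) on silence."""
    return math.log(sum(x * x for x in frame) + 1e-10)

# Hypothetical input: one second of a 440 Hz tone sampled at 16 kHz.
sample_rate = 16000
signal = [math.sin(2 * math.pi * 440 * t / sample_rate) for t in range(sample_rate)]

# 25 ms windows (400 samples) with a 10 ms hop (160 samples).
frames = frame_signal(signal, frame_len=400, hop_len=160)
energies = [log_energy(hamming(f)) for f in frames]
print(len(frames), "frames of", len(frames[0]), "samples")
```

A full MFCC front end would additionally take a Fourier transform of each windowed frame, pool the spectrum with mel-spaced filters, and apply a discrete cosine transform; libraries such as librosa bundle those steps.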

Step 2: Acoustic Modeling in Automatic Speech Recognition

The extracted features are input to an acoustic model, which predicts phonetic units or subword components. Modern ASR systems use deep neural networks to map these features to phonemes, accounting for variations in accent, speed, and background noise.
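
As a rough illustration of what "mapping features to phonemes" means, the sketch below applies a single linear layer followed by a softmax to one feature vector and reads off a probability for each phonetic unit. Everything in it is hypothetical: the four-symbol phoneme inventory, the three-dimensional feature vector, and the hand-picked, untrained weights. A production acoustic model is a deep network trained on large amounts of speech and looks at many frames of context.

```python
import math

PHONEMES = ["sil", "k", "ae", "t"]  # hypothetical toy phoneme inventory

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1 (numerically stable form)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def frame_posteriors(features, weights, biases):
    """One linear layer plus softmax: a stand-in for a real neural acoustic model."""
    logits = [sum(w * x for w, x in zip(row, features)) + b
              for row, b in zip(weights, biases)]
    return softmax(logits)

# Hypothetical feature vector for one frame and untrained, hand-picked parameters.
features = [0.5, -1.2, 0.3]
weights = [
    [0.1, 0.0, 0.2],    # sil
    [1.0, -0.5, 0.0],   # k
    [-0.3, 0.8, 0.1],   # ae
    [0.0, 0.2, -0.4],   # t
]
biases = [0.0, 0.1, -0.1, 0.0]

posteriors = frame_posteriors(features, weights, biases)
best = PHONEMES[max(range(len(posteriors)), key=posteriors.__getitem__)]
print(dict(zip(PHONEMES, posteriors)), "->", best)
```

Running this once per frame yields the sequence of per-frame probabilities that the later decoding stage consumes.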

The MFCC features described in Step 1 can be extracted in Python with librosa:

```python
import librosa
import numpy as np

audio_path = "sample.wav"

# Load the audio at its native sampling rate (sr=None disables resampling)
y, sr = librosa.load(audio_path, sr=None)

# Compute 13 MFCCs per frame; the result has shape (n_mfcc, n_frames)
mfccs = librosa.feature.mfcc(y=y, sr=sr, n_mfcc=13)
print(np.shape(mfccs))  # (13, n_frames)
```

For those working in Python, a Python video and audio calling SDK can be utilized to handle audio streams and integrate ASR capabilities into backend or server-side applications.

Step 3: Language Modeling & Decoding in Automatic Speech Recognition

After phonetic probabilities are produced, a language model estimates the likelihood of word sequences. This model helps resolve ambiguities and steers the output toward grammatically coherent text. Techniques range from n-gram models to transformer-based neural networks. The decoder integrates acoustic and language model outputs to generate the most probable transcription, which is how ASR systems achieve contextual accuracy. For applications involving phone-based interactions, integrating a phone call API can help developers add robust voice communication features alongside ASR.

Modern Approaches: Deep Learning and End-to-End ASR

Deep learning has transformed automatic speech recognition by enabling end-to-end models that transcribe audio directly to text. Traditional ASR pipelines rely on separately trained acoustic and language models, while modern systems leverage approaches such as Connectionist Temporal Classification (CTC), the Recurrent Neural Network Transducer (RNN-T), and attention-based encoder-decoder models.
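
For contrast with end-to-end models, the following sketch shows how a traditional decoder might combine separately produced acoustic and language model scores when choosing between candidate transcriptions. The bigram probabilities, acoustic scores, unseen-bigram penalty, and `lm_weight` value are all made-up numbers for illustration; real decoders search over far larger hypothesis spaces using lattices or beam search.

```python
import math

# Hypothetical bigram log-probabilities; "<s>" marks the start of a sentence.
BIGRAM_LOGPROB = {
    ("<s>", "recognize"): math.log(0.2),
    ("recognize", "speech"): math.log(0.5),
    ("<s>", "wreck"): math.log(0.01),
    ("wreck", "a"): math.log(0.3),
    ("a", "nice"): math.log(0.2),
    ("nice", "beach"): math.log(0.1),
}
UNSEEN_LOGPROB = math.log(1e-4)  # flat penalty for bigrams not in the table

def lm_score(words):
    """Sum bigram log-probabilities over the word sequence."""
    history = ["<s>"] + words[:-1]
    return sum(BIGRAM_LOGPROB.get((prev, word), UNSEEN_LOGPROB)
               for prev, word in zip(history, words))

def rescore(hypotheses, lm_weight=0.8):
    """Pick the hypothesis maximizing acoustic score + lm_weight * LM score."""
    best = max(hypotheses, key=lambda h: h[1] + lm_weight * lm_score(h[0]))
    return best[0]

# Each hypothesis pairs a word sequence with a hypothetical acoustic log-score.
hypotheses = [
    (["wreck", "a", "nice", "beach"], -10.0),
    (["recognize", "speech"], -10.5),
]

print(" ".join(rescore(hypotheses)))               # language model included
print(" ".join(rescore(hypotheses, lm_weight=0)))  # acoustic score only
```

With the language model switched off, the acoustically stronger but less plausible phrase wins; with it on, the more likely word sequence is preferred. That is the ambiguity-resolving role of the language model in a nutshell.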

End-to-end models, powered by deep neural networks, reduce the need for handcrafted features and allow direct optimization for transcription accuracy. End-to-end ASR streamlines training, simplifies deployment, and improves adaptability to new languages and domains. For organizations looking to implement scalable video and audio communication, a Video Calling API can be integrated to support real-time collaboration with built-in ASR features.
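
To give a feel for how a CTC-based model turns per-frame outputs into text, here is a minimal sketch of greedy CTC decoding: take the most likely symbol in each frame, merge consecutive repeats, then drop the blank symbol. The label set and the per-frame probabilities are invented for illustration, and the function name is an assumption; production systems often use beam search, sometimes with a language model, instead of this greedy rule.

```python
from itertools import groupby

BLANK = "_"  # CTC blank symbol (the choice of character is arbitrary here)

def ctc_greedy_decode(frame_probs, labels):
    """Greedy CTC decoding: per-frame argmax, collapse repeats, remove blanks."""
    # 1. Most likely label in each frame.
    best_path = [labels[max(range(len(p)), key=p.__getitem__)] for p in frame_probs]
    # 2. Merge runs of the same symbol into a single symbol.
    collapsed = [symbol for symbol, _ in groupby(best_path)]
    # 3. Drop blanks, which only separate symbols and mark "no output".
    return "".join(symbol for symbol in collapsed if symbol != BLANK)

labels = [BLANK, "c", "a", "t"]

# Hypothetical per-frame probabilities over the labels above (six frames).
frame_probs = [
    [0.10, 0.70, 0.10, 0.10],  # c
    [0.10, 0.60, 0.20, 0.10],  # c (repeat, merged with the previous frame)
    [0.80, 0.10, 0.05, 0.05],  # blank
    [0.10, 0.10, 0.70, 0.10],  # a
    [0.20, 0.10, 0.10, 0.60],  # t
    [0.10, 0.10, 0.10, 0.70],  # t (repeat)
]

text = ctc_greedy_decode(frame_probs, labels)
print(text)
```

The blank is what lets CTC emit genuine double letters: a path such as `l _ l` decodes to `ll`, whereas `l l` collapses to a single `l`.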

The advantages of deep learning in ASR include better handling of noisy environments, improved speaker independence, and the ability to learn complex patterns in speech data. Open-source projects like Whisper and end-to-end frameworks such as ESPnet and NVIDIA NeMo have made state-of-the-art ASR accessible to the wider developer community in 2025. Additionally, solutions like Voice SDK provide developers with easy-to-use tools for integrating live audio features and speech recognition into their applications.

Applications and Use Cases of Automatic Speech Recognition

Automatic speech recognition technology is ubiquitous in today’s digital landscape. Leading applications include:

- Voice Assistants: ASR powers devices like Amazon Alexa and Google Assistant, enabling natural voice commands for smart home control and information access.

- Transcription Services: Real-time and batch transcription tools automate note-taking in meetings, lectures, and interviews, increasing productivity.

- Accessibility: ASR-driven captions and voice-to-text services make digital content accessible to users with hearing or motor impairments.

- Call Centers: Automated speech recognition enables interactive voice response (IVR) systems, customer sentiment analysis, and call transcription.

- Healthcare: Medical professionals use ASR for hands-free note dictation, improving patient record accuracy and efficiency.

- Media and Entertainment: ASR facilitates content indexing, subtitle generation, and interactive gaming by processing spoken input.

As ASR accuracy and language support improve, developers are integrating speech recognition into an expanding array of real-world scenarios, from real-time translation to voice-driven programming assistants. For those interested in building interactive live experiences, a Live Streaming API SDK can enable real-time audio and video streaming with integrated ASR for enhanced engagement.

Factors Affecting the Accuracy of Automatic Speech Recognition

The performance of ASR systems is primarily measured by Word Error Rate (WER): the number of substituted, deleted, and inserted words in the transcription, divided by the number of words in the reference transcript. Key factors influencing accuracy include:

- Background Noise: High noise levels reduce ASR reliability, necessitating advanced noise-cancellation techniques.

- Accents and Dialects: Variability in pronunciation introduces challenges, especially for global applications.

- Domain-Specific Vocabulary: Specialized terminology or jargon may be underrepresented in training data, lowering recognition rates.

- Audio Quality: Poor microphone quality and compression artifacts degrade feature extraction.

- Privacy Considerations: On-device ASR and differential privacy methods are increasingly employed to protect user data while maintaining system performance.
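
The Word Error Rate mentioned at the start of this section can be computed with a word-level edit distance. The sketch below is one straightforward way to do it, assuming simple whitespace tokenization and no text normalization (casing, punctuation); the function name and the example sentences are illustrative only.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # Word-level Levenshtein distance, keeping only the previous row in memory.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,                      # deletion (reference word missing)
                curr[j - 1] + 1,                  # insertion (extra hypothesis word)
                prev[j - 1] + substitution_cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)

# One substitution ("sat" -> "sit") and one deletion ("the") against 6 reference words.
wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
print(f"WER: {wer:.2%}")
```

Because insertions count as errors but do not add to the reference length, WER can exceed 100% when the hypothesis contains many extra words.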

Optimizing the factors listed above is essential for delivering robust ASR across diverse contexts in 2025. Developers can further enhance their applications by integrating a Voice SDK, which offers advanced features for managing audio quality and background noise in real-time communication environments.

Limitations and Challenges of Automatic Speech Recognition

Despite significant advancements, automatic speech recognition faces several limitations:

- Accuracy: ASR systems may struggle with homophones, rare words, or overlapping speech.

- Privacy: Cloud-based ASR raises concerns over data security and user confidentiality.

- Language and Context: Many languages and dialects remain underrepresented, and context-aware understanding is an ongoing research challenge.

- Computational Requirements: Real-time ASR on edge devices demands efficient algorithms and hardware optimization.

Developers must balance these constraints when designing ASR-enabled applications. Utilizing a Voice SDK can help address some of these challenges by providing flexible APIs and tools for secure, scalable voice integration.

The Future of Automatic Speech Recognition

The future of automatic speech recognition is driven by advances in multilingual models, open-source collaboration, and AI research. Initiatives like Google’s Universal Speech Model aim to support hundreds of languages, breaking down communication barriers. Open-source projects, such as Whisper, foster transparency and rapid innovation.

Emerging trends include federated learning for privacy-preserving ASR, real-time speech translation, and context-aware assistants. As AI models become more generalized and capable, ASR will play a central role in shaping human-computer interaction for years to come.

Conclusion: The Transformative Impact of Automatic Speech Recognition

Automatic speech recognition has revolutionized digital interfaces, making technology more accessible, intuitive, and productive. Developers are at the forefront of this transformation, leveraging state-of-the-art ASR to build smarter applications. As innovation accelerates in 2025, the potential of ASR to bridge communication gaps and drive new experiences is greater than ever. If you’re ready to explore the possibilities of ASR and voice technology, try it for free and start building your own voice-driven applications today.


FAQ