Introduction to Speech Recognition Threshold

Speech recognition threshold (SRT) is a foundational concept in audiology and hearing technology, representing the minimum intensity level (measured in decibels) at which speech stimuli can be correctly recognized 50% of the time. In 2025, SRT remains a key metric for both clinical assessment and the development of advanced hearing solutions.

Understanding SRT is vital for designing hearing aids, cochlear implant programming, and auditory training software. For developers and engineers working on speech processing applications, knowledge of SRT ensures that products are attuned to the real-world needs of users with hearing loss. The measurement of SRT provides insights into speech perception abilities and guides interventions, making it a cornerstone in the field of hearing science.
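To make the 50% criterion in the definition above concrete, here is a minimal sketch that estimates the SRT from per-level scores by linear interpolation. The function name `estimate_srt` and the sample scores are hypothetical, chosen only for illustration; clinical protocols define their own scoring rules.

```python
def estimate_srt(levels_db, proportion_correct, target=0.5):
    """Interpolate the level (dB HL) at which recognition crosses `target`.

    levels_db must be sorted in ascending order, and proportion_correct
    holds the fraction of words repeated correctly at each level.
    Returns None if no adjacent pair of levels brackets the target.
    """
    points = list(zip(levels_db, proportion_correct))
    for (lo_db, lo_p), (hi_db, hi_p) in zip(points, points[1:]):
        if lo_p < target <= hi_p:
            # Linear interpolation between the two bracketing levels.
            fraction = (target - lo_p) / (hi_p - lo_p)
            return lo_db + fraction * (hi_db - lo_db)
    return None


# Hypothetical scores: fraction of words repeated correctly at each level.
levels = [10, 15, 20, 25, 30]
scores = [0.0, 0.25, 0.75, 1.0, 1.0]
print(estimate_srt(levels, scores))  # 17.5
```

With these sample scores, recognition crosses 50% halfway between 15 and 20 dB HL, so the estimate is 17.5 dB HL.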

Understanding the Speech Recognition Threshold (SRT)

What is Speech Recognition Threshold?

The speech recognition threshold (SRT) is defined as the lowest sound level (in decibels, dB) at which an individual can correctly repeat or recognize 50% of presented speech stimuli, typically using spondee words (two-syllable words with equal stress). This audiometric threshold is a direct measure of speech perception and is critical in assessing an individual's ability to detect and understand speech in controlled environments. For developers building interactive audio solutions, integrating a Voice SDK can streamline the process of capturing and analyzing speech responses during SRT testing.

Historical Evolution of SRT Measurement

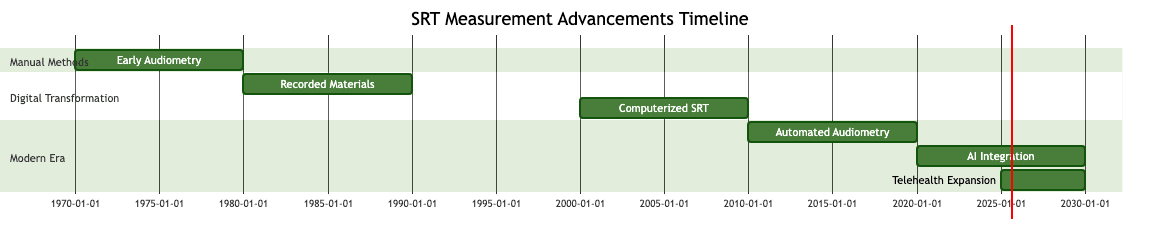

Early SRT measurements relied on live-voice presentations and manual adjustments of intensity by audiologists, yielding variable results. The adoption of recorded, standardized speech materials over the course of the 20th century improved consistency and reliability. Modern SRT testing integrates computer-driven audiometry, automated scoring, and speech-in-noise paradigms, leveraging advancements in digital signal processing. Today’s protocols enable precise, repeatable, and efficient SRT assessments, which are crucial for developing hearing technology and evaluating hearing aid or cochlear implant performance. Developers can further enhance these assessments by utilizing a JavaScript video and audio calling SDK to facilitate real-time communication and remote testing environments.

The Role of SRT in Hearing Assessment

Why SRT is Essential in Audiological Evaluations

SRT serves as a benchmark for speech perception ability. It allows clinicians and developers to quantify how well an individual recognizes everyday speech, providing a functional complement to pure-tone audiometry. SRT outcomes guide the fitting of hearing aids and the programming of cochlear implants, ensuring devices are tuned for optimal speech understanding. For those building cross-platform solutions, leveraging a Python video and audio calling SDK can help create robust tools for remote SRT assessments and teleaudiology.

How SRT Complements Other Audiological Tests

SRT does not stand alone; it fits within a comprehensive battery of hearing assessments. Pure-tone threshold tests determine the absolute threshold of hearing for specific frequencies, while word recognition scores and suprathreshold speech recognition tests evaluate an individual’s ability to perceive speech at levels above the SRT. This layered approach ensures a holistic understanding of auditory function. Incorporating a React Native video and audio calling SDK can provide seamless mobile integration for these multi-faceted assessments.

This diagram illustrates the progression from basic hearing detection to complex speech perception, with SRT acting as a critical bridge between pure-tone testing and advanced speech evaluation.

Factors Influencing Speech Recognition Threshold

Hearing Loss and Its Impact on SRT

The nature and degree of hearing loss significantly affect SRT values. Sensorineural hearing loss, caused by damage to the inner ear or auditory nerve, typically results in elevated SRT, as speech perception is compromised. Conductive hearing loss, stemming from middle or outer ear dysfunction, also raises SRT but may be partially reversible with medical treatment. Mixed hearing loss combines both types, further complicating speech recognition. Developers must consider these factors when designing adaptive speech processing algorithms in hearing technology, and can benefit from integrating a Flutter video and audio calling API to enable real-time testing and feedback across platforms.

Effects of Age and Cognitive Factors on SRT

Age-related hearing loss (presbycusis) is a prevalent factor that increases SRT, particularly in the elderly. Cognitive decline, such as reduced working memory or slower processing speed, can also elevate SRT by impairing the listener’s ability to decode and repeat speech. Developers of speech recognition and auditory training platforms should account for these variables, offering adaptive solutions tailored to age and cognition. Utilizing an embeddable video calling SDK can help make these platforms more accessible and user-friendly for diverse populations.

Linguistic and Cultural Factors

The familiarity and relevance of test materials influence SRT outcomes. Speech materials must be culturally and linguistically appropriate, using words and accents familiar to the test population, to ensure accurate assessment. Developers creating multilingual audiometry tools should incorporate region-specific speech stimuli and consider cultural factors in their interface design. Implementing a Voice SDK can facilitate the delivery of localized speech content and support real-time language switching during assessments.

Environmental Factors: Quiet vs. Noise

SRT values are typically lower in quiet conditions and rise significantly in the presence of background noise. Speech-in-noise testing protocols measure SRT under realistic listening environments, better reflecting daily communication challenges and informing advanced noise reduction strategies in hearing devices. For developers, integrating a phone call API can simulate real-world audio environments and enhance the realism of speech-in-noise testing scenarios.

Methods and Protocols for Measuring Speech Recognition Threshold

Standardized Test Materials

SRT measurement relies on carefully selected speech materials, most commonly spondee words. These two-syllable words, like "baseball" or "toothbrush", have equal stress and are easy to recognize. Some protocols also use monosyllabic words or sentences, especially in pediatric or cross-linguistic contexts. Standardization guarantees consistency and comparability across test sessions and locations. For developers aiming to streamline these protocols, integrating a Voice SDK can automate the delivery and scoring of standardized speech materials.

Testing Protocols and Procedures

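Modern SRT testing may be manual or automated. The typical procedure presents speech stimuli at varying intensity levels and records the lowest decibel level at which 50% correct recognition is achieved. Automated audiometry systems can streamline this process. Leveraging a Voice SDK can further automate response collection and analysis, making SRT testing more efficient and scalable.

```python
# Sketch of automated SRT testing with a descending procedure.
# present_speech, get_user_response, and check_correct are placeholders for
# the playback, response capture, and scoring backends; words_per_level=4
# is an illustrative choice, not a clinical recommendation.
def measure_srt(present_speech, get_user_response, check_correct,
                speech_levels=(70, 65, 60, 55, 50), words_per_level=4):
    srt = None
    for level in speech_levels:  # dB HL, from loudest to softest
        correct_responses = []
        for _ in range(words_per_level):
            present_speech(level)
            response = get_user_response()
            correct_responses.append(bool(check_correct(response)))
        if sum(correct_responses) / words_per_level < 0.5:
            break  # fewer than 50% correct: keep the previous level
        srt = level  # lowest level so far with at least 50% correct
    return srt

# Usage, once the three callables are wired to real audio and scoring code:
# srt = measure_srt(present_speech, get_user_response, check_correct)
# print(f"Speech Recognition Threshold: {srt} dB HL")
```

Interpreting SRT Results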

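Clinically, a normal SRT typically falls within 0–25 dB HL. Elevated SRTs suggest hearing impairment or possible retrocochlear pathology. The SRT is also cross-checked against the pure-tone average (PTA), commonly the mean of the thresholds at 500, 1000, and 2000 Hz. The two values normally agree within roughly 10 dB, and a larger discrepancy can point to unreliable responses, non-organic hearing loss, or malingering. Accurate interpretation is essential for guiding hearing aid fitting, cochlear implant candidacy, and auditory rehabilitation strategies.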
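The comparison between SRT and pure-tone average can be sketched in a few lines. This is an illustrative example, not a clinical tool: the function names, the three-frequency average (500, 1000, and 2000 Hz), the 10 dB tolerance, and the sample audiogram are all assumptions made for the sketch and should be replaced by whatever the applicable protocol specifies.

```python
def pure_tone_average(thresholds_db_hl):
    """Three-frequency PTA: mean threshold at 500, 1000, and 2000 Hz."""
    return sum(thresholds_db_hl[f] for f in (500, 1000, 2000)) / 3


def check_srt_pta_agreement(srt_db_hl, thresholds_db_hl, tolerance_db=10):
    """Return (pta, difference, agrees) for one ear.

    tolerance_db is an assumed rule-of-thumb limit, not a fixed standard.
    """
    pta = pure_tone_average(thresholds_db_hl)
    difference = srt_db_hl - pta
    return pta, difference, abs(difference) <= tolerance_db


# Hypothetical audiogram for one ear: frequency (Hz) -> threshold (dB HL).
audiogram = {250: 20, 500: 30, 1000: 40, 2000: 50, 4000: 60}
pta, difference, agrees = check_srt_pta_agreement(25, audiogram)
print(pta, difference, agrees)  # 40.0 -15.0 False
```

Here the SRT is 15 dB better than the PTA, so the sketch flags the pair as disagreeing, which is the kind of result that would prompt a retest or further investigation.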

Applications of Speech Recognition Threshold in Clinical Practice

Diagnosis and Management of Hearing Loss

SRT is indispensable in diagnosing hearing loss and shaping management plans. Audiologists use SRT data to calibrate hearing aids and fine-tune cochlear implant settings, ensuring that amplification matches the user’s speech perception threshold. For developers, integrating SRT metrics into device firmware can enhance user outcomes and satisfaction. Using a Voice SDK can help synchronize SRT data with cloud-based management tools for streamlined device programming.

Monitoring and Rehabilitation

SRT measurements are critical for tracking progress in auditory rehabilitation, such as after cochlear implantation or auditory training programs. Changes in SRT over time indicate improvements (or declines) in speech perception, guiding adjustments in therapy or device programming. Software solutions that visualize SRT trends empower clinicians and end-users alike.
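As a rough illustration of trend tracking, the sketch below fits a least-squares line through a series of SRT measurements and reports the change per 30 days. The function name `srt_change_per_month` and the follow-up data are hypothetical, and a straight-line fit is only one simple way to summarize a trend.

```python
from datetime import date


def srt_change_per_month(sessions):
    """Least-squares slope of SRT (dB HL) against time, in dB per 30 days.

    `sessions` is a list of (date, srt_db_hl) pairs. A negative slope means
    the threshold is dropping, i.e. speech recognition is improving.
    """
    if len(sessions) < 2:
        raise ValueError("need at least two sessions to estimate a trend")
    start = min(day for day, _ in sessions)
    xs = [(day - start).days for day, _ in sessions]
    ys = [srt for _, srt in sessions]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("sessions must fall on at least two different dates")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return 30 * sxy / sxx


# Hypothetical follow-up data after cochlear implantation.
history = [
    (date(2025, 1, 1), 55),
    (date(2025, 1, 31), 50),
    (date(2025, 3, 2), 45),
]
print(srt_change_per_month(history))  # -5.0
```

A dashboard could plot the raw points alongside this slope so that clinicians see both the individual sessions and the overall direction of change.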

Special Populations

Specialized SRT protocols exist for pediatric and elderly populations, accounting for age-specific challenges in attention and cognition. Developers creating assessment tools for these groups must ensure accessible interfaces, engaging speech materials, and robust error handling.

Advancements and Future Directions in SRT Measurement

The future of SRT measurement is shaped by innovations in digital audiometry, speech-in-noise testing, and artificial intelligence (AI). Automated, cloud-based audiometry platforms allow for remote SRT testing and telehealth integration. AI-driven speech recognition algorithms can adaptively select test materials and analyze responses, increasing accuracy and efficiency. Integrating a Voice SDK into these platforms can further enhance real-time interaction and data collection for remote SRT assessments.
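Adaptive testing does not need machine learning to illustrate. As a simpler, non-AI stand-in, the sketch below uses a classic one-down, one-up staircase, which lowers the level after a correct response and raises it after an error, so it converges on the 50% point. The simulated listener, its logistic parameters, the step size, and the stopping rule are all assumptions made for this example.

```python
import math
import random


def simulated_listener(level_db, true_srt_db=32.0, slope=0.5, rng=random):
    """Hypothetical listener: logistic psychometric function around true_srt_db."""
    p_correct = 1.0 / (1.0 + math.exp(-slope * (level_db - true_srt_db)))
    return rng.random() < p_correct


def adaptive_srt(respond, start_db=60.0, step_db=2.0,
                 max_reversals=8, max_trials=200):
    """One-down, one-up staircase that tracks the 50% point.

    `respond(level_db)` returns True when the word was repeated correctly.
    The estimate is the mean level at the reversal points, or None if the
    response direction never changed within max_trials presentations.
    """
    level = start_db
    last_direction = None
    reversal_levels = []
    for _ in range(max_trials):
        direction = -1 if respond(level) else 1  # correct -> go quieter
        if last_direction is not None and direction != last_direction:
            reversal_levels.append(level)
            if len(reversal_levels) == max_reversals:
                break
        last_direction = direction
        level += direction * step_db
    if not reversal_levels:
        return None
    return sum(reversal_levels) / len(reversal_levels)


rng = random.Random(0)  # fixed seed so the simulation is repeatable
estimate = adaptive_srt(lambda level: simulated_listener(level, rng=rng))
print(f"Estimated SRT: {estimate:.1f} dB HL")
```

In a remote testing platform, `respond` would wrap stimulus playback and response scoring instead of a simulation. More elaborate adaptive methods could replace the fixed step size with a model-based choice of the next level.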

Expect further developments in 2025 and beyond, including mobile SRT testing apps, real-time speech-in-noise assessments, and personalized auditory training powered by machine learning.

Conclusion

Speech recognition threshold (SRT) remains a vital metric in audiology and hearing technology, underpinning accurate assessment and effective intervention. As digital tools and AI-driven solutions evolve in 2025, understanding and implementing SRT protocols is essential for developers, clinicians, and engineers striving to improve communication outcomes for individuals with hearing loss. Early assessment and proactive management based on SRT can make a profound difference in quality of life.


FAQ