Introduction to Speech Recognition Technology

Speech recognition technology, also known as automatic speech recognition (ASR) or voice-to-text, has rapidly advanced in recent years, profoundly impacting the way humans interact with computers. As digital interfaces evolve, voice AI is becoming a pivotal component of modern software, enabling more natural and efficient communication between humans and machines. From digital assistants and smart devices to real-time transcription and accessibility tools, speech recognition is powering a new wave of voice-driven applications in 2025.

The core appeal of speech recognition technology lies in its ability to convert spoken language into readable text with high accuracy and speed. This not only enhances user experience but also opens up new possibilities in fields such as healthcare, legal, and customer service. As deep learning and neural networks continue to drive innovation, speech-to-text systems are becoming more robust, multilingual, and context-aware than ever before.

How Speech Recognition Technology Works

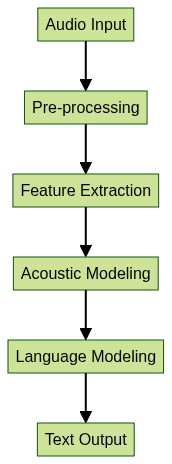

Speech recognition technology involves a complex pipeline that transforms audio signals into text output. Here’s a step-by-step breakdown of the typical workflow:

- Audio Input: The process starts with capturing spoken language via a microphone or audio file.

- Pre-processing: Noise reduction, normalization, and filtering clean up the audio signal for analysis.

- Feature Extraction: Algorithms extract relevant features (e.g., Mel-frequency cepstral coefficients, or MFCCs) that represent the characteristics of speech.

- Acoustic Modeling & Classification: Deep learning models (often neural networks) analyze features to predict phonemes or sub-word units.

- Language Modeling: Context-aware language models predict word sequences and refine output to improve accuracy.

- Text Output: The system assembles recognized words into coherent text sentences.
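The pre-processing and feature-extraction steps above can be sketched in plain Python. This is a minimal, standard-library-only sketch that assumes the audio is already decoded into a list of float samples at 16 kHz. It applies peak normalization and a pre-emphasis filter, splits the signal into overlapping frames, applies a Hamming window, and computes one log-energy value per frame. The pre-emphasis coefficient of 0.97 and the 25 ms frame / 10 ms hop sizes are common defaults, not values taken from this article, and all function names are hypothetical. Log energy is a deliberately simple stand-in for MFCCs, which would add an FFT, a mel filterbank, and a discrete cosine transform on top of the same framing. Noise reduction is omitted.

```python
import math
from typing import List


def normalize(samples: List[float]) -> List[float]:
    """Peak-normalize the signal to the range [-1, 1]."""
    peak = max((abs(s) for s in samples), default=0.0)
    return [s / peak for s in samples] if peak > 0 else list(samples)


def pre_emphasis(samples: List[float], alpha: float = 0.97) -> List[float]:
    """High-pass filter y[n] = x[n] - alpha * x[n-1] that boosts higher frequencies."""
    if not samples:
        return []
    return [samples[0]] + [samples[n] - alpha * samples[n - 1] for n in range(1, len(samples))]


def frame_signal(samples: List[float], sample_rate: int = 16000,
                 frame_ms: float = 25.0, hop_ms: float = 10.0) -> List[List[float]]:
    """Split the signal into overlapping frames; a trailing partial frame is dropped."""
    frame_len = int(sample_rate * frame_ms / 1000)  # 400 samples at 16 kHz
    hop_len = int(sample_rate * hop_ms / 1000)      # 160 samples at 16 kHz
    return [samples[start:start + frame_len]
            for start in range(0, len(samples) - frame_len + 1, hop_len)]


def hamming(frame: List[float]) -> List[float]:
    """Taper the frame edges to reduce spectral leakage."""
    n = len(frame)
    if n < 2:
        return list(frame)
    return [x * (0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1))) for i, x in enumerate(frame)]


def log_energy(frame: List[float], eps: float = 1e-10) -> float:
    """Log of the frame energy; eps avoids log(0) on silent frames."""
    return math.log(sum(x * x for x in frame) + eps)


def extract_features(samples: List[float], sample_rate: int = 16000) -> List[float]:
    """Pre-process, frame, and window the signal, returning one log-energy value per frame."""
    cleaned = pre_emphasis(normalize(samples))
    return [log_energy(hamming(f)) for f in frame_signal(cleaned, sample_rate)]


if __name__ == "__main__":
    rate = 16000
    # Synthetic test signal: one second of a 440 Hz tone, then one second of silence
    tone = [0.5 * math.sin(2 * math.pi * 440 * t / rate) for t in range(rate)]
    feats = extract_features(tone + [0.0] * rate, rate)
    print(len(feats), "frames")
    print("tone frame:", round(feats[10], 2), "silent frame:", round(feats[-10], 2))
```

Frames that contain speech (here, the tone) have much higher log energy than silent frames, which is the kind of contrast an acoustic model learns from, although real systems use richer spectral features.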

For developers looking to integrate speech recognition into real-time communication platforms, leveraging a Voice SDK can streamline the process of capturing and processing audio input for transcription and analysis.

Example Python Code: Basic Speech-to-Text Flow

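```python
import speech_recognition as sr  # pip install SpeechRecognition (sr.Microphone also needs PyAudio)

recognizer = sr.Recognizer()

# Capture one phrase from the default microphone
with sr.Microphone() as source:
    print("Speak now...")
    audio = recognizer.listen(source)  # records until a pause is detected

try:
    # Send the audio to Google's web speech API (requires an internet connection)
    text = recognizer.recognize_google(audio)
    print(f"Transcribed Text: {text}")
except sr.UnknownValueError:
    # Audio was received, but no words could be recognized
    print("Could not understand audio.")
except sr.RequestError as e:
    # The API was unreachable or returned an error
    print(f"API error: {e}")
```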

Data Flow Diagram

The Role of Deep Learning and Neural Networks

Deep learning has revolutionized speech recognition technology by enabling systems to learn complex audio patterns and linguistic structures from massive datasets. Modern ASR solutions predominantly use deep neural networks (DNNs), including convolutional neural networks (CNNs), recurrent neural networks (RNNs) such as long short-term memory (LSTM) networks, and transformer architectures.

GPU acceleration plays a crucial role in training and deploying these models efficiently. By leveraging parallel computation, GPUs drastically reduce the time required to train deep learning models on vast speech corpora. As a result, contemporary speech recognition software can achieve remarkable accuracy even in challenging, real-world environments.
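To make the acoustic-modeling step more concrete, consider how a network's frame-by-frame output becomes text. Many end-to-end models, including the Deep Speech family, are trained with Connectionist Temporal Classification (CTC): for every audio frame, the network outputs a probability distribution over characters plus a special "blank" symbol. The sketch below shows greedy (best-path) CTC decoding in plain Python. The five-symbol alphabet and the probability matrix are made-up stand-ins for a real network's output, and production decoders usually use beam search, often combined with a language model, rather than this greedy rule.

```python
from typing import List, Sequence

BLANK = "_"  # CTC blank symbol; its spelling here is an arbitrary choice


def ctc_greedy_decode(frame_probs: Sequence[Sequence[float]], alphabet: Sequence[str]) -> str:
    """Best-path CTC decoding: argmax per frame, merge repeated symbols, drop blanks."""
    best = [alphabet[max(range(len(p)), key=p.__getitem__)] for p in frame_probs]
    out: List[str] = []
    prev = None
    for sym in best:
        # A repeated symbol is emitted once unless a blank separates the repeats
        if sym != prev and sym != BLANK:
            out.append(sym)
        prev = sym
    return "".join(out)


if __name__ == "__main__":
    alphabet = [BLANK, "h", "e", "l", "o"]
    # Hypothetical per-frame probabilities (rows: frames, columns: alphabet)
    probs = [
        [0.1, 0.8, 0.05, 0.03, 0.02],   # h
        [0.1, 0.7, 0.1, 0.05, 0.05],    # h again, merged with the previous frame
        [0.1, 0.05, 0.8, 0.03, 0.02],   # e
        [0.1, 0.05, 0.05, 0.75, 0.05],  # l
        [0.7, 0.1, 0.1, 0.05, 0.05],    # blank keeps the two l's separate
        [0.1, 0.05, 0.05, 0.75, 0.05],  # l
        [0.1, 0.05, 0.05, 0.05, 0.75],  # o
    ]
    print(ctc_greedy_decode(probs, alphabet))  # prints "hello"
```

The blank symbol is what lets CTC represent a genuine double letter ("ll") while still collapsing the many consecutive frames that a single spoken sound usually spans.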

If you're building applications that require both video and audio capabilities, integrating a Python video and audio calling SDK can help you seamlessly add real-time communication features alongside speech recognition.

Natural Language Processing in Speech Recognition

Natural language processing (NLP) is integral to making sense of recognized speech. NLP algorithms add context, semantic understanding, and disambiguation to speech recognition systems, enabling them to distinguish homophones (for example, "their" versus "there") and to handle slang and domain-specific language.
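As a small illustration of how context resolves homophones, the sketch below scores candidate words with a bigram language model and keeps the word that is most probable given the previous word. The counts are invented toy numbers, not estimates from a real corpus; add-one (Laplace) smoothing is an assumed, deliberately simple choice, and the function names are hypothetical. Real systems use far larger n-gram or neural language models.

```python
import math
from collections import Counter
from typing import List

# Hypothetical toy counts, not estimates from a real corpus
BIGRAM_COUNTS = Counter({
    ("over", "there"): 8, ("over", "their"): 1,
    ("lost", "their"): 9, ("lost", "there"): 1,
})
UNIGRAM_COUNTS = Counter({"over": 10, "lost": 10, "there": 9, "their": 10})
VOCAB_SIZE = len(UNIGRAM_COUNTS)


def bigram_logprob(prev: str, word: str) -> float:
    """log P(word | prev) with add-one (Laplace) smoothing over the toy vocabulary."""
    return math.log((BIGRAM_COUNTS[(prev, word)] + 1) / (UNIGRAM_COUNTS[prev] + VOCAB_SIZE))


def pick_homophone(prev: str, candidates: List[str]) -> str:
    """Return the candidate the bigram model finds most likely after `prev`."""
    return max(candidates, key=lambda w: bigram_logprob(prev, w))


if __name__ == "__main__":
    print(pick_homophone("over", ["their", "there"]))  # prints "there"
    print(pick_homophone("lost", ["their", "there"]))  # prints "their"
```

Because both candidates sound the same, the acoustic model alone cannot separate them; the preceding word supplies the evidence.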

In 2025, NLP-driven speech recognition powers digital assistants (like Google Assistant and Siri), smart home devices, and enterprise voice bots, facilitating seamless voice interactions for tasks ranging from scheduling meetings to controlling IoT devices. For web-based solutions, a JavaScript video and audio calling SDK can be used to integrate voice and video features directly into browser applications.

Key Components of Modern Speech Recognition Systems

Modern speech recognition systems are composed of several critical components:

- Acoustic Models: Map audio features to phonetic units using neural networks or statistical methods.

- Language Models: Predict word sequences based on context, improving recognition of ambiguous phrases.

- Inference Engines: Orchestrate the flow, combining models to output the most probable transcription.

- Streaming & Real-Time Processing: Many applications demand low-latency, real-time transcription (e.g., live captioning, contact centers).

- Multilingual & On-Device Capabilities: State-of-the-art systems handle multiple languages and dialects, with some models running efficiently on smartphones or edge devices for enhanced privacy.
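One way to picture how an inference engine combines the acoustic and language models is n-best rescoring: each candidate transcription gets a log-linear score, log P_acoustic + λ · log P_LM + β · (word count), and the highest-scoring candidate wins. The word-count term is a common insertion bonus that offsets the language model's bias toward short outputs. In the sketch below, the hypotheses, their log-probabilities, and the weights λ = 0.5 and β = 0.1 are all hypothetical; real decoders tune such weights on held-out data and search a much larger space with beam search.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Hypothesis:
    text: str
    acoustic_logprob: float  # log P(audio | words) from the acoustic model
    lm_logprob: float        # log P(words) from the language model


def combined_score(h: Hypothesis, lm_weight: float = 0.5, word_bonus: float = 0.1) -> float:
    """Log-linear combination used to rank hypotheses (weights are assumptions)."""
    return h.acoustic_logprob + lm_weight * h.lm_logprob + word_bonus * len(h.text.split())


def rescore(nbest: List[Hypothesis], lm_weight: float = 0.5, word_bonus: float = 0.1) -> Hypothesis:
    """Return the hypothesis with the highest combined score."""
    return max(nbest, key=lambda h: combined_score(h, lm_weight, word_bonus))


if __name__ == "__main__":
    # The two phrases sound nearly identical; the language model breaks the tie
    nbest = [
        Hypothesis("wreck a nice beach", acoustic_logprob=-12.0, lm_logprob=-18.0),
        Hypothesis("recognize speech", acoustic_logprob=-12.3, lm_logprob=-9.0),
    ]
    print(rescore(nbest).text)                 # prints "recognize speech"
    print(rescore(nbest, lm_weight=0.0).text)  # prints "wreck a nice beach"
```

Setting the language-model weight to zero lets the slightly better acoustic score win, which shows why ambiguous phrases depend on the language model.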

To enable seamless integration of voice features in your applications, consider using a Voice SDK that supports real-time audio processing and communication.

Major Speech Recognition Technologies and Tools

A thriving ecosystem of speech recognition platforms and libraries provides developers with a range of choices, from open source to commercial solutions. Here are some of the most prominent in 2025:

- Google Speech-to-Text API: Industry-leading cloud service with robust real-time and batch transcription, supporting over 125 languages.

- Mozilla DeepSpeech: Open source, end-to-end speech recognition engine inspired by Baidu’s Deep Speech architecture; no longer actively developed by Mozilla.

- OpenAI Whisper: Versatile, open source model excelling at multilingual and robust transcription.

- Vosk: Lightweight, offline ASR toolkit supporting numerous languages and platforms, ideal for on-device use.

- Soniox: AI-powered, real-time transcription API with high accuracy and industry-specific models.

- Baidu Deep Speech: Pioneering deep learning-based ASR model, influential in modern architectures.

For developers building video conferencing or collaborative platforms, leveraging a Video Calling API can help you add robust, scalable video and audio communication features that complement speech recognition capabilities.

Open Source vs Commercial Solutions

Open source projects (e.g., Whisper, DeepSpeech, Vosk) offer flexibility and transparency, making them suitable for research, customization, and privacy-sensitive deployments. Commercial APIs (e.g., Google Speech-to-Text, Soniox) provide scalability, managed infrastructure, and advanced features such as automatic punctuation, diarization, and speaker identification.

If you need to add calling functionality to your applications, exploring a phone call API can help you implement high-quality voice calls alongside speech recognition.

Example: Using Vosk for Speech Recognition in Python

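```python
import json
import pyaudio
from vosk import Model, KaldiRecognizer

# "model-en" is the path to an unpacked Vosk model directory downloaded beforehand
model = Model("model-en")
# The recognizer's sample rate must match the audio stream's rate
rec = KaldiRecognizer(model, 16000)

# Open 16 kHz, 16-bit mono PCM input from the default microphone
p = pyaudio.PyAudio()
stream = p.open(format=pyaudio.paInt16, channels=1, rate=16000, input=True, frames_per_buffer=8000)
stream.start_stream()

print("Speak into the microphone... (Ctrl+C to stop)")
try:
    while True:
        data = stream.read(4000, exception_on_overflow=False)
        # AcceptWaveform returns True when it detects the end of an utterance
        if rec.AcceptWaveform(data):
            result = json.loads(rec.Result())
            print(f"Recognized: {result['text']}")
except KeyboardInterrupt:
    # Flush any audio still buffered in the recognizer
    final = json.loads(rec.FinalResult())
    print(f"Recognized: {final['text']}")
finally:
    stream.stop_stream()
    stream.close()
    p.terminate()
```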
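The buffer sizes in the Vosk example determine how often the recognizer receives new audio. The arithmetic below assumes 16-bit mono PCM at 16 kHz, as configured in that example; `chunk_stats` is a hypothetical helper, not part of Vosk or PyAudio. Each `stream.read(4000)` call returns 4,000 frames, which is 8,000 bytes or 250 ms of audio. That chunk duration is roughly a lower bound on how quickly new speech reaches the recognizer, not a measured end-to-end latency.

```python
from typing import Dict


def chunk_stats(frames_per_read: int, sample_rate: int = 16000,
                channels: int = 1, bytes_per_sample: int = 2) -> Dict[str, float]:
    """Size and duration of one read of interleaved PCM audio."""
    return {
        "bytes": frames_per_read * channels * bytes_per_sample,
        "duration_ms": 1000 * frames_per_read / sample_rate,
        "reads_per_second": sample_rate / frames_per_read,
    }


if __name__ == "__main__":
    print(chunk_stats(4000))  # the stream.read(4000) call
    print(chunk_stats(8000))  # the frames_per_buffer=8000 setting
```

Smaller reads deliver audio to the recognizer more often at the cost of more calls per second; the right trade-off depends on the application's latency needs.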

Applications and Benefits of Speech Recognition Technology

Speech recognition technology is transforming industries by enabling hands-free, efficient, and accessible interactions. Key applications include:

- Healthcare: Real-time transcription of medical notes, doctor-patient conversations, and dictation workflows.

- Legal: Automated transcription of depositions, court proceedings, and legal documentation.

- Customer Service: Intelligent IVR systems, sentiment analysis, and automated call summaries.

- Accessibility: Empowering users with disabilities through voice commands and live captioning, complementing assistive tools such as screen readers.

For Android developers, implementing WebRTC Android solutions can facilitate real-time voice and video communication, which pairs well with speech recognition in mobile applications.

The benefits of speech recognition technology in 2025 include improved productivity, inclusivity, and operational automation, allowing organizations and users to interact more naturally and efficiently with digital systems. If you want to quickly add video and audio calling to your web app, you can embed video calling SDK components that integrate seamlessly with speech recognition workflows.

Challenges and Limitations of Speech Recognition

Despite remarkable advances, speech recognition systems still face significant challenges:

- Accuracy: Background noise, overlapping speech, and diverse accents can reduce transcription quality.

- Privacy & Security: Cloud-based solutions may raise concerns over sensitive data exposure.

- Error Correction: Achieving human parity remains difficult, especially in noisy or domain-specific contexts.
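Transcription accuracy, including claims of human parity, is usually measured with word error rate (WER): the minimum number of word substitutions (S), deletions (D), and insertions (I) needed to turn the system output into a reference transcript, divided by the number of reference words N, so WER = (S + D + I) / N. Below is a minimal dynamic-programming (word-level Levenshtein distance) sketch, assuming both transcripts are already lowercased and stripped of punctuation; the function name and example sentences are hypothetical.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref: List[str] = reference.split()
    hyp: List[str] = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] = edit distance between the reference prefix processed so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion: reference word missing
                          curr[j - 1] + 1,     # insertion: extra hypothesis word
                          prev[j - 1] + cost)  # match or substitution
        prev = curr
    return prev[len(hyp)] / len(ref)


if __name__ == "__main__":
    ref = "turn on the kitchen lights"
    hyp = "turn on kitchen light please"
    # One deletion ("the"), one substitution ("lights" -> "light"), one insertion ("please")
    print(f"WER: {word_error_rate(ref, hyp):.2f}")  # prints "WER: 0.60"
```

Because insertions count as errors, WER can exceed 1.0 when a system outputs many more words than were spoken.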

To further enhance your application's voice capabilities, a Voice SDK can provide advanced features such as noise suppression and real-time audio enhancements, helping to mitigate some common challenges in speech recognition.

Ongoing research focuses on robust error correction, privacy-preserving on-device models, and advancements in language modeling to address these hurdles.

Future Trends in Speech Recognition

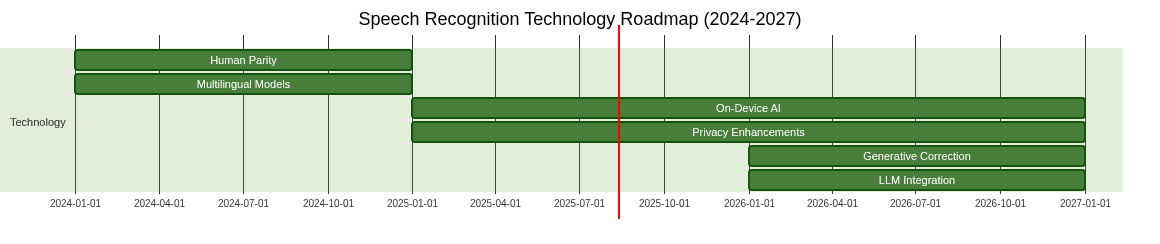

Ongoing innovation in speech recognition technology is focused on the following areas:

- Human-Level Accuracy: Approaching or surpassing human transcription quality across languages and dialects.

- Multilingual Expansion: Supporting more languages, code-switching, and regional dialects.

- On-Device AI & Privacy: Enhanced privacy through on-device inference and federated learning.

- Generative Error Correction & LLM Integration: Leveraging large language models (LLMs) for context-rich, generative error correction and summarization.
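Federated learning, mentioned above as a privacy technique, keeps raw audio on each device and shares only model updates with a server. Its best-known aggregation rule, federated averaging (FedAvg), sets the new global weights to the average of the client weights, weighted by the number of training examples each client used. The sketch below treats a model as a flat list of floats and uses invented client data; the function name is hypothetical, and real deployments typically add client sampling, secure aggregation, and many training rounds.

```python
from typing import List, Tuple


def federated_average(client_updates: List[Tuple[List[float], int]]) -> List[float]:
    """FedAvg aggregation: average client weight vectors, weighted by local example counts."""
    if not client_updates:
        raise ValueError("need at least one client update")
    total = sum(n for _, n in client_updates)
    if total <= 0:
        raise ValueError("total example count must be positive")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


if __name__ == "__main__":
    # Hypothetical weights after local training on three phones: (weights, example count)
    updates = [
        ([0.2, -1.0], 100),
        ([0.4, -0.8], 300),
        ([0.0, -1.2], 100),
    ]
    print(federated_average(updates))  # approximately [0.28, -0.92]
```

The client that trained on the most examples pulls the global model furthest toward its own weights, while none of the underlying audio leaves the devices.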

Roadmap Diagram: Future of Speech Recognition

Conclusion

Speech recognition technology in 2025 stands at the intersection of deep learning, NLP, and real-time processing, transforming the way we interact with digital systems. With continuous advancements in accuracy, multilingual support, and privacy, speech recognition is poised to become an even more integral part of the software engineering landscape. As open source and commercial tools evolve, developers are empowered to build innovative, accessible, and context-aware voice-driven applications for the future.


FAQ