Open Source Speech to Text: The Ultimate Guide (2025)

Introduction to Open Source Speech to Text

Open source speech to text refers to software that can automatically transcribe spoken language into written text, with the source code freely available for anyone to use, modify, and distribute. Over the past two decades, speech recognition technology has evolved from rule-based signal processing systems to sophisticated deep learning models capable of real-time, multilingual transcription. Open source speech to text is now integral to a wide range of applications: from automating meeting notes and providing accessibility features, to powering voice assistants and enabling media production tools with subtitle generation. Its flexibility, transparency, and active community support make it a preferred choice for developers and organizations seeking customizable, privacy-respecting speech recognition solutions.

How Open Source Speech to Text Works

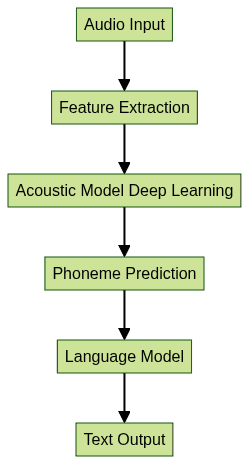

Speech recognition technology transforms audio signals into text by processing sound waves through various computational stages. Traditionally, systems relied on Hidden Markov Models and Gaussian Mixture Models, but modern solutions leverage deep learning, using neural networks to model complex speech patterns. The heart of open source speech to text lies in two key components: the acoustic model, which maps audio features to phonemes, and the language model, which predicts word sequences based on linguistic probability. Together, these models enable accurate transcription across languages and accents. For developers interested in integrating voice features into their applications, leveraging a Voice SDK can further streamline the process of building real-time audio experiences.

This pipeline illustrates how open source speech to text engines process raw audio through feature extraction, deep learning-based acoustic modeling, and language modeling to output readable text.
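To make the interplay between the two models concrete, here is a minimal sketch of how a decoder might combine their scores when choosing between candidate transcripts. The candidate phrases, the probabilities, and the `lm_weight` parameter are all made up for illustration; real engines search over far larger hypothesis spaces.

```python
import math

# Hypothetical acoustic scores: log P(audio | words) for two candidates
ACOUSTIC_LOGPROB = {
    "recognize speech": math.log(0.40),
    "wreck a nice beach": math.log(0.45),
}

# Hypothetical language model scores: log P(words)
LANGUAGE_LOGPROB = {
    "recognize speech": math.log(0.010),
    "wreck a nice beach": math.log(0.0001),
}


def best_transcript(acoustic, language, lm_weight=1.0):
    """Return the candidate with the highest combined log score."""
    def combined(candidate):
        return acoustic[candidate] + lm_weight * language[candidate]

    return max(acoustic, key=combined)


print(best_transcript(ACOUSTIC_LOGPROB, LANGUAGE_LOGPROB))
```

In this toy setup the acoustically stronger candidate loses once the language model is taken into account; setting `lm_weight=0.0` ignores the language model and flips the choice.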

Top Open Source Speech to Text Engines

Open source speech to text has seen remarkable growth, with several high-quality engines now available for developers. Here, we highlight the most popular and reliable options in 2025. If you're building solutions that require both speech recognition and real-time communication, consider integrating a Python video and audio calling SDK to enable seamless audio and video interactions alongside transcription.

Coqui STT

Coqui STT is an open source speech to text engine focused on ease of use, deep learning accuracy, and multilingual support. Aimed at Python developers, it supports multiple languages and real-time (streaming) transcription, and its Python API makes integration straightforward. Pre-trained models and documentation are published by the project and its community; check the repository for its current maintenance status before adopting it. For developers looking to add live audio features, a Voice SDK can complement Coqui STT by enabling interactive voice capabilities in your applications.

Coqui STT Python API Example:

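```python
import wave

import numpy as np
import stt

# Load a pre-trained model
model = stt.Model("model.tflite")

# Read the WAV file (expected: mono, 16-bit PCM at the model's sample rate)
with wave.open("audio.wav", "rb") as wf:
    frames = wf.readframes(wf.getnframes())

# Model.stt() takes a NumPy array of 16-bit samples, not raw file bytes
audio = np.frombuffer(frames, dtype=np.int16)
text = model.stt(audio)
print(text)
```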
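Pre-trained models generally expect a specific audio format, so it can help to check a file before transcribing it. The sketch below uses only the standard library; the 16 kHz default is an assumption and should be replaced with the rate your model reports (for Coqui STT, `model.sampleRate()`). The helper names are hypothetical.

```python
import wave


def describe_wav(path):
    """Return (channels, sample_width_bytes, sample_rate_hz) for a WAV file."""
    with wave.open(path, "rb") as wf:
        return wf.getnchannels(), wf.getsampwidth(), wf.getframerate()


def is_model_ready(path, expected_rate=16000):
    """True if the file is mono, 16-bit PCM at the expected sample rate."""
    channels, sample_width, rate = describe_wav(path)
    return channels == 1 and sample_width == 2 and rate == expected_rate


# Usage (assuming audio.wav exists):
#   if not is_model_ready("audio.wav"):
#       print("Convert the file to mono 16-bit PCM first")
```

Files that fail the check can be converted with an audio tool of your choice before being passed to the engine.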

Vosk

Vosk is renowned for its offline speech recognition capability and lightweight design. Supporting multiple programming languages and platforms (Linux, Windows, macOS, Raspberry Pi, Android, iOS), Vosk is ideal for on-device transcription and privacy-sensitive applications. It offers real-time speech recognition, multilingual models, and a flexible Python API. If you need to embed video calling and transcription into your platform, using an embed video calling SDK can accelerate development and provide a robust user experience.

Vosk Python Integration Example:

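```python
import wave

import vosk

# Load the model from a local directory
model = vosk.Model("model")

# Open the audio file (expected: mono, 16-bit PCM WAV)
wf = wave.open("audio.wav", "rb")
rec = vosk.KaldiRecognizer(model, wf.getframerate())

# Feed audio in chunks; Result() returns a JSON string per finished utterance
while True:
    data = wf.readframes(4000)
    if len(data) == 0:
        break
    if rec.AcceptWaveform(data):
        print(rec.Result())

# Flush the audio still buffered in the recognizer
print(rec.FinalResult())
```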
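The recognizer returns each result as a JSON string with a `text` field, so a small helper can stitch the pieces into one transcript. This is a sketch using sample payloads rather than live recognizer output; `collect_transcript` is a hypothetical helper name.

```python
import json


def collect_transcript(result_jsons):
    """Join the "text" field of each result JSON string into one transcript."""
    pieces = []
    for raw in result_jsons:
        text = json.loads(raw).get("text", "").strip()
        if text:  # skip empty results produced by silence
            pieces.append(text)
    return " ".join(pieces)


# Sample payloads shaped like the strings returned by rec.Result()
sample_results = [
    '{"text": "hello world"}',
    '{"text": ""}',
    '{"text": "this is a test"}',
]
print(collect_transcript(sample_results))
```

In the loop above, you would append each `rec.Result()` and the final `rec.FinalResult()` to a list instead of printing them, then pass that list to the helper.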

Other Notable Projects

Beyond Coqui STT and Vosk, several smaller open source speech to text initiatives thrive. Kdenlive, a popular open source video editor, features integrated subtitle generation via speech recognition. Additionally, GitHub hosts numerous compact projects and plugins offering specialized speech-to-text capabilities for niche use cases, such as browser extensions and IoT devices. For developers aiming to integrate advanced communication features, exploring a Video Calling API can help you add high-quality video and audio conferencing to your applications.

Setting Up an Open Source Speech to Text System

Getting started with open source speech to text engines is straightforward for developers familiar with Python and command-line tools. If your project requires phone-based communication, integrating a phone call API alongside speech recognition can enable powerful telephony and transcription features.

Installation and Configuration

Begin by setting up a Python virtual environment for isolation. Then, install the relevant engine (Coqui STT or Vosk) via pip and download a suitable pre-trained model.

Python Virtual Environment and Installation Example:

```bash
python3 -m venv stt-env
source stt-env/bin/activate
# Coqui STT's inference package is published on PyPI as "stt"
pip install stt vosk
# Download models as per engine documentation
```

Both Coqui STT and Vosk provide detailed guides for downloading and configuring models. Once installed, test the setup with a simple script to ensure your environment is ready for transcription tasks. For applications that require real-time voice chat, integrating a [Voice SDK](https://www.videosdk.live/live-audio-rooms) can enhance your solution with interactive audio capabilities.

Testing and Evaluating Accuracy

To measure the accuracy of your open source speech to text system, use established benchmarks and datasets like LibriSpeech, Common Voice, or TED-LIUM. Calculate Word Error Rate (WER), the number of word substitutions, deletions, and insertions divided by the number of words in the reference, by comparing the engine’s output with ground truth transcripts. Libraries such as JiWER can automate WER calculation, enabling iterative tuning and model selection.

Accuracy Evaluation in Python:

```python
from jiwer import wer

reference = "the quick brown fox jumps over the lazy dog"
hypothesis = "the quick brown fox jump over the lazy dog"

error = wer(reference, hypothesis)
print(f"Word Error Rate: {error}")
```
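To see what the metric actually computes, the following is a minimal from-scratch sketch of WER using word-level edit distance. It is meant for illustration only; it does no text normalization (casing, punctuation), which a library like JiWER can handle for you.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # previous[j] holds the edit distance between ref[:i-1] and hyp[:j]
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


score = word_error_rate(
    "the quick brown fox jumps over the lazy dog",
    "the quick brown fox jump over the lazy dog",
)
print(f"Word Error Rate: {score:.3f}")
```

Here one of the nine reference words is substituted, so the score is 1/9, roughly 0.111.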

Customizing and Training Open Source Speech to Text Models

One of the key strengths of open source speech to text engines is the ability to customize vocabularies and train models for specific domains or languages. If your application also requires robust video communication, integrating a Video Calling API can provide seamless video and audio conferencing features alongside speech-to-text capabilities.

Custom Vocabulary and Language Models

You can enhance recognition accuracy by updating the engine’s vocabulary with domain-specific terminology or uncommon names. This customization ensures better performance in specialized applications. For developers building live audio rooms or interactive voice features, a Voice SDK can be a valuable addition to your tech stack.

Updating Vocabulary File (Example):

```python
# For engines supporting custom vocabulary text files
def update_vocab(vocab_file, new_words):
    with open(vocab_file, "a") as vf:
        for word in new_words:
            vf.write(f"{word}\n")

# Usage
update_vocab("vocabulary.txt", ["blockchain", "API", "Quasar"])
```
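Engines differ in how they accept vocabulary hints. As one example, Vosk's `KaldiRecognizer` takes an optional third argument: a JSON list of phrases that restricts recognition to those phrases. The sketch below only builds that JSON string; `build_grammar` is a hypothetical helper, and the approach assumes a model that supports runtime grammars and already contains the listed words in its lexicon.

```python
import json


def build_grammar(phrases, allow_unknown=True):
    """Serialize phrases into a JSON list usable as a recognizer grammar."""
    cleaned = []
    for phrase in phrases:
        normalized = " ".join(phrase.lower().split())
        if normalized and normalized not in cleaned:
            cleaned.append(normalized)
    if allow_unknown:
        # "[unk]" lets the recognizer mark speech outside the listed phrases
        cleaned.append("[unk]")
    return json.dumps(cleaned)


grammar = build_grammar(["Blockchain", "API", "Quasar", "api"])
print(grammar)

# With Vosk installed, the string can be passed when creating the recognizer:
#   rec = vosk.KaldiRecognizer(model, 16000, grammar)
```

Restricting the phrase list tends to help for command-style input; for open dictation, adapting the language model itself is usually the more suitable route.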

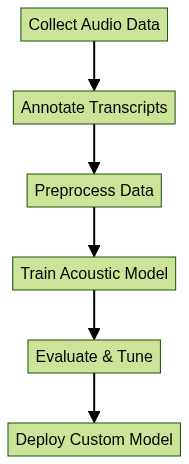

Training Your Own Models

When pre-trained models don’t suffice, you can train a speech to text model using your own dataset. This involves collecting and annotating audio samples, preparing transcriptions, and running model training scripts. Open source engines like Coqui STT provide utilities and documentation for each step.

This workflow enables the creation of highly accurate, domain-adapted speech-to-text models using open source toolkits.
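Preparing transcriptions usually means producing a manifest that pairs each audio file with its text. The following sketch writes a CSV with `wav_filename`, `wav_filesize`, and `transcript` columns, which is the layout Coqui STT's training tools are understood to expect; treat the column names and the lowercasing step as assumptions and confirm them against your engine's training documentation.

```python
import csv
import os


def write_manifest(samples, manifest_path):
    """Write (wav_path, transcript) pairs to a CSV manifest; return the row count."""
    rows = 0
    with open(manifest_path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["wav_filename", "wav_filesize", "transcript"])
        for wav_path, transcript in samples:
            # File size in bytes lets training tools sort samples by length
            size = os.path.getsize(wav_path)
            writer.writerow([wav_path, size, transcript.lower().strip()])
            rows += 1
    return rows


# Usage (assuming the listed WAV files exist):
#   write_manifest([("clips/0001.wav", "Hello world")], "train.csv")
```

A typical setup keeps separate manifests for training, validation, and test splits so that accuracy is measured on audio the model has not seen.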

Real-World Applications and Use Cases

Open source speech to text solutions power a diverse array of applications in 2025:

- Business: Automated meeting transcription, CRM integration, and customer support analytics.

- Accessibility: Real-time captions for video calls, public events, and educational content.

- Media Production: Subtitle generation for podcasts, YouTube, and video editing in tools like Kdenlive.

- Research: Linguistic data analysis, speech corpus development, and human-computer interaction studies.

- API Integration: Seamless real-time transcription in web and mobile apps, supporting multilingual speech to text.

If you're looking to quickly add interactive audio features to your application, a Voice SDK can help you create scalable and engaging voice experiences.

These use cases highlight the flexibility and impact of open source speech to text in modern software ecosystems.
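As an illustration of the subtitle use case, timed transcript segments can be rendered in the SRT subtitle format with a few lines of Python. The segment tuples below are sample data; in practice the start and end times would come from your engine's timestamp output.

```python
def format_timestamp(seconds):
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, millis = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"


def to_srt(segments):
    """Render (start_seconds, end_seconds, text) tuples as SRT subtitle text."""
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        timing = f"{format_timestamp(start)} --> {format_timestamp(end)}"
        blocks.append(f"{index}\n{timing}\n{text}\n")
    return "\n".join(blocks)


print(to_srt([
    (0.0, 2.5, "Hello and welcome."),
    (2.5, 5.0, "Let's get started."),
]))
```

The resulting text can be saved with a `.srt` extension and loaded into most video players and editors.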

Challenges and Limitations of Open Source Speech to Text

While open source speech to text technology has advanced rapidly, challenges remain. Achieving high accuracy in noisy environments or for under-resourced languages can be difficult. Hardware requirements for real-time transcription and deep learning model training may be significant. Additionally, community-supported projects may lack the dedicated support teams of commercial offerings, though active forums and contributors often bridge this gap.
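One way to judge whether your hardware can keep up with live audio is the real-time factor: processing time divided by audio duration, where values below 1.0 mean transcription runs faster than the audio plays. The sketch below measures it for any callable; `fake_transcribe` is a stand-in for a real engine call and exists only to make the example runnable.

```python
import time


def real_time_factor(transcribe, audio, audio_seconds):
    """Return processing time divided by audio duration for one call."""
    started = time.perf_counter()
    transcribe(audio)
    elapsed = time.perf_counter() - started
    return elapsed / audio_seconds


def fake_transcribe(audio):
    # Stand-in for a real engine call, such as a Vosk or Coqui STT wrapper
    return "placeholder transcript"


# One second of 16 kHz, 16-bit mono silence as sample input
rtf = real_time_factor(fake_transcribe, b"\x00" * 32000, audio_seconds=1.0)
print(f"Real-time factor: {rtf:.4f}")
```

Measuring this on representative audio and on the target device gives a more useful picture than published benchmark numbers alone.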

Community and Support for Open Source Speech to Text

A vibrant ecosystem backs open source speech to text projects. Developers can access help through GitHub issues, Matrix chat rooms, discussion forums, and extensive online documentation. Regular community contributions drive continuous improvement, bug fixes, and new feature releases, ensuring that open source speech to text remains robust and innovative in 2025. If you're interested in exploring these technologies for your own projects, you can try it for free and experience the benefits firsthand.

Conclusion: The Future of Open Source Speech to Text

Looking ahead, open source speech to text is poised for even greater accuracy, broader language support, and deeper integration into everyday software. The rapid pace of deep learning research, combined with global community collaboration, ensures ongoing improvements and new possibilities for real-time, privacy-first, and highly customizable voice recognition solutions in 2025 and beyond.


FAQ