Introduction to Automatic Speech Recognition

Automatic speech recognition (ASR) is revolutionizing the way humans interact with computers, software, and devices in 2025. As speech-to-text and voice recognition technologies continue to advance, ASR is now at the core of digital assistants, customer service bots, transcription tools, and accessibility technologies. Driven by rapid progress in deep learning and speech AI, automatic speech recognition enables machines to interpret and process human speech with remarkable accuracy. This guide provides a comprehensive overview of ASR, illustrating its evolution, technical foundations, real-world applications, top tools and APIs, as well as the latest trends and ethical considerations shaping the future of speech technology.

What is Automatic Speech Recognition?

Automatic speech recognition is a branch of artificial intelligence that enables computers to convert spoken language into written text. ASR systems analyze audio signals, interpret linguistic patterns, and generate transcriptions, often in real time. The concept dates back to the 1950s, with early systems recognizing only a handful of words. Over the decades, speech-to-text technology has evolved from simple pattern matching to sophisticated machine learning and deep neural network models.

Modern ASR leverages advances in natural language processing (NLP), acoustic modeling, and language modeling. Today, speech recognition engines increasingly handle diverse accents, dialects, and noisy environments, making voice-driven interfaces a reality for millions. The evolution of automatic speech recognition has enabled widespread applications in mobile devices, call centers, healthcare, accessibility, and virtual assistants. As deep learning and speech AI continue to mature in 2025, ASR is becoming more accurate, context-aware, and capable of understanding intent beyond literal words. For developers looking to add real-time voice capabilities to their applications, integrating a Voice SDK can significantly streamline the process.

How Does Automatic Speech Recognition Work?

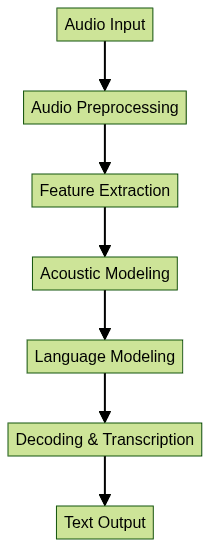

Automatic speech recognition systems follow a structured pipeline to convert raw audio into meaningful text. The process integrates signal processing, machine learning for speech, and advanced language modeling. Here’s a breakdown of the typical ASR pipeline:

- Audio Preprocessing: The audio input is cleaned and normalized. Noise reduction and echo cancellation may be applied to improve clarity.

- Feature Extraction: Key features like Mel-frequency cepstral coefficients (MFCCs) or spectrograms are extracted to represent the audio signal in a form suitable for modeling.

- Acoustic Modeling: Deep learning models (often recurrent or transformer-based neural networks) map the extracted features to phonetic units or, in end-to-end systems, directly to characters or subword tokens.

- Language Modeling: Statistical or neural models predict the most likely word sequences given the phonetic predictions, considering grammar and context.

- Decoding: A search procedure, typically beam search, chooses the best transcription by combining acoustic and language model scores.
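The decoding step can be illustrated with a toy example. The sketch below is a simplified stand-in for what real decoders do: instead of running beam search over a full lattice, it rescores a hypothetical two-item n-best list by adding each hypothesis's acoustic log-probability to a weighted language-model log-probability (a form of shallow fusion). The hypotheses, acoustic scores, bigram probabilities, and the `lm_weight` and `word_bonus` parameters are all made up for illustration.

```python
import math

# Hypothetical n-best list: (hypothesis text, acoustic log-probability).
N_BEST = [
    ("recognize speech", -4.1),
    ("wreck a nice beach", -3.9),
]

# Toy bigram language model probabilities (hypothetical values).
BIGRAM_PROBS = {
    ("<s>", "recognize"): 0.02,
    ("recognize", "speech"): 0.30,
    ("speech", "</s>"): 0.40,
    ("<s>", "wreck"): 0.001,
    ("wreck", "a"): 0.05,
    ("a", "nice"): 0.02,
    ("nice", "beach"): 0.01,
    ("beach", "</s>"): 0.30,
}
UNSEEN_PROB = 1e-6  # crude floor for bigrams the toy LM has never seen


def lm_log_prob(sentence: str) -> float:
    """Sum of bigram log-probabilities, including sentence-boundary tokens."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    return sum(
        math.log(BIGRAM_PROBS.get(pair, UNSEEN_PROB))
        for pair in zip(tokens, tokens[1:])
    )


def rescore(n_best, lm_weight=0.5, word_bonus=0.0):
    """Return the text maximizing
    acoustic_logp + lm_weight * lm_logp + word_bonus * num_words."""
    def total(hyp):
        text, acoustic_logp = hyp
        return (acoustic_logp
                + lm_weight * lm_log_prob(text)
                + word_bonus * len(text.split()))
    return max(n_best, key=total)[0]


print(rescore(N_BEST))                  # recognize speech
print(rescore(N_BEST, lm_weight=0.0))   # wreck a nice beach
```

With `lm_weight=0.0` the acoustically preferred but implausible "wreck a nice beach" wins; adding the language model flips the decision to "recognize speech". In practice the LM weight is tuned on held-out data rather than fixed by hand.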

For projects that require seamless integration of calling features, leveraging a robust phone call API can enhance both the user experience and the accuracy of speech-driven applications.

ASR System Pipeline

Modern ASR engines employ deep learning architectures for both acoustic and language modeling. Integration with NLP allows ASR systems to better understand context, disambiguate homonyms, and provide more accurate, natural output. Real-time transcription and speech processing demand highly optimized, scalable solutions, often leveraging cloud infrastructure or edge computing. Developers building interactive audio experiences can benefit from a Voice SDK to facilitate real-time communication and transcription.

Code Example: Simple ASR with Python

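Here’s a minimal example using the open source speech_recognition library in Python (installed from PyPI with pip install SpeechRecognition):

```python
import speech_recognition as sr

# Initialize the recognizer
recognizer = sr.Recognizer()

# Open the WAV file and read its entire contents into an AudioData object
with sr.AudioFile("audio_sample.wav") as source:
    audio_data = recognizer.record(source)

try:
    # Send the audio to Google's Web Speech API (requires an internet connection)
    text = recognizer.recognize_google(audio_data)
    print("Transcribed text:", text)
except sr.UnknownValueError:
    # Raised when the service could not understand the audio
    print("Could not understand the audio")
except sr.RequestError as e:
    # Raised when the service is unreachable or returns an error
    print("Recognition request failed:", e)
```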

This snippet demonstrates the basic process: loading audio, processing it, and using a speech-to-text engine (here, Google's Web Speech API via recognize_google) to produce a transcription. If you want to build more advanced audio and video calling features in your Python applications, consider using a Python video and audio calling SDK.

Key Applications of Automatic Speech Recognition

Automatic speech recognition powers a wide range of applications across industries:

- Virtual Assistants: ASR is fundamental to assistants like Google Assistant, Siri, and Alexa, enabling users to interact with their devices hands-free.

- Transcription Services: Meeting minutes, interviews, and podcasts are transcribed in real time or after recording (batch processing), enhancing productivity and accessibility.

- Accessibility: ASR makes technology inclusive by enabling voice-driven interfaces for users with disabilities (e.g., voice commands, real-time captions).

- Customer Service: Automated call centers and IVR systems use ASR for intent detection, query routing, and speech-driven self-service. Integrating a phone call API can further automate and streamline customer interactions.

- Voice Search: Search engines process spoken queries with ASR, improving user experience on mobile and smart home devices.

- Medical Dictation: Healthcare professionals rely on ASR for transcribing patient notes and medical records, streamlining workflows and reducing administrative burden.
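Once a caller's speech has been transcribed, routing it to the right queue becomes a text problem. The sketch below maps a transcript to an intent by simple keyword overlap, falling back to a human agent when nothing matches. The intent names, keyword sets, and the `route` helper are hypothetical; production IVR systems typically use trained intent-classification models rather than exact keyword matching (which, for example, would not match "charges" against "charge").

```python
import re

# Hypothetical intents and trigger keywords for a toy IVR menu.
INTENT_KEYWORDS = {
    "billing": {"bill", "invoice", "charge", "payment"},
    "tech_support": {"internet", "outage", "router", "connection"},
    "cancel": {"cancel", "terminate", "close"},
}


def route(transcript: str, default: str = "agent") -> str:
    """Pick the intent whose keywords overlap most with the transcript's words."""
    words = set(re.findall(r"[a-z']+", transcript.lower()))
    scores = {intent: len(words & kws) for intent, kws in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    # Fall back to a human agent when no keyword matched at all.
    return best if scores[best] > 0 else default


print(route("Hi, I think there's a wrong charge on my bill"))  # billing
print(route("I'd like to speak to someone please"))            # agent
```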

For developers aiming to create immersive, real-time communication experiences, a Video Calling API can be a powerful addition to ASR-driven applications.

These applications demonstrate how automatic speech recognition is now integral to both consumer and enterprise software solutions.

Top Automatic Speech Recognition Tools & APIs

Several platforms and libraries offer robust ASR capabilities for developers:

- Google Speech-to-Text: High accuracy, supports 120+ languages, real-time and batch processing, strong noise robustness.

- Amazon Transcribe: Real-time transcription, speaker identification, medical transcription, seamless AWS integration.

- IBM Watson Speech to Text: Customizable models, domain adaptation, multiple deployment options (cloud, on-premises).

- Microsoft Cognitive Services Speech (now Azure AI Speech): Flexible APIs, language and acoustic customization, edge support.

- Vosk: Open source, lightweight, supports multiple languages, works on mobile and embedded devices.

- SpeechBrain: Open source, PyTorch-based, extensible for research and production, supports ASR, speaker recognition, and more.

For those looking to build scalable, interactive broadcast solutions, a Live Streaming API SDK can complement ASR by enabling real-time audio and video streaming with integrated speech recognition.

If your project is based on JavaScript, using a JavaScript video and audio calling SDK can help you quickly implement both video and audio calling features alongside ASR capabilities.

Additionally, developers can leverage a Voice SDK to easily add live audio rooms and voice interactions to their applications, further enhancing the user experience.

ASR Tools Comparison Table

Developers should evaluate these tools based on language support, customization needs, deployment options, and privacy requirements.

Benefits and Challenges of Automatic Speech Recognition

Benefits

- Productivity: ASR accelerates workflows by automating transcription and enabling voice-driven controls.

- Accessibility: Makes technology approachable for users with disabilities, supporting inclusive design.

- Hands-Free Operation: Essential for mobile, automotive, and smart home contexts where manual input is impractical. Implementing a Voice SDK can make hands-free operation seamless in your applications.

Challenges

- Accents & Dialects: Variability in speech can reduce accuracy, especially for underrepresented languages.

- Background Noise: Noisy environments challenge even advanced speech processing systems.

- Privacy: Handling of voice data raises concerns about user consent and data protection.

- Accuracy: Achieving high accuracy (commonly measured as word error rate, or WER) across diverse scenarios remains a technical hurdle.
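Accuracy is most commonly reported as word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the system's output into a reference transcript, divided by the number of words in the reference. The function below is a minimal sketch of that computation using a word-level edit distance; it only lowercases and splits on whitespace, whereas real evaluations usually normalize punctuation and numbers first.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count,
    computed as a word-level Levenshtein distance."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev holds the DP row for the previous reference prefix:
    # prev[j] = edit distance between that prefix and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # substitution (or match when cost is 0)
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


print(word_error_rate("turn on the kitchen lights", "turn on kitchen light"))  # 0.4
```

Here one deletion ("the") and one substitution ("lights" to "light") over five reference words give a WER of 0.4. Because insertions also count as errors, WER can exceed 1.0.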

Privacy, Security, and Ethical Considerations in ASR

Automatic speech recognition systems process sensitive voice data. Developers must address privacy, security, and ethical challenges:

- Data Handling: Ensure speech recordings are stored and transmitted securely. Use encryption and limit data retention.

- User Consent: Clearly communicate how voice data is used and obtain explicit consent.

- Ethical Concerns: Guard against misuse, such as unauthorized surveillance or bias in model predictions. Regular audits and transparency are vital for responsible ASR deployment.
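The advice to limit data retention can be enforced with a periodic cleanup job. The sketch below only identifies which recordings have outlived a retention window; the 30-day window, the `(recording_id, created_at)` record shape, and the `expired_recordings` helper are hypothetical, and actually deleting the audio (including any backups) is left to your storage layer.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical policy: keep raw recordings for at most 30 days.
RETENTION = timedelta(days=30)


def expired_recordings(recordings, now=None, retention=RETENTION):
    """Return IDs of recordings created before the retention cutoff.

    `recordings` is an iterable of (recording_id, created_at) pairs, where
    created_at is a timezone-aware datetime.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    cutoff = now - retention
    return [rec_id for rec_id, created_at in recordings if created_at < cutoff]


# Example with a fixed "now" so the result is reproducible.
now = datetime(2025, 6, 1, tzinfo=timezone.utc)
recordings = [
    ("call-001", datetime(2025, 4, 15, tzinfo=timezone.utc)),
    ("call-002", datetime(2025, 5, 20, tzinfo=timezone.utc)),
]
print(expired_recordings(recordings, now=now))  # ['call-001']
```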

Trends and Future of Automatic Speech Recognition

In 2025, automatic speech recognition continues to evolve rapidly:

- AI Advancements: Large-scale transformer models (such as OpenAI's Whisper) and self-supervised learning (such as wav2vec 2.0) continue to improve ASR accuracy.

- Edge Processing: On-device ASR reduces latency and improves privacy by keeping data local.

- Multilingual Models: Unified models are enabling seamless recognition across languages and dialects, making global applications more viable.

Conclusion

Automatic speech recognition is a cornerstone of modern technology, powering hands-free interfaces, boosting accessibility, and enabling smarter applications. As deep learning, NLP, and edge AI converge, ASR will become more accurate, multilingual, and privacy-aware. Developers in 2025 have unprecedented access to powerful ASR tools and APIs, making it easier than ever to build innovative, voice-enabled solutions for the future.


FAQ