The Ultimate Guide to Speech Latency: Optimizing Conversational AI for Real-Time Response (2025)

Introduction

Speech latency refers to the delay between a user’s spoken input and the system’s audible or textual response. In 2025, with the proliferation of AI-powered voice assistants, chatbots, and voice-driven applications, minimizing speech latency is more critical than ever. High speech latency disrupts human-computer interaction, degrades the user experience, and slows the adoption of conversational AI. Low-latency speech is essential for natural conversation because it lets voice agents respond as quickly as people expect. This guide explores what speech latency is, why it matters, how to measure and benchmark it, and advanced strategies for latency optimization in real-time speech-driven applications.

What Is Speech Latency?

Speech latency is the time between the moment a user finishes speaking and the moment the system begins to respond. For developers, it breaks down into speech-to-text (STT) latency and text-to-speech (TTS) latency, which, together with processing and network time, add up to the end-to-end voice AI latency:

- Speech-to-text latency: Time taken to convert spoken audio into text

- Text-to-speech latency: Time taken to synthesize spoken output from a text response

- End-to-end voice latency: Combined delay from user speech, recognition, processing, and system response

These components collectively shape the responsiveness of AI voice interaction. High latency can cause turn-taking delays, making interactions feel robotic or frustrating. Developers must consider both client and server-side factors, including network delay, inference time, and device performance, when measuring conversational AI latency. For teams building real-time voice features, integrating a robust

Voice SDK

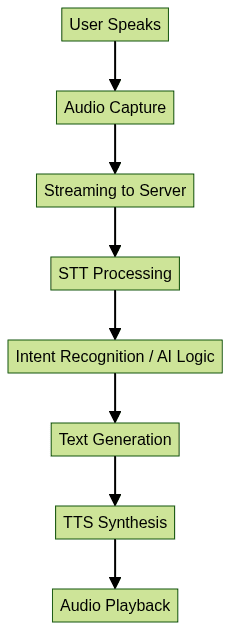

can help streamline audio processing and reduce overall latency.Here’s a visualization of the latency flow in a typical voice AI system:

This flow highlights each step where latency may accumulate, emphasizing the need for optimization at every stage.

Why Speech Latency Matters

Speech latency affects how users perceive and interact with AI-driven voice systems. In human conversation, the typical gap between turns is about 200 ms. Delays well beyond this break the natural flow and make users feel that the system is unresponsive or inattentive.
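To see how quickly a 200 ms target is used up, the sketch below models end-to-end latency as the sum of sequential pipeline stages. The stage names and millisecond values are hypothetical placeholders for illustration, not measurements of any provider; substitute your own measured numbers.

```python
# Hypothetical per-stage latencies in milliseconds (illustrative only).
STAGE_LATENCY_MS = {
    "network_uplink": 40,
    "speech_to_text": 120,
    "processing": 150,
    "text_to_speech": 90,
    "network_downlink": 40,
}

TURN_TAKING_TARGET_MS = 200  # typical gap between turns in human conversation


def end_to_end_latency(stages):
    """Model end-to-end latency as the sum of sequential stage latencies."""
    return sum(stages.values())


def slowest_stage(stages):
    """Return the (name, latency_ms) pair that contributes the most delay."""
    return max(stages.items(), key=lambda item: item[1])


total = end_to_end_latency(STAGE_LATENCY_MS)
name, ms = slowest_stage(STAGE_LATENCY_MS)
print(f"End-to-end: {total} ms (target: {TURN_TAKING_TARGET_MS} ms)")
print(f"Over budget by {max(0, total - TURN_TAKING_TARGET_MS)} ms; slowest stage: {name} ({ms} ms)")
```

This additive model assumes each stage waits for the previous one to finish. Techniques such as streaming STT and incremental TTS overlap stages, so a real pipeline can come in under the simple sum.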

In daily applications:

- Virtual assistants (e.g., Alexa, Google Assistant) rely on low latency speech for smooth, natural exchanges.

- In gaming, voice chat needs minimal latency to maintain immersion and competitiveness.

- Customer service bots must minimize turn-taking delay to keep interactions efficient and satisfying.

A seamless, low-latency experience increases user trust, engagement, and overall satisfaction in voice-driven applications. Keeping speech recognition response time and TTS API latency below noticeable thresholds is now a core requirement for modern voice AI solutions. For developers seeking to add calling features, exploring a phone call API can further enhance the responsiveness of conversational platforms.

Key Components of Speech Latency

Speech latency in AI systems is determined by several key components:

Network Latency

The delay added by transmitting audio and data between the user’s device and the cloud or server. Network delay can fluctuate with bandwidth, congestion, and geographical distance. Leveraging an efficient Voice SDK can help minimize network overhead and improve real-time communication.

Inference Time (Model Processing)

The time taken by AI models to process input and generate output. This includes both STT (speech-to-text) and TTS (text-to-speech) inference times, often affected by model architecture and hardware acceleration.
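Inference time is easiest to compare across models and hardware when it is normalized by audio length. A common metric is the real-time factor (RTF): processing time divided by the duration of the audio processed, where values below 1.0 mean the model runs faster than real time. The sketch below times any inference callable; `fake_infer` is a hypothetical stand-in for a real STT or TTS model call.

```python
import time


def real_time_factor(infer_fn, audio_seconds, *args, warmup=1, **kwargs):
    """Time one call to infer_fn and return (elapsed_ms, rtf).

    Warm-up calls run first so one-time costs (model loading, JIT
    compilation, cache population) are excluded from the measurement.
    """
    for _ in range(warmup):
        infer_fn(*args, **kwargs)
    start = time.perf_counter()
    infer_fn(*args, **kwargs)
    elapsed_s = time.perf_counter() - start
    return elapsed_s * 1000, elapsed_s / audio_seconds


def fake_infer(samples):
    # Hypothetical placeholder for a real model call: sleeps for 50 ms.
    time.sleep(0.05)
    return len(samples)


# 2 seconds of 16 kHz audio represented as a list of zero samples.
elapsed_ms, rtf = real_time_factor(fake_infer, 2.0, [0] * 32000)
print(f"Inference: {elapsed_ms:.1f} ms, RTF: {rtf:.3f}")
```

Because RTF is independent of clip length, it is a fairer basis than raw milliseconds when comparing an on-device model with a cloud model on different test audio.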

Device and Cloud Infrastructure

Performance depends on whether inference runs on-device (edge deployment) or in the cloud. Device capabilities (CPU, GPU, NPU) and cloud architecture (server region, load balancing) play crucial roles. For those building cross-platform solutions, a Python video and audio calling SDK can simplify integration and help keep latency consistent across devices.

Code Snippet: Measuring Latency in API Calls

Here’s how to measure API latency in Python for a speech-to-text service, capturing total round-trip time:

```python
import time

import requests


def measure_stt_latency(audio_file_path, stt_api_url):
    # Read the file first so disk I/O is not counted in the measurement.
    with open(audio_file_path, "rb") as f:
        audio_data = f.read()

    # perf_counter() is monotonic and high-resolution, unlike time.time().
    start = time.perf_counter()
    response = requests.post(stt_api_url, files={"audio": audio_data}, timeout=30)
    end = time.perf_counter()
    response.raise_for_status()  # don't report a latency for a failed request

    latency_ms = (end - start) * 1000
    print(f"Speech-to-text latency: {latency_ms:.2f} ms")
    return latency_ms
```

Monitor both STT and TTS API latency in your application to identify bottlenecks and opportunities for optimization.
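For TTS, the delay before the first audio bytes arrive often matters more than total synthesis time, because playback can start as soon as audio begins streaming. The sketch below uses only the standard library to record both time-to-first-byte and total time. The endpoint URL and the JSON payload shape (`{"text": ...}`) are assumptions; adapt them to your provider's API.

```python
import json
import time
import urllib.request


def measure_tts_latency(tts_api_url, text):
    """Return (time_to_first_byte_ms, total_ms, audio_bytes) for one TTS request.

    Assumes a hypothetical endpoint that accepts {"text": ...} as JSON and
    returns audio bytes in the response body.
    """
    body = json.dumps({"text": text}).encode("utf-8")
    request = urllib.request.Request(
        tts_api_url, data=body, headers={"Content-Type": "application/json"}
    )
    start = time.perf_counter()
    with urllib.request.urlopen(request, timeout=30) as response:
        first = response.read(1)  # blocks until the first audio byte arrives
        first_byte_ms = (time.perf_counter() - start) * 1000
        rest = response.read()  # read the remainder of the audio
    total_ms = (time.perf_counter() - start) * 1000
    return first_byte_ms, total_ms, first + rest
```

A large gap between the two numbers suggests that a streaming audio player could hide much of the synthesis time from the user.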

Measuring and Benchmarking Speech Latency

Measuring speech latency requires specialized tools and clear methodologies. Developers often use:

- Network analyzers (e.g., Wireshark) to profile packet transmission and server round-trip

- Application-level timers to capture end-to-end user interaction latency

- Vendor-provided analytics for speech recognition response time and TTS API latency
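Application-level timers can be as simple as a context manager that records how long each pipeline stage takes within one conversational turn. The sketch below shows one way to do this; the `StageTimer` class and the stage names are illustrative, and the `time.sleep` calls stand in for real STT, processing, and TTS calls.

```python
import time
from contextlib import contextmanager


class StageTimer:
    """Records wall-clock durations (ms) for named stages of one voice turn."""

    def __init__(self):
        self.durations_ms = {}

    @contextmanager
    def stage(self, name):
        start = time.perf_counter()
        try:
            yield
        finally:
            # Record the duration even if the stage raises an exception.
            self.durations_ms[name] = (time.perf_counter() - start) * 1000

    def total_ms(self):
        # Equals end-to-end latency only if the stages ran one after another.
        return sum(self.durations_ms.values())


timer = StageTimer()
with timer.stage("speech_to_text"):
    time.sleep(0.02)  # placeholder for an STT call
with timer.stage("processing"):
    time.sleep(0.03)  # placeholder for response generation
with timer.stage("text_to_speech"):
    time.sleep(0.01)  # placeholder for a TTS call

for name, ms in timer.durations_ms.items():
    print(f"{name}: {ms:.1f} ms")
print(f"total: {timer.total_ms():.1f} ms")
```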

Approximate benchmark figures (as of 2025) for leading providers help set expectations; actual latency varies with region, audio length, model, and configuration:

| Provider | STT Latency (ms) | TTS Latency (ms) |
|---|---|---|
| Google Cloud | 220 | 180 |
| Amazon Polly | 250 | 160 |
| PlayHT | 190 | 150 |
| Microsoft Azure | 210 | 170 |
| Deepgram | 180 | 200 |
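Single measurements are noisy, so latency benchmarks are usually reported as percentiles over many runs; the tail (p95 or p99) is what users notice as occasional sluggish turns. A minimal sketch using Python's standard `statistics` module, with made-up sample values for illustration:

```python
import statistics


def summarize_latencies(samples_ms):
    """Return p50, p95, and max latency (ms) for a list of measurements."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples to compute percentiles")
    # quantiles(n=100) returns the 99 cut points for percentiles 1..99.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94], "max": max(samples_ms)}


# Hypothetical STT latency samples in ms, e.g. collected over repeated API calls.
samples = [182, 190, 175, 240, 198, 205, 188, 410, 193, 201]
print(summarize_latencies(samples))
```

Comparing providers on p95 rather than on a single run keeps one lucky (or unlucky) request from skewing the comparison.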

By establishing latency benchmarks, teams can compare providers and track progress in latency optimization across their speech-driven applications. If you're building browser-based solutions, a JavaScript video and audio calling SDK can help you achieve low-latency audio and video interactions directly in the web environment.

Optimization Strategies for Reducing Speech Latency

Streaming Speech Recognition (STT)

Traditional buffered transcription waits for the entire audio input before processing, which adds significant delay. Streaming STT processes audio in real time as it arrives, delivering partial results while the user speaks and drastically reducing turn-taking delay.

Benefits:

- Real-time feedback for users

- Lower end-to-end voice AI latency

- Enhanced natural conversation flow
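A back-of-the-envelope model shows why streaming helps. With buffered STT, the whole utterance is processed only after the user stops speaking; with streaming, audio is transcribed as it arrives, so (if the recognizer keeps up with real time) only the final chunk remains when speech ends. The sketch below encodes that simplified model; the real-time factor, chunk size, and endpointing delay are hypothetical inputs, and network and finalization overhead are not modeled.

```python
def buffered_wait_ms(utterance_ms, rtf, endpoint_ms):
    """Delay after the user stops speaking until a buffered transcript is ready.

    The recognizer waits for end-of-speech detection (endpoint_ms), then
    processes the whole utterance at the given real-time factor (rtf).
    """
    return endpoint_ms + utterance_ms * rtf


def streaming_wait_ms(chunk_ms, rtf, endpoint_ms):
    """Delay after the user stops speaking until a streaming transcript is final.

    Assumes rtf < 1, so earlier chunks were already processed while the user
    was talking and only the last chunk is left.
    """
    if rtf >= 1:
        raise ValueError("streaming only keeps up with live audio when rtf < 1")
    return endpoint_ms + chunk_ms * rtf


# Hypothetical inputs: 3 s utterance, 100 ms chunks, RTF 0.25, 200 ms endpointing.
print(buffered_wait_ms(3000, 0.25, 200))
print(streaming_wait_ms(100, 0.25, 200))
```

With these inputs the model gives 950 ms for buffered recognition versus 225 ms for streaming, and the buffered figure grows with utterance length while the streaming figure does not.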

For applications requiring seamless integration, an embeddable video calling SDK can be a valuable tool to quickly add real-time communication with minimal development effort.

Example: Implementing Streaming STT

Here’s a Python example using Google’s Speech-to-Text gRPC API for streaming transcription:

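```python
from google.cloud import speech_v1p1beta1 as speech

# 100 ms of 16 kHz, 16-bit mono LINEAR16 audio (16000 * 2 bytes * 0.1 s).
CHUNK_BYTES = 3200


def transcribe_streaming(audio_path):
    client = speech.SpeechClient()

    # Send the audio in small chunks so the service can start transcribing
    # before the whole file has been sent (assumes raw 16 kHz mono PCM).
    def audio_requests():
        with open(audio_path, "rb") as audio_file:
            while chunk := audio_file.read(CHUNK_BYTES):
                yield speech.StreamingRecognizeRequest(audio_content=chunk)

    config = speech.StreamingRecognitionConfig(
        config=speech.RecognitionConfig(
            encoding=speech.RecognitionConfig.AudioEncoding.LINEAR16,
            sample_rate_hertz=16000,
            language_code="en-US",
        ),
        interim_results=True,  # return partial hypotheses while audio streams in
    )
    responses = client.streaming_recognize(config, audio_requests())
    for response in responses:
        for result in response.results:
            if not result.alternatives:
                continue
            label = "Final" if result.is_final else "Interim"
            print(f"{label} transcript: {result.alternatives[0].transcript}")
```

Efficient TTS Models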

Modern TTS engines offer incremental speech synthesis, generating audio as text becomes available. Selecting a low latency model (e.g., FastSpeech, Glow-TTS) and tuning inference parameters further reduces TTS API latency.

Key Considerations:

- Use models optimized for real-time speech synthesis

- Deploy on hardware with GPU/TPU acceleration when possible
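Incremental synthesis pairs naturally with a response generator that emits text piece by piece: rather than waiting for the full reply, the application can send each complete sentence to TTS as soon as it is available. The sketch below covers only the text-chunking side; the token stream is simulated, and the sentence-boundary rule (split after `.`, `!`, or `?` followed by whitespace) is a simplifying assumption that will mis-split abbreviations such as "e.g.".

```python
import re
from typing import Iterable, Iterator

# A sentence ends at ., !, or ? followed by whitespace (simplified rule).
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentences_from_stream(tokens: Iterable[str]) -> Iterator[str]:
    """Yield complete sentences from a stream of text fragments.

    Each sentence can be handed to a TTS engine immediately, so audio for the
    first sentence can start while later text is still being generated.
    """
    buffer = ""
    for token in tokens:
        buffer += token
        parts = _SENTENCE_END.split(buffer)
        # Every part except the last is a complete sentence.
        for sentence in parts[:-1]:
            if sentence.strip():
                yield sentence.strip()
        buffer = parts[-1]
    if buffer.strip():
        yield buffer.strip()  # flush whatever remains when the stream ends


# Simulated token stream from a hypothetical response generator.
tokens = ["Sure", ", I can", " help. Your order", " shipped today! ", "Anything", " else?"]
for sentence in sentences_from_stream(tokens):
    print(sentence)  # in a real system, send each sentence to the TTS engine here
```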

For large-scale events or broadcasts, leveraging a Live Streaming API SDK helps maintain low-latency, high-quality audio/video delivery to thousands of participants simultaneously.

Edge Deployment and Network Optimization

Running STT/TTS inference at the edge removes or sharply reduces network latency: on-device inference avoids the network entirely, while on-prem or nearby edge servers shorten the round trip. Selecting optimal server regions and minimizing network hops further improves performance. Integrating a Voice SDK designed for edge and cloud flexibility can further reduce delays and boost reliability.

Best practices:

- Deploy models on user devices or edge servers for lowest possible latency

- Route traffic through closest data centers

- Implement intelligent load balancing to avoid congestion
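One way to route traffic to the closest data center is to measure connection setup time from the client to each candidate endpoint and pick the fastest. The sketch below times a TCP handshake with the standard `socket` module as a rough proxy for network round-trip time (it includes DNS resolution but excludes TLS and server processing). The endpoints are supplied by the caller; none are assumed here.

```python
import socket
import time


def tcp_connect_ms(host, port, timeout=2.0):
    """Return TCP connect time in ms (including DNS lookup), or None on failure."""
    start = time.perf_counter()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return (time.perf_counter() - start) * 1000
    except OSError:
        return None


def pick_fastest_endpoint(endpoints, attempts=3):
    """Return (host, port, best_ms) for the endpoint with the lowest connect time.

    Takes the minimum over several attempts to filter out transient spikes.
    Returns None if no endpoint is reachable.
    """
    best = None
    for host, port in endpoints:
        samples = [tcp_connect_ms(host, port) for _ in range(attempts)]
        samples = [t for t in samples if t is not None]
        if samples and (best is None or min(samples) < best[2]):
            best = (host, port, min(samples))
    return best
```

For example, `pick_fastest_endpoint([("us-east.example.com", 443), ("eu-west.example.com", 443)])` would compare two hypothetical regional hostnames. Connect time is only a proxy, so it is worth confirming the choice with end-to-end measurements against the real STT/TTS API.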

Case Studies: Real-World Latency Improvements

Rime Voice AI

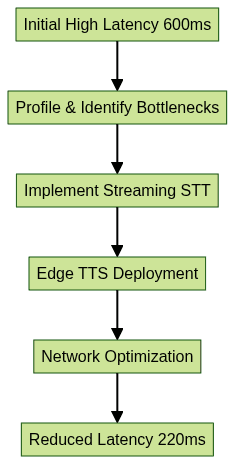

Rime implemented streaming STT and edge TTS deployment for their voice agents. Before optimization, their end-to-end speech latency averaged 600ms. After deploying on-device inference and optimizing network routing, latency dropped to 220ms.

PlayHT

PlayHT switched to a hybrid approach, combining cloud-based STT with incremental TTS and edge caching. This reduced TTS latency by 40%, enabling smoother AI voice interaction in customer service bots. For developers looking to replicate such improvements, adopting a Voice SDK can accelerate the deployment of low-latency voice features.

These examples illustrate the measurable impact of targeted latency optimization strategies in production environments.

Challenges and Future Trends in Speech Latency

Despite advances, several challenges persist:

- Multilingual latency: Supporting many languages increases model complexity and inference time

- Device variability: Performance varies widely between edge devices

- Network unpredictability: Fluctuating conditions can spike latency unexpectedly
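Network unpredictability is often quantified as jitter, the variation in packet transit time. RTP (RFC 3550) defines a running interarrival-jitter estimate that smooths each new transit-time difference with a gain of 1/16. The sketch below applies that formula to send and receive timestamps in milliseconds; the timestamps in the example are invented for illustration.

```python
def interarrival_jitter(send_ms, recv_ms):
    """Running interarrival jitter estimate (ms) using the RFC 3550 formula.

    send_ms[i] and recv_ms[i] are the send and receive times of packet i.
    """
    if len(send_ms) != len(recv_ms):
        raise ValueError("send and receive lists must be the same length")
    jitter = 0.0
    for i in range(1, len(send_ms)):
        # Change in one-way transit time between consecutive packets.
        d = (recv_ms[i] - recv_ms[i - 1]) - (send_ms[i] - send_ms[i - 1])
        jitter += (abs(d) - jitter) / 16
    return jitter


# Packets sent every 20 ms; the third arrives 10 ms later than the others' pattern.
print(interarrival_jitter([0, 20, 40, 60], [100, 120, 150, 160]))
```

A rising jitter value is an early warning that a larger playout buffer, and therefore more latency, may be needed to avoid choppy audio.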

Looking forward into 2025:

- Ultra-low latency models (sub-100ms) are emerging for both STT and TTS

- Zero-latency conversational agents are being researched, aiming for imperceptible delays in human-computer interaction

- Multimodal AI will require even more aggressive latency optimization as input/output types expand

Achieving near-zero latency could fundamentally transform how users interact with speech-driven applications, making AI voice interaction feel as natural as human conversation.

Conclusion

Speech latency remains a defining factor in conversational AI and voice-driven applications. Developers must understand, measure, and optimize network delay, inference time, and infrastructure to meet the high expectations for real-time response in 2025. By leveraging streaming STT, efficient TTS models, and edge deployment, teams can deliver natural, responsive voice AI experiences and keep human-computer interaction fast and fluid. If you're ready to build next-generation, low-latency voice solutions, try it for free and experience the difference for yourself.


FAQ