Transcoding vs Encoding – What’s the Difference and Why Does it Matter?

Introduction

In the rapidly evolving world of digital media, understanding the difference between transcoding and encoding is crucial for developers, video engineers, and content providers. Both processes are fundamental to modern video workflows, affecting everything from streaming quality to device compatibility. As video delivery becomes more complex in 2025, choosing the right approach can improve performance, reduce costs, and ensure smooth playback across platforms. This guide explains what each process does, how they differ, and why the choice matters in contemporary video applications.

Understanding Video Encoding

Video encoding is the process of converting raw video data into a compressed digital format using a specific algorithm, known as a codec. Raw video files captured from cameras or screen recordings are massive and not suitable for efficient storage or transmission. Encoding solves this by reducing file size while maintaining a balance between quality and compression. This process is essential for making video streaming, archiving, and sharing feasible at scale.
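The following small shell sketch puts "massive" into numbers by estimating the data rate of uncompressed video. The function names are illustrative. It assumes 8-bit 4:2:0 sampling (the common yuv420p layout), which averages 1.5 bytes per pixel. 10-bit or 4:4:4 footage is larger still.

```bash
#!/usr/bin/env bash
# Estimate the data rate of uncompressed video.
# Assumption: 8-bit 4:2:0 sampling (yuv420p): one luma byte per pixel plus
# two chroma planes at quarter resolution = 1.5 bytes per pixel on average.

raw_bytes_per_second() {
  local width=$1 height=$2 fps=$3
  # Multiply by 3, then divide by 2, to stay in integer arithmetic.
  echo $(( width * height * 3 / 2 * fps ))
}

raw_megabits_per_second() {
  local bytes
  bytes=$(raw_bytes_per_second "$1" "$2" "$3")
  # 8 bits per byte, 1,000,000 bits per megabit (integer result).
  echo $(( bytes * 8 / 1000000 ))
}
```

For 1080p at 30 fps, `raw_bytes_per_second 1920 1080 30` prints 93312000 (about 93 MB every second), and `raw_megabits_per_second 1920 1080 30` prints 746. That works out to roughly 5.6 GB per minute of footage, which is why video is encoded before it is stored or streamed.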

For developers building real-time communication platforms, integrating a Video Calling API often requires a solid understanding of encoding principles to ensure smooth and efficient video transmission.

When raw video is encoded, the encoder analyzes the frames and removes redundancy, both within each frame (spatial) and between successive frames (temporal). It then writes the result in a compressed format such as H.264 or H.265. Audio is compressed separately with an audio codec such as AAC or Opus. The result is a compressed file or stream that can be easily distributed. Encoding is usually the first step in any video workflow, whether for video on demand, live streaming, or cloud storage.

Role of Codecs

Codecs (coder-decoders) are algorithms that encode and decode video streams. Popular codecs in 2025 include H.264 (AVC), H.265 (HEVC), AV1, and VVC. Each codec offers different trade-offs between compression efficiency, compatibility, and computational requirements. Choosing the right codec is fundamental to the encoding workflow, as it affects quality, file size, and device support.

If you're developing browser-based applications, a JavaScript video and audio calling SDK can help you implement efficient video encoding and decoding directly in the client.

Lossy vs Lossless Encoding

Encoding can be lossy or lossless. Lossy encoding discards some information, ideally detail the viewer is unlikely to notice, to achieve much higher compression. The result is smaller files at the cost of some quality loss. Lossless encoding retains all original data, producing larger files with perfect fidelity, and is often used in professional editing and archiving.

Example Code Snippet: Basic FFmpeg Encoding Command

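ffmpeg -f rawvideo -pixel_format yuv420p -video_size 1920x1080 -framerate 30 -i input.raw -c:v libx264 -preset fast -crf 23 output.mp4

This FFmpeg command encodes a raw video file to H.264. It balances quality (CRF 23, libx264's default; lower values mean higher quality and larger files) against encoding speed (the fast preset). A headerless .raw file carries no description of its own layout, so FFmpeg must be told the pixel format, frame size, and frame rate before -i. The values shown (yuv420p, 1920x1080, 30 fps) are assumptions and must match how the footage was captured.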
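The lossy/lossless distinction shows up directly in encoder settings. The following sketch wraps three FFmpeg invocations in shell functions. The function names and the DRY_RUN switch are illustrative helpers, not part of FFmpeg. By default each function only prints its command; set DRY_RUN=0 to run it. The first two functions require an FFmpeg build with libx264.

```bash
#!/usr/bin/env bash
# Print the command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Only video settings are shown; FFmpeg picks its default audio codec for the
# output container unless you add audio options.

# Lossy: CRF 23 is libx264's default; higher CRF = smaller file, lower quality.
encode_lossy() {
  run ffmpeg -i "$1" -c:v libx264 -preset medium -crf 23 "$2"
}

# Lossless x264: quantizer 0 keeps every sample, provided the pixel format is
# not converted along the way. Expect much larger files.
encode_lossless_x264() {
  run ffmpeg -i "$1" -c:v libx264 -preset veryslow -qp 0 "$2"
}

# Lossless FFV1 (version 3), an intra-frame codec often used for archiving in MKV.
encode_lossless_ffv1() {
  run ffmpeg -i "$1" -c:v ffv1 -level 3 "$2"
}
```

For example, `encode_lossless_ffv1 master.mov archive.mkv` prints the FFV1 command. Running all three on the same clip and comparing output sizes illustrates the trade-off between compression and fidelity.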

What is Video Transcoding?

Transcoding is the process of converting a previously encoded video file into a new format, bitrate, or resolution. Unlike encoding, which operates on raw video, transcoding takes an existing compressed video, decodes it, applies modifications (such as resizing or changing the codec), and then re-encodes it. Transcoding is critical for adapting content to different devices, networks, or streaming requirements.
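A single FFmpeg command performs the whole decode, modify, and re-encode cycle. The sketch below uses a hypothetical function name and assumes an FFmpeg build with libx265. It resizes an existing file to 720p and converts the video to H.265, copying the audio unchanged. The audio copy only works if the output container accepts the source audio codec.

```bash
#!/usr/bin/env bash
# Print the command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Decode the existing stream, scale it to 720 lines (-2 keeps the aspect
# ratio with an even width), and re-encode the video with libx265 at its
# default CRF of 28. Audio packets are copied without re-encoding.
transcode_to_hevc_720p() {
  run ffmpeg -i "$1" -vf scale=-2:720 -c:v libx265 -preset medium -crf 28 -c:a copy "$2"
}
```

`transcode_to_hevc_720p lecture_h264.mp4 lecture_hevc_720p.mp4` prints the command that would convert a 1080p H.264 upload into a smaller 720p HEVC file.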

For mobile developers, a React Native video and audio calling SDK can streamline handling transcoded streams across platforms.

Typical scenarios for transcoding include:

- Delivering video to devices with different capabilities (smartphones, TVs, legacy hardware)

- Reducing bitrate for mobile streaming or low-bandwidth connections

- Creating multiple renditions for adaptive bitrate streaming (ABR)

- Converting to a new codec as standards evolve

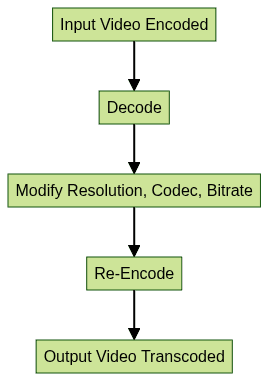

Transcoding Workflow

The basic transcoding workflow consists of three main steps: decode, process/modify, and re-encode. This workflow is central to media servers, OTT streaming, and cloud-based video delivery platforms.
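The sketch below makes the three stages visible by splitting them across two FFmpeg processes joined by a pipe. The first process decodes and scales the input into raw yuv420p frames. The second encodes those frames to H.265. This is illustrative only: a single ffmpeg command is normally simpler and keeps the audio, which this video-only pipe drops. Raw frames carry no size or timing information, so the sketch assumes a 16:9 source and a fixed 1280x720 output at 30 fps. Function names are hypothetical. By default the script only prints the pipeline; set DRY_RUN=0 to run it.

```bash
#!/usr/bin/env bash
# Print the command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Decode + process: decode the input, scale it (assumes a 16:9 source),
# force 30 fps, drop audio, and write raw yuv420p frames to stdout.
decode_stage() {
  run ffmpeg -i "$1" -vf scale=1280:720 -r 30 -an -pix_fmt yuv420p -f rawvideo -
}

# Re-encode: read raw frames from stdin and encode them as H.265. Raw frames
# have no header, so pixel format, size, and frame rate are restated here.
encode_stage() {
  run ffmpeg -f rawvideo -pixel_format yuv420p -video_size 1280x720 \
    -framerate 30 -i - -c:v libx265 -crf 28 "$1"
}

transcode_pipeline() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    # Show both stages rather than piping one echo into another.
    echo "$(decode_stage "$1") | $(encode_stage "$2")"
  else
    decode_stage "$1" | encode_stage "$2"
  fi
}
```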

If you're building cross-platform apps, a Flutter video and audio calling API can help you manage transcoding and streaming efficiently on both iOS and Android devices.

Types of Transcoding

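Transcoding can be lossless-to-lossless, lossy-to-lossy, or lossy-to-lossless, depending on the source and destination formats. Each type has implications for quality and file size. Lossless-to-lossless preserves quality exactly. Lossy-to-lossy adds another round of compression loss. Lossy-to-lossless prevents further loss but cannot restore detail that the original lossy encode discarded, and it produces a much larger file.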

Transcoding vs Encoding: Key Differences

Understanding transcoding vs encoding is essential for optimizing video workflows. While encoding is the initial compression of raw video, transcoding modifies already encoded content for new purposes.

For those looking to integrate video communication features quickly, an embed video calling SDK can simplify the process by handling much of the encoding and transcoding complexity behind the scenes.

Side-by-Side Comparison Table

| Feature | Encoding | Transcoding |
|---|---|---|
| Input | Raw video/audio | Encoded video/audio |
| Output | Compressed, encoded file | New format/bitrate/resolution file |
| Typical Use | Initial storage/distribution | Adaptation/compatibility/streaming |
| Quality Impact | Controlled by codec/settings | May degrade with each generation |

When to Use Encoding vs Transcoding

Use encoding when converting raw footage for the first time (e.g., camera to MP4). Use transcoding when you need to adapt existing content for new devices, streaming platforms, or formats. For example, a VOD platform may encode videos once and then transcode them on demand for different users or networks.
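The rule of thumb above can be written as a small decision helper. This is a simplified sketch with a hypothetical function name. It looks only at the video codec name, as ffprobe reports it (ffprobe uses rawvideo for uncompressed streams). It ignores resolution and bitrate, which in practice can also force a transcode. When a stream is already in the target codec, only its container may need to change; this is called transmuxing.

```bash
#!/usr/bin/env bash
# Decide which operation a file needs, given its video codec name and the
# codec the target platform expects. Simplified: resolution and bitrate
# differences, which may also require a transcode, are ignored.
choose_operation() {
  local source_codec=$1 target_codec=$2
  if [ "$source_codec" = "rawvideo" ]; then
    echo "encode"      # uncompressed input: first-time compression
  elif [ "$source_codec" = "$target_codec" ]; then
    echo "transmux"    # already the right codec: at most the container changes
  else
    echo "transcode"   # decode, then re-encode into the target codec
  fi
}
```

To use it on a real file, read the codec name with `ffprobe -v error -select_streams v:0 -show_entries stream=codec_name -of default=noprint_wrappers=1:nokey=1 input.mp4`, which prints a name such as h264 or hevc.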

If you're targeting Android, understanding WebRTC Android implementations is vital for optimizing encoding and transcoding in real-time communication apps.

Both processes affect video quality, file size, and compatibility. Encoding usually offers more control over quality. Transcoding risks generational quality loss but enables broader device support and streaming flexibility.

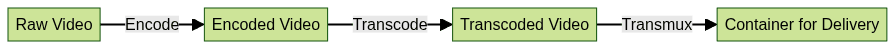

Codecs, Containers, and Transmuxing Explained

What Are Codecs and Containers?

A codec compresses and decompresses video (or audio) data. A container (such as MP4, MKV, or MOV) packages video, audio, and metadata into a single file. The container format defines how this data is organized and which codecs it can carry. Choosing an appropriate codec and container is crucial for playback compatibility and streaming efficiency.
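ffprobe, which ships with FFmpeg, offers a quick way to see the codec/container split in a real file. The sketch below uses a hypothetical function name and lists the container format along with the codec of every stream. By default it only prints the ffprobe command; set DRY_RUN=0 to run it. For an MP4 file, FFmpeg typically reports the container as mov,mp4,m4a,3gp,3g2,mj2, because one demuxer handles that whole family. The streams show codecs such as h264 and aac.

```bash
#!/usr/bin/env bash
# Print the command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Show the container (format_name) and, for each stream, its index,
# type (video/audio/...), and codec, one line per section.
inspect_media() {
  run ffprobe -v error \
    -show_entries format=format_name:stream=index,codec_type,codec_name \
    -of compact "$1"
}
```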

For developers seeking to add real-time communication features, a robust Video Calling API can abstract much of the complexity associated with codecs and containers, allowing you to focus on user experience.

Role of Transmuxing in Video Delivery

Transmuxing (or remuxing) is the process of changing the container format without altering the encoded video/audio streams. As long as the target container supports the existing codecs, transmuxing enables fast adaptation to different streaming formats (e.g., HLS, DASH) without re-encoding or quality loss.
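Both kinds of transmuxing can be sketched with FFmpeg's stream copy mode (-c copy), which moves compressed packets into a new container without decoding them. The function names, segment length, and output layout are illustrative assumptions. By default the functions only print their commands; set DRY_RUN=0 to run them. The copy fails if the target container does not support a source codec. HLS segments can only start on keyframes, so -hls_time is a target rather than an exact length.

```bash
#!/usr/bin/env bash
# Print the command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# MKV -> MP4 with no re-encode. +faststart moves the index (moov atom) to the
# front of the file so playback can begin before the download finishes.
remux_to_mp4() {
  run ffmpeg -i "$1" -c copy -movflags +faststart "$2"
}

# MP4 (e.g. H.264/AAC) -> HLS: same streams, split into ~6 s MPEG-TS segments
# plus a VOD playlist. FFmpeg does not create the output directory itself.
package_hls() {
  local input=$1 out_dir=$2
  if [ "${DRY_RUN:-1}" != "1" ]; then
    mkdir -p "$out_dir"
  fi
  run ffmpeg -i "$input" -c copy -f hls -hls_time 6 -hls_playlist_type vod \
    -hls_segment_filename "$out_dir/seg_%03d.ts" "$out_dir/index.m3u8"
}
```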

Transmuxing is often used in conjunction with encoding and transcoding to optimize video delivery across diverse platforms.

Quality Considerations: Encoding vs Transcoding

How Encoding Choices Affect Quality

Choosing a codec, bitrate, and encoding settings directly affects video quality. High-efficiency codecs (HEVC, AV1) can deliver better quality at lower bitrates but may require more processing power. The initial encoding phase is critical — poor choices here can’t be fixed later.
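One way to see these trade-offs is to encode the same source with several codecs, then compare file size, encoding time, and visual quality. The sketch below assumes an FFmpeg build that includes libx264, libx265, and libsvtav1. The CRF values are illustrative starting points (23 and 28 are the libx264 and libx265 defaults). They are not equivalent quality levels, because each encoder uses its own CRF scale. Audio is copied, which assumes the source audio is already MP4-compatible (e.g. AAC). By default the functions only print their commands; set DRY_RUN=0 to run them.

```bash
#!/usr/bin/env bash
# Print the command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# H.264: widest device support, least compression of the three.
encode_h264() { run ffmpeg -i "$1" -c:v libx264 -preset medium -crf 23 -c:a copy "$2"; }

# H.265: better compression, more CPU per frame.
encode_hevc() { run ffmpeg -i "$1" -c:v libx265 -preset medium -crf 28 -c:a copy "$2"; }

# AV1 via SVT-AV1: presets are numeric; lower numbers are slower and more efficient.
encode_av1() { run ffmpeg -i "$1" -c:v libsvtav1 -preset 8 -crf 35 -c:a copy "$2"; }
```

With DRY_RUN=0, timing each function on the same clip (for example with `time`) and comparing output sizes shows the compression-versus-compute trade-off described above.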

If your application relies on high-quality video calls, selecting a Video Calling API with advanced encoding options can help maintain clarity even under varying network conditions.

How Transcoding Affects Quality

Transcoding introduces another generation of compression, which can further degrade quality, especially if using lossy codecs. Each decode/encode cycle can amplify artifacts and reduce fidelity, particularly at lower bitrates or aggressive compression settings.
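Generational loss is easy to observe. The sketch below uses hypothetical function and file names. It re-encodes a clip repeatedly, each generation from the previous one. After each step, it runs FFmpeg's psnr filter against the original and logs the average PSNR to stderr, which typically drifts downward as generations accumulate. It assumes libx264 and that the outputs keep the original's resolution, since psnr compares frames pixel by pixel. By default it only prints the commands; set DRY_RUN=0 to run them.

```bash
#!/usr/bin/env bash
# Print the command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: generation_loss_test ORIGINAL GENERATIONS [CRF]
generation_loss_test() {
  local original=$1 generations=$2 crf=${3:-28} prev next i
  prev=$original
  i=1
  while [ "$i" -le "$generations" ]; do
    next="gen_${i}.mp4"
    # Encode the next generation from the previous one, not from the original.
    run ffmpeg -y -i "$prev" -c:v libx264 -preset fast -crf "$crf" -an "$next"
    # Compare this generation with the original; output is discarded (-f null).
    run ffmpeg -i "$next" -i "$original" -lavfi psnr -f null -
    prev=$next
    i=$((i + 1))
  done
}
```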

Best Practices to Minimize Quality Loss

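- Always encode from the highest-quality source available

- Use lossless intermediates, or transmux instead of transcoding when only the container needs to change

- Limit the number of transcode generations, for example by deriving every rendition from the master file rather than from another rendition

- Choose modern, efficient codecs for both encoding and transcoding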

Practical Use Cases

Streaming to Multiple Devices

Modern streaming services must deliver video to smartphones, tablets, smart TVs, and browsers, each with distinct capabilities. By combining encoding and transcoding, platforms can provide device-specific renditions, ensuring optimal playback quality and compatibility.

For developers interested in building scalable video solutions, you can try it for free to experience how advanced APIs streamline transcoding and encoding workflows.

For example, a media server might encode a high-quality master file and transcode it to lower resolutions and bitrates for mobile streaming or low-bandwidth users.
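The fan-out from a master file can be sketched as a loop over a rendition ladder. Every rendition is transcoded directly from the master, so each one is only a single generation removed from it. The ladder heights and bitrate caps are hypothetical placeholders, not recommendations. The -maxrate/-bufsize pair caps the bitrate of an otherwise quality-targeted (CRF) encode, which suits streaming over constrained links. By default the loop only prints its commands; set DRY_RUN=0 to run them.

```bash
#!/usr/bin/env bash
# Print the command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: make_renditions MASTER
make_renditions() {
  local master=$1 rung height maxrate
  # Hypothetical ladder entries in the form height:max_kbps.
  for rung in 1080:5000 720:3000 480:1500 360:800; do
    height=${rung%%:*}
    maxrate=${rung##*:}
    # Scale from the master (never from a smaller rendition); the buffer is
    # set to twice the peak rate.
    run ffmpeg -y -i "$master" -vf "scale=-2:${height}" \
      -c:v libx264 -preset medium -crf 23 \
      -maxrate "${maxrate}k" -bufsize "$((maxrate * 2))k" \
      -c:a aac -b:a 128k "${height}p.mp4"
  done
}
```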

Video on Demand (VOD) Platforms

VOD platforms typically encode content once at high quality, then transcode to create adaptive bitrate ladders for smooth playback regardless of network speed. This workflow ensures efficient storage and reliable streaming.
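Once each rendition is packaged as HLS, players discover the bitrate ladder through a multivariant (master) playlist. The sketch below writes a minimal one. The width:height:bandwidth argument format and the NNNp/index.m3u8 directory layout are assumptions for illustration. The HLS specification (RFC 8216) requires BANDWIDTH, the variant's peak bit rate in bits per second. A production playlist should normally also declare CODECS.

```bash
#!/usr/bin/env bash
# Write a minimal HLS multivariant playlist to stdout.
# Each argument describes one rendition as width:height:bandwidth_bps.
write_master_playlist() {
  local rung width rest height bandwidth
  echo "#EXTM3U"
  for rung in "$@"; do
    width=${rung%%:*}      # text before the first colon
    rest=${rung#*:}
    height=${rest%%:*}
    bandwidth=${rest#*:}   # text after the second colon
    echo "#EXT-X-STREAM-INF:BANDWIDTH=${bandwidth},RESOLUTION=${width}x${height}"
    echo "${height}p/index.m3u8"   # assumed per-rendition directory layout
  done
}
```

For example, `write_master_playlist 1920:1080:5500000 1280:720:3300000 > master.m3u8` lists a 1080p and a 720p variant (the bandwidth figures are placeholders). The player can then switch between them as network conditions change.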

Live Streaming Workflows

In live streaming, encoding captures and compresses the live feed, while transcoding generates various renditions in real-time for adaptive delivery to global audiences. Cloud encoding and transcoding services often power these workflows in 2025.
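A single-rendition live transcode to HLS might look like the following sketch. The ingest URL, output path, and function name are hypothetical. The keyframe settings assume a 30 fps input: -g 60 places a keyframe every 2 seconds, and disabling x264's scene-cut keyframes keeps them aligned with the 2-second segments. A real adaptive setup would run one such encode per rung, or a single FFmpeg process with several outputs. By default the function only prints its command; set DRY_RUN=0 to run it.

```bash
#!/usr/bin/env bash
# Print the command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: live_to_hls INPUT_URL OUTPUT_DIR
live_to_hls() {
  local input_url=$1 out_dir=$2
  if [ "${DRY_RUN:-1}" != "1" ]; then
    mkdir -p "$out_dir"
  fi
  # Low-latency x264 settings, fixed 2 s GOP (at 30 fps), AAC audio, and a
  # rolling 6-segment live playlist that deletes old segments.
  run ffmpeg -i "$input_url" \
    -c:v libx264 -preset veryfast -tune zerolatency -g 60 -x264-params scenecut=0 \
    -c:a aac -b:a 128k \
    -f hls -hls_time 2 -hls_list_size 6 -hls_flags delete_segments \
    "$out_dir/live.m3u8"
}
```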

Choosing the Right Solution

Choosing between encoding and transcoding depends on your source material, target platforms, network constraints, and playback requirements. If you start with raw footage, encoding is mandatory. If you are adapting existing video for new contexts, transcoding is essential, unless only the container needs to change, in which case transmuxing is enough. Cloud encoding and transcoding platforms offer scalable, on-demand solutions for both scenarios, enabling efficient video delivery for OTT, VOD, and live streaming services.

Conclusion

In summary, transcoding and encoding are distinct but complementary processes in modern video workflows. Encoding transforms raw video into a compressed format, while transcoding adapts already encoded content for new devices, bitrates, or platforms. Making informed choices about when and how to use each process is key to delivering high-quality, compatible video in 2025 and beyond.


FAQ