Introduction

The synergy between voice agents and large language models (LLMs) is redefining what’s possible in conversational AI. The concept of a "voice agent LLM connection" refers to the seamless integration of voice-based interfaces with advanced language models, enabling more natural, intuitive, and powerful user interactions. In 2025, as businesses and developers push for smarter automation and accessible AI, mastering the voice agent LLM connection becomes essential. This guide explores the core concepts, technologies, architecture patterns, implementation steps, and best practices you need to build robust, multimodal AI agents—whether for customer support, enterprise workflows, or innovative new products.

Understanding Voice Agent LLM Connection

What is a Voice Agent LLM Connection?

A voice agent LLM connection is the technical and architectural link between a voice interface (voice agent) and a large language model. The voice agent handles speech input and output, while the LLM processes, understands, and generates intelligent text-based responses. This connection empowers applications with:

- Speech recognition: Converting user speech to text.

- Natural language understanding: Leveraging LLMs for deep reasoning and context.

- Conversational output: Synthesizing natural-sounding speech.

Key use cases include:

- Automated customer support (24/7 virtual agents)

- Data collection and analytics via voice surveys

- Voice-enabled sales and lead qualification

For developers seeking to add real-time audio features, integrating a Voice SDK can accelerate the process of building interactive voice interfaces.

Why Combine Voice Agents with LLMs?

Integrating voice agents with LLMs transforms simple voice bots into multimodal AI agents. This combination allows:

- Multimodal capabilities: Processing both text and audio for richer interactions.

- Enhanced user experience: Delivering conversational, context-aware, and adaptive responses.

- Scalability: Supporting complex tasks, multi-turn dialogue, and enterprise-grade workflows.
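Multi-turn dialogue works because the agent resends earlier turns to the LLM with each new request. The following is a minimal sketch of that bookkeeping, assuming a chat-style `messages` payload; the `ConversationHistory` class and its `max_turns` cap are hypothetical names used only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ConversationHistory:
    """Keeps the system prompt plus the most recent user/assistant messages."""

    system_prompt: str
    max_turns: int = 10  # hypothetical cap; tune it to your model's context window
    turns: list[dict[str, str]] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.turns.append({"role": role, "content": content})
        # One turn is a user message plus an assistant reply, so keep 2 * max_turns messages.
        self.turns = self.turns[-2 * self.max_turns:]

    def as_messages(self) -> list[dict[str, str]]:
        """Return the list to send as the `messages` field of a chat request."""
        return [{"role": "system", "content": self.system_prompt}, *self.turns]


history = ConversationHistory(system_prompt="You are a concise phone support agent.", max_turns=2)
history.add("user", "Where is my order?")
history.add("assistant", "Could you give me the order number?")
history.add("user", "It is 1234.")
messages = history.as_messages()
```

Trimming by message count is the simplest policy; a token-based budget or a running summary of older turns may suit long calls better.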

Additionally, leveraging a phone call API enables seamless integration of telephony features, allowing your AI agents to interact with users over traditional phone networks.

Core Technologies for Voice Agent LLM Connection

Speech-to-Text and Text-to-Speech Engines

Speech recognition and synthesis are foundational for any voice agent LLM connection. Popular tools in 2025 include:

- Whisper (open-source, by OpenAI): High-quality speech-to-text (STT).

- Deepgram: Fast, accurate STT with real-time streaming API.

- Google Speech-to-Text, Azure Speech, Amazon Transcribe: Industry-grade alternatives.

On the output side:

- Google Text-to-Speech, Amazon Polly, Azure TTS: Robust speech synthesis.

- Open-source alternatives: Coqui TTS, Mozilla TTS for customizable voices.

To further enhance your application's capabilities, consider integrating a Video Calling API for seamless audio and video communication, or a Live Streaming API SDK for broadcasting interactive sessions.

Large Language Models (LLMs)

The backbone of the voice agent LLM connection is the LLM:

- OpenAI GPT-4, GPT-4o: Industry leaders for natural language tasks.

- Claude (Anthropic): Safe, enterprise-focused LLM via Claude API.

- Llama 3 (Meta): Open-source, local deployment options.

- Hugging Face: Access to thousands of open and fine-tuned models.

If you need to embed a video calling SDK directly into your application for instant deployment, prebuilt solutions can help you get started quickly.

Agent Frameworks and Orchestration

Orchestrating conversations and workflows requires advanced frameworks:

- LangChain: Modular chains for LLM applications, popular for RAG and agent workflows.

- AutoGen: Automated agent composition and orchestration.

- LlamaIndex: Data-centric agent architecture, ideal for RAG.

- Voiceflow: No-code/low-code solution for voice agent design and integration.

For those building audio-centric conversational agents, a robust Voice SDK can provide essential features like real-time audio streaming and moderation.

Data Storage & Retrieval (RAG, Vector DBs)

Robust voice agent LLM connections often need Retrieval Augmented Generation (RAG) and vector databases:

- Qdrant, Pinecone: Cloud-native vector databases for semantic search and RAG.

- Voiceflow Knowledge Base: Built-in for enterprise knowledge management.

- Postgres, MongoDB: Traditional databases for conversation logs and context.
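At its core, the retrieval step in RAG is a nearest-neighbor search over embedding vectors, followed by placing the retrieved passages into the prompt. Here is a minimal in-memory sketch under stated assumptions: the three-dimensional vectors are toy stand-ins for real embeddings, the sample documents are invented, and `retrieve` and `build_prompt` are hypothetical helpers. A production system would delegate the search to a vector database such as Qdrant or Pinecone.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec: list[float], documents: list[dict], top_k: int = 2) -> list[str]:
    """Return the top_k document texts ranked by cosine similarity to the query."""
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vec, doc["vector"]),
        reverse=True,
    )
    return [doc["text"] for doc in ranked[:top_k]]


def build_prompt(question: str, passages: list[str]) -> str:
    """Ground the LLM by restricting it to the retrieved context."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"


# Toy vectors stand in for real embeddings; the texts are invented sample data.
documents = [
    {"text": "Refunds are processed within 5 business days.", "vector": [0.9, 0.1, 0.0]},
    {"text": "Support is available 24/7 by phone.", "vector": [0.1, 0.9, 0.0]},
    {"text": "Our office is closed on public holidays.", "vector": [0.0, 0.2, 0.9]},
]
query_vector = [0.8, 0.2, 0.1]  # pretend embedding of the question below
passages = retrieve(query_vector, documents, top_k=1)
prompt = build_prompt("How long do refunds take?", passages)
```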

If your use case involves phone-based interactions, integrating a phone call API ensures your voice agent can handle inbound and outbound calls efficiently.

Architecture Patterns for a Voice Agent LLM Connection

Chained Approach vs. Real-Time Voice Processing

There are two main architectural styles for a voice agent LLM connection:

- Chained Approach: Sequential processing—audio to text, then to LLM, then to TTS.

- Real-Time Voice Processing: Streaming audio processed in near real-time for latency-sensitive applications.

For developers aiming to build scalable, real-time audio experiences, a Voice SDK is invaluable for managing low-latency audio streams and interactive voice features.

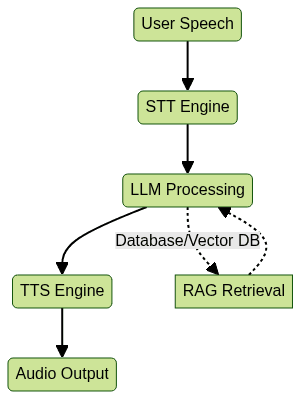
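Much of the latency difference between the two styles comes from when speech synthesis can begin. A chained pipeline waits for the complete LLM reply, while a streaming pipeline can start speaking as soon as the first sentence is complete. Here is a minimal sketch of that buffering step, assuming the LLM delivers its reply as a stream of text fragments; `sentences_from_tokens` and the fake token stream are illustrative and not part of any SDK.

```python
import re
from typing import Iterable, Iterator

# Split after sentence-ending punctuation that is followed by whitespace.
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentences_from_tokens(tokens: Iterable[str]) -> Iterator[str]:
    """Yield complete sentences as soon as they appear in a token stream."""
    buffer = ""
    for token in tokens:
        buffer += token
        parts = SENTENCE_END.split(buffer)
        # Everything except the last part is a finished sentence.
        for sentence in parts[:-1]:
            if sentence.strip():
                yield sentence.strip()
        buffer = parts[-1]
    if buffer.strip():
        yield buffer.strip()  # flush whatever remains when the stream ends


fake_llm_stream = ["Your order ", "has shipped. ", "It should arrive ", "on Friday. ", "Anything else?"]
spoken_chunks = list(sentences_from_tokens(fake_llm_stream))
```

Each yielded sentence can be handed to TTS right away while the LLM is still generating the rest of the reply.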

Key Components & Data Flow

A robust voice agent LLM connection involves the following components and flow:

- Speech-to-Text (STT): Converts user audio input to text.

- LLM Invocation: Processes the transcribed text and generates a response.

- Retrieval (RAG): Optionally augments LLM with external knowledge from a vector database.

- Text-to-Speech (TTS): Converts LLM output back to audio.

- Orchestration Layer: Handles workflow, error handling, and logging.
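The data flow above can be sketched as a small orchestration object that treats every stage as a plain callable. This is an illustrative design under stated assumptions, not a prescribed architecture: `VoicePipeline` and `handle_turn` are hypothetical names, and the lambda stubs stand in for real STT, LLM, TTS, and retrieval clients.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class VoicePipeline:
    """Wires the stages together; each stage is a plain callable."""

    stt: Callable[[bytes], str]
    llm: Callable[[str], str]
    tts: Callable[[str], bytes]
    retriever: Optional[Callable[[str], list[str]]] = None  # optional RAG step

    def handle_turn(self, audio: bytes) -> bytes:
        text = self.stt(audio)
        if self.retriever is not None:
            # Prepend retrieved passages so the LLM can ground its answer.
            context = "\n".join(self.retriever(text))
            text = f"Context:\n{context}\n\nUser: {text}"
        reply = self.llm(text)
        return self.tts(reply)


# Stub stages stand in for real STT/LLM/TTS clients.
pipeline = VoicePipeline(
    stt=lambda audio: audio.decode("utf-8"),
    llm=lambda prompt: f"Echo: {prompt.splitlines()[-1]}",
    tts=lambda text: text.encode("utf-8"),
    retriever=lambda query: ["Store hours are 9 to 5."],
)
audio_out = pipeline.handle_turn(b"When are you open?")
```

Keeping the stages as injected callables makes it straightforward to swap providers or to substitute stubs in tests.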

For applications requiring both audio and video communication, integrating a Voice SDK alongside your LLM workflow can streamline the development of comprehensive conversational platforms.

Basic API Workflow Example

```python
import requests

# 1. Speech-to-Text
with open("user_audio.wav", "rb") as audio_file:
    stt_response = requests.post(
        "https://api.deepgram.com/v1/listen",
        headers={
            "Authorization": "Token YOUR_DEEPGRAM_API_KEY",
            "Content-Type": "audio/wav",  # tells Deepgram the raw body is WAV audio
        },
        data=audio_file,
    )
user_text = stt_response.json()["results"]["channels"][0]["alternatives"][0]["transcript"]

# 2. LLM Processing
llm_response = requests.post(
    "https://api.openai.com/v1/chat/completions",
    headers={"Authorization": "Bearer YOUR_OPENAI_KEY"},
    json={
        "model": "gpt-4o",
        "messages": [{"role": "user", "content": user_text}],
    },
)
llm_output = llm_response.json()["choices"][0]["message"]["content"]

# 3. TTS Output
# The last path segment is a voice ID from your ElevenLabs account.
tts_response = requests.post(
    "https://api.elevenlabs.io/v1/text-to-speech/YOUR_VOICE_ID",
    headers={"xi-api-key": "YOUR_ELEVENLABS_API_KEY"},
    json={"text": llm_output},
)
# ElevenLabs returns MP3 audio by default, so save with an .mp3 extension.
with open("response_audio.mp3", "wb") as f:
    f.write(tts_response.content)
```

Error Handling & Hallucination Mitigation

- Prompt chaining: Use multiple, focused prompts to guide LLM responses.

- RAG (Retrieval Augmented Generation): Pulls knowledge from trusted vector databases, reducing hallucinations.

- Validation: Post-process LLM output for accuracy and appropriateness.

- Fallbacks: Detect and recover from errors at each step (STT, LLM, TTS).
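The fallback and validation points can be illustrated with a small wrapper around any pipeline step. This is a sketch under stated assumptions: `with_fallback`, `validate_reply`, the retry count, and the 600-character limit are hypothetical choices, and `flaky_llm` simulates a request that times out once before succeeding.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_fallback(step: Callable[[], T], fallback: T, retries: int = 2, delay_s: float = 0.0) -> T:
    """Run a pipeline step, retry on failure, and return a safe fallback if all attempts fail."""
    for attempt in range(retries + 1):
        try:
            return step()
        except Exception as exc:  # in production, catch the client's specific error types
            print(f"attempt {attempt + 1} failed: {exc}")
            time.sleep(delay_s)
    return fallback


def validate_reply(reply: str, max_chars: int = 600) -> str:
    """Reject empty or overly long LLM output before it reaches TTS."""
    reply = reply.strip()
    if not reply or len(reply) > max_chars:
        return "Sorry, I didn't catch that. Could you repeat it?"
    return reply


calls = {"count": 0}


def flaky_llm() -> str:
    """Simulated LLM call that fails on the first attempt."""
    calls["count"] += 1
    if calls["count"] < 2:
        raise TimeoutError("LLM request timed out")
    return "  Your appointment is confirmed.  "


reply = validate_reply(with_fallback(flaky_llm, fallback=""))
```

Because an empty fallback fails validation, a step that never succeeds still produces a spoken apology instead of silence.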

Step-by-Step Implementation Guide

1. Setting Up Speech Recognition (Whisper/Deepgram)

Here’s how to integrate OpenAI Whisper or Deepgram for speech-to-text in your voice agent LLM connection:

Deepgram example:

```python
import requests

headers = {
    "Authorization": "Token YOUR_DEEPGRAM_API_KEY",
    "Content-Type": "audio/wav",  # tells Deepgram the raw body is WAV audio
}

# Stream the file as the request body and close it afterwards.
with open("input.wav", "rb") as audio_file:
    response = requests.post(
        "https://api.deepgram.com/v1/listen",
        headers=headers,
        data=audio_file,
    )
response.raise_for_status()
result = response.json()
print(result["results"]["channels"][0]["alternatives"][0]["transcript"])
```

Whisper (using OpenAI API):

```python
from openai import OpenAI  # openai>=1.0 client interface

client = OpenAI(api_key="YOUR_OPENAI_API_KEY")

with open("input.wav", "rb") as audio_file:
    transcript = client.audio.transcriptions.create(
        model="whisper-1",
        file=audio_file,
    )
print(transcript.text)
```

2. Connecting to an LLM (OpenAI, Claude, Llama 3)

OpenAI GPT-4o API call:

```python
import requests

headers = {"Authorization": "Bearer YOUR_OPENAI_KEY"}
data = {
    "model": "gpt-4o",
    # In a voice agent, "content" carries the transcript produced by the STT step.
    "messages": [{"role": "user", "content": "How can I help you today?"}],
}
response = requests.post("https://api.openai.com/v1/chat/completions", headers=headers, json=data)
response.raise_for_status()
print(response.json()["choices"][0]["message"]["content"])
```

Claude API (Anthropic):

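```python
import requests

headers = {
    "x-api-key": "YOUR_CLAUDE_API_KEY",
    "anthropic-version": "2023-06-01",  # required by the Messages API
}
data = {
    # Substitute a model that Anthropic currently lists as available.
    "model": "claude-3-sonnet-20240229",
    "max_tokens": 1024,  # required by the Messages API
    "messages": [{"role": "user", "content": "What's the weather?"}],
}
response = requests.post("https://api.anthropic.com/v1/messages", headers=headers, json=data)
response.raise_for_status()
print(response.json()["content"][0]["text"])
```

3. Text-to-Speech Output (TTS)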

ElevenLabs TTS API example:

```python
import requests

# The last path segment is a voice ID from your ElevenLabs account.
tts_url = "https://api.elevenlabs.io/v1/text-to-speech/YOUR_VOICE_ID"
tts_headers = {"xi-api-key": "YOUR_ELEVENLABS_API_KEY"}
tts_data = {"text": "Hello, this is a test response from your voice agent."}

response = requests.post(tts_url, headers=tts_headers, json=tts_data)
response.raise_for_status()

# ElevenLabs returns MP3 audio by default, so save with an .mp3 extension.
with open("output.mp3", "wb") as f:
    f.write(response.content)
```

4. Orchestrating the Workflow (LangChain/Voiceflow)

LangChain chain example:

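```python
from langchain_core.runnables import RunnableLambda
from langchain_openai import ChatOpenAI

llm = ChatOpenAI(model="gpt-4o", api_key="YOUR_OPENAI_API_KEY")

# Define chain steps: STT -> LLM -> TTS
stt_step = ...  # Your STT function: audio path -> transcript text
tts_step = ...  # Your TTS function: reply text -> audio
# The chat model returns a message object, so extract its text for TTS.
llm_step = llm | RunnableLambda(lambda message: message.content)

# The pipe operator composes plain functions and the model into one runnable.
chain = RunnableLambda(stt_step) | llm_step | RunnableLambda(tts_step)
result = chain.invoke("user_audio.wav")
```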

Voiceflow webhook integration:

```python
import requests

def webhook_handler(audio_url):
    # Download audio from Voiceflow
    audio = requests.get(audio_url).content
    # Pass to STT, LLM, TTS as above
    ...
```

If you're ready to start building and testing your own conversational AI solution, [try it for free](https://www.videosdk.live/signup?utm_source=mcp-publisher&utm_medium=blog&utm_content=blog_internal_link&utm_campaign=voice-agent-llm-connection) and explore the available APIs and SDKs.

### 5. Deploying and Testing the Voice Agent

- **Containerize** the workflow using Docker for repeatable deployments.

- **Test end-to-end**: Simulate real user calls, monitor latency and accuracy.

- **Integrate monitoring**: Log errors and performance at each stage (STT, LLM, TTS).

- **Iterate**: Refine prompts, tune agent personality, and scale infrastructure as needed.
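Per-stage monitoring can start with something as simple as a timing context manager. Here is a minimal sketch, assuming wall-clock time is the metric of interest; `timed` and the `timings` dictionary are hypothetical names, and `time.sleep` stands in for the real STT, LLM, and TTS calls.

```python
import time
from contextlib import contextmanager

timings: dict[str, float] = {}


@contextmanager
def timed(stage: str):
    """Record the wall-clock duration of a pipeline stage in milliseconds."""
    start = time.perf_counter()
    try:
        yield
    finally:
        # Recorded even if the stage raises, so failures still show up in the log.
        timings[stage] = (time.perf_counter() - start) * 1000


# time.sleep stands in for real STT/LLM/TTS calls.
with timed("stt"):
    time.sleep(0.01)
with timed("llm"):
    time.sleep(0.02)
with timed("tts"):
    time.sleep(0.01)

total_ms = sum(timings.values())
for stage, ms in timings.items():
    print(f"{stage}: {ms:.1f} ms ({ms / total_ms:.0%} of turn)")
```

Logging the share of each stage per turn shows quickly whether STT, the LLM, or TTS dominates end-to-end latency.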

## Best Practices for Voice Agent LLM Connection

- **Security & Privacy**: Encrypt all data in transit, anonymize sensitive information, and comply with regulations (GDPR, HIPAA).

- **Latency Optimization**: Use real-time STT, stream processing, and regional endpoints to minimize lag.

- **Agent Personalization**: Customize prompts, fine-tune LLMs, and use TTS voices that match your brand.

- **Scalability**: Leverage container orchestration (Kubernetes), auto-scaling, and serverless endpoints for enterprise workloads.
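As one illustration of anonymizing sensitive information, transcripts can be redacted before they are written to logs. The sketch below assumes regex-based matching is acceptable for a first pass; the two patterns and the `redact` helper are hypothetical and deliberately simple, so they should not be treated as sufficient for GDPR or HIPAA compliance on their own.

```python
import re

# Illustrative patterns only; real deployments need locale-aware, audited rules.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(transcript: str) -> str:
    """Replace matched spans with a placeholder tag before the text is stored."""
    for label, pattern in PII_PATTERNS.items():
        transcript = pattern.sub(f"[{label}]", transcript)
    return transcript


logged = redact("Call me at +1 415 555 0100 or email jane.doe@example.com please.")
```

Redacting at the logging boundary keeps the live conversation intact while limiting what persists in storage.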

## Advanced Use Cases and Future Trends

- **Multimodal Agents**: Integrate computer vision and context awareness alongside voice and text for richer agents.

- **Enterprise Adoption**: Voice agent LLM connections are powering helpdesks, IVRs, and intelligent process automation across industries.

- **Open Source Evolution**: Whisper, Llama 3, and Hugging Face models are making private, customizable deployments easier and more cost-effective.

## Conclusion

The voice agent LLM connection is transforming conversational AI, enabling natural, scalable, and secure human-computer interaction. By mastering these architectures and best practices in 2025, developers can deliver next-generation voice-powered solutions. Start with robust APIs, focus on user experience, and keep iterating; your users will thank you.


FAQ