Introduction to LLM in AI Voice Agents

Large Language Models (LLMs) have revolutionized the field of artificial intelligence by enabling machines to understand and generate human-like language. As we move further into 2025, the intersection of LLMs and AI voice agents is shaping how we interact with technology, bringing unprecedented levels of conversational intelligence to digital assistants. LLMs, such as OpenAI’s GPT series and Meta’s Llama, are now at the core of advanced voice assistants, powering real-time, context-aware, and emotionally intelligent interactions.

The importance of integrating LLMs into AI voice agents extends across industries, from customer support automation to sales enablement and beyond. These systems bridge natural language processing (NLP) with speech interfaces, making devices and services more accessible, efficient, and human-centric. This blog post explores the underlying technologies, architecture, and business impact of LLM-powered voice agents, providing a technical deep dive for developers and technology leaders.

What Are AI Voice Agents Powered by LLMs?

AI voice agents are software entities that interact with users through spoken language, understanding commands, answering questions, and performing tasks. Traditionally, such agents relied on fixed, rule-based NLP pipelines and limited contextual awareness. With the arrival of LLMs in AI voice agents, the capabilities of conversational AI have expanded dramatically.

To facilitate these advanced capabilities, developers often leverage a Voice SDK to integrate real-time audio processing and communication features into their applications, ensuring seamless and high-quality voice interactions.

Unlike older voice assistants, LLM-powered voice agents leverage large-scale neural networks trained on diverse language data, enabling:

- Advanced context retention across long conversations

- Nuanced understanding of intent and emotion

- Dynamic, human-like dialogue generation

The key distinction is that LLMs can handle open-ended queries, multi-turn dialogues, and even inject personality into responses. This enables a shift from simple command-and-control interfaces to engaging, adaptive agents capable of complex business tasks. In 2025, LLM-powered voice agents drive next-generation applications in customer support, sales, and multilingual communication, making them essential in modern enterprise and consumer technology stacks.

Core Technologies: LLMs, Speech-to-Text, and Text-to-Speech

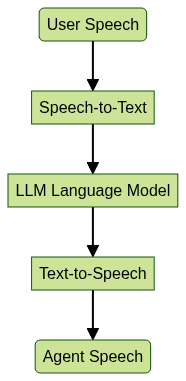

At the heart of every LLM-powered voice agent are three core technologies:

1. Large Language Models (LLMs)

LLMs, like GPT-4 or Llama 3.1, provide deep context understanding, intent recognition, and natural language generation. These models are pre-trained on vast text corpora and fine-tuned for specific domains or tasks.

2. Speech-to-Text (STT)

Speech-to-text engines convert spoken input into text. Modern STT systems use deep neural networks to achieve high accuracy across accents, languages, and noisy environments.

3. Text-to-Speech (TTS)

Text-to-speech systems synthesize natural-sounding audio from generated text, allowing the agent to "speak" with various voices, languages, and emotional tones.

For developers working with Python, integrating a Python video and audio calling SDK can streamline the process of adding both audio and video communication features to AI voice agents, enhancing their versatility.

Together, these components enable real-time, multi-modal interaction. This pipeline, illustrated above, is the foundation of every LLM-powered voice agent. Frameworks like Pipecat and LlamaIndex provide abstractions and SDKs for integrating these technologies.

Architecture of LLM in AI Voice Agents

A robust architecture for an LLM-powered voice agent involves multiple components working together to deliver fast, accurate, and contextually relevant responses. Let’s break down the end-to-end workflow from user speech input to agent reply.

For real-time communication, many teams choose a Voice SDK to handle live audio streaming, which is crucial for maintaining low latency and high-quality user experiences in conversational AI.

Workflow: From User Speech to Agent Response

- Audio Input: User speaks into a microphone.

- Speech-to-Text: Audio is transcribed via an STT engine (e.g., Whisper, Google STT).

- Contextual Processing: The transcribed text, along with session history or metadata, is fed to the LLM.

- Language Model Processing: The LLM analyzes input, maintains conversation history, and generates a response.

- Text-to-Speech: The LLM’s output is converted to audio using TTS (e.g., ElevenLabs, Amazon Polly).

- Audio Output: The synthesized speech is played back to the user.

Chained vs. Real-Time Architectures

- Chained Architecture: Each component executes sequentially, suitable for batch or non-time-sensitive scenarios.

- Real-Time Architecture: Components run concurrently and optimize for low latency—essential for natural dialog flow.
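
The contrast between the two styles can be sketched with `asyncio`. This is a minimal illustration, not a production design: `fake_stt`, `fake_llm`, and `fake_tts` are hypothetical stand-ins that only sleep and transform strings. The chained version finishes each stage for all input before starting the next, while the streaming version connects the stages with queues so a later stage can start as soon as the first chunk arrives.

```python
import asyncio

# Hypothetical stand-ins for real STT, LLM, and TTS calls; each one only
# simulates work with a short sleep.
async def fake_stt(chunk: str) -> str:
    await asyncio.sleep(0.01)
    return chunk.lower()

async def fake_llm(text: str) -> str:
    await asyncio.sleep(0.01)
    return f"reply to {text}"

async def fake_tts(text: str) -> str:
    await asyncio.sleep(0.01)
    return f"<audio:{text}>"

async def chained(chunks: list[str]) -> list[str]:
    """Finish each stage for every chunk before the next stage starts."""
    texts = [await fake_stt(c) for c in chunks]
    replies = [await fake_llm(t) for t in texts]
    return [await fake_tts(r) for r in replies]

async def stage(func, inbox: asyncio.Queue, outbox: asyncio.Queue) -> None:
    """Process items from inbox into outbox; None marks the end of the stream."""
    while (item := await inbox.get()) is not None:
        await outbox.put(await func(item))
    await outbox.put(None)

async def streaming(chunks: list[str]) -> list[str]:
    """Run the three stages concurrently, connected by queues."""
    q_audio, q_text, q_reply, q_out = (asyncio.Queue() for _ in range(4))
    workers = [
        asyncio.create_task(stage(fake_stt, q_audio, q_text)),
        asyncio.create_task(stage(fake_llm, q_text, q_reply)),
        asyncio.create_task(stage(fake_tts, q_reply, q_out)),
    ]
    for chunk in chunks:
        await q_audio.put(chunk)
    await q_audio.put(None)
    results = []
    while (item := await q_out.get()) is not None:
        results.append(item)
    await asyncio.gather(*workers)
    return results
```

Both functions return the same results in the same order; the difference is when the first piece of audio becomes available, which is what matters for natural dialog flow.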

When building scalable solutions, integrating a phone call API can enable your AI voice agents to make and receive calls, further expanding their reach and utility.

Frameworks: Pipecat and LlamaIndex

Pipecat offers modular pipelines for chaining STT, LLM, and TTS modules, while LlamaIndex provides context retrieval and agentic workflow management. These frameworks simplify the integration and scaling of LLM-powered voice agents.

Python Pseudo-Code: LLM Voice Agent Pipeline

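```python
import pyttsx3
import speech_recognition as sr
from openai import OpenAI


def llm_voice_agent():
    recognizer = sr.Recognizer()
    # pyttsx3 serves as a simple offline TTS engine here; swap in
    # ElevenLabs, Amazon Polly, or another TTS SDK as needed.
    tts_engine = pyttsx3.init()
    llm = OpenAI(api_key="YOUR_API_KEY")

    # Capture one utterance from the default microphone.
    with sr.Microphone() as source:
        print("Listening...")
        audio = recognizer.listen(source)

    # Speech-to-text via the Google Web Speech API.
    text = recognizer.recognize_google(audio)

    # Send the transcript to the LLM and read back the reply text.
    completion = llm.chat.completions.create(
        model="gpt-4",
        messages=[{"role": "user", "content": text}],
    )
    response = completion.choices[0].message.content

    # Speak the reply.
    tts_engine.say(response)
    tts_engine.runAndWait()


if __name__ == "__main__":
    llm_voice_agent()
```

This pipeline demonstrates how speech is transcribed, processed by the LLM, and synthesized back to speech, the three core steps of an LLM-powered voice agent.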

Key Features and Capabilities

Modern LLM-powered voice agents offer a robust set of features that surpass traditional assistants.

For developers looking to add video capabilities alongside voice, a Video Calling API can be seamlessly integrated, allowing for richer, multi-modal user interactions within your AI-powered applications.

Conversational Intelligence and Context Retention

LLMs can reference earlier conversation turns, recall user preferences, and maintain coherent dialogues over long interactions. This is critical for customer support, technical troubleshooting, and personal assistants.
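
One common way to get this behavior is to resend recent turns with every request. The sketch below is a minimal, assumed design rather than any framework's API: `ConversationMemory` and `max_messages` are hypothetical names, and the window size should be tuned to the model's context limit.

```python
from dataclasses import dataclass, field


@dataclass
class ConversationMemory:
    """Keeps a system prompt plus the most recent messages of a dialogue."""
    system_prompt: str
    max_messages: int = 10  # assumed budget; tune to the model's context window
    messages: list[dict] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})
        # Drop the oldest messages once the window is exceeded.
        if len(self.messages) > self.max_messages:
            self.messages = self.messages[-self.max_messages:]

    def as_messages(self) -> list[dict]:
        """Return the history in the role/content shape chat APIs commonly use."""
        return [{"role": "system", "content": self.system_prompt}, *self.messages]
```

A sliding window is the simplest policy; summarizing older turns or retrieving them from a store are alternatives when conversations run long.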

Multi-Agent Collaboration and Role Assignment

With frameworks like Pipecat and LlamaIndex, developers can create multi-agent systems where specialized agents handle different tasks (e.g., scheduling, sales, support), collaborating to deliver unified experiences.
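
Role assignment can be pictured as a router in front of specialized handlers. The sketch below is framework-independent and does not use Pipecat or LlamaIndex APIs; the agent functions and keyword lists are hypothetical, and keyword matching only keeps the example self-contained.

```python
from typing import Callable

Agent = Callable[[str], str]


def scheduling_agent(utterance: str) -> str:
    return "scheduling: let's find a time that works."


def sales_agent(utterance: str) -> str:
    return "sales: here is our pricing overview."


def support_agent(utterance: str) -> str:
    return "support: let's troubleshoot that together."


# Keyword routing keeps the sketch self-contained; a real system would more
# likely ask an LLM or a classifier to pick the role.
ROUTES: list[tuple[tuple[str, ...], Agent]] = [
    (("book", "schedule", "appointment"), scheduling_agent),
    (("price", "pricing", "quote", "buy"), sales_agent),
]


def route(utterance: str) -> str:
    """Send the utterance to the first agent whose keywords match."""
    lowered = utterance.lower()
    for keywords, agent in ROUTES:
        if any(word in lowered for word in keywords):
            return agent(utterance)
    return support_agent(utterance)  # default role
```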

To further streamline deployment, you can embed video calling SDK modules directly into your application, minimizing development time and ensuring robust audio-video communication.

Emotional and Personality-Driven Responses

LLM-powered voice agents can now generate emotionally aware and personality-rich responses. They adjust tone, style, and even humor based on user sentiment or business requirements.
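
In practice, much of this tone control is done through the system prompt. The helper below is a minimal sketch: `build_system_prompt` is a hypothetical function, and the sentiment label is assumed to come from an upstream classifier that is not shown here.

```python
def build_system_prompt(persona: str, sentiment: str) -> str:
    """Compose a system prompt that sets a persona and adapts tone to sentiment."""
    tone_by_sentiment = {
        "frustrated": "Acknowledge the frustration, apologize briefly, and be concise.",
        "happy": "Match the upbeat mood; light humor is fine.",
        "neutral": "Keep a friendly, professional tone.",
    }
    # Unknown labels fall back to the neutral tone.
    tone = tone_by_sentiment.get(sentiment, tone_by_sentiment["neutral"])
    return (
        f"You are {persona}. {tone} "
        "Replies will be spoken aloud, so keep sentences short."
    )
```

Regenerating the prompt each turn lets the agent shift tone as the detected sentiment changes during a call.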

Multilingual and Domain-Specific Adaptations

Advanced voice agents support multiple languages and can be fine-tuned for industry-specific jargon, regulatory compliance, or local customs. Tools like OpenAI Voice Mode and multilingual TTS/STT models make global deployments seamless.

Implementation: Building Your Own LLM-Powered Voice Agent

Building an LLM-powered voice agent requires orchestrating several technologies and frameworks. Here’s a step-by-step guide for developers:

When integrating voice features, utilizing a Voice SDK can help you quickly add robust, scalable audio capabilities to your AI agent, supporting both live and on-demand use cases.

1. Select Core Components

- LLM: Choose GPT-4, Llama 3.1, or other models via APIs

- STT/TTS: Integrate Whisper, Google STT, ElevenLabs, or Amazon Polly

- Frameworks: Use Pipecat, LlamaIndex, or OpenAI Voice Mode SDKs

2. Set Up the Pipeline

- Connect microphone input to STT engine

- Pipe transcribed text to the LLM

- Generate response and synthesize to speech
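
The three connections above can be sketched as a small class with pluggable stages. `VoicePipeline` and its field names are hypothetical, and the stub stages exist only so the wiring can be exercised without a microphone, network access, or API keys.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoicePipeline:
    """Wires three pluggable stages: audio -> text -> reply text -> audio."""
    transcribe: Callable[[bytes], str]
    generate: Callable[[str], str]
    synthesize: Callable[[str], bytes]

    def handle(self, audio_in: bytes) -> bytes:
        text = self.transcribe(audio_in)
        reply = self.generate(text)
        return self.synthesize(reply)


# Stub stages for a dry run; replace each with a real STT, LLM, or TTS call.
pipeline = VoicePipeline(
    transcribe=lambda audio: audio.decode("utf-8"),
    generate=lambda text: f"You said: {text}",
    synthesize=lambda reply: reply.encode("utf-8"),
)
```

Keeping each stage behind a plain callable makes it straightforward to swap providers or to test the orchestration separately from the engines.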

If your application requires telephony integration, a phone call API can enable your AI voice agent to interact with users over traditional phone networks, expanding accessibility.

3. Integrate with Business Applications

- Use APIs or SDKs to connect your agent to CRM, ticketing systems, or SaaS products

- Automate workflows: customer support, lead qualification, appointment scheduling
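
One way to connect an agent to business systems is to have the LLM emit a structured action request that your code validates and dispatches. The sketch below assumes the model has been prompted to reply with JSON of the form `{"action": ..., "args": {...}}`; `create_ticket` and `book_appointment` are hypothetical stand-ins for real CRM or ticketing API calls.

```python
import json


def create_ticket(subject: str) -> dict:
    # Hypothetical stand-in for a ticketing-system API call.
    return {"status": "created", "subject": subject}


def book_appointment(date: str) -> dict:
    # Hypothetical stand-in for a calendar or CRM API call.
    return {"status": "booked", "date": date}


ACTIONS = {"create_ticket": create_ticket, "book_appointment": book_appointment}


def dispatch(llm_output: str) -> dict:
    """Parse a JSON action request and call the matching business function."""
    try:
        request = json.loads(llm_output)
        handler = ACTIONS[request["action"]]
        return handler(**request.get("args", {}))
    except (json.JSONDecodeError, KeyError, TypeError):
        # Malformed JSON, an unknown action, or wrong arguments.
        return {"status": "error", "reason": "unrecognized action request"}
```

Restricting the model to an allow-list of actions keeps unexpected output from triggering arbitrary operations.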

4. Address Challenges and Best Practices

- Latency: Optimize for real-time response with asynchronous processing

- Context: Store conversation history for continuity

- Privacy: Encrypt user data and manage consent

- Testing: Simulate edge cases and monitor for hallucinations or bias
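
For the latency point in particular, it helps to measure each stage before optimizing. The helper below is a minimal sketch; `timed`, `timings`, and `over_budget` are hypothetical names, and any budget values you pass in are your own assumptions rather than recommended targets.

```python
import time
from contextlib import contextmanager

timings: dict[str, float] = {}


@contextmanager
def timed(stage: str):
    """Record how long the wrapped block takes, in milliseconds."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[stage] = (time.perf_counter() - start) * 1000


def over_budget(budget_ms: dict[str, float]) -> list[str]:
    """Return the stages whose measured time exceeds their budget."""
    return [
        stage
        for stage, ms in timings.items()
        if ms > budget_ms.get(stage, float("inf"))
    ]
```

Wrapping each stage call, for example `with timed("stt"): ...`, shows which of STT, LLM, or TTS dominates the response time.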

5. Real-World Examples

- Leia: Multilingual AI assistant for enterprise knowledge retrieval

- OpenAI Voice Mode: Real-time, conversational voice with personality

- Llama 3.1: Open-source LLM for custom voice agent deployment

Sample Python Implementation with Pipecat

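```python
# Illustrative sketch: the module path and class names below are
# placeholders for Pipecat's STT, LLM, and TTS services and may not match
# the installed version; check the Pipecat documentation for exact imports.
import pipecat
from pipecat.modules import WhisperSTT, LlamaLLM, ElevenLabsTTS


def build_voice_agent():
    # Chain the stages in order: speech-to-text, language model, text-to-speech.
    pipeline = pipecat.Pipeline([
        WhisperSTT(),
        LlamaLLM(model_path="/models/llama-3.1"),
        ElevenLabsTTS(api_key="ELEVENLABS_API_KEY"),
    ])
    pipeline.run()


if __name__ == "__main__":
    build_voice_agent()
```

This code shows how an LLM voice agent pipeline can be assembled from modular STT, LLM, and TTS stages with Pipecat in Python. Treat the imports and class names as illustrative and confirm them against the Pipecat release you install.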

Use Cases and Business Impact

LLM-powered voice agents are transforming business operations across industries. Key applications include:

- Customer Support: 24/7 multilingual agents resolving tickets and handling escalations

- Sales Automation: Proactive lead qualification and personalized outreach

- Language Learning: Interactive, adaptive conversation practice

- Scheduling: Voice-driven calendar and task management

For businesses aiming to deploy voice solutions at scale, a Voice SDK provides the infrastructure to support high-concurrency, reliable audio interactions across a variety of platforms.

Case studies report up to a 40% reduction in support costs and a 3x increase in customer satisfaction with LLM-powered voice agents. Performance metrics show improved accuracy, faster resolution, and seamless multilingual support.

Challenges, Ethics, and Future Directions

Despite their promise, LLM-powered voice agents present challenges:

- Privacy & Security: Voice data is sensitive; encryption and strict access controls are vital

- Ethical AI: Avoiding bias, respecting user consent, and transparent operation are musts

- Latency: Real-time expectations require optimized pipelines
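
On the privacy side, one small, concrete measure is to mask obvious identifiers before transcripts are logged or stored. The patterns below are a rough sketch and an assumption of this example; they will miss many formats and do not replace encryption, access controls, or a proper PII detection service.

```python
import re

# Simple patterns for illustration; real deployments need broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(transcript: str) -> str:
    """Mask emails and phone-like digit runs before a transcript is stored."""
    transcript = EMAIL.sub("[EMAIL]", transcript)
    return PHONE.sub("[PHONE]", transcript)
```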

Looking ahead, trends for 2025 include:

- Greater emotional intelligence and context awareness

- Self-learning agents that adapt in real time

- Widespread adoption in regulated industries (finance, health)

Conclusion

The evolution of LLM-powered voice agents opens new horizons for conversational AI. By combining deep language understanding, real-time speech processing, and agentic workflows, developers can build human-like voice assistants that drive business value and user satisfaction. Now is the time to explore, implement, and innovate with LLMs in AI voice agents to stay ahead in 2025 and beyond.

Try it for free and experience the next generation of AI-powered voice solutions.
