Introduction to LLMs for Real-Time Conversation

Large Language Models (LLMs) have revolutionized the landscape of conversational AI, enabling machines to understand and generate human-like text at scale. As we move into 2025, the demand for real-time conversation with LLMs is accelerating, driven by applications such as customer support, AI assistants, and live translation. Real-time conversational AI requires not just intelligence, but also speed, scalability, and contextual awareness. In this article, we’ll explore how LLMs are architected and deployed for real-time use, examine leading models and platforms, discuss implementation challenges, and share practical code and architecture patterns for developers.

What is Real-Time Conversation with LLMs?

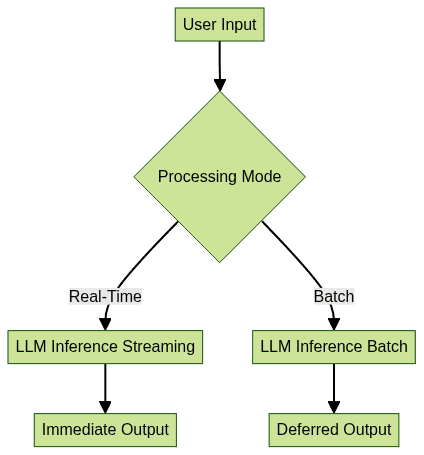

Real-time conversation with LLMs refers to interactive dialogue systems where responses are generated almost instantaneously—typically within a few hundred milliseconds—to ensure a fluid user experience. Unlike traditional batch processing, where requests are queued and processed in bulk (often suitable for data analysis or document summarization), real-time LLMs prioritize minimal latency and high throughput to handle dynamic, multi-turn interactions.

Key requirements for real-time LLM applications include:

- Low Latency: Responses must be generated quickly to maintain conversational flow

- High Throughput: The system must handle many concurrent conversations without bottlenecks

- Robust Context Handling: Maintaining dialogue context across multiple turns

The diagram above contrasts real-time and batch LLM pipelines: real-time flows process input immediately, while batch flows process inputs in groups, introducing delays.
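
To make the latency requirement more concrete, the sketch below measures time to first token (TTFT) and overall token rate for any token stream. It is an illustration only: `fake_token_stream` is a hypothetical stand-in for a real streaming client, and its delay values are made up.

```python
import time
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class StreamStats:
    first_token_s: float  # time until the first token arrived (TTFT)
    total_s: float        # time until the stream finished
    tokens: int           # number of tokens received

    @property
    def tokens_per_second(self) -> float:
        return self.tokens / self.total_s if self.total_s > 0 else 0.0


def measure_stream(stream: Iterable[str]) -> StreamStats:
    """Consume a token stream and record simple latency metrics."""
    start = time.perf_counter()
    first = None
    count = 0
    for _ in stream:
        if first is None:
            first = time.perf_counter() - start
        count += 1
    total = time.perf_counter() - start
    # An empty stream has no first token; report the total time instead.
    return StreamStats(first if first is not None else total, total, count)


def fake_token_stream(n_tokens: int = 5, delay_s: float = 0.01) -> Iterator[str]:
    """Hypothetical model stand-in: yields one token every `delay_s` seconds."""
    for i in range(n_tokens):
        time.sleep(delay_s)
        yield f"tok{i} "


if __name__ == "__main__":
    stats = measure_stream(fake_token_stream())
    print(f"TTFT: {stats.first_token_s * 1000:.1f} ms, "
          f"{stats.tokens_per_second:.0f} tokens/s over {stats.tokens} tokens")
```

In a real deployment the same wrapper could be placed around the streaming client's iterator, since TTFT is usually the number users perceive as responsiveness.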

Key Models and Platforms for Real-Time Conversational LLMs

Llama Family (Llama 3, Llama 4) and Their Capabilities

The Llama family (Meta’s open-weights LLMs) has become a cornerstone for real-time conversational AI. Llama 3.1 and later releases support context windows of up to 128K tokens, and the Llama 4 models advertise considerably larger ones, permitting longer conversations without context loss. Variants such as Llama 3.1, 3.2, 3.3, 4 Maverick, and 4 Scout offer improvements in speed, memory efficiency, and conversational coherence. When served through a streaming-capable inference stack, these models can emit tokens as soon as they’re generated, reducing perceived latency. Llama models are widely adopted for virtual assistants, chatbots, and custom AI apps due to their openly available weights, high-performance inference, and robust community support. For developers building interactive applications, integrating a Live Streaming API SDK can enable seamless real-time video and audio experiences alongside LLM-powered chat.

Claude and Bedrock Converse

Anthropic’s Claude models, available via Amazon Bedrock Converse, are engineered for multi-turn conversations and streaming API access. They support large context windows (up to 200K tokens) and are designed for safe, context-aware dialogue. Bedrock Converse provides scalable, managed infrastructure for deploying Claude in real-time applications, with built-in support for streaming outputs, latency monitoring, and multi-user sessions. For applications that require real-time voice communication, integrating a Voice SDK can enhance conversational AI with high-quality audio rooms and live audio features.

Other Notable Models: DeepSeek, Phi-4, Open-Source Alternatives

DeepSeek and Microsoft’s Phi-4 represent high-efficiency, open-source LLMs with rapid inference and competitive context window sizes. These models are increasingly used for edge deployments, privacy-sensitive applications, and environments with tight latency requirements. The open-source ecosystem continues to innovate on model compression, quantization, and context management, driving down the cost and hardware requirements for real-time LLM chat. For teams seeking to add real-time video communication, a Video Calling API can be integrated to support scalable, interactive video and audio conferencing within LLM-powered apps.

How Real-Time LLM Conversation Works

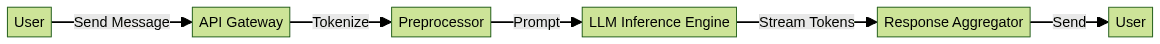

A real-time LLM conversation system typically follows these steps:

- User Input: Text is entered or spoken by the user

- Preprocessing: Input is tokenized and formatted

- Prompt Engineering: Context and instructions are structured for optimal model response

- Inference: The LLM processes input, often streaming tokens as they are generated

- Postprocessing: Output tokens are detokenized, formatted, and sent to the client

- Context Management: Conversation history is updated for the next turn

Streaming outputs allow partial responses to be sent as soon as the model generates each token, minimizing wait times. Robust context management ensures the model remembers previous dialogue turns, crucial for multi-turn conversations.
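
The steps above can be sketched as a single turn loop. This is a minimal illustration rather than a production design: `echo_model` is a hypothetical stand-in for a streaming LLM client, whitespace splitting stands in for a real tokenizer, and the `max_context_tokens` budget is an arbitrary example value.

```python
from typing import Callable, Iterator

Message = dict[str, str]  # {"role": "system" | "user" | "assistant", "content": "..."}


def count_tokens(text: str) -> int:
    """Crude token estimate (assumption): whitespace-separated words."""
    return len(text.split())


def echo_model(messages: list[Message]) -> Iterator[str]:
    """Hypothetical model: streams back the last message word by word."""
    for word in messages[-1]["content"].split():
        yield word + " "


class ChatSession:
    def __init__(self, model: Callable[[list[Message]], Iterator[str]],
                 system_prompt: str, max_context_tokens: int = 50):
        self.model = model
        self.system = {"role": "system", "content": system_prompt}
        self.history: list[Message] = []
        self.max_context_tokens = max_context_tokens

    def _build_prompt(self) -> list[Message]:
        """Keep the system prompt plus the newest turns that fit the budget."""
        budget = self.max_context_tokens - count_tokens(self.system["content"])
        kept: list[Message] = []
        for msg in reversed(self.history):
            cost = count_tokens(msg["content"])
            # Always keep the newest message; drop older ones once over budget.
            if kept and cost > budget:
                break
            kept.append(msg)
            budget -= cost
        return [self.system] + kept[::-1]

    def send(self, user_text: str) -> Iterator[str]:
        """Run one turn, yielding tokens to the caller as they are generated."""
        self.history.append({"role": "user", "content": user_text.strip()})
        chunks: list[str] = []
        for token in self.model(self._build_prompt()):
            chunks.append(token)
            yield token  # forward to the client immediately
        # Context management: store the full reply for the next turn.
        reply = "".join(chunks).strip()
        self.history.append({"role": "assistant", "content": reply})


if __name__ == "__main__":
    session = ChatSession(echo_model, system_prompt="You are a helpful assistant.")
    for token in session.send("hello there"):
        print(token, end="", flush=True)
```

Dropping the oldest turns is only one possible truncation policy; summarizing older turns instead would slot into `_build_prompt` in the same place.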

API integration is typically handled through HTTP-based endpoints. If you're building with Python, consider using a Python video and audio calling SDK to add real-time communication features to your conversational applications. Below is a Python example using the LlamaIndex library to connect with Bedrock Converse:

```python
# Requires the llama-index-llms-bedrock-converse package.
from llama_index.llms.bedrock_converse import BedrockConverse

# Initialize the Bedrock Converse LLM. AWS credentials are read from the
# environment; the model ID and region below are example values.
llm = BedrockConverse(
    model="anthropic.claude-3-haiku-20240307-v1:0",
    region_name="us-east-1",
)

# Send a prompt and stream the response
response = llm.stream_complete("Explain real-time LLM conversation in 2025.")

# Each chunk carries the newly generated text in its `delta` field
for chunk in response:
    print(chunk.delta, end="", flush=True)
```

Prompt engineering for real-time use focuses on concise, context-rich prompts to reduce token count and latency. For those developing in JavaScript, a JavaScript video and audio calling SDK can be leveraged to quickly embed video and audio calling into your LLM-driven web apps.

Implementation Challenges for LLMs in Real-Time Conversation

Latency and Infrastructure Considerations

Achieving low latency requires careful infrastructure planning. Decisions include whether to use cloud-based LLM APIs (e.g., Bedrock, Azure OpenAI) or deploy models on-premises/edge. Hardware accelerators (GPUs, TPUs) and techniques like model parallelization or quantization can dramatically improve inference speed. For rapid deployment, you can embed video calling SDK solutions to integrate real-time communication without extensive custom development.

Handling Long Conversations and Context Windows

LLMs have fixed context window limits. When conversations exceed these windows, strategies such as summarizing prior messages or using memory modules are necessary. Efficient memory management and truncation policies ensure the most relevant context is preserved without overloading the model. If you're building cross-platform apps, leveraging a React Native video and audio calling SDK can help deliver seamless real-time communication on both iOS and Android devices.

Privacy, Security, and Compliance

Real-time LLMs often process sensitive data. It is critical to implement encryption in transit and at rest, maintain strict access controls, and support compliance with regulations like GDPR. Some organizations opt for self-hosted LLMs to retain full control over data privacy and security boundaries. For developers interested in WebRTC-based solutions, Flutter WebRTC provides a robust framework for building secure, real-time audio and video communication in Flutter apps.

Optimizing LLMs for Real-Time Use

To maximize performance, developers employ several optimization strategies:

- Fine-tuning and Prompt Optimization: Tailoring models and prompts for target use cases

- Streaming Endpoints and Async Handling: Using APIs that support streaming and asynchronous processing for sub-second response times

- Caching and Batching: Caching frequent responses and batching similar requests to reduce compute load
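
As a rough illustration of asynchronous handling, the sketch below serves several conversations concurrently with `asyncio`, so a slow stream for one user does not block the others. `fake_stream` is a hypothetical async generator standing in for a streaming API client, and the delay is arbitrary.

```python
import asyncio
from typing import AsyncIterator


async def fake_stream(prompt: str, delay_s: float = 0.01) -> AsyncIterator[str]:
    """Hypothetical streaming client: yields one word at a time with a delay."""
    for word in prompt.split():
        await asyncio.sleep(delay_s)  # simulates network / generation time
        yield word + " "


async def handle_conversation(user_id: str, prompt: str) -> str:
    """Consume one user's stream; a real server would forward each token."""
    parts = []
    async for token in fake_stream(prompt):
        parts.append(token)  # e.g. push the token to a WebSocket here
    return f"{user_id}: {''.join(parts).strip()}"


async def serve_all(requests: dict[str, str]) -> list[str]:
    """Run all conversations concurrently and collect results in input order."""
    tasks = [handle_conversation(uid, p) for uid, p in requests.items()]
    return await asyncio.gather(*tasks)


if __name__ == "__main__":
    replies = asyncio.run(serve_all({"alice": "hi there", "bob": "good morning"}))
    print("\n".join(replies))
```

Because each `await` yields control to the event loop, total wall-clock time is governed by the slowest stream rather than the sum of all of them.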

Below is a Python snippet for streaming chat with LlamaIndex:

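```python
# Requires the llama-index-llms-llama-cpp package and a local GGUF model file.
from llama_index.llms.llama_cpp import LlamaCPP

llm = LlamaCPP(model_path="llama-4-maverick.gguf")

# stream_complete yields partial responses; `delta` holds the new text
response = llm.stream_complete("Describe scalable LLM chat architecture.")
for chunk in response:
    print(chunk.delta, end="", flush=True)
```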
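
Caching can be sketched in a similar spirit. The example below is a small exact-match response cache with a time-to-live. `ResponseCache`, `normalize`, and the `generate` callable are hypothetical names introduced for illustration, and a real system would likely need a more careful cache key (for instance, one that includes the system prompt and model name).

```python
import time
from typing import Callable


def normalize(prompt: str) -> str:
    """Collapse whitespace and case so trivially different prompts share a key."""
    return " ".join(prompt.lower().split())


class ResponseCache:
    def __init__(self, ttl_s: float = 300.0,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl_s = ttl_s
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._store: dict[str, tuple[float, str]] = {}
        self.hits = 0
        self.misses = 0

    def get_or_generate(self, prompt: str, generate: Callable[[str], str]) -> str:
        """Return a fresh cached reply if present, otherwise call `generate`."""
        key = normalize(prompt)
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and now - entry[0] < self.ttl_s:
            self.hits += 1
            return entry[1]
        self.misses += 1
        reply = generate(prompt)  # the expensive model call
        self._store[key] = (now, reply)
        return reply


if __name__ == "__main__":
    cache = ResponseCache(ttl_s=60)
    slow_model = lambda p: f"answer to: {p}"  # stand-in for a real LLM call
    cache.get_or_generate("What are your hours?", slow_model)
    cache.get_or_generate("what are your  hours?", slow_model)  # served from cache
    print(f"hits={cache.hits} misses={cache.misses}")
```

A cache like this suits frequent, context-free questions; replies that depend on earlier turns of a conversation are generally not safe to reuse across users.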

These optimizations are essential for production-grade, scalable LLM-powered chat systems. For businesses requiring telephony integration, a phone call API can be used to add voice calling capabilities directly into your AI-powered applications.

Use Cases and Applications

Real-time conversational LLMs are transforming a broad spectrum of industries:

- Customer Support Bots: Instant, context-aware responses for support queries

- Virtual Assistants: Scheduling, answering questions, and task automation

- Live Translation: Translating speech or text on-the-fly

- Interactive Tutoring: Personalized, real-time education

- Custom AI Chat Apps: Domain-specific assistants for healthcare, finance, and more

The flexibility and extensibility of LLMs enable rapid prototyping and deployment of unique conversational experiences tailored to specific business needs. If you're ready to build your own solution, you can try it for free and start experimenting with real-time communication APIs today.

Open Source vs Proprietary LLMs for Real-Time Conversation

Choosing between open-source and proprietary LLMs depends on several factors. Open-source models (like Llama, DeepSeek, and Phi-4) offer greater flexibility, customization, and lower cost, but require infrastructure management and technical expertise. Proprietary models (Claude via Bedrock, GPT-4 via Azure OpenAI) provide managed services, higher reliability, and support, at a higher cost and with less control over data and customization. The decision hinges on your team’s expertise, compliance needs, and budget.

Future Trends in Real-Time Conversational LLMs

As we look ahead to 2025, expect further expansion of context windows (enabling even longer conversations), improved latency through hardware and software advances, and richer multimodal capabilities (combining text, voice, and images). The pace of innovation will continue to unlock new real-time conversational use cases.

Conclusion

LLMs have become the engine behind real-time conversational AI. With the right models, architectures, and optimizations, developers can deliver fast, context-aware chat experiences. Start experimenting today and shape the future of human-AI interaction.


FAQ