Introduction to LLM for AI Voice Agent

In 2025, Large Language Models (LLMs) are at the core of the most advanced AI voice agents, driving a new era of conversational AI. Using an LLM for an AI voice agent means integrating a state-of-the-art natural language processing model with a voice-driven interface, enabling real-time, empathetic, and highly adaptive interactions. These LLM-powered agents are transforming industries by offering responsive support, scalable automation, and hyperrealistic voice experiences. As enterprises and developers seek to scale voice AI, the fusion of LLM technology with voice agents becomes essential for meeting user expectations in natural conversation, emotion modeling, and persistent agentic memory. This blog covers how LLMs are engineered into AI voice agents, their core use cases, technical architecture, implementation strategies, and the future of voice AI.

How LLMs Power Modern AI Voice Agents

What is a Large Language Model (LLM)?

A Large Language Model (LLM) is a deep learning model trained on massive datasets of text. LLMs like GPT-4 or Llama 3 excel at understanding, generating, and manipulating human language, making them ideal for conversational AI and voice agent applications.

Why Choose LLMs for Voice Agents?

LLMs enable AI voice agents to interpret natural speech, manage complex dialogues, and respond with contextually relevant, human-like language. Unlike traditional scripted bots, LLM-powered agents dynamically adapt, handle ambiguous queries, and maintain conversation flow, delivering a superior user experience across support, sales, and automation scenarios. For developers looking to build these capabilities, integrating a Voice SDK can streamline the process of adding real-time audio interactions to their applications.

Core Capabilities of LLMs in Voice Agent Applications

- Natural Language Understanding (NLU): LLMs parse speech inputs, decipher intent, extract entities, and manage ambiguous or colloquial language.

- Context Retention: They maintain context over multi-turn conversations, allowing persistent agentic memory and coherent dialogue across sessions.

- Multi-turn Dialogue: LLMs handle back-and-forth exchanges, ask clarifying questions, and adapt to evolving topics without losing track.

- Empathy and Adaptation: Through emotion modeling and semantic analysis, LLMs imbue voice agents with empathy, adapting tone or phrasing to suit user sentiment and intent.
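To make the context-retention point concrete, here is a minimal sketch of how a voice agent might keep a rolling window of recent turns to send with each LLM request. The class name `ConversationMemory` and the `max_turns` parameter are illustrative assumptions, not part of any particular SDK; persistence across sessions would additionally need external storage, which is not shown.

```python
from collections import deque
from typing import Deque, Dict, List


class ConversationMemory:
    """Hypothetical helper: keeps a system prompt plus the most recent turns."""

    def __init__(self, system_prompt: str, max_turns: int = 10) -> None:
        self.system_prompt = system_prompt
        # One turn = one user message + one assistant message,
        # so the deque holds at most max_turns * 2 messages.
        self._messages: Deque[Dict[str, str]] = deque(maxlen=max_turns * 2)

    def add_user(self, text: str) -> None:
        self._messages.append({"role": "user", "content": text})

    def add_assistant(self, text: str) -> None:
        self._messages.append({"role": "assistant", "content": text})

    def as_messages(self) -> List[Dict[str, str]]:
        """Return the message list to send with the next LLM request."""
        return [{"role": "system", "content": self.system_prompt}, *self._messages]


memory = ConversationMemory("You are a helpful phone assistant.", max_turns=2)
memory.add_user("I need to move my appointment.")
memory.add_assistant("Sure, which day works better for you?")
memory.add_user("Thursday afternoon.")
print(memory.as_messages())
```

Dropping the oldest messages is the simplest policy; summarizing older turns instead is a common alternative when long calls matter.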

By integrating these capabilities, LLMs empower AI voice agents with autonomy, flexibility, and the ability to scale voice AI across enterprise and consumer domains. Leveraging a

Video Calling API

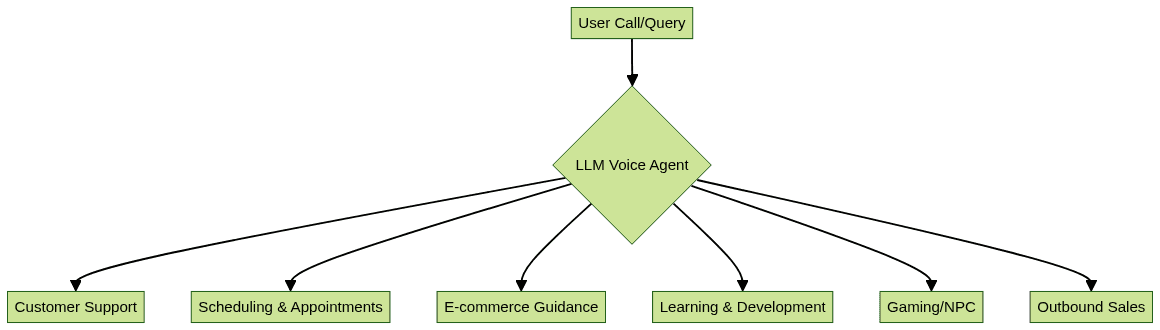

can further enhance these agents by enabling seamless transitions between voice and video conversations.Key Use Cases for LLM-Based Voice Agents

Customer Support

AI voice agents using LLMs automate inbound and outbound support, providing real-time answers, troubleshooting, and empathetic assistance. They integrate with CRMs, resolve tickets, and escalate complex cases to human agents. With persistent agentic memory and hyperrealistic voice avatars, users receive seamless, context-aware support experiences. For businesses aiming to implement these solutions, utilizing a robust phone call API ensures reliable and scalable telephony integration.

Inbound Scheduling & Appointments

LLM-powered voice agents manage inbound calls for appointment setting, calendar coordination, and real-time rescheduling. Their context retention ensures efficient handling of multi-turn scheduling dialogues, reducing human intervention and operational costs. Developers can simplify integration by adopting a Python video and audio calling SDK, which provides essential tools for building interactive voice applications in Python.

E-commerce and Sales

Conversational AI voice agents in e-commerce guide customers through product selection, answer queries, upsell items, and process transactions. Real-time LLM inference enables personalized recommendations and voice-driven checkout, increasing conversion rates and customer satisfaction. Embedding a video calling SDK can further support customer engagement by allowing instant video consultations within the shopping experience.

Learning & Development

Voice agents harnessing LLMs deliver interactive training, onboarding, and assessments. They adapt explanations to learner proficiency, support multimodal LLM integrations (text, voice, visuals), and offer instant feedback in language learning and professional development environments. For platforms targeting mobile users, integrating a React Native video and audio calling SDK ensures smooth voice and video interactions on both iOS and Android devices.

Gaming and NPC Interactions

In gaming, LLM-based voice agents create lifelike non-player characters (NPCs) with dynamic personalities and dialogue trees. Players engage in immersive, unscripted conversations, enhancing realism and narrative depth. Game developers can leverage a Voice SDK to implement real-time audio features that bring these NPCs to life.

Outbound Sales and Lead Qualification

Autonomous voice agents powered by LLMs conduct outbound sales calls, qualify leads, and capture data for CRM systems. Their empathetic AI interaction increases engagement, while automated follow-ups optimize pipeline efficiency. To maximize reach and engagement, integrating a Live Streaming API SDK can enable scalable, interactive outbound campaigns.

Architecture: Integrating LLM with Voice Agent Stack

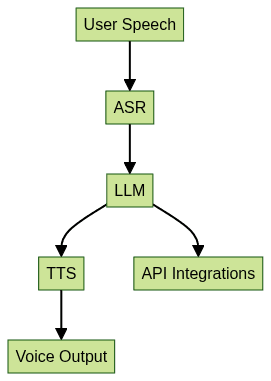

Core Components

A modern LLM voice agent stack contains:

- Automatic Speech Recognition (ASR): Transcribes raw speech into text

- LLM (NLP Core): Interprets and generates natural language responses

- Text-to-Speech (TTS): Converts LLM output into hyperrealistic, human-like voice

- API Integrations: Connects with CRMs, scheduling tools, or other backends for data retrieval and action execution

- Voice Agent APIs: Enable deployment and integration across platforms (Twilio, custom telephony, etc.)
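One way to picture how these components fit together is as three swappable stages behind a single interface. The sketch below is only an illustration under that assumption: `VoiceAgentPipeline` and the `fake_*` stand-ins are hypothetical names, and a real deployment would plug in actual ASR, LLM, and TTS clients.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoiceAgentPipeline:
    """Hypothetical wiring of the three core stages of a voice agent."""

    transcribe: Callable[[bytes], str]   # ASR: audio in, text out
    generate: Callable[[str], str]       # LLM: user text in, reply text out
    synthesize: Callable[[str], bytes]   # TTS: reply text in, audio out

    def handle_turn(self, audio_in: bytes) -> bytes:
        """Run one user utterance through ASR, the LLM, and TTS in order."""
        text = self.transcribe(audio_in)
        reply = self.generate(text)
        return self.synthesize(reply)


# Stand-in implementations so the sketch runs without external services.
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(text: str) -> str:
    return f"You said: {text}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


pipeline = VoiceAgentPipeline(fake_asr, fake_llm, fake_tts)
print(pipeline.handle_turn(b"hello"))
```

Keeping each stage behind a plain callable makes it easier to swap providers or benchmark one stage in isolation.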

For seamless integration of these components, a Voice SDK can provide the necessary APIs and infrastructure to support real-time voice interactions.

Workflow: From Speech Input to AI Response

When a user speaks, the workflow is:

- ASR transcribes speech to text

- The text is sent to the LLM

- The LLM generates a response

- TTS synthesizes the response into speech

- The voice agent returns the audio to the user

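```python
import speech_recognition as sr
import pyttsx3
from openai import OpenAI

# Requires openai>=1.0; the API key is read from the OPENAI_API_KEY environment variable.
client = OpenAI()


def llm_voice_agent_inference(audio_input):
    """Transcribe an audio file, ask the LLM for a reply, and speak it aloud."""
    # ASR: load the audio file (WAV, AIFF, or FLAC) and transcribe it.
    recognizer = sr.Recognizer()
    with sr.AudioFile(audio_input) as source:
        audio = recognizer.record(source)
    text = recognizer.recognize_google(audio)

    # LLM: send the transcript as a single user message.
    response = client.chat.completions.create(
        model="gpt-4",
        messages=[{"role": "user", "content": text}],
    )
    reply = response.choices[0].message.content

    # TTS: speak the reply through the local audio device.
    tts_engine = pyttsx3.init()
    tts_engine.say(reply)
    tts_engine.runAndWait()
    return reply
```

Reducing Latency and Improving Realism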

For real-time voice AI, minimizing latency is critical. Techniques include using low-latency ASR and TTS engines, model quantization, streaming inference, and edge deployment. Hyperrealistic voice avatars leverage neural TTS and emotion modeling for lifelike, adaptive responses.
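As a rough illustration of the streaming idea, the sketch below buffers a stream of LLM text chunks and emits each sentence as soon as it is complete, so TTS can start speaking before the full reply has been generated. The function name `sentences_from_stream` is an assumption for this example, and the punctuation-based split is deliberately naive (abbreviations such as "e.g." would be split incorrectly).

```python
import re
from typing import Iterable, Iterator

# Split on whitespace that follows sentence-ending punctuation.
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentences_from_stream(tokens: Iterable[str]) -> Iterator[str]:
    """Yield complete sentences as soon as they appear in a token stream."""
    buffer = ""
    for token in tokens:
        buffer += token
        parts = _SENTENCE_END.split(buffer)
        # Everything except the last part is a finished sentence.
        for sentence in parts[:-1]:
            if sentence:
                yield sentence
        buffer = parts[-1]
    # Flush whatever remains once the stream ends.
    if buffer.strip():
        yield buffer.strip()


stream = ["Hello", " there", ".", " How", " are", " you", "?"]
for sentence in sentences_from_stream(stream):
    print(sentence)  # each sentence could be handed to TTS here
```

With this approach the perceived latency is closer to the time needed for the first sentence than for the whole response.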

Choosing the Right LLM for Your AI Voice Agent

Open vs. Proprietary LLMs

- Open-weight LLMs (e.g., Llama 3, Mistral): Offer transparency, on-prem deployment, and customization, but may lag in performance or require more tuning.

- Proprietary LLMs (e.g., OpenAI GPT-4, Anthropic Claude): Deliver state-of-the-art results and robust APIs, but at higher cost and with limited control over model internals.

Importance of Training Data

LLM quality depends on diverse, high-quality training data—especially conversational, domain-specific, and voice-related datasets. Better data leads to improved NLU, empathy, and adaptation in voice agents.

Customization & Fine-Tuning for Voice Tasks

Fine-tuning LLMs on dialogue datasets, voice transcripts, or customer interaction logs can boost performance for support, sales, or scheduling. Custom LLMs adapt to brand tone, domain, and nuanced conversational patterns, enabling a truly human-like AI voice assistant.
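A typical first step is converting call transcripts into chat-style training examples. The sketch below assumes transcripts arrive as `(speaker, text)` pairs labeled `"caller"` or `"agent"` (hypothetical labels) and writes one JSON object per line with a `messages` list, a layout used by several chat fine-tuning APIs; the exact schema your provider expects should be checked against its documentation.

```python
import json
from typing import Dict, Iterable, List, Tuple

Example = Dict[str, List[Dict[str, str]]]


def transcript_to_example(
    system_prompt: str, turns: Iterable[Tuple[str, str]]
) -> Example:
    """Convert (speaker, text) pairs into one chat-format training example."""
    # Hypothetical speaker labels mapped onto chat roles.
    role_map = {"caller": "user", "agent": "assistant"}
    messages = [{"role": "system", "content": system_prompt}]
    for speaker, text in turns:
        messages.append({"role": role_map[speaker], "content": text.strip()})
    return {"messages": messages}


def to_jsonl(examples: Iterable[Example]) -> str:
    """Serialize examples as JSON Lines, one example per line."""
    return "\n".join(json.dumps(ex, ensure_ascii=False) for ex in examples)


example = transcript_to_example(
    "You are a friendly scheduling assistant.",
    [
        ("caller", "Hi, can I book a cleaning for Friday?"),
        ("agent", "Of course. Morning or afternoon?"),
    ],
)
print(to_jsonl([example]))
```

Real transcripts usually also need cleanup first, such as removing personal data and fixing ASR errors, before they are useful as training data.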

Implementation: Building Your Own LLM-Powered Voice Agent

Required Tools & Platforms

- ASR: Google Speech-to-Text, Deepgram, Whisper

- LLMs: OpenAI GPT-4, Llama 3, Mistral, open-weight speech language models

- TTS: Google Cloud TTS, Amazon Polly, ElevenLabs

- Integration: Twilio Voice, custom APIs, RAG for voice AI, no-code platforms

For developers seeking to quickly add real-time voice features, a Voice SDK offers a comprehensive solution for integrating live audio rooms and conversational capabilities.

No-Code vs. Full-Code Approaches

- No-code: Platforms like Voiceflow, Cognigy, and Twilio Studio allow rapid prototyping with drag-and-drop tools, ideal for enterprise AI voice agents and fast iterations.

- Full-code: Custom Python/Node.js stacks offer granular control, enabling advanced features like persistent agentic memory, multimodal LLMs, and bespoke API integrations.

Example: Deploying a Voice Agent with OpenAI API

Below is a basic example of deploying a voice agent using OpenAI's API in Python, with ASR and TTS wrapped around the LLM for a conversational phone call agent:

```python
import speech_recognition as sr
import pyttsx3
from openai import OpenAI

# Requires openai>=1.0; the API key is read from the OPENAI_API_KEY environment variable.
client = OpenAI()


def deploy_voice_agent(audio_file):
    """Run one audio file through ASR, the LLM, and TTS, and return the reply text."""
    # ASR: transcribe the recorded audio.
    recognizer = sr.Recognizer()
    with sr.AudioFile(audio_file) as source:
        audio = recognizer.record(source)
    text = recognizer.recognize_google(audio)

    # LLM: generate a reply to the transcribed text.
    completion = client.chat.completions.create(
        model="gpt-4",
        messages=[{"role": "user", "content": text}],
    )
    response = completion.choices[0].message.content

    # TTS: speak the reply aloud.
    tts = pyttsx3.init()
    tts.say(response)
    tts.runAndWait()
    return response
```

Testing and Scaling Considerations

- Latency: Benchmark ASR, LLM, and TTS separately; optimize with streaming and low-latency models.

- Scalability: Use containerized deployments, autoscaling APIs, and a distributed agentic stack for enterprise-grade reliability.

- Monitoring: Implement observability for error tracking, response quality, and user sentiment.

- Compliance: Ensure data privacy and regulatory compliance, especially for voice recordings and persistent agentic memory.
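For the latency point above, a small timing helper can record how long each stage takes so ASR, LLM, and TTS can be compared separately. This is a minimal sketch; `StageTimer` is a hypothetical name, and the `time.sleep` calls stand in for real service calls.

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator, List


class StageTimer:
    """Hypothetical helper that collects wall-clock timings per pipeline stage."""

    def __init__(self) -> None:
        self.samples: Dict[str, List[float]] = {}

    @contextmanager
    def measure(self, stage: str) -> Iterator[None]:
        start = time.perf_counter()
        try:
            yield
        finally:
            # Record the elapsed time even if the stage raised an exception.
            elapsed = time.perf_counter() - start
            self.samples.setdefault(stage, []).append(elapsed)

    def mean_ms(self, stage: str) -> float:
        """Average duration of a stage in milliseconds."""
        values = self.samples[stage]
        return 1000 * sum(values) / len(values)


timer = StageTimer()
with timer.measure("asr"):
    time.sleep(0.01)   # placeholder for a real ASR call
with timer.measure("llm"):
    time.sleep(0.02)   # placeholder for a real LLM call
with timer.measure("tts"):
    time.sleep(0.01)   # placeholder for a real TTS call

for stage in ("asr", "llm", "tts"):
    print(f"{stage}: {timer.mean_ms(stage):.1f} ms")
```

In production the same measurements would typically be exported to a metrics system, and percentiles such as p95 tend to be more informative than means.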

Future Trends in LLM for AI Voice Agent

In 2025, the frontier of LLM voice AI includes multimodal LLMs (integrating speech, text, and vision), fine-grained agentic memory for persistent context, emotion modeling for empathetic AI agents, and support for global languages. Open-weight LLMs and RAG (retrieval-augmented generation) pipelines will democratize custom voice agent development, while hyperrealistic voice avatars will blur the line between human and AI-powered sales, support, and conversational experiences at scale.

Conclusion

LLM-powered AI voice agents are redefining how we interact with technology—offering hyperrealistic, context-aware, and empathetic voice automation. Developers and enterprises should experiment, iterate, and harness the power of next-gen conversational AI in 2025.
