Introduction to Custom LLM for AI Agents

A custom LLM for AI agents is rapidly becoming the backbone of intelligent automation in 2025. Custom Large Language Models (LLMs) refer to language models that are fine-tuned, extended, or architected for a specific use case, domain, or workflow. AI agents are autonomous software entities that perform tasks or make decisions on behalf of users or systems, often leveraging LLMs to process language, retrieve information, and interact with external tools.

The rise of custom LLMs for AI agents is transforming how organizations build smart, context-aware systems. Off-the-shelf models often lack the nuanced understanding and task-specific capabilities that businesses require. By customizing LLMs, developers can create agents that exhibit deeper autonomy, integrate private or proprietary knowledge, and reliably automate complex workflows. This guide details how to build, integrate, and deploy a custom LLM for AI agents, using the latest techniques, tools, and real-world examples.

Understanding Custom LLM for AI Agents

What is a Custom LLM?

A custom large language model (LLM) is a transformer-based neural network that has been trained or fine-tuned on additional, domain-specific data. Unlike general-purpose models, custom LLMs are optimized to understand the jargon, context, and tasks of a particular field, such as law, finance, or healthcare. Customization can involve supervised fine-tuning, prompt engineering, or connecting the model to external data sources.

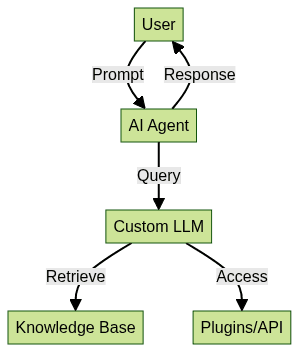

How AI Agents Use Custom LLMs

AI agents use custom LLMs to interpret instructions, reason over structured and unstructured data, and interact with APIs or databases. The LLM acts both as the "brain" that understands and generates language and as the orchestrator for workflow automation. Agents equipped with a custom LLM can access proprietary knowledge bases, use plugins for real-time data, and use vector databases for long-term memory. For example, integrating communication features is now easier than ever with solutions like the Video Calling API, which enables seamless audio and video interactions within your AI agent workflows.

Key Benefits of Custom LLM for AI Agents

Enhanced Autonomy and Specialization

Custom LLMs give AI agents greater autonomy, enabling them to make nuanced decisions, solve domain-specific problems, and independently orchestrate complex workflows. They can adapt to unique business logic and user preferences that generic models often handle poorly. For instance, integrating a phone call API allows agents to initiate and manage voice calls directly, further extending their autonomy and real-world utility.

Improved Knowledge Integration and Memory

With custom knowledge integration and vector memory via datastores, agents can recall facts, learn from user interactions, and deliver context-aware responses. This persistent memory enables agents to reference previous conversations, maintain long-term context, and provide tailored advice or solutions.
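
The recall side of vector memory can be sketched in a few lines. This toy version assumes a hypothetical `embed` function that hashes words into a fixed-size bag-of-words vector. A production agent would use a real embedding model and a vector database such as Pinecone, Weaviate, or Qdrant. The recall step works the same way in both cases: stored memories are ranked by cosine similarity to the query.

```python
import math
import zlib
from typing import List, Tuple

DIM = 64  # size of the toy embedding space (assumption for illustration)


def embed(text: str) -> List[float]:
    """Hypothetical toy embedding: hashed bag-of-words counts."""
    vec = [0.0] * DIM
    for word in text.lower().split():
        vec[zlib.crc32(word.encode()) % DIM] += 1.0
    return vec


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class VectorMemory:
    """Stores past messages and recalls the most similar ones."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, List[float]]] = []

    def remember(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def recall(self, query: str, k: int = 1) -> List[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = VectorMemory()
memory.remember("user prefers meetings in the morning")
memory.remember("user's favorite language is Python")
print(memory.recall("when does the user like meetings"))
```

The sketch uses `zlib.crc32` rather than Python's built-in `hash()` because string hashing is randomized per process. With `hash()`, the stored vectors would not be comparable across restarts.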

Popular Platforms and Tools for Building Custom LLM for AI Agents

OpenAI (GPTs, Plugins)

OpenAI offers models such as GPT-4 and GPT-3.5 through its API, along with the GPTs platform for building custom assistants. Depending on the model, developers can fine-tune, connect external tools through function calling or GPT Actions (which replaced the earlier ChatGPT plugins), and deploy securely into a growing ecosystem of third-party tools. When building agents that require real-time communication, SDKs like the python video and audio calling sdk can accelerate development and integration.

Anthropic Claude 3

Anthropic's Claude 3 models provide robust tools for building safe, steerable custom LLM applications. With advanced prompt engineering and privacy features, Claude is increasingly popular for responsible AI agent development, especially in regulated industries. For developers working with web technologies, the javascript video and audio calling sdk offers a straightforward way to add communication features to AI-powered applications.

TeamAI and Others

Platforms like TeamAI and Hugging Face, along with open-source solutions, let developers build, deploy, and scale custom LLMs for AI agents, including support for plugins, vector databases, and multi-agent orchestration. Those building mobile-first agents can benefit from the react native video and audio calling sdk, which simplifies adding video and audio call functionality to cross-platform apps.

Step-by-Step Guide: Building a Custom LLM for AI Agents

Step 1: Define Your Agent's Purpose and Domain

Begin by specifying the tasks your AI agent must perform, its target domain, and the scope of autonomy required. For example, a legal assistant agent will need access to legal databases and specific reasoning abilities, while a marketing agent may require integration with social media APIs and campaign data. If your use case involves embedded communication, consider using an embed video calling sdk to add video calling capabilities quickly without extensive custom development.

Step 2: Select or Fine-tune a Language Model

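Choose a base LLM (e.g., GPT-4, Claude 3, Llama 3) and adapt it with domain-specific datasets. Fine-tuning can be supervised (labeled input/output examples) or self-supervised continued training on unlabeled domain text corpora. Open-weight models such as Llama 3 can be fine-tuned on your own hardware with frameworks like Hugging Face Transformers. Hosted models such as GPT-4 can only be customized through the provider's own tooling, for example OpenAI's Fine-tuning API where the model supports it.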

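```python
from datasets import load_dataset
from transformers import (AutoModelForCausalLM, AutoTokenizer,
                          DataCollatorForLanguageModeling, Trainer, TrainingArguments)

# GPT-4 weights are not publicly available, so local fine-tuning needs an
# open-weight base model. The model id and data file below are placeholders.
base_model = "meta-llama/Meta-Llama-3-8B"
model = AutoModelForCausalLM.from_pretrained(base_model)
tokenizer = AutoTokenizer.from_pretrained(base_model)
tokenizer.pad_token = tokenizer.eos_token  # Llama 3 ships without a pad token

# Tokenize a plain-text domain corpus (one training example per line).
raw = load_dataset("text", data_files={"train": "domain_corpus.txt"})
custom_dataset = raw["train"].map(
    lambda batch: tokenizer(batch["text"], truncation=True, max_length=512),
    batched=True,
    remove_columns=["text"],
)

trainer = Trainer(
    model=model,
    args=TrainingArguments(
        output_dir="./results",
        num_train_epochs=3,
        per_device_train_batch_size=4,
    ),
    train_dataset=custom_dataset,
    # mlm=False builds causal-LM labels from the input ids
    data_collator=DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False),
)

trainer.train()
```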
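
Supervised fine-tuning of a hosted model uses labeled examples instead of a raw corpus. The sketch below writes records in the chat-style JSONL layout that OpenAI's fine-tuning API documents: one JSON object per line, each with a `messages` list of `system`, `user`, and `assistant` turns. The legal Q&A pairs, the system prompt, and the file name `legal_sft.jsonl` are hypothetical placeholders. Uploading the file and starting a job are left to the provider's SDK or dashboard.

```python
import json
from typing import Dict, List, Tuple

SYSTEM_PROMPT = "You are a legal research assistant."  # hypothetical persona

# Hypothetical labeled pairs: (user question, ideal assistant answer).
examples: List[Tuple[str, str]] = [
    ("What is consideration in contract law?",
     "Consideration is something of value exchanged between the parties."),
    ("Define 'force majeure'.",
     "A clause excusing performance when extraordinary events prevent it."),
]


def to_chat_record(question: str, answer: str) -> Dict[str, list]:
    """Convert one labeled pair into a chat-format training record."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def write_jsonl(path: str, pairs: List[Tuple[str, str]]) -> int:
    """Write one JSON object per line; returns the number of records written."""
    with open(path, "w", encoding="utf-8") as f:
        for question, answer in pairs:
            f.write(json.dumps(to_chat_record(question, answer)) + "\n")
    return len(pairs)


count = write_jsonl("legal_sft.jsonl", examples)
print(f"wrote {count} training examples")
```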

Step 3: Integrate Knowledge Bases and Datastores

Connect your agent to internal knowledge bases, web sources, and vector databases (e.g., Pinecone, Weaviate, Qdrant) to enable context retrieval and persistent memory. This allows the agent to answer complex queries, reference internal data, and learn from ongoing interactions. For agents that require real-time engagement with audiences, integrating a Live Streaming API SDK can unlock interactive live video capabilities.

```yaml
knowledge_base:
  type: vector_db
  provider: pinecone
  api_key: "your_api_key"
  collection: "legal_docs"
```
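
Documents are usually split into overlapping chunks before they are stored in a vector database, so that each embedding covers a focused passage. A minimal word-based chunker is sketched below. The default chunk size and overlap are illustrative assumptions, and many pipelines split on tokens or sentences instead.

```python
from typing import List


def chunk_words(text: str, chunk_size: int = 200, overlap: int = 50) -> List[str]:
    """Split text into windows of chunk_size words. Each window shares `overlap`
    words with the previous one, so facts near a boundary appear in both chunks."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


doc = " ".join(f"w{i}" for i in range(10))
print(chunk_words(doc, chunk_size=4, overlap=1))
# ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

Each chunk would then be embedded and upserted into the collection named in the configuration, together with metadata such as the source document id.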

Step 4: Add Plugins and External APIs

Extend your agent's capabilities with plugins or API integrations (e.g., calendar, CRM, or custom business logic). Many LLM platforms support plugin ecosystems or REST API calls that your agent can invoke for real-time information and workflow automation. If your application targets Android devices, WebRTC on Android can help deliver low-latency, high-quality communication features to your users.

```json
{
  "plugins": [
    { "name": "calendar", "endpoint": "https://api.calendar.com/v1/events" },
    { "name": "crm", "endpoint": "https://api.crm.com/v1/contacts" }
  ]
}
```
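
One way to wire a plugin list like this into an agent is to load it into a name-to-endpoint registry and build HTTP requests only for registered plugins. The sketch below uses the standard library's `urllib.request.Request`. The `build_plugin_request` helper, the bearer-token header, and the JSON payload shape are assumptions. Because the endpoints are the placeholders from the configuration, the request is constructed but not sent.

```python
import json
import urllib.request
from typing import Dict

PLUGIN_CONFIG = """
{
  "plugins": [
    { "name": "calendar", "endpoint": "https://api.calendar.com/v1/events" },
    { "name": "crm", "endpoint": "https://api.crm.com/v1/contacts" }
  ]
}
"""


def load_registry(raw: str) -> Dict[str, str]:
    """Map plugin names to endpoints from the JSON configuration."""
    return {p["name"]: p["endpoint"] for p in json.loads(raw)["plugins"]}


def build_plugin_request(registry: Dict[str, str], name: str, payload: dict,
                         token: str) -> urllib.request.Request:
    """Hypothetical helper: prepare a POST request for a registered plugin."""
    if name not in registry:
        raise KeyError(f"plugin '{name}' is not registered")
    return urllib.request.Request(
        registry[name],
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {token}"},
        method="POST",
    )


registry = load_registry(PLUGIN_CONFIG)
req = build_plugin_request(registry, "calendar", {"title": "Client call"},
                           token="example-token")
print(req.get_method(), req.full_url)  # POST https://api.calendar.com/v1/events
# Sending it would be: urllib.request.urlopen(req, timeout=10)
```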

Step 5: Deploy and Test Your Custom LLM-powered Agent

Deploy your agent using cloud platforms (AWS, Azure, GCP) or on-premises infrastructure. Monitor performance, run integration tests, and iterate based on user feedback. Ensure that privacy, security, and compliance requirements are met during deployment. For those building web-based video communication, exploring react video call solutions can help you deliver seamless real-time experiences in your AI-powered applications.

```yaml
agent:
  name: legal_assistant
  model: gpt-4-custom
  plugins:
    - calendar
    - crm
  datastore:
    type: pinecone
```
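
A simple pre-deployment test is a check that the agent configuration is internally consistent. The sketch below writes the YAML above as the Python dictionary a YAML parser would produce, which keeps it within the standard library. It reports missing fields and plugins that have no registered endpoint. The required-field list and the `KNOWN_PLUGINS` set are assumptions.

```python
from typing import Dict, List, Set

# The agent configuration above, as a YAML parser would load it.
AGENT_CONFIG = {
    "agent": {
        "name": "legal_assistant",
        "model": "gpt-4-custom",
        "plugins": ["calendar", "crm"],
        "datastore": {"type": "pinecone"},
    }
}

REQUIRED_FIELDS = ("name", "model", "plugins", "datastore")  # assumption
KNOWN_PLUGINS: Set[str] = {"calendar", "crm"}  # assumption: registered plugins


def validate_agent_config(config: Dict, known_plugins: Set[str]) -> List[str]:
    """Return a list of problems; an empty list means the config passed."""
    agent = config.get("agent", {})
    problems = [f"missing field: {f}" for f in REQUIRED_FIELDS if f not in agent]
    for plugin in agent.get("plugins", []):
        if plugin not in known_plugins:
            problems.append(f"unknown plugin: {plugin}")
    if "datastore" in agent and "type" not in agent["datastore"]:
        problems.append("datastore.type is not set")
    return problems


print(validate_agent_config(AGENT_CONFIG, KNOWN_PLUGINS))  # []
```

A check like this can run in CI before each deployment, so a typo in a plugin name fails the build instead of failing at runtime.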

Real-World Use Cases for Custom LLM for AI Agents

Legal Assistant Agent

A custom LLM-powered legal assistant can draft documents, analyze contracts, and answer legal queries by integrating with legal databases, leveraging fine-tuned legal language models, and complying with privacy requirements. This enhances law firms' productivity and accuracy.

Marketing Automation Agent

Marketing agents use custom LLMs to generate personalized content, track campaign analytics, and interact with CRM tools. By integrating with external APIs and vector databases, these agents optimize marketing workflows and maximize ROI.

Personal Productivity/Assistant Agent

Personal assistant agents are enhanced with custom LLMs to manage schedules, summarize emails, and automate repetitive tasks. Persistent vector memory enables them to learn user preferences, recall previous conversations, and provide proactive suggestions, streamlining daily routines. If you want to experiment with these capabilities, you can try it for free and explore how AI agents can be customized for your needs.

Challenges and Best Practices for Custom LLM for AI Agents

Cost, Complexity, and Privacy Concerns

Building custom LLMs requires significant investment, from compute costs for training and inference to the engineering effort of managing complex integrations. Privacy is critical, especially when handling sensitive data. Ensure compliance with regulations such as GDPR, and use privacy-preserving architectures, for example by keeping sensitive data on-premises or by redacting personal information before it reaches a hosted model.
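
As one privacy-preserving step, obvious personal data can be masked before text leaves your infrastructure for a hosted LLM. The regular expressions below are a deliberately simple sketch that catches only common email and phone-number shapes. They are assumptions, not a compliance guarantee, and you may want to pair them with dedicated PII-detection tooling.

```python
import re

# Simple illustrative patterns; they will miss many real-world formats.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


print(redact("Contact Jane at jane.doe@example.com or +1 (555) 123-4567."))
# Contact Jane at [EMAIL] or [PHONE].
```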

Best Practices for Reliability and Security

Adopt robust testing, monitoring, and logging for your agents in production. Secure API keys, access controls, and encrypted data flows are essential. Regularly update your LLM and plugins to mitigate vulnerabilities and improve reliability.
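
For reliability, an agent's calls to plugins or model endpoints can be wrapped in retries with exponential backoff and logged. This is a minimal sketch: `flaky_plugin` is a hypothetical stand-in for a network call, and the attempt count and delays are illustrative assumptions.

```python
import logging
import time
from typing import Callable, TypeVar

T = TypeVar("T")
logging.basicConfig(level=logging.INFO)
log = logging.getLogger("agent")


def with_retries(fn: Callable[[], T], attempts: int = 3, base_delay: float = 0.5,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying failures with exponentially growing delays."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for i in range(attempts):
        try:
            return fn()
        except Exception as exc:  # production code should catch narrower errors
            if i == attempts - 1:
                log.error("giving up after %d attempts: %s", attempts, exc)
                raise
            delay = base_delay * 2 ** i  # 1x, 2x, 4x, ... the base delay
            log.warning("attempt %d failed (%s); retrying in %.2fs", i + 1, exc, delay)
            sleep(delay)
    raise AssertionError("unreachable")


calls = {"n": 0}


def flaky_plugin() -> str:
    """Hypothetical plugin call that fails twice, then succeeds."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("temporary network error")
    return "ok"


print(with_retries(flaky_plugin, base_delay=0.01))  # ok
```

The `sleep` parameter is injectable so that tests can record the backoff schedule without actually waiting.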

Future Trends: The Evolution of Custom LLM for AI Agents

In 2025, multi-agent systems are expected to become more common, with teams of agents collaborating on complex problems. Continuous learning, where agents update their knowledge bases in real time, and wider adoption of open-source LLMs are likely to accelerate innovation and broaden access to advanced AI capabilities.

Conclusion: Why Custom LLMs are Transformative for AI Agents

Custom LLMs are revolutionizing how AI agents operate, allowing for deep specialization, persistent memory, and seamless workflow automation. By following best practices in model selection, knowledge integration, and secure deployment, developers can unlock the full potential of autonomous agents for any domain.


FAQ